Patrick L. Combettes

c-lasso -- a Python package for constrained sparse and robust regression and classification

Nov 02, 2020

Abstract: We introduce c-lasso, a Python package that enables sparse and robust linear regression and classification with linear equality constraints. The underlying statistical forward model is assumed to be of the following form: \[ y = X \beta + \sigma \epsilon \qquad \textrm{subject to} \qquad C\beta=0 \] Here, $X \in \mathbb{R}^{n\times d}$ is a given design matrix and $y \in \mathbb{R}^{n}$ is a continuous or binary response vector. The matrix $C$ is a general constraint matrix. The vector $\beta \in \mathbb{R}^{d}$ contains the unknown coefficients and $\sigma$ is an unknown scale. Prominent use cases are (sparse) log-contrast regression with compositional data $X$, which requires the constraint $1_d^T \beta = 0$ (Aitchison and Bacon-Shone 1984), and the Generalized Lasso, which is a special case of the described problem (see, e.g., James, Paulson, and Rusmevichientong 2020, Example 3). The c-lasso package provides estimators for inferring the unknown coefficients and scale (i.e., perspective M-estimators; Combettes and Müller 2020a) of the form \[ \min_{\beta \in \mathbb{R}^d, \sigma \in \mathbb{R}_{0}} f\left(X\beta - y,{\sigma} \right) + \lambda \left\lVert \beta\right\rVert_1 \qquad \textrm{subject to} \qquad C\beta = 0 \] for several convex loss functions $f(\cdot,\cdot)$. This includes the constrained Lasso, the constrained scaled Lasso, and sparse Huber M-estimators with linear equality constraints.
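To make the optimization problem above concrete, here is a minimal NumPy sketch of the simplest case, the constrained Lasso with the scale $\sigma$ held fixed, so that $f$ reduces to a squared loss. It does not use the c-lasso package or its API. The solver (a basic ADMM splitting), the $1/2$ scaling of the loss, and the names `constrained_lasso_admm`, `rho`, and `n_iter` are all assumptions made for illustration, and $C$ is assumed to have full row rank.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def constrained_lasso_admm(X, y, C, lam, rho=1.0, n_iter=500):
    """Approximately solve
        min_beta 0.5 * ||X beta - y||^2 + lam * ||beta||_1  s.t.  C beta = 0
    with ADMM on the splitting beta = z (illustrative sketch only).

    Returns (beta, z): beta satisfies C beta = 0 up to solver precision,
    z is the sparse copy produced by the shrinkage step.
    """
    d = X.shape[1]
    k = C.shape[0]
    # KKT matrix of the equality-constrained least-squares beta-update.
    K = np.block([[X.T @ X + rho * np.eye(d), C.T],
                  [C, np.zeros((k, k))]])
    Xty = X.T @ y
    z = np.zeros(d)
    u = np.zeros(d)  # scaled dual variable for the constraint beta = z
    beta = np.zeros(d)
    for _ in range(n_iter):
        rhs = np.concatenate([Xty + rho * (z - u), np.zeros(k)])
        beta = np.linalg.solve(K, rhs)[:d]          # enforces C beta = 0
        z = soft_threshold(beta + u, lam / rho)     # enforces sparsity
        u = u + beta - z
    return beta, z
```

For log-contrast regression, `C` would be the single row `np.ones((1, d))`, which encodes the zero-sum constraint $1_d^T \beta = 0$ mentioned in the abstract.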

Classification and regression using an outer approximation projection-gradient method

Mar 23, 2017

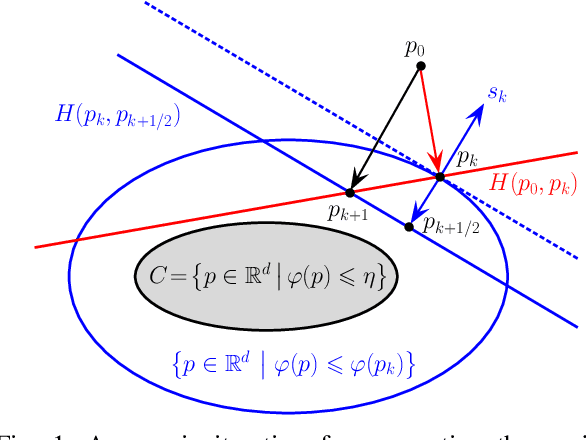

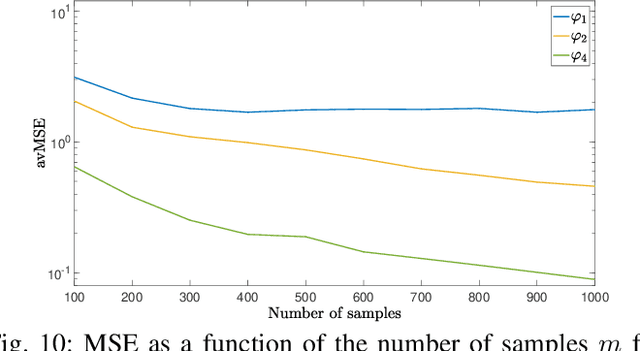

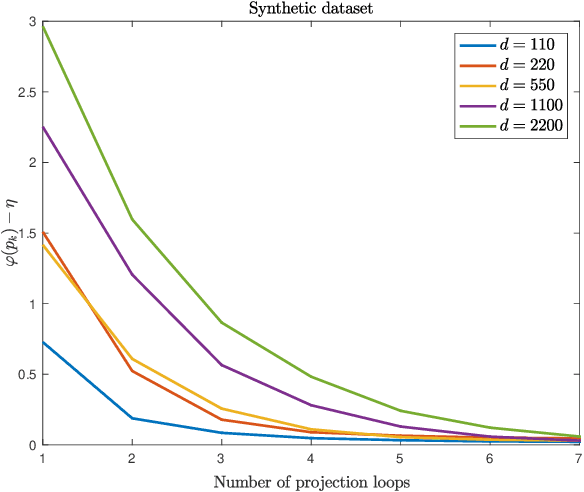

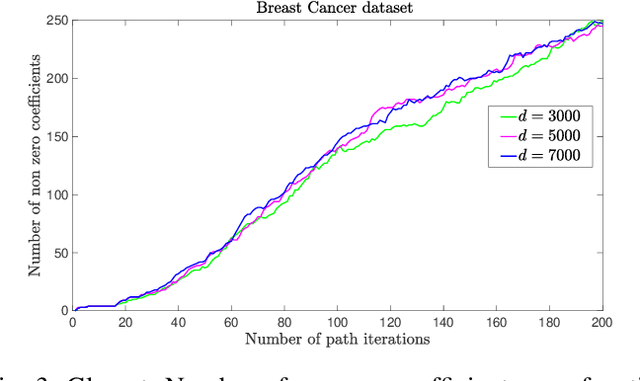

Abstract: This paper deals with sparse feature selection and grouping for classification and regression. The classification or regression problems under consideration consist in minimizing a convex empirical risk function subject to an $\ell^1$ constraint, a pairwise $\ell^\infty$ constraint, or a pairwise $\ell^1$ constraint. Existing work, such as the Lasso formulation, has focused mainly on Lagrangian penalty approximations, which often require ad hoc or computationally expensive procedures to determine the penalization parameter. We depart from this approach and address the constrained problem directly via a splitting method. The structure of the method is that of the classical gradient-projection algorithm, which alternates a gradient step on the objective and a projection step onto the lower level set modeling the constraint. The novelty of our approach is that the projection step is implemented via an outer approximation scheme in which the constraint set is approximated by a sequence of simple convex sets, each consisting of the intersection of two half-spaces. Convergence of the iterates generated by the algorithm is established for a general smooth convex minimization problem with inequality constraints. Experiments on both synthetic and biological data show that our method outperforms penalty methods.
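The classical gradient-projection structure described above can be sketched for the $\ell^1$-constrained case as follows. This is not the paper's method: the outer approximation by pairs of half-spaces is replaced here by an exact sort-based Euclidean projection onto the $\ell^1$ ball, and the empirical risk is assumed to be a least-squares loss. The names `project_l1_ball`, `gradient_projection`, and `radius` are hypothetical, and `radius` is assumed to be positive.

```python
import numpy as np


def project_l1_ball(v, radius):
    """Euclidean projection of v onto {b : ||b||_1 <= radius}, radius > 0.

    Uses the standard sort-and-threshold procedure; this stands in for
    the outer approximation step, which is not reproduced here.
    """
    if np.abs(v).sum() <= radius:
        return v.copy()
    u = np.sort(np.abs(v))[::-1]           # magnitudes, descending
    css = np.cumsum(u)
    ks = np.arange(1, v.size + 1)
    rho = np.nonzero(u * ks > css - radius)[0][-1]
    theta = (css[rho] - radius) / (rho + 1.0)
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)


def gradient_projection(X, y, radius, n_iter=500):
    """Minimize 0.5 * ||X beta - y||^2 subject to ||beta||_1 <= radius
    by alternating a gradient step and a projection step."""
    # Step size 1/L, where L = ||X||_2^2 is the gradient's Lipschitz constant.
    step = 1.0 / np.linalg.norm(X, 2) ** 2
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ beta - y)                              # gradient step
        beta = project_l1_ball(beta - step * grad, radius)       # projection step
    return beta
```

Here the constraint level `radius` plays the role that the penalization parameter plays in a Lagrangian formulation, which is the distinction the abstract draws between constrained and penalty approaches.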
