Patricia Arroba

Heuristics and Metaheuristics for Dynamic Management of Computing and Cooling Energy in Cloud Data Centers

Dec 17, 2023

Abstract: Data centers consume very large amounts of energy, and the growing popularity of Cloud applications is intensifying their computational demand. Moreover, the cooling needed to keep the servers within reliable thermal operating conditions also has an impact on the thermal distribution of the data room, thus affecting servers' power leakage. Optimizing the energy consumption of these infrastructures is a major challenge in making data centers more scalable. Thus, understanding the relationship between power, temperature, consolidation and performance is crucial to enable energy-efficient management at the data center level. In this research, we propose novel power and thermal-aware strategies and models to provide joint cooling and computing optimizations from a local perspective based on the global energy consumption of metaheuristic-based optimizations. Our results show that the combined awareness from both metaheuristic and best fit decreasing algorithms allows us to distill the global energy behavior into faster and lighter optimization strategies that may be used during runtime. This approach allows us to improve the energy efficiency of the data center, considering both computing and cooling infrastructures, by up to 21.74% while maintaining quality of service.
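For readers unfamiliar with the best fit decreasing heuristic mentioned in the abstract, the following is a minimal sketch of the classic bin-packing version applied to workload consolidation. It is not the authors' power and thermal-aware strategy: the function name `best_fit_decreasing`, the integer CPU demands, and the single uniform `capacity` are all assumptions made for illustration.

```python
from typing import Dict, List


def best_fit_decreasing(demands: Dict[str, int], capacity: int) -> List[Dict[str, int]]:
    """Pack workloads into as few equal-capacity servers as the heuristic finds.

    Hypothetical illustration: `demands` maps a workload name to its CPU
    demand, and every server offers the same `capacity`.
    """
    servers: List[Dict[str, int]] = []  # one dict of placed workloads per server
    free: List[int] = []                # remaining capacity per server

    # "Decreasing": consider the largest demands first.
    ordered = sorted(demands.items(), key=lambda kv: kv[1], reverse=True)
    for name, demand in ordered:
        if demand > capacity:
            raise ValueError(f"{name} does not fit on any server")

        # "Best fit": among servers that can host the workload, pick the one
        # that would be left with the least spare capacity.
        candidates = [i for i, spare in enumerate(free) if spare >= demand]
        if candidates:
            best = min(candidates, key=lambda i: free[i] - demand)
        else:
            # No active server fits, so switch on a new one.
            servers.append({})
            free.append(capacity)
            best = len(servers) - 1

        servers[best][name] = demand
        free[best] -= demand

    return servers


if __name__ == "__main__":
    placement = best_fit_decreasing({"a": 50, "b": 70, "c": 30, "d": 50}, capacity=100)
    for index, hosted in enumerate(placement):
        print(index, hosted)
```

Packing workloads tightly reduces the number of active servers, which is the consolidation effect the abstract refers to; a power or thermal-aware variant would replace the "least spare capacity" criterion with an energy-based one.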

Bringing AI to the edge: A formal M&S specification to deploy effective IoT architectures

May 11, 2023

Abstract: The Internet of Things is transforming our society, providing new services that improve the quality of life and resource management. These applications are based on ubiquitous networks of multiple distributed devices, with limited computing resources and power, capable of collecting and storing data from heterogeneous sources in real-time. To avoid network saturation and high delays, new architectures such as fog computing are emerging to bring computing infrastructure closer to data sources. Additionally, new data centers are needed to provide real-time Big Data and data analytics capabilities at the edge of the network, where energy efficiency needs to be considered to ensure a sustainable and effective deployment in areas of human activity. In this research, we present an IoT model based on the principles of Model-Based Systems Engineering defined using the Discrete Event System Specification formalism. The provided mathematical formalism covers the description of the entire architecture, from IoT devices to the processing units in edge data centers. Our work includes the location-awareness of user equipment, network, and computing infrastructures to optimize federated resource management in terms of delay and power consumption. We present an effective framework to assist the dimensioning and the dynamic operation of IoT data stream analytics applications, demonstrating our contributions through a driving assistance use case based on real traces and data.

Runtime data center temperature prediction using Grammatical Evolution techniques

Nov 11, 2022

Abstract: Data Centers are huge power consumers, both because of the energy required for computation and the cooling needed to keep servers below thermal redlining. The most common technique to minimize cooling costs is increasing data room temperature. However, to avoid reliability issues, and to enhance energy efficiency, there is a need to predict the temperature attained by servers under variable cooling setups. Due to the complex thermal dynamics of data rooms, accurate runtime data center temperature prediction has remained an important challenge. By using Grammatical Evolution techniques, this paper presents a methodology for the generation of temperature models for data centers and the runtime prediction of CPU and inlet temperature under variable cooling setups. As opposed to time-costly Computational Fluid Dynamics techniques, our models do not need specific knowledge about the problem, can be used in arbitrary data centers, can be re-trained if conditions change, and have negligible overhead during runtime prediction. Our models have been trained and tested by using traces from real Data Center scenarios. Our results show how we can fully predict the temperature of the servers in a data room, with prediction errors below 2 °C and 0.5 °C in CPU and server inlet temperature respectively.

Data augmentation through multivariate scenario forecasting in Data Centers using Generative Adversarial Networks

Jan 12, 2022

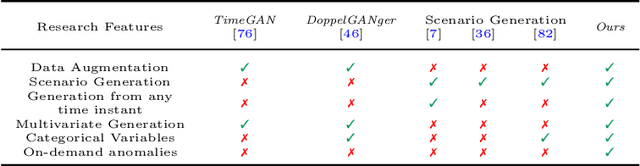

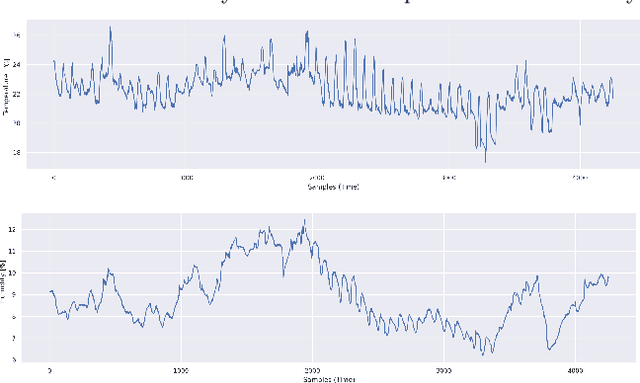

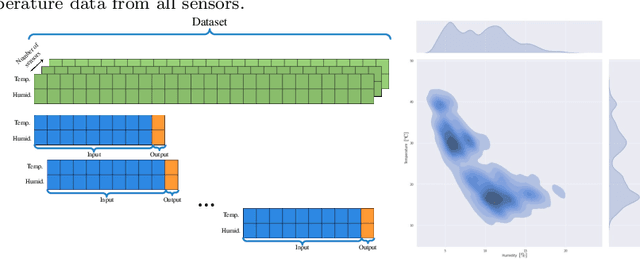

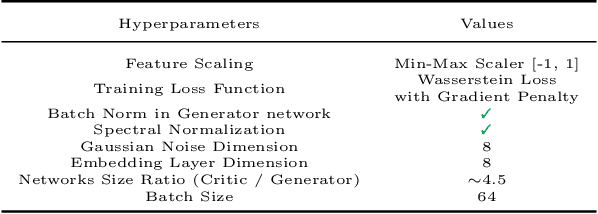

Abstract: The Cloud paradigm is at a critical point in which the existing energy-efficiency techniques are reaching a plateau, while the demand for computing resources at Data Center facilities continues to increase exponentially. The main challenge in achieving a global energy efficiency strategy based on Artificial Intelligence is that we need massive amounts of data to feed the algorithms. Nowadays, any optimization strategy must begin with data. However, companies with access to these large amounts of data decide not to share them because it could compromise their security. This paper proposes a time-series data augmentation methodology based on synthetic scenario forecasting within the Data Center. For this purpose, we will implement a powerful generative algorithm: Generative Adversarial Networks (GANs). The use of GANs will allow us to handle multivariate data and data of different natures (e.g., categorical). On the other hand, adapting Data Centers' operational management to the occurrence of sporadic anomalies is complicated due to the reduced frequency of failures in the system. Therefore, we also propose a methodology to increase the generated data variability by introducing on-demand anomalies. We validated our approach using real data collected from an operating Data Center, successfully obtaining forecasts of random scenarios with several hours of prediction. Our research will help to optimize the energy consumed in Data Centers, although the proposed methodology can be employed in any similar time-series-like problem.
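The idea of introducing on-demand anomalies into a time series can be illustrated with a very small sketch. This is not the GAN-based methodology of the paper: it only shows one simple, assumed way to add rare events to an existing trace, using additive spikes at random positions. The function name `inject_anomalies` and its parameters are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def inject_anomalies(
    series: Sequence[float],
    n_anomalies: int,
    magnitude: float,
    seed: int = 0,
) -> Tuple[List[float], List[int]]:
    """Return a copy of `series` with additive spikes and the spike positions.

    Assumption for illustration: an anomaly is modeled as a single-sample
    offset of size `magnitude`; real failures may look very different.
    """
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    # Choose distinct positions; raises ValueError if n_anomalies > len(series).
    positions = sorted(rng.sample(range(len(series)), n_anomalies))

    augmented = list(series)
    for index in positions:
        augmented[index] += magnitude
    return augmented, positions


if __name__ == "__main__":
    trace = [20.0] * 12  # e.g. a flat temperature trace
    augmented, where = inject_anomalies(trace, n_anomalies=2, magnitude=15.0)
    print(where)
    print(augmented)
```

Returning the positions alongside the augmented series keeps the injected events labeled, which is useful if the data is later used to train or evaluate an anomaly detector.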
