José M. Moya

Modeling methodology for the accurate and prompt prediction of symptomatic events in chronic diseases

Feb 15, 2024

Abstract: Predicting symptomatic crises in chronic diseases allows decisions to be taken before the symptoms occur, such as taking drugs to avoid the symptoms or activating medical alarms. The prediction horizon is in this case an important parameter, since it must accommodate the pharmacokinetics of medications or the response time of medical services. This paper presents a study of the prediction limits of a chronic disease with symptomatic crises: migraine. For that purpose, this work develops a methodology to build predictive migraine models and to improve these predictions beyond the limits of the initial models. The maximum prediction horizon is analyzed, and its dependency on the selected features is studied. A strategy for model selection is proposed to tackle the trade-off between conservative but robust predictive models and less accurate predictions with longer horizons. The obtained results show a prediction horizon close to 40 minutes, which is in the time range of the drug pharmacokinetics. Experiments have been performed in a realistic scenario where input data were acquired in an ambulatory clinical study through the deployment of a non-intrusive Wireless Body Sensor Network. Our results provide an effective methodology for the selection of the future horizon in the development of prediction algorithms for diseases experiencing symptomatic crises.

Heuristics and Metaheuristics for Dynamic Management of Computing and Cooling Energy in Cloud Data Centers

Dec 17, 2023

Abstract: Data centers consume impressive amounts of energy, and the growing popularity of Cloud applications is intensifying their computational demand. Moreover, the cooling needed to keep the servers within reliable thermal operating conditions also has an impact on the thermal distribution of the data room, thus affecting servers' power leakage. Optimizing the energy consumption of these infrastructures is a major challenge to place data centers on a more scalable scenario. Thus, understanding the relationship between power, temperature, consolidation and performance is crucial to enable energy-efficient management at the data center level. In this research, we propose novel power- and thermal-aware strategies and models to provide joint cooling and computing optimizations from a local perspective based on the global energy consumption of metaheuristic-based optimizations. Our results show that the combined awareness from both metaheuristic and best fit decreasing algorithms allows us to describe the global energy in faster and lighter optimization strategies that may be used during runtime. This approach allows us to improve the energy efficiency of the data center, considering both computing and cooling infrastructures, by up to 21.74% while maintaining quality of service.

Runtime data center temperature prediction using Grammatical Evolution techniques

Nov 11, 2022

Abstract: Data Centers are huge power consumers, both because of the energy required for computation and the cooling needed to keep servers below thermal redlining. The most common technique to minimize cooling costs is increasing data room temperature. However, to avoid reliability issues, and to enhance energy efficiency, there is a need to predict the temperature attained by servers under variable cooling setups. Due to the complex thermal dynamics of data rooms, accurate runtime data center temperature prediction has remained an important challenge. By using Grammatical Evolution techniques, this paper presents a methodology for the generation of temperature models for data centers and the runtime prediction of CPU and inlet temperature under variable cooling setups. As opposed to time-costly Computational Fluid Dynamics techniques, our models do not need specific knowledge about the problem, can be used in arbitrary data centers, can be re-trained if conditions change, and have negligible overhead during runtime prediction. Our models have been trained and tested by using traces from real Data Center scenarios. Our results show how we can fully predict the temperature of the servers in a data room, with prediction errors below 2 °C and 0.5 °C for CPU and server inlet temperature, respectively.

Data augmentation through multivariate scenario forecasting in Data Centers using Generative Adversarial Networks

Jan 12, 2022

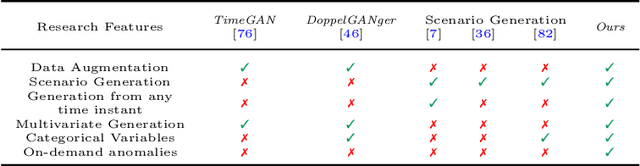

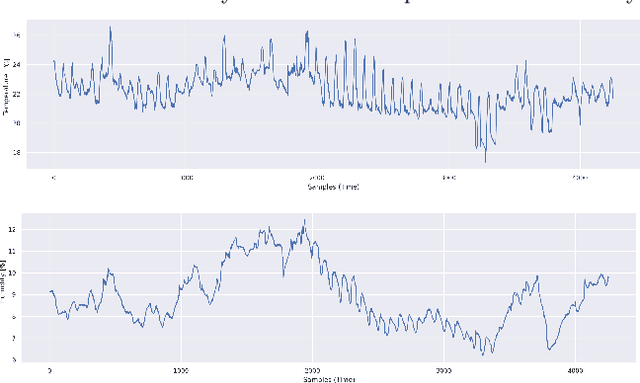

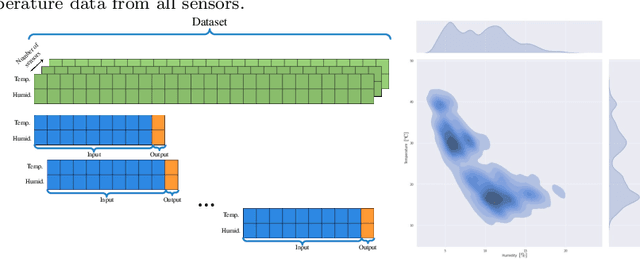

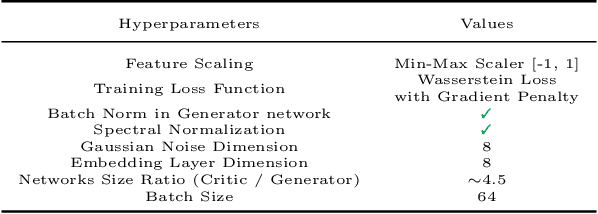

Abstract: The Cloud paradigm is at a critical point in which the existing energy-efficiency techniques are reaching a plateau, while the demand for computing resources at Data Center facilities continues to increase exponentially. The main challenge in achieving a global energy efficiency strategy based on Artificial Intelligence is that we need massive amounts of data to feed the algorithms. Nowadays, any optimization strategy must begin with data. However, companies with access to these large amounts of data decide not to share them because it could compromise their security. This paper proposes a time-series data augmentation methodology based on synthetic scenario forecasting within the Data Center. For this purpose, we implement a powerful generative algorithm: Generative Adversarial Networks (GANs). The use of GANs allows us to handle multivariate data and data of different natures (e.g., categorical). On the other hand, adapting Data Centers' operational management to the occurrence of sporadic anomalies is complicated due to the reduced frequency of failures in the system. Therefore, we also propose a methodology to increase the variability of the generated data by introducing on-demand anomalies. We validated our approach using real data collected from an operating Data Center, successfully obtaining forecasts of random scenarios with several hours of prediction. Our research will help to optimize the energy consumed in Data Centers, although the proposed methodology can be employed in any similar time-series-like problem.
