Paschalis Panteleris

Institute of Computer Science, FORTH

PE-former: Pose Estimation Transformer

Dec 09, 2021

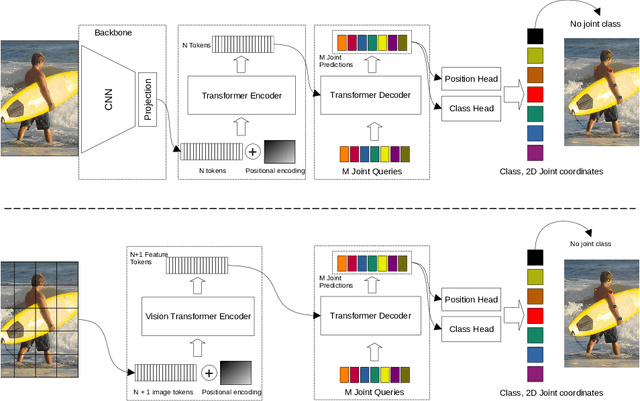

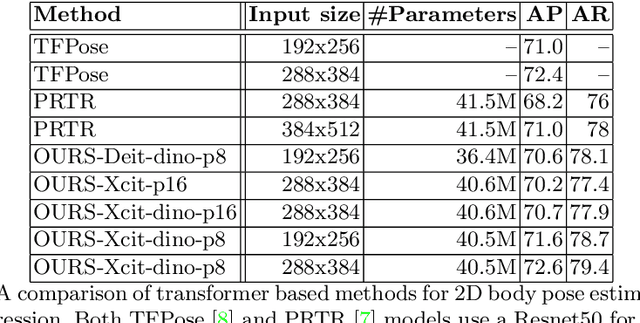

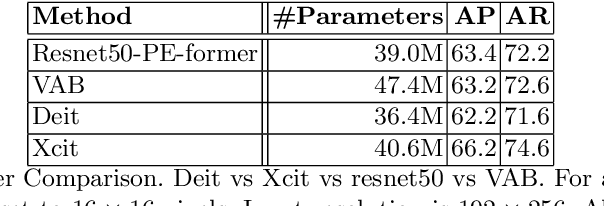

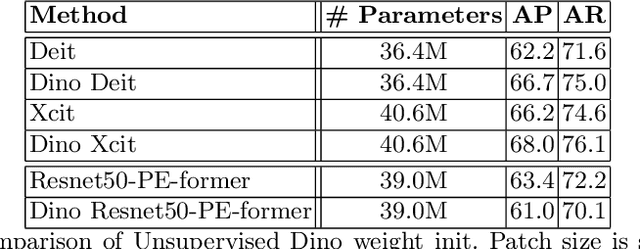

Abstract: Vision transformer architectures have been demonstrated to work very effectively for image classification tasks. Efforts to solve more challenging vision tasks with transformers rely on convolutional backbones for feature extraction. In this paper we investigate the use of a pure transformer architecture (i.e., one with no CNN backbone) for the problem of 2D body pose estimation. We evaluate two ViT architectures on the COCO dataset. We demonstrate that using an encoder-decoder transformer architecture yields state-of-the-art results on this estimation problem.
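As a rough illustration of what "no CNN backbone" means in practice, a ViT-style model consumes an image as a sequence of flattened, non-overlapping patches instead of convolutional feature maps. The sketch below shows only that tokenization step on a single-channel image. The function name `image_to_patches` and the patch size are assumptions made for illustration; the abstract does not state the patch size, the embedding, or any other detail of the two ViT architectures that were evaluated.

```python
def image_to_patches(image, patch):
    """Split an H x W image (list of rows) into flattened patch tokens.

    Tokens are returned in row-major patch order; each token lists the
    pixels of one patch x patch block, also in row-major order.
    Hypothetical helper for illustration, not code from the paper.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            token = [
                image[row][col]
                for row in range(top, top + patch)
                for col in range(left, left + patch)
            ]
            tokens.append(token)
    return tokens
```

In a real model each token would then be linearly projected and combined with a position embedding before entering the transformer encoder.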

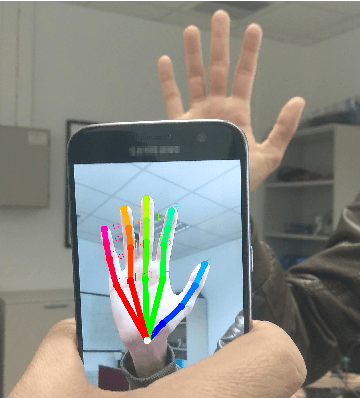

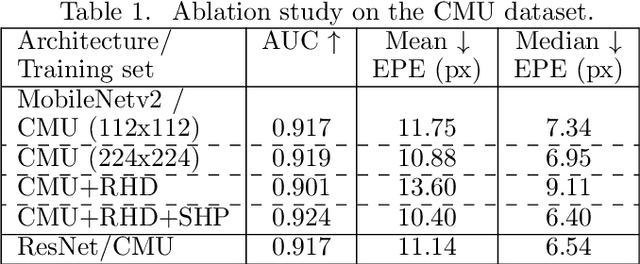

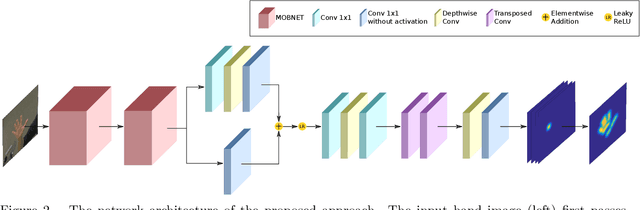

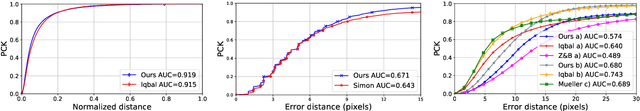

Accurate Hand Keypoint Localization on Mobile Devices

Dec 19, 2018

Abstract: We present a novel approach for 2D hand keypoint localization from regular color input. The proposed approach relies on an appropriately designed Convolutional Neural Network (CNN) that computes a set of heatmaps, one per hand keypoint of interest. Extensive experiments compare the proposed method against state-of-the-art approaches and demonstrate its accuracy and computational performance on standard, publicly available datasets. The obtained results demonstrate that the proposed method matches or outperforms the competing methods in accuracy while clearly outperforming them in computational efficiency, making it a suitable building block for applications that require hand keypoint estimation on mobile devices.
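A network that outputs one heatmap per keypoint still needs a decoding step to turn each map into a 2D location. A minimal sketch of the most common choice, taking the peak of each heatmap, is shown below. The abstract does not say how the authors decode their heatmaps, so the argmax rule and the name `decode_heatmaps` are assumptions made for illustration.

```python
def decode_heatmaps(heatmaps):
    """Return one (x, y, score) tuple per heatmap, located at its peak.

    `heatmaps` is a list of 2D grids (lists of rows), one per keypoint.
    Ties are resolved in favour of the first peak in row-major order.
    Hypothetical helper for illustration, not code from the paper.
    """
    keypoints = []
    for heatmap in heatmaps:
        best_x, best_y, best_score = 0, 0, heatmap[0][0]
        for y, row in enumerate(heatmap):
            for x, value in enumerate(row):
                if value > best_score:
                    best_x, best_y, best_score = x, y, value
        keypoints.append((best_x, best_y, best_score))
    return keypoints
```

The returned coordinates are in heatmap pixels, so they would still have to be scaled back to the resolution of the input crop.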

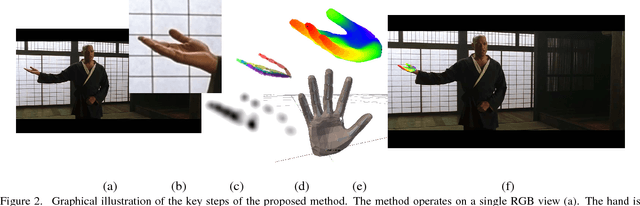

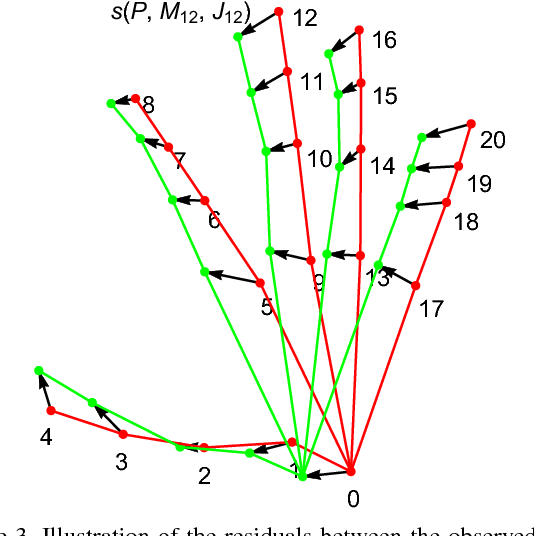

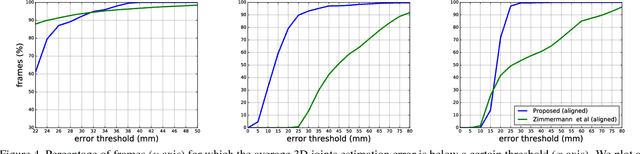

Using a single RGB frame for real time 3D hand pose estimation in the wild

Dec 11, 2017

Abstract: We present a method for the real-time estimation of the full 3D pose of one or more human hands using a single commodity RGB camera. Recent work in the area has displayed impressive progress using RGBD input. However, since the introduction of RGBD sensors, there has been little progress for the case of monocular color input. We capitalize on the latest advancements of deep learning, combining them with the power of generative hand pose estimation techniques to achieve real-time monocular 3D hand pose estimation in unrestricted scenarios. More specifically, given an RGB image and the relevant camera calibration information, we employ a state-of-the-art detector to localize hands. Given a crop of a hand in the image, we run the pretrained network of OpenPose for hands to estimate the 2D location of hand joints. Finally, non-linear least-squares minimization fits a 3D model of the hand to the estimated 2D joint positions, recovering the 3D hand pose. Extensive experimental results provide a comparison to the state of the art as well as a qualitative assessment of the method in the wild.
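The final fitting step can be illustrated with a heavily simplified sketch: instead of an articulated hand model, it fits only the 3D translation of a small rigid point set so that its pinhole projections match observed 2D points. The solver here is plain Gauss-Newton with a finite-difference Jacobian, chosen for brevity; the abstract does not name the solver, the hand model parameterization, or the camera model, so all names and parameters below are assumptions.

```python
def residuals(t, model, observed, focal):
    """Stacked 2D reprojection errors of the model translated by t."""
    out = []
    for (mx, my, mz), (u, v) in zip(model, observed):
        x, y, z = mx + t[0], my + t[1], mz + t[2]
        out.extend([focal * x / z - u, focal * y / z - v])
    return out


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for i in range(3):
        pivot = max(range(i, 3), key=lambda r: abs(m[r][i]))
        m[i], m[pivot] = m[pivot], m[i]
        for r in range(i + 1, 3):
            factor = m[r][i] / m[i][i]
            for c in range(i, 4):
                m[r][c] -= factor * m[i][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        tail = sum(m[i][c] * x[c] for c in range(i + 1, 3))
        x[i] = (m[i][3] - tail) / m[i][i]
    return x


def fit_translation(model, observed, focal, t0, iters=20, eps=1e-6):
    """Gauss-Newton fit of a 3D translation to 2D observations."""
    t = list(t0)
    for _ in range(iters):
        r = residuals(t, model, observed, focal)
        # Finite-difference Jacobian, stored column by column.
        cols = []
        for k in range(3):
            shifted = t[:]
            shifted[k] += eps
            rk = residuals(shifted, model, observed, focal)
            cols.append([(a - b) / eps for a, b in zip(rk, r)])
        # Normal equations: (J^T J) step = -J^T r.
        jtj = [[sum(p * q for p, q in zip(cols[i], cols[j]))
                for j in range(3)] for i in range(3)]
        jtr = [sum(p * q for p, q in zip(cols[i], r)) for i in range(3)]
        step = solve3(jtj, [-g for g in jtr])
        t = [ti + si for ti, si in zip(t, step)]
    return t
```

A full hand fit would replace the three translation parameters with the model's pose and articulation parameters, and would typically add damping and joint-limit handling.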

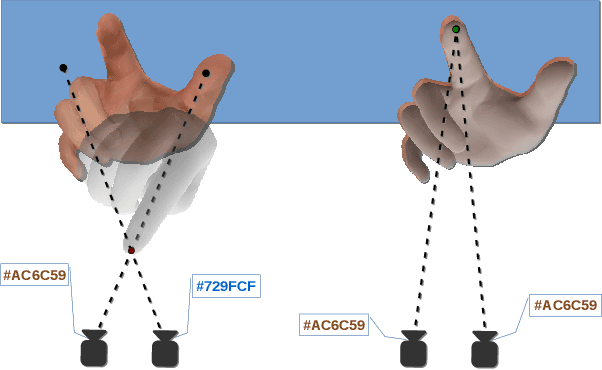

Back to RGB: 3D tracking of hands and hand-object interactions based on short-baseline stereo

May 15, 2017

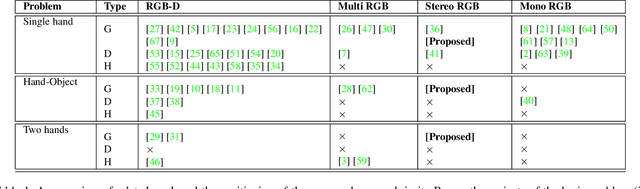

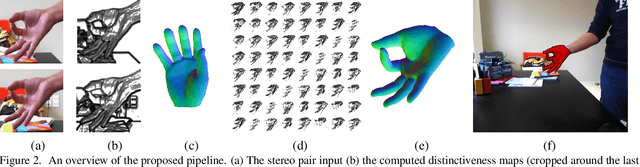

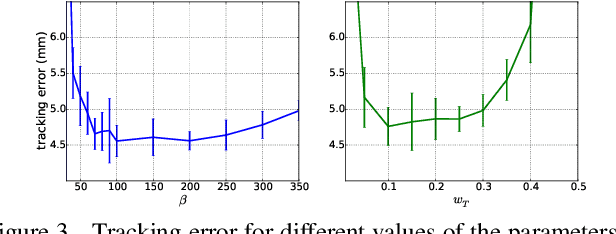

Abstract: We present a novel solution to the problem of 3D tracking of the articulated motion of human hand(s), possibly in interaction with other objects. The vast majority of contemporary relevant work capitalizes on depth information provided by RGBD cameras. In this work, we show that accurate and efficient 3D hand tracking is possible, even for the case of RGB stereo. A straightforward approach for solving the problem based on such input would be to first recover depth and then apply a state-of-the-art depth-based 3D hand tracking method. Unfortunately, this does not work well in practice because the stereo-based, dense 3D reconstruction of hands is far less accurate than the one obtained by RGBD cameras. Our approach bypasses 3D reconstruction and follows a completely different route: 3D hand tracking is formulated as an optimization problem whose solution is the hand configuration that maximizes the color consistency between the two views of the hand. We demonstrate the applicability of our method for real-time tracking of a single hand, of a hand manipulating an object and of two interacting hands. The method has been evaluated quantitatively on standard datasets and in comparison to relevant, state-of-the-art RGBD-based approaches. The obtained results demonstrate that the proposed stereo-based method performs as well as its RGBD-based competitors and, in some cases, even outperforms them.
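The idea of scoring a hypothesis by color consistency, without reconstructing depth first, can be sketched on a toy case. The function below projects hypothesized 3D surface points into a rectified stereo pair and returns the negative mean absolute intensity difference between the two views, so that a higher score means a more consistent hypothesis. The rectified geometry, the grayscale images, the principal point at pixel (0, 0), and the name `color_consistency` are all assumptions for illustration; the abstract does not specify the actual objective or the optimizer.

```python
def color_consistency(points, left, right, focal, baseline):
    """Score 3D points by how well their two projections agree in color.

    points   : (x, y, z) tuples in the left camera frame
    left     : left image as a list of rows of grayscale values
    right    : right image, same layout, camera shifted by `baseline` in x
    Returns the negative mean absolute difference, or -inf when no point
    projects inside both images. Hypothetical helper for illustration.
    """
    total, count = 0.0, 0
    for x, y, z in points:
        v = round(focal * y / z)
        u_left = round(focal * x / z)
        u_right = round(focal * (x - baseline) / z)
        inside = (
            0 <= v < len(left)
            and 0 <= u_left < len(left[0])
            and 0 <= u_right < len(right[0])
        )
        if inside:
            total += abs(left[v][u_left] - right[v][u_right])
            count += 1
    return -total / count if count else float("-inf")
```

A tracker built on this idea would evaluate such a score for many candidate hand configurations per frame and keep the best one; here the "configuration" is reduced to a handful of points.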
