Pascal Schweitzer

Trainability for Universal GNNs Through Surgical Randomness

Dec 08, 2021

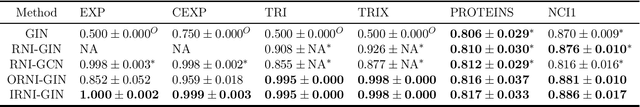

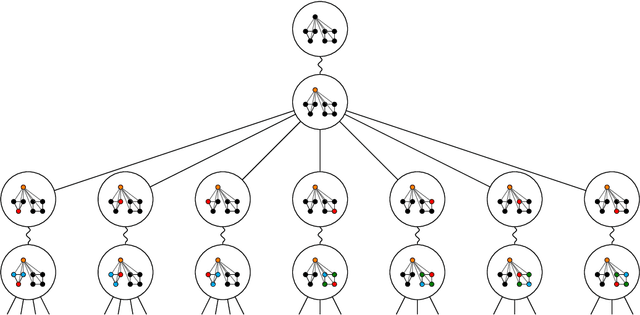

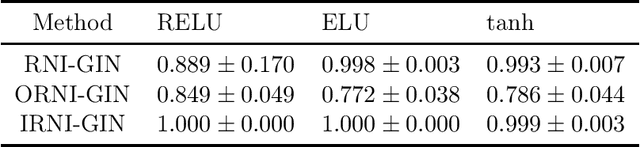

Abstract:Message passing neural networks (MPNN) have provable limitations, which can be overcome by universal networks. However, universal networks are typically impractical. The only exception is random node initialization (RNI), a data augmentation method that results in provably universal networks. Unfortunately, RNI suffers from severe drawbacks such as slow convergence and high sensitivity to changes in hyperparameters. We transfer powerful techniques from the practical world of graph isomorphism testing to MPNNs, resolving these drawbacks. This culminates in individualization-refinement node initialization (IRNI). We replace the indiscriminate and haphazard randomness used in RNI by a surgical incision of only a few random bits at well-selected nodes. Our novel non-intrusive data-augmentation scheme maintains the networks' universality while resolving the trainability issues. We formally prove the claimed universality and corroborate experimentally -- on synthetic benchmarks sets previously explicitly designed for that purpose -- that IRNI overcomes the limitations of MPNNs. We also verify the practical efficacy of our approach on the standard benchmark data sets PROTEINS and NCI1.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge