Paolo Angella

DIMA: DIffusing Motion Artifacts for unsupervised correction in brain MRI images

Apr 09, 2025

Abstract: Motion artifacts remain a significant challenge in Magnetic Resonance Imaging (MRI), compromising diagnostic quality and potentially leading to misdiagnosis or repeated scans. Existing deep learning approaches for motion artifact correction typically require paired motion-free and motion-affected images for training, which are rarely available in clinical settings. To overcome this requirement, we present DIMA (DIffusing Motion Artifacts), a novel framework that leverages diffusion models to enable unsupervised motion artifact correction in brain MRI. Our two-phase approach first trains a diffusion model on unpaired motion-affected images to learn the distribution of motion artifacts. This model then generates realistic motion artifacts on clean images, creating paired datasets suitable for supervised training of correction networks. Unlike existing methods, DIMA requires neither k-space manipulation nor detailed knowledge of MRI sequence parameters, making it adaptable across different scanning protocols and hardware. Comprehensive evaluations across multiple datasets and anatomical planes demonstrate that our method performs comparably to state-of-the-art supervised approaches while generalizing better to real clinical data. DIMA represents a significant step toward making motion artifact correction more accessible for routine clinical use, potentially reducing the need for repeat scans and improving diagnostic accuracy.
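For context, the k-space manipulation that the abstract says DIMA avoids can be sketched as follows. This is not the DIMA method and not taken from the paper: it is a minimal illustration of the conventional simulation idea, assuming rigid in-plane translation only and using the Fourier shift theorem. The function name `simulate_translation_artifact` and the parameters `max_shift`, `corrupt_fraction` and `seed` are hypothetical.

```python
import numpy as np

def simulate_translation_artifact(image, max_shift=4.0, corrupt_fraction=0.3, seed=0):
    """Corrupt a fraction of k-space rows with random in-plane translations.

    Illustrative assumption: each corrupted row is treated as if it had been
    acquired while the head was shifted by (dy, dx) pixels. By the Fourier
    shift theorem, a shift multiplies k-space by a linear phase ramp.
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape
    kspace = np.fft.fft2(image)

    # Spatial frequencies in cycles per pixel, in FFT ordering.
    ky = np.fft.fftfreq(h)
    kx = np.fft.fftfreq(w)

    # Pick which rows (phase-encode lines) are affected by motion.
    n_corrupt = int(round(corrupt_fraction * h))
    rows = rng.choice(h, size=n_corrupt, replace=False)

    corrupted = kspace.copy()
    for r in rows:
        dy, dx = rng.uniform(-max_shift, max_shift, size=2)
        phase = np.exp(-2j * np.pi * (ky[r] * dy + kx * dx))
        corrupted[r, :] = kspace[r, :] * phase

    # Magnitude image, as typically stored for reconstructed MRI slices.
    return np.abs(np.fft.ifft2(corrupted))

# Build one (motion-affected, clean) training pair from a synthetic slice.
clean = np.zeros((64, 64))
clean[20:44, 16:48] = 1.0
pair = (simulate_translation_artifact(clean), clean)
```

A simulator of this kind needs assumptions about how k-space lines are ordered and acquired, which is the sequence-specific knowledge the abstract says DIMA does not require. In DIMA, the role of producing the motion-affected half of each pair is taken by the trained diffusion model instead.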

Assessing the use of Diffusion models for motion artifact correction in brain MRI

Feb 03, 2025

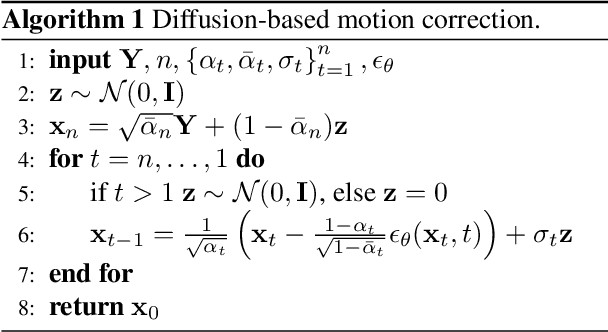

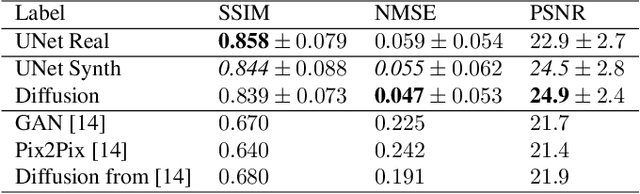

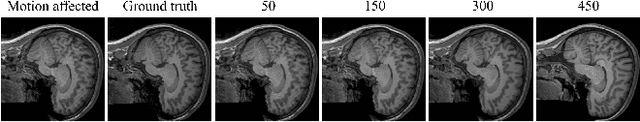

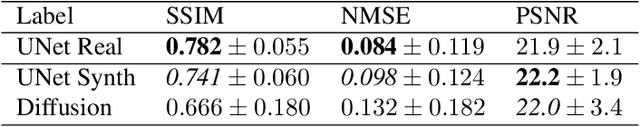

Abstract: Magnetic Resonance Imaging generally requires long acquisition times and is sensitive to patient motion, which produces artifacts in the acquired images that may hinder their diagnostic relevance. Despite research efforts to decrease acquisition time and to design efficient acquisition sequences, motion artifacts remain a persistent problem, motivating the development of automatic motion artifact correction techniques. Recently, diffusion models have been proposed as a solution for this task. While diffusion models can produce high-quality reconstructions, they are also susceptible to hallucination, which poses risks in diagnostic applications. In this study, we critically evaluate the use of diffusion models for correcting motion artifacts in 2D brain MRI scans. Using a popular benchmark dataset, we compare a diffusion model-based approach with state-of-the-art methods consisting of U-Nets trained in a supervised fashion on motion-affected images to reconstruct ground-truth motion-free images. Our findings reveal mixed results: in this context, diffusion models can either produce accurate predictions or generate harmful hallucinations, depending on data heterogeneity and the acquisition planes considered as input.
