Pablo G. Morato

IMP-MARL: a Suite of Environments for Large-scale Infrastructure Management Planning via MARL

Jun 20, 2023

Abstract: We introduce IMP-MARL, an open-source suite of multi-agent reinforcement learning (MARL) environments for large-scale Infrastructure Management Planning (IMP), offering a platform for benchmarking the scalability of cooperative MARL methods in real-world engineering applications. In IMP, a multi-component engineering system is subject to a risk of failure due to its components' damage condition. Specifically, each agent plans inspections and repairs for a specific system component, aiming to minimise maintenance costs while cooperating to minimise system failure risk. With IMP-MARL, we release several environments including one related to offshore wind structural systems, in an effort to meet today's needs to improve management strategies to support sustainable and reliable energy systems. Supported by IMP practical engineering environments featuring up to 100 agents, we conduct a benchmark campaign, where the scalability and performance of state-of-the-art cooperative MARL methods are compared against expert-based heuristic policies. The results reveal that centralised training with decentralised execution methods scale better with the number of agents than fully centralised or decentralised RL approaches, while also outperforming expert-based heuristic policies in most IMP environments. Based on our findings, we additionally outline remaining cooperation and scalability challenges that future MARL methods should still address. Through IMP-MARL, we encourage the implementation of new environments and the further development of MARL methods.

Farm-wide virtual load monitoring for offshore wind structures via Bayesian neural networks

Oct 31, 2022

Abstract: Offshore wind structures are subject to deterioration mechanisms throughout their operational lifetime. Even if the deterioration evolution of structural elements can be estimated through physics-based deterioration models, the uncertainties involved in the process hinder the selection of lifecycle management decisions. In this scenario, the collection of relevant information through an efficient monitoring system enables the reduction of uncertainties, ultimately driving more optimal lifecycle decisions. However, full monitoring instrumentation implemented on all wind turbines in a farm might become infeasible due to practical and economic constraints. Besides, certain load monitoring systems often become defective after a few years of marine environment exposure. Addressing the aforementioned concerns, a farm-wide virtual load monitoring scheme directed by a fleet-leader wind turbine offers an attractive solution. Fed with data retrieved from a fully-instrumented wind turbine, a model can be trained and then deployed, thus yielding load predictions for non-fully monitored wind turbines, from which only standard data remains available. In this paper, we propose a virtual load monitoring framework formulated via Bayesian neural networks (BNNs) and we provide relevant implementation details needed for the construction, training, and deployment of BNN data-based virtual monitoring models. As opposed to their deterministic counterparts, BNNs intrinsically quantify the uncertainties associated with generated load predictions and allow the detection of inaccurate load estimations generated for non-fully monitored wind turbines. The proposed virtual load monitoring is thoroughly tested through an experimental campaign in an operational offshore wind farm and the results demonstrate the effectiveness of BNN models for fleet-leader-based farm-wide virtual monitoring.

Inference and dynamic decision-making for deteriorating systems with probabilistic dependencies through Bayesian networks and deep reinforcement learning

Sep 02, 2022

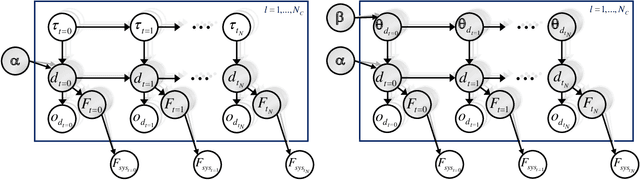

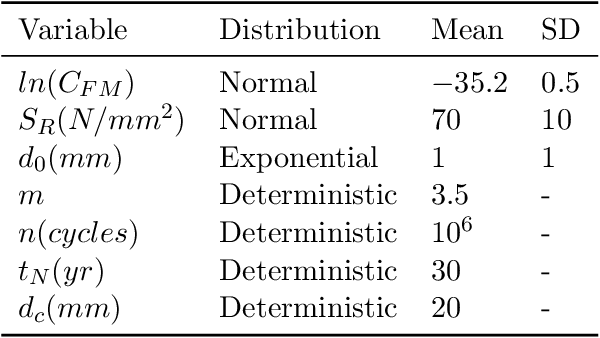

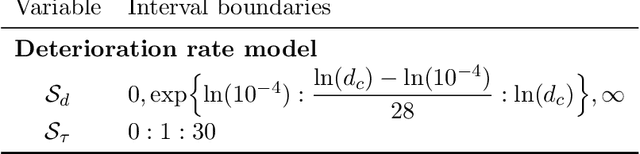

Abstract: In the context of modern environmental and societal concerns, there is an increasing demand for methods able to identify management strategies for civil engineering systems, minimizing structural failure risks while optimally planning inspection and maintenance (I&M) processes. Most available methods simplify the I&M decision problem to the component level due to the computational complexity associated with global optimization methodologies under joint system-level state descriptions. In this paper, we propose an efficient algorithmic framework for inference and decision-making under uncertainty for engineering systems exposed to deteriorating environments, providing optimal management strategies directly at the system level. In our approach, the decision problem is formulated as a factored partially observable Markov decision process, whose dynamics are encoded in Bayesian network conditional structures. The methodology can handle environments under equal or general, unequal deterioration correlations among components, through Gaussian hierarchical structures and dynamic Bayesian networks. In terms of policy optimization, we adopt a deep decentralized multi-agent actor-critic (DDMAC) reinforcement learning approach, in which the policies are approximated by actor neural networks guided by a critic network. By including deterioration dependence in the simulated environment, and by formulating the cost model at the system level, DDMAC policies intrinsically consider the underlying system-effects. This is demonstrated through numerical experiments conducted for both a 9-out-of-10 system and a steel frame under fatigue deterioration. Results demonstrate that DDMAC policies offer substantial benefits when compared to state-of-the-art heuristic approaches. The inherent consideration of system-effects by DDMAC strategies is also interpreted based on the learned policies.
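For readers unfamiliar with the "9-out-of-10 system" mentioned in this abstract, the following is a minimal sketch of how the failure probability of a k-out-of-n system can be computed. It assumes independent components with an identical failure probability, which is a simplification: the paper itself models deterioration dependence among components. The function name `k_out_of_n_failure_prob` and the example value of 0.1 are illustrative assumptions, not taken from the paper.

```python
from math import comb


def k_out_of_n_failure_prob(k: int, n: int, p_fail: float) -> float:
    """Failure probability of a k-out-of-n system.

    The system works when at least k of its n components work.
    Assumption (not from the paper): components fail independently,
    each with the same probability p_fail.
    """
    p_ok = 1.0 - p_fail
    # Sum the binomial probabilities of having m working components, m = k..n.
    p_system_ok = sum(
        comb(n, m) * p_ok**m * p_fail ** (n - m) for m in range(k, n + 1)
    )
    return 1.0 - p_system_ok


# Hypothetical example: 9-out-of-10 system, 10% component failure probability.
print(k_out_of_n_failure_prob(9, 10, 0.1))
```

Under these assumptions the system fails as soon as two or more components fail, which is why system-level risk can be far higher than the risk of any single component.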
