P Ellen Grant

Equivariant Filters for Efficient Tracking in 3D Imaging

Mar 18, 2021

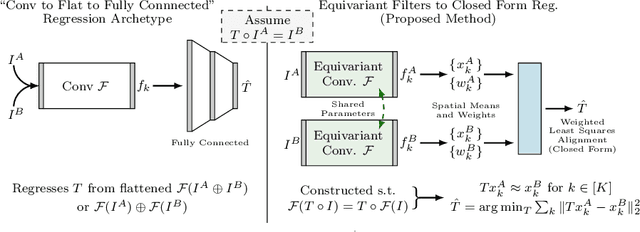

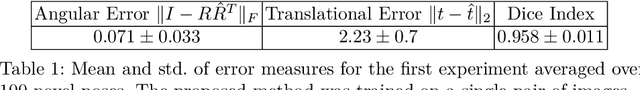

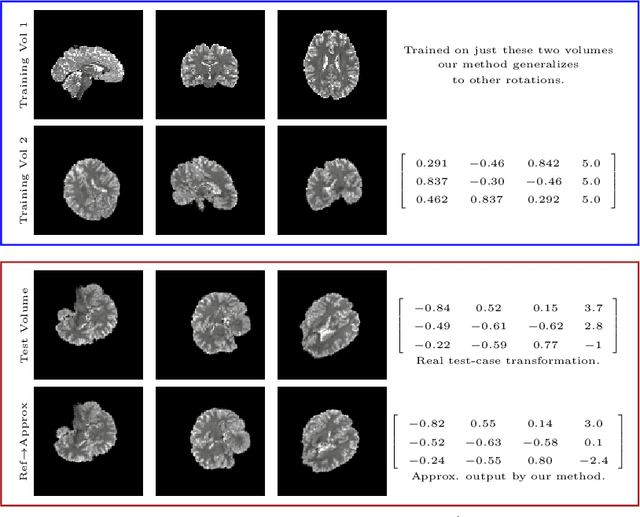

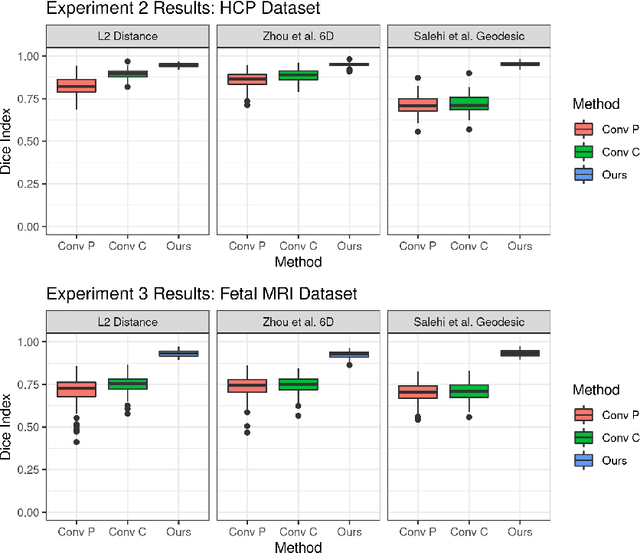

Abstract:We demonstrate an object tracking method for {3D} images with fixed computational cost and state-of-the-art performance. Previous methods predicted transformation parameters from convolutional layers. We instead propose an architecture that does not include either flattening of convolutional features or fully connected layers, but instead relies on equivariant filters to preserve transformations between inputs and outputs (e.g. rot./trans. of inputs rotate/translate outputs). The transformation is then derived in closed form from the outputs of the filters. This method is useful for applications requiring low latency, such as real-time tracking. We demonstrate our model on synthetically augmented adult brain MRI, as well as fetal brain MRI, which is the intended use-case.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge