Ozgur Ercetin

On the Structure of the Optimal Detector for Sub-THz Multi-Hop Relays with Unknown Prior: Over-the-Air Diffusion

Jan 03, 2026

Abstract: Amplify-and-forward (AF) relaying is a viable strategy to extend the coverage of sub-terahertz (sub-THz) links, but it inevitably propagates noise, leading to cumulative degradation across multiple hops. At the receiver, optimal decoding is desirable yet challenging under non-Gaussian input distributions (video, voice, etc.), for which neither the minimum mean square error (MMSE) estimator nor the mutual information admits a closed form. A further open question is whether knowledge of channel state information (CSI) and noise statistics at the intermediate relays is necessary for optimal detection. Aiming for an optimal decoder, this paper introduces a new framework that interprets the AF relay chain as a variance-preserving diffusion process and employs denoising diffusion implicit models (DDIMs) for signal recovery. We show that each AF hop is mathematically equivalent to a diffusion step with hop-dependent attenuation and noise injection. Consequently, the entire multi-hop chain collapses to an equivalent Gaussian channel fully described by only three real scalars per block: the cumulative complex gain (its real and imaginary parts) and the effective noise variance. At the receiver, these end-to-end sufficient statistics define a matched reverse schedule that guides the DDIM-based denoiser, enabling near-optimal Bayesian decoding without per-hop CSI. We establish the information-theoretic foundation of this equivalence, proving that decoding performance depends solely on the final effective signal-to-noise ratio (SNR), regardless of the intermediate noise/channel allocation or the prior distribution. Simulations under AWGN and Rician fading confirm that the proposed AF-DDIM decoder reduces mean squared error, symbol error rate, and bit error rate, particularly at moderate SNRs and for higher-order constellations.
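To make the collapse of the chain concrete, the sketch below assumes a simple per-hop model that the abstract does not spell out: hop k delivers y_k = h_k s_{k-1} + n_k with complex channel gain h_k and circularly symmetric Gaussian noise of variance sigma_k^2, and relay k forwards s_k = a_k y_k. Under that assumption the chain reduces to y = H x + w, and the three real scalars are Re H, Im H, and the effective noise variance. The mapping to a variance-preserving point alpha_bar = SNR / (1 + SNR) (unit-power input, output rescaled to unit power) is our illustration of the equivalence, not necessarily the paper's parameterization; all function names are hypothetical.

```python
import numpy as np


def collapse_af_chain(h, a, noise_var):
    """Collapse a K-hop AF chain into y = gain * x + w, with w ~ CN(0, sigma2).

    Hypothetical model: h = K complex hop gains, a = K-1 relay amplification
    gains, noise_var = noise variance added on each of the K hops.
    """
    if len(a) != len(h) - 1 or len(noise_var) != len(h):
        raise ValueError("need K channel gains, K-1 relay gains, K noise variances")
    gain, sigma2 = 1.0 + 0.0j, 0.0
    for k, hk in enumerate(h):
        # Hop k scales the signal and all accumulated noise, then adds fresh noise.
        gain *= hk
        sigma2 = abs(hk) ** 2 * sigma2 + noise_var[k]
        if k < len(a):
            # Relay k amplifies signal and noise alike; it cannot separate them.
            gain *= a[k]
            sigma2 *= abs(a[k]) ** 2
    return gain, sigma2


def vp_schedule_point(gain, sigma2, signal_power=1.0):
    """Return the three real scalars, the end-to-end SNR, and the VP-diffusion alpha_bar."""
    snr = abs(gain) ** 2 * signal_power / sigma2
    # Rescaled output: sqrt(alpha_bar) * x + sqrt(1 - alpha_bar) * eps
    alpha_bar = snr / (1.0 + snr)
    return (gain.real, gain.imag, sigma2), snr, alpha_bar


def simulate_chain(x, h, a, noise_var, rng):
    """Hop-by-hop Monte Carlo simulation, used only to check the closed form."""
    s = x
    for k, hk in enumerate(h):
        n = np.sqrt(noise_var[k] / 2) * (
            rng.standard_normal(x.shape) + 1j * rng.standard_normal(x.shape)
        )
        s = hk * s + n
        if k < len(a):
            s = a[k] * s
    return s


if __name__ == "__main__":
    # Hypothetical 3-hop example: channel gains, relay gains, per-hop noise.
    h, a, nv = [2.0, 1j, 0.5], [0.5, 2.0], [1.0, 1.0, 1.0]
    gain, sigma2 = collapse_af_chain(h, a, nv)
    scalars, snr, alpha_bar = vp_schedule_point(gain, sigma2)
    print("three scalars:", scalars, "SNR:", snr, "alpha_bar:", alpha_bar)

    # Monte Carlo check with unit-power QPSK, a non-Gaussian prior.
    rng = np.random.default_rng(0)
    n = 200_000
    x = (rng.choice([-1.0, 1.0], n) + 1j * rng.choice([-1.0, 1.0], n)) / np.sqrt(2)
    y = simulate_chain(x, h, a, nv, rng)
    print("empirical noise variance:", np.var(y - gain * x))
```

In this sketch only the end-to-end pair (gain, sigma2) enters alpha_bar, so two chains with different per-hop allocations but the same pair map to the same schedule point.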

Online Learning for Autonomous Management of Intent-based 6G Networks

Jul 25, 2024

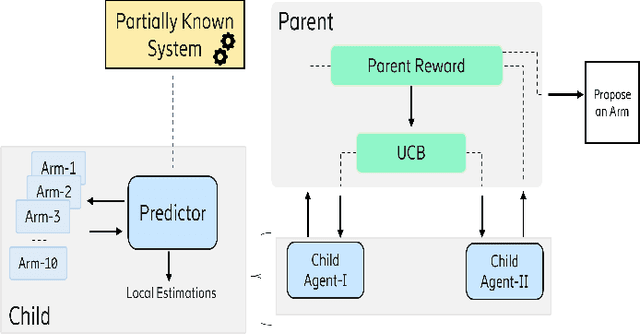

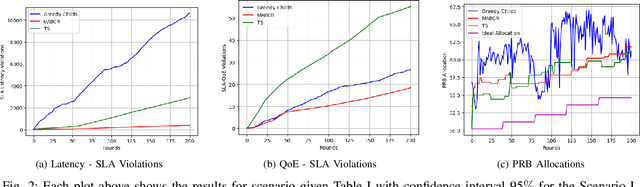

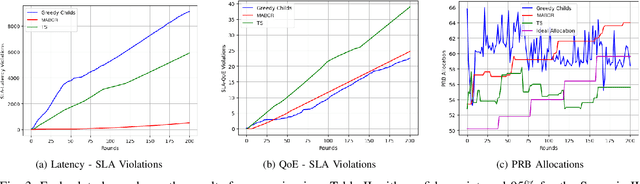

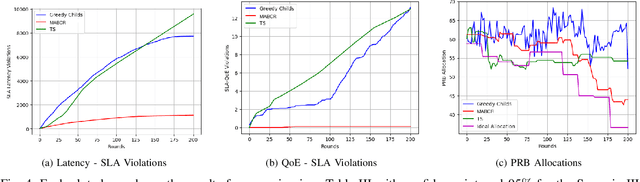

Abstract: The growing complexity of networks and the variety of future scenarios with diverse and often stringent performance requirements call for a higher level of automation. Intent-based management emerges as a solution to attain a high level of automation, enabling human operators to communicate with the network solely through high-level intents. An intent specifies targets in the form of expectations on a service (e.g., a latency expectation), and the required network configurations are then made accordingly. It is almost inevitable that a network action taken to fulfill one intent will degrade the performance of another intent, resulting in a conflict. In this paper, we address such conflicts and the autonomous management of intent-based networks, and propose an online learning method based on hierarchical multi-armed bandits for effective management. Thanks to its hierarchical structure, the method efficiently explores and exploits network configurations under dynamic network conditions. We show that our algorithm is effective in terms of resource allocation and satisfaction of intent expectations.
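The abstract does not detail the bandit hierarchy, so the following is only a generic two-level UCB1 scheme, assuming a hypothetical split of the configuration space: an upper bandit picks a configuration group (for example a resource tier) and a lower bandit within that group picks a concrete configuration. The reward is a hypothetical intent-satisfaction indicator in [0, 1]; the class names, group layout, and probabilities are illustrative, not the paper's.

```python
import math
import random


class UCB1:
    """Standard UCB1 over a fixed number of arms with rewards in [0, 1]."""

    def __init__(self, n_arms, c=2.0):
        self.counts = [0] * n_arms
        self.means = [0.0] * n_arms
        self.c = c
        self.t = 0

    def select(self):
        self.t += 1
        for arm, n in enumerate(self.counts):
            if n == 0:  # play every arm once before using confidence bounds
                return arm
        return max(
            range(len(self.counts)),
            key=lambda i: self.means[i]
            + math.sqrt(self.c * math.log(self.t) / self.counts[i]),
        )

    def update(self, arm, reward):
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]


class HierarchicalBandit:
    """Upper bandit chooses a configuration group, lower bandit a configuration in it."""

    def __init__(self, group_sizes):
        self.top = UCB1(len(group_sizes))
        self.sub = [UCB1(size) for size in group_sizes]

    def select(self):
        g = self.top.select()
        return g, self.sub[g].select()

    def update(self, g, j, reward):
        # The same observed reward trains both levels of the hierarchy.
        self.top.update(g, reward)
        self.sub[g].update(j, reward)


def run(satisfaction_prob, rounds=3000, seed=0):
    """Simulate Bernoulli intent-satisfaction rewards; return play counts per (group, config)."""
    rng = random.Random(seed)
    agent = HierarchicalBandit([len(g) for g in satisfaction_prob])
    plays = {}
    for _ in range(rounds):
        g, j = agent.select()
        reward = 1.0 if rng.random() < satisfaction_prob[g][j] else 0.0
        agent.update(g, j, reward)
        plays[(g, j)] = plays.get((g, j), 0) + 1
    return plays


if __name__ == "__main__":
    # Hypothetical: group 0 = low-bandwidth configs, group 1 = high-bandwidth configs.
    probs = [[0.2, 0.3], [0.5, 0.9]]
    plays = run(probs)
    print(sorted(plays.items(), key=lambda kv: -kv[1]))
```

The hierarchy keeps each bandit small: the upper level only compares groups, so poorly performing groups are abandoned without exploring every configuration inside them.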

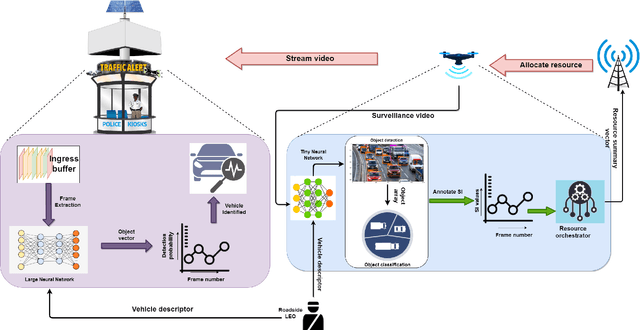

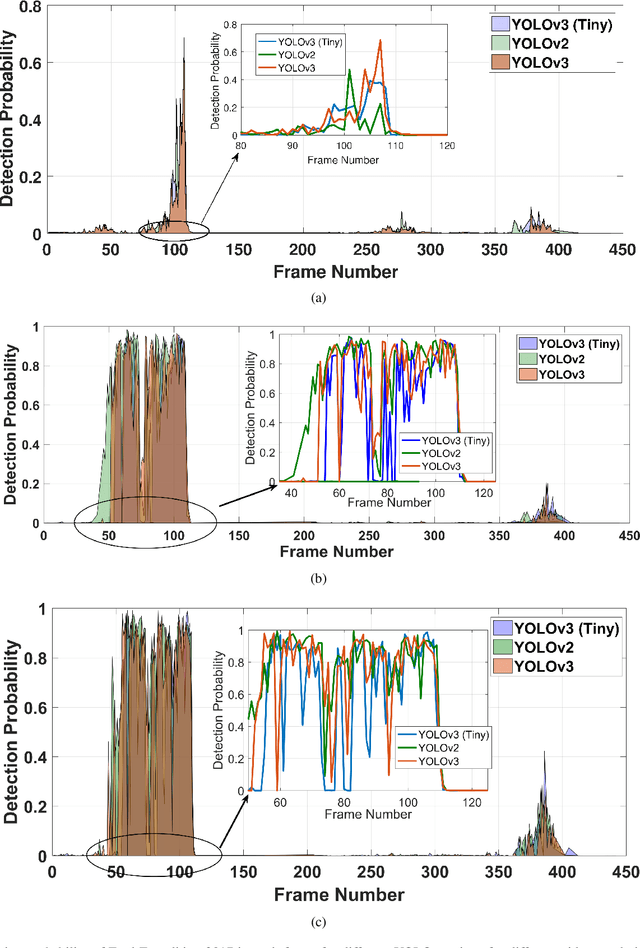

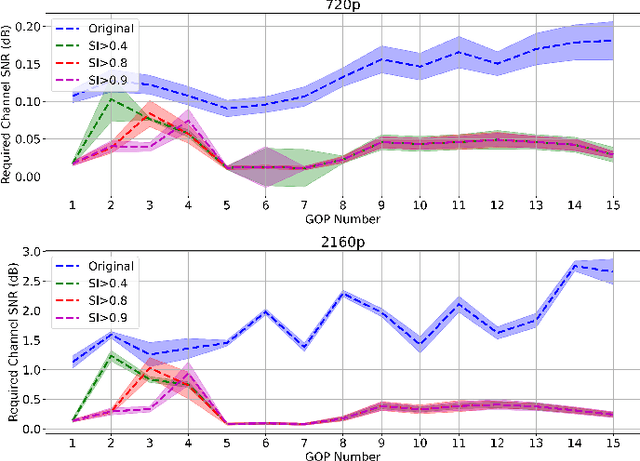

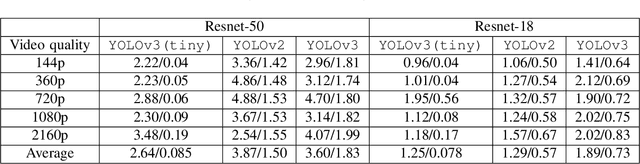

An End-to-End Integrated Computation and Communication Architecture for Goal-oriented Networking: A Perspective on Live Surveillance Video

Apr 05, 2022

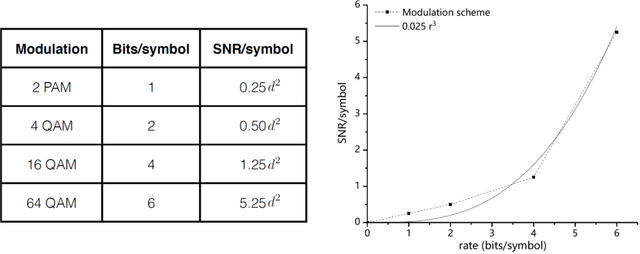

Abstract: Real-time video surveillance has become a crucial technology for smart cities, made possible by the large-scale deployment of mobile and fixed video cameras. In this paper, we propose situation-aware streaming for real-time identification of important events from live feeds at the source, rather than through cloud-based analysis. To this end, we first identify the frames containing a specific situation and assign them a high scale-of-importance (SI). The identification is made at the source using a tiny neural network (with a small number of hidden layers), which requires few computational resources, albeit at the cost of accuracy. The frames with a high SI value are then streamed at the signal-to-noise ratio (SNR) required to retain frame quality, while the remaining frames are transmitted at a low SNR. The received frames are then analyzed by a deep neural network (with many hidden layers) to identify the situation accurately. We show that the proposed scheme reduces the transmitter's required power consumption by 38.5% for 2160p (UHD) video while achieving a classification accuracy of 97.5% for the given situation.
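The power saving comes from sending only high-SI frames at the quality-preserving SNR. A back-of-the-envelope sketch, assuming a fixed channel gain and noise level so that transmit power scales linearly with SNR, and using hypothetical SI scores, threshold, and SNR targets (these are not the paper's values and do not reproduce the reported 38.5%):

```python
def db_to_linear(db):
    return 10.0 ** (db / 10.0)


def assign_snr(si_scores, threshold, snr_high_db, snr_low_db):
    """High-SI frames get the quality-preserving SNR, the rest a low SNR."""
    return [snr_high_db if si >= threshold else snr_low_db for si in si_scores]


def power_saving(frame_snr_db, reference_snr_db):
    """Fractional transmit-power saving versus sending every frame at reference_snr_db.

    Assumes identical channel gain and noise for all frames, so power is
    proportional to linear SNR.
    """
    used = sum(db_to_linear(s) for s in frame_snr_db)
    baseline = len(frame_snr_db) * db_to_linear(reference_snr_db)
    return 1.0 - used / baseline


if __name__ == "__main__":
    # Hypothetical SI scores produced by the on-device tiny network.
    si = [0.9, 0.1, 0.8, 0.2]
    snrs = assign_snr(si, threshold=0.5, snr_high_db=20.0, snr_low_db=10.0)
    print(snrs, f"saving = {power_saving(snrs, 20.0):.0%}")
```

Because SNR is additive in dB but power is additive in linear units, the saving is dominated by how many frames drop to the low SNR, not by the size of the dB gap alone.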

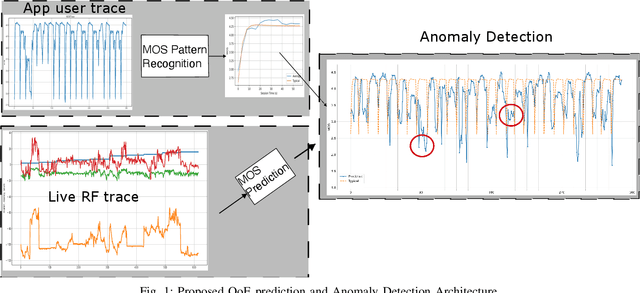

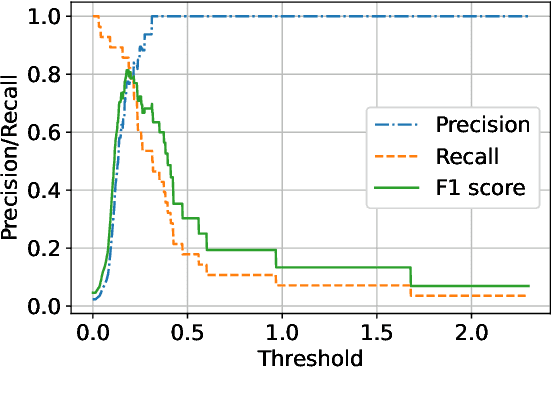

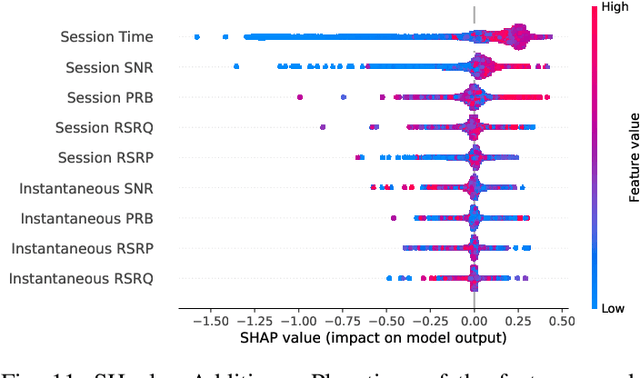

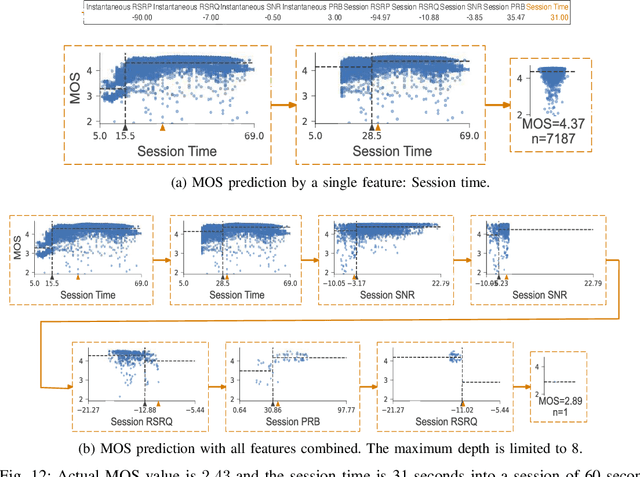

Virtual Drive-Tests: A Case for Predicting QoE in Adaptive Video Streaming

Jul 26, 2021

Abstract: Intelligent and autonomous troubleshooting is a crucial enabler for current 5G and future 6G networks. In this work, we develop a flexible architecture for detecting anomalies in adaptive video streaming, comprising three main components: i) a pattern recognizer that learns a typical video quality pattern from the client-side application traces of a specific reference video, ii) a predictor that maps radio frequency (RF) performance indicators, collected on the network side using user-based traces, to a video quality measure, and iii) an anomaly detector that compares the predicted video quality pattern with the typical pattern to identify anomalies. We use real network traces (i.e., on-device measurements) collected in different geographical locations and at various times of day to train our machine learning models. We perform extensive numerical analysis to identify the key parameters affecting correct video quality prediction and anomaly detection. In particular, we show that video playback time is the most crucial parameter determining video quality, since buffering continues during playback, resulting in better video quality further into the playback. However, we also reveal that RF performance indicators characterizing the quality of the cellular connectivity are required to correctly predict quality of experience (QoE) in anomalous cases. Finally, we show that the mean maximum F1-score of our method is 77%, verifying the efficacy of our models. Our architecture is flexible and autonomous, so it can be applied to, and operated with, other user applications as long as the relevant user-based traces are available.
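The third component compares a predicted quality pattern with a learned typical one. The abstract does not give the comparison rule, so the sketch below assumes one: the typical pattern is the per-time-bin mean and standard deviation of quality over reference sessions, and a session is flagged when enough bins deviate by more than z standard deviations. It also shows the F1-score used to evaluate such a detector. The thresholds, data, and function names are hypothetical.

```python
import numpy as np


def learn_typical_pattern(sessions):
    """sessions: (n_sessions, n_time_bins) video-quality values per playback-time bin."""
    s = np.asarray(sessions, dtype=float)
    return s.mean(axis=0), s.std(axis=0) + 1e-9  # small floor avoids division by zero


def is_anomalous(predicted, mean, std, z=2.0, min_fraction=0.25):
    """Flag a session whose predicted pattern leaves the typical band in enough bins."""
    deviation = np.abs((np.asarray(predicted, dtype=float) - mean) / std)
    return bool(np.mean(deviation > z) >= min_fraction)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for binary anomaly labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical reference sessions: quality improves with playback time.
    reference = [[1.0, 2.0, 3.0, 4.0], [1.2, 2.2, 3.2, 4.2], [0.8, 1.8, 2.8, 3.8]]
    mean, std = learn_typical_pattern(reference)
    # Stand-ins for outputs of the RF-to-video-quality predictor.
    predictions = [[1.1, 2.1, 2.9, 4.0], [1.0, 1.0, 1.0, 1.0]]
    flags = [is_anomalous(p, mean, std) for p in predictions]
    print(flags, f1_score([False, True], flags))
```

Binning by playback time reflects the abstract's finding that playback time is the dominant factor: the typical band itself rises as the buffer fills.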

Hierarchical Federated Learning Across Heterogeneous Cellular Networks

Sep 05, 2019

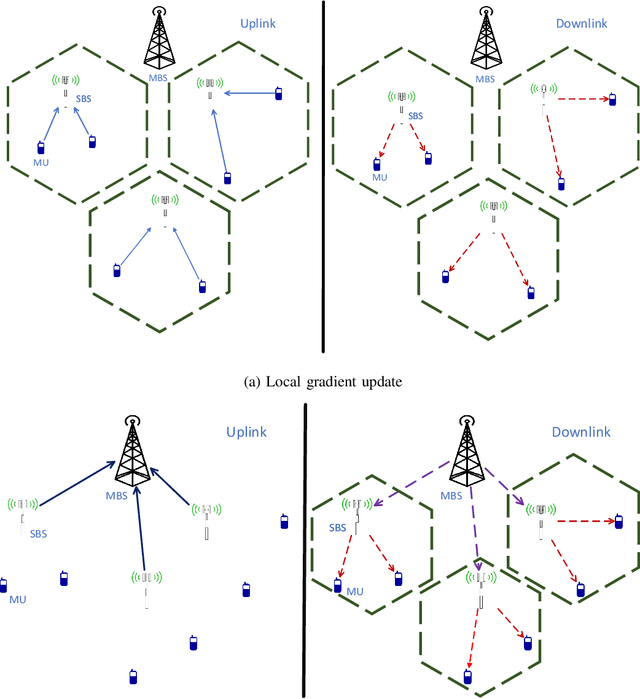

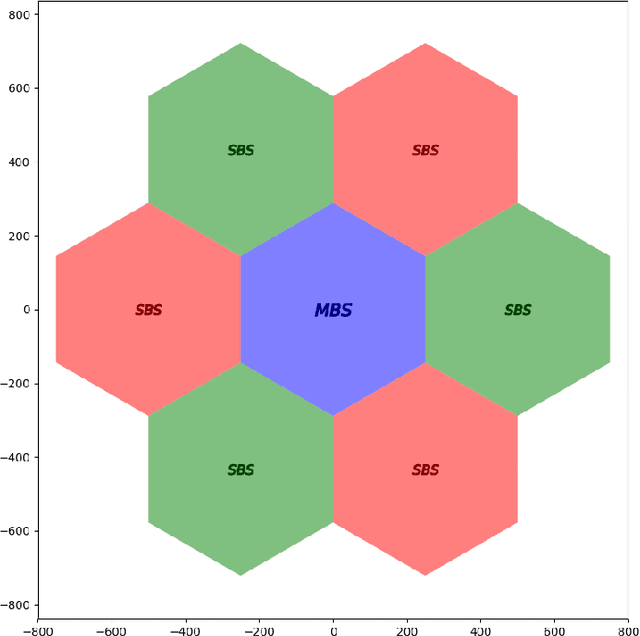

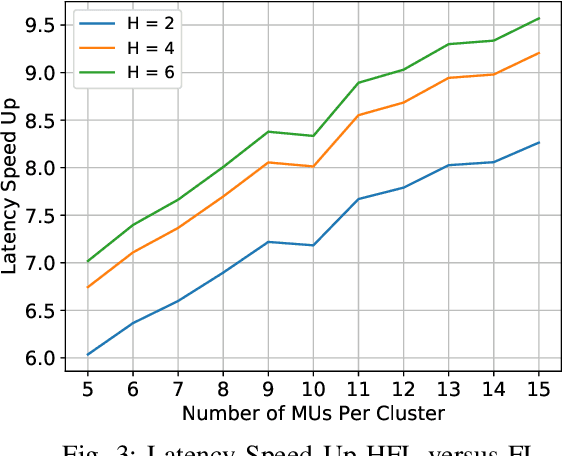

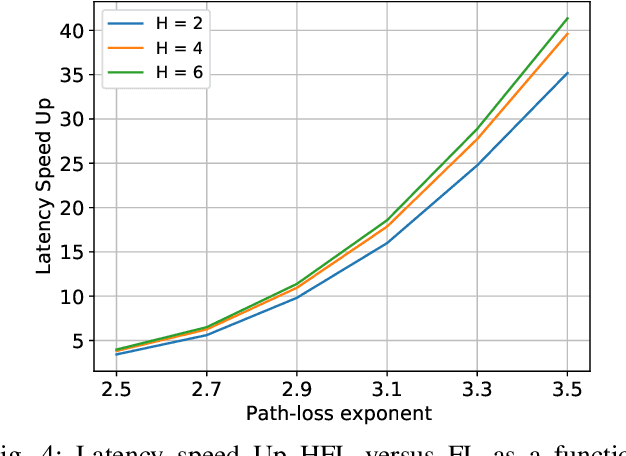

Abstract: We study collaborative machine learning (ML) across wireless devices, each with its own local dataset. Offloading these datasets to a cloud or an edge server to implement powerful ML solutions is often infeasible due to latency, bandwidth, and privacy constraints. Instead, we consider federated edge learning (FEEL), where the devices share local updates on the model parameters rather than their datasets. We consider a heterogeneous cellular network (HCN), where small cell base stations (SBSs) orchestrate federated learning (FL) among the mobile users (MUs) within their cells and periodically exchange model updates with the macro base station (MBS) for global consensus. We employ gradient sparsification and periodic averaging to increase the communication efficiency of this hierarchical FL framework. Using the CIFAR-10 dataset, we then show that the proposed hierarchical learning solution can significantly reduce communication latency without sacrificing model accuracy.
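A toy sketch of the two communication-saving mechanisms named above, under assumptions of ours: top-k sparsification with error accumulation (each MU keeps the entries it did not send and adds them to its next update), averaging of the MUs' sparse updates at each SBS, and weighted averaging of the SBS models at the MBS every `period` iterations. Quadratic local losses stand in for CIFAR-10 training, and every name and parameter value is hypothetical.

```python
import numpy as np


def top_k_sparsify(update, k):
    """Keep the k largest-magnitude entries; return (sparse update, dropped residual)."""
    update = np.asarray(update, dtype=float)
    sparse = np.zeros_like(update)
    if k > 0:
        idx = np.argpartition(np.abs(update), -k)[-k:]
        sparse[idx] = update[idx]
    return sparse, update - sparse


def sbs_aggregate(mu_updates, k, residuals):
    """Each MU adds its stored residual, sends the top-k entries, and keeps the rest."""
    sent = []
    for i, u in enumerate(mu_updates):
        s, residuals[i] = top_k_sparsify(u + residuals[i], k)
        sent.append(s)
    return np.mean(sent, axis=0)


def mbs_average(sbs_models, cell_sizes):
    """Global consensus: the MBS averages SBS models weighted by MUs per cell."""
    weights = np.asarray(cell_sizes, dtype=float)
    return np.average(np.stack(sbs_models), axis=0, weights=weights)


def train(n_sbs=2, n_mu=3, dim=10, k=3, lr=0.05, steps=300, period=5, seed=0):
    rng = np.random.default_rng(seed)
    # Hypothetical local losses 0.5 * ||w - c||^2, one optimum c per MU.
    targets = rng.normal(5.0, 1.0, size=(n_sbs, n_mu, dim))
    global_w = np.zeros(dim)
    sbs_models = [global_w.copy() for _ in range(n_sbs)]
    residuals = [[np.zeros(dim) for _ in range(n_mu)] for _ in range(n_sbs)]
    for t in range(1, steps + 1):
        for s in range(n_sbs):
            # Local update = learning rate times the gradient (w - c) of each MU's loss.
            updates = [lr * (sbs_models[s] - c) for c in targets[s]]
            sbs_models[s] = sbs_models[s] - sbs_aggregate(updates, k, residuals[s])
        if t % period == 0:  # periodic averaging at the MBS
            global_w = mbs_average(sbs_models, [n_mu] * n_sbs)
            sbs_models = [global_w.copy() for _ in range(n_sbs)]
    # The minimizer of the summed losses is the mean of all MU optima.
    return global_w, targets.reshape(-1, dim).mean(axis=0)


if __name__ == "__main__":
    w, w_opt = train()
    print("distance to optimum:", np.linalg.norm(w - w_opt))
```

Each MU uploads only k of dim entries per iteration, and the SBS-to-MBS link is used once every `period` iterations; error accumulation keeps the dropped entries from being lost, they are merely delayed.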

Finite Horizon Throughput Maximization and Sensing Optimization in Wireless Powered Devices over Fading Channels

Sep 09, 2018

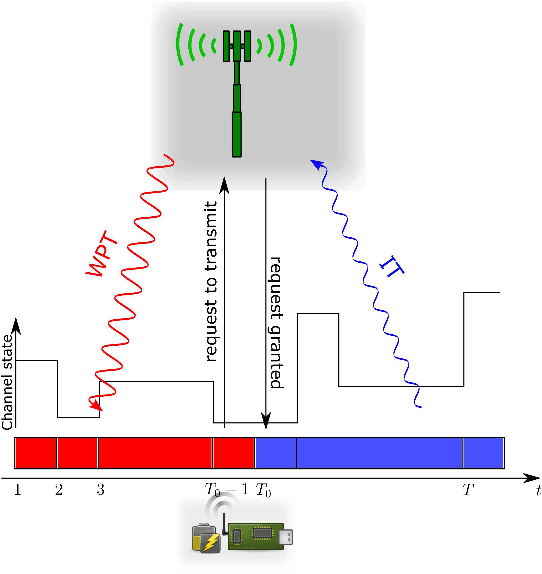

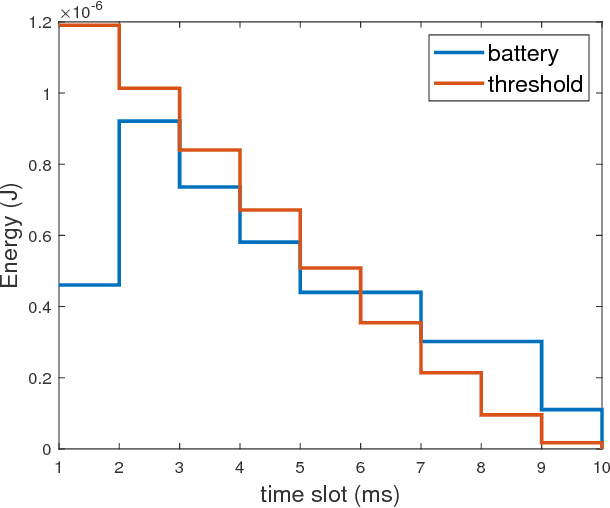

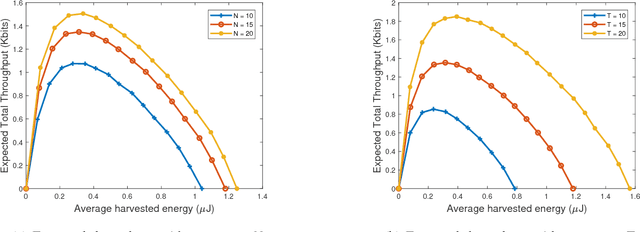

Abstract: Wireless power transfer (WPT) is a promising technology that gives the network a way to replenish the batteries of remote devices using RF transmissions. We study a class of harvest-first-transmit-later WPT policies, where an access point (AP) first employs RF power transfer to recharge a wireless powered device (WPD) for a period whose duration is subject to optimization, after which the WPD uses the harvested energy to transmit its data bits back to the AP over a finite horizon. A significant challenge in this scenario is the time-varying nature of the wireless channel linking the WPD to the AP. As a benchmark, we first investigate the offline case, where the channel realizations are known non-causally before the start of the horizon. For the offline case, by finding the optimal WPT duration and the power allocation over the data transmission period, we derive an upper bound on the throughput of the WPD. We then focus on the online counterpart of the problem, where the channel realizations are known causally. We prove that the optimal WPT duration obeys a time-dependent threshold form that depends on the energy state of the WPD. In the subsequent data transmission stage, the optimal transmit power allocation for the WPD is shown to have a fractional structure: at each time slot, a fraction of the energy that depends on the current channel and on a measure of the expected future channel states is allocated for data transmission. We numerically show that the online policy performs almost identically to the upper bound. We then consider a data sensing application, where the WPD adjusts the sensing resolution to balance the quality of the sensed data against the probability of successfully delivering it. We use Bayesian inference as a reinforcement learning method to provide a means for the WPD to learn to balance the sensing resolution.
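For the offline benchmark, once the WPT duration is fixed, all harvested energy is available before transmission begins, so, assuming a per-slot rate of log2(1 + h_t p_t) with noise-normalized channel gains h_t, the power allocation reduces to classic water-filling. The sketch below further assumes channel reciprocity, so that charging slot t harvests eta * P_AP * h_t in normalized units, and that charging and transmission share one horizon of N slots; it then searches the WPT duration exhaustively. The efficiency eta, the AP power, and the slot structure are our assumptions, and the paper's online threshold and fractional policies are not reproduced here.

```python
import numpy as np


def water_filling(gains, energy, iters=100):
    """Maximize sum(log2(1 + g_t p_t)) s.t. sum(p_t) <= energy, p_t >= 0.

    Bisection on the water level mu, with p_t = max(0, mu - 1/g_t).
    Assumes all gains are strictly positive.
    """
    gains = np.asarray(gains, dtype=float)
    if energy <= 0 or gains.size == 0:
        return np.zeros_like(gains)
    # At mu = hi every slot alone would already use at least `energy`.
    lo, hi = 0.0, energy + 1.0 / gains.min()
    for _ in range(iters):
        mu = 0.5 * (lo + hi)
        if np.maximum(0.0, mu - 1.0 / gains).sum() > energy:
            hi = mu
        else:
            lo = mu
    return np.maximum(0.0, lo - 1.0 / gains)


def offline_upper_bound(gains, p_ap=10.0, eta=0.5):
    """Exhaustive search over the WPT duration tau (in slots) with non-causal channel knowledge.

    Hypothetical model: charging slot t harvests eta * p_ap * gains[t];
    slots tau..N-1 carry data using water-filled powers.
    """
    gains = np.asarray(gains, dtype=float)
    best_tau, best_bits = 0, 0.0
    for tau in range(1, len(gains)):
        energy = eta * p_ap * gains[:tau].sum()
        p = water_filling(gains[tau:], energy)
        bits = np.log2(1.0 + gains[tau:] * p).sum()
        if bits > best_bits:
            best_tau, best_bits = tau, bits
    return best_tau, best_bits


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gains = rng.exponential(1.0, size=20)  # Rayleigh-fading power gains (assumption)
    tau, bits = offline_upper_bound(gains)
    print(f"best WPT duration: {tau} slots, throughput: {bits:.2f} bits/Hz")
```

Because the search uses the entire channel sequence in advance, it can only serve as an upper bound; an online policy must choose the WPT duration and per-slot powers causally.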
