Mehdi Salehi Heydar Abad

Hierarchical Federated Learning Across Heterogeneous Cellular Networks

Sep 05, 2019

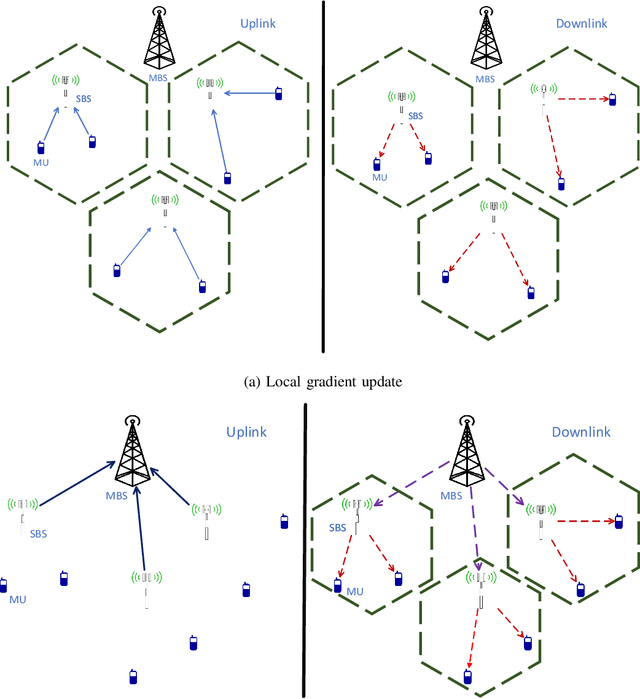

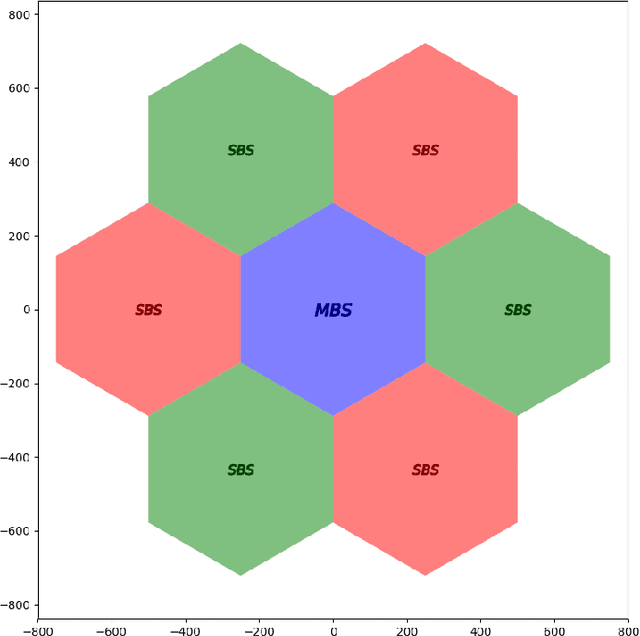

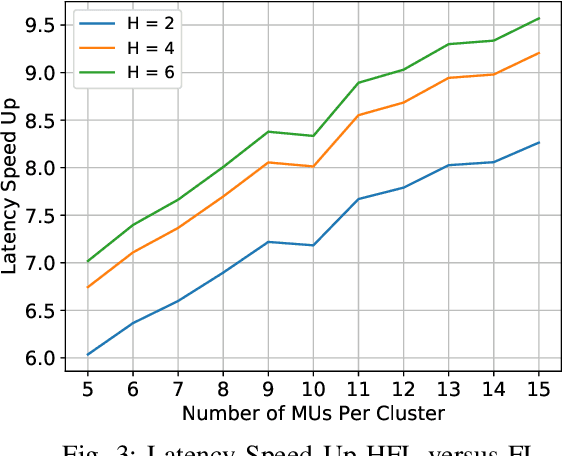

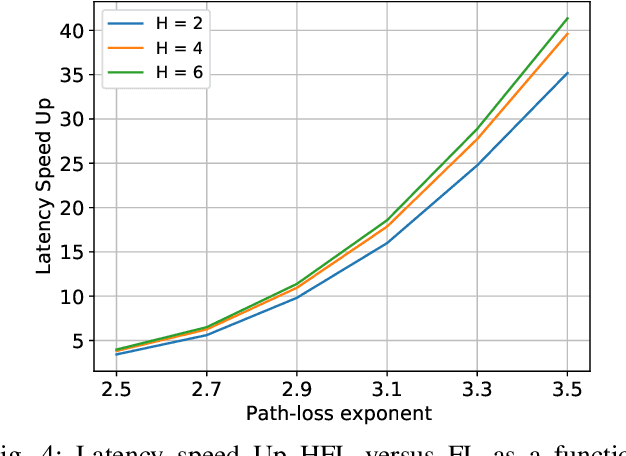

Abstract: We study collaborative machine learning (ML) across wireless devices, each with its own local dataset. Offloading these datasets to a cloud or an edge server to implement powerful ML solutions is often not feasible due to latency, bandwidth and privacy constraints. Instead, we consider federated edge learning (FEEL), where the devices share local updates on the model parameters rather than their datasets. We consider a heterogeneous cellular network (HCN), where small cell base stations (SBSs) orchestrate federated learning (FL) among the mobile users (MUs) within their cells, and periodically exchange model updates with the macro base station (MBS) for global consensus. We employ gradient sparsification and periodic averaging to increase the communication efficiency of this hierarchical FL framework. We then show, using the CIFAR-10 dataset, that the proposed hierarchical learning solution can significantly reduce the communication latency without sacrificing model accuracy.
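To make the two communication-saving ideas in the abstract concrete, the following is a minimal sketch, not the paper's implementation. It assumes top-k magnitude sparsification (the abstract does not say which sparsification rule is used) and plain unweighted averaging. The function names `top_k_sparsify`, `intra_cell_step`, and `global_consensus` are hypothetical, and models and gradients are represented as flat lists of floats.

```python
def top_k_sparsify(grad, k):
    """Keep the k largest-magnitude entries of grad and zero the rest.
    Assumption: top-k selection; the paper may use a different rule."""
    if k >= len(grad):
        return list(grad)
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    keep = set(order[:k])
    return [g if i in keep else 0.0 for i, g in enumerate(grad)]


def average(vectors):
    """Element-wise unweighted mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def intra_cell_step(cell_models, user_grads, lr, k):
    """One local step per cell: each SBS averages the sparsified gradients
    of its MUs and applies a gradient-descent update to its cell model."""
    updated = []
    for model, grads in zip(cell_models, user_grads):
        sparse = [top_k_sparsify(g, k) for g in grads]
        g_avg = average(sparse)
        updated.append([w - lr * g for w, g in zip(model, g_avg)])
    return updated


def global_consensus(cell_models):
    """Periodic averaging: the MBS averages the cell models and
    sends the result back to every SBS."""
    consensus = average(cell_models)
    return [list(consensus) for _ in cell_models]
```

In this sketch, `intra_cell_step` would run for several iterations between calls to `global_consensus`, so the SBS-to-MBS exchange happens only periodically. Practical sparsification schemes often also accumulate the discarded gradient entries locally for later rounds; that is omitted here for brevity.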

Finite Horizon Throughput Maximization and Sensing Optimization in Wireless Powered Devices over Fading Channels

Sep 09, 2018

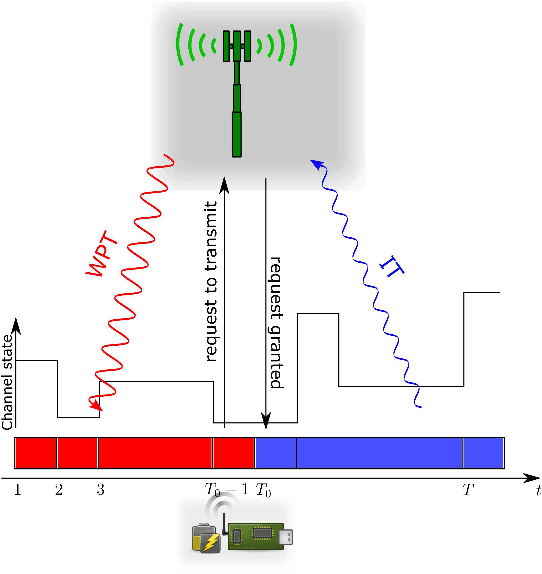

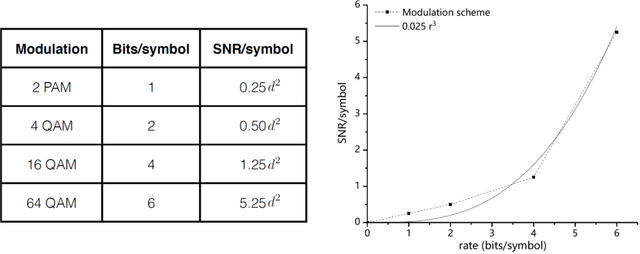

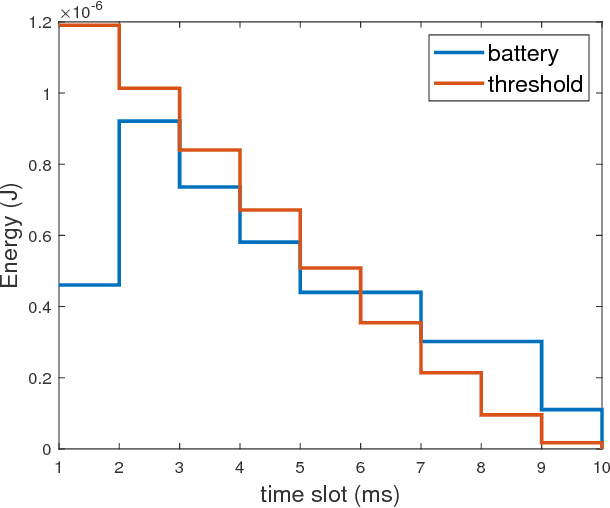

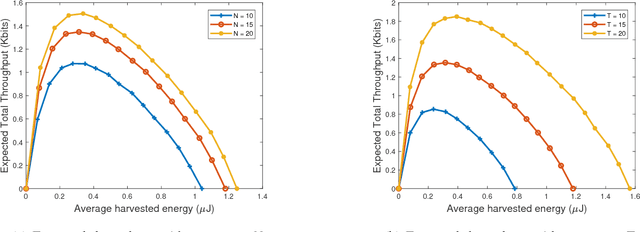

Abstract: Wireless power transfer (WPT) is a promising technology that gives the network a way to replenish the batteries of remote devices using RF transmissions. We study a class of harvest-first-transmit-later WPT policies, where an access point (AP) first employs RF power transfer to recharge a wireless powered device (WPD) for a period whose duration is subject to optimization, and the WPD then uses the harvested energy to transmit its data bits back to the AP over a finite horizon. A significant challenge in this WPT scenario is the time-varying nature of the wireless channel linking the WPD to the AP. As a benchmark, we first investigate the offline case, where the channel realizations are known non-causally before the start of the horizon. For the offline case, by finding the optimal WPT duration and the power allocations in the data transmission period, we derive an upper bound on the throughput of the WPD. We then focus on the online counterpart of the problem, where the channel realizations are known causally. We prove that the optimal WPT duration obeys a time-dependent threshold form that depends on the energy state of the WPD. In the subsequent data transmission stage, the optimal transmit power allocation for the WPD is shown to have a fractional structure: at each time slot, a fraction of the energy, which depends on the current channel and a measure of future channel state expectations, is allocated for data transmission. We show numerically that the online policy performs almost identically to the upper bound. We then consider a data sensing application, where the WPD adjusts the sensing resolution to balance the quality of the sensed data against the probability of successfully delivering it. We use Bayesian inference as a reinforcement learning method to provide a means for the WPD to learn to balance the sensing resolution.
