Ozan Ozdenizci

Stochastic Mutual Information Gradient Estimation for Dimensionality Reduction Networks

May 01, 2021

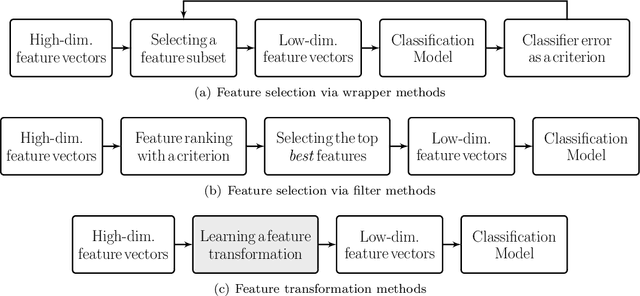

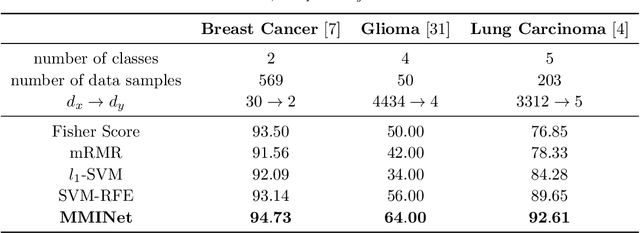

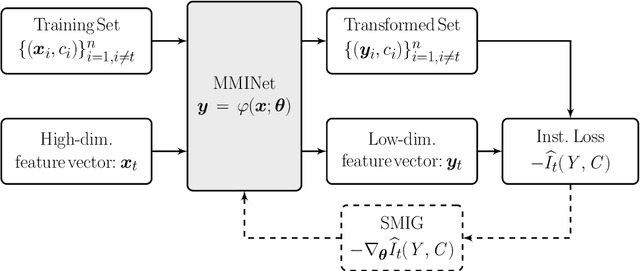

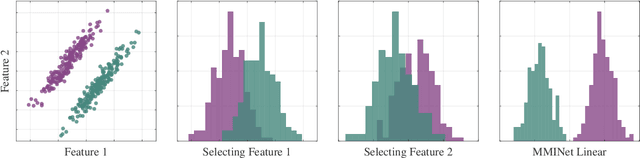

Abstract: Feature ranking and selection is a widely used approach in various applications of supervised dimensionality reduction in discriminative machine learning. Nevertheless, there is significant evidence that feature ranking and selection algorithms based on any criterion can lead to sub-optimal solutions for class separability. In that regard, we introduce emerging information theoretic feature transformation protocols as an end-to-end neural network training approach. We present a dimensionality reduction network (MMINet) training procedure based on the stochastic estimate of the mutual information gradient. The network projects high-dimensional features onto an output feature space where lower dimensional representations of features carry maximum mutual information with their associated class labels. Furthermore, we formulate the training objective to be estimated non-parametrically with no distributional assumptions. We experimentally evaluate our method with applications to high-dimensional biological data sets, and relate it to conventional feature selection algorithms, which form a special case of our approach.
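To make the quantity behind this objective concrete, here is a minimal sketch of a non-parametric estimate of the mutual information between low-dimensional features and class labels. It is not the MMINet estimator: it assumes a Gaussian kernel density estimate with a fixed, hand-picked bandwidth, uses the decomposition I(Z; C) = H(Z) - H(Z | C), and estimates only the mutual information itself rather than its stochastic gradient. The function names `kde_entropy` and `mutual_information` and the toy data are hypothetical.

```python
import numpy as np


def kde_entropy(z, bandwidth=0.5):
    """Resubstitution entropy estimate (nats) from a Gaussian kernel density estimate."""
    n, d = z.shape
    # Pairwise squared Euclidean distances between all samples.
    sq_dists = np.sum((z[:, None, :] - z[None, :, :]) ** 2, axis=-1)
    # Normalizing constant of an isotropic d-dimensional Gaussian kernel.
    norm = (2.0 * np.pi * bandwidth ** 2) ** (d / 2.0)
    # Density estimate evaluated at every sample (self-term included).
    density = np.mean(np.exp(-sq_dists / (2.0 * bandwidth ** 2)), axis=1) / norm
    return -np.mean(np.log(density))


def mutual_information(z, labels, bandwidth=0.5):
    """Estimate I(Z; C) = H(Z) - sum_c p(c) H(Z | C = c)."""
    h_z = kde_entropy(z, bandwidth)
    h_z_given_c = 0.0
    for c in np.unique(labels):
        z_c = z[labels == c]
        # Weight each class-conditional entropy by the empirical class prior.
        h_z_given_c += (len(z_c) / len(z)) * kde_entropy(z_c, bandwidth)
    return h_z - h_z_given_c


# Toy data (an assumption for illustration): two balanced classes in 2-D.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 200)
shift = np.where(labels[:, None] == 0, -3.0, 3.0) * np.array([1.0, 0.0])
z_separated = rng.normal(size=(400, 2)) + shift   # class means far apart
z_overlapping = rng.normal(size=(400, 2))          # features ignore the label

mi_separated = mutual_information(z_separated, labels)
mi_overlapping = mutual_information(z_overlapping, labels)
print(mi_separated, mi_overlapping)
```

For two balanced classes the true mutual information is bounded by log 2 (about 0.69 nats), so the separated features should score much higher than the overlapping ones. A dimensionality reduction network would maximize such an estimate with respect to the parameters that produce `z`.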

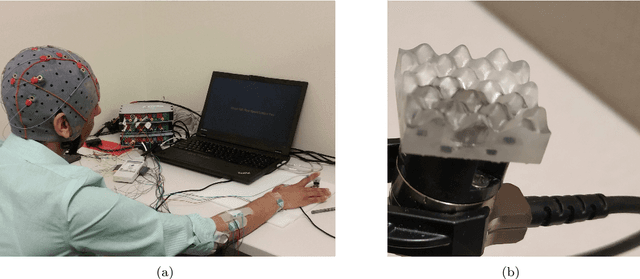

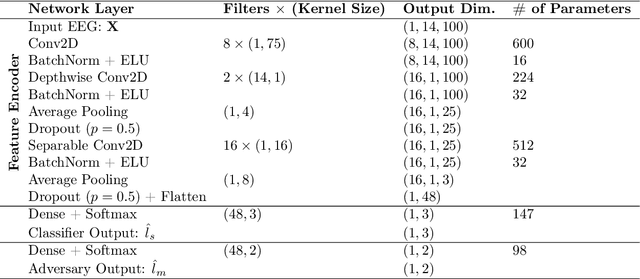

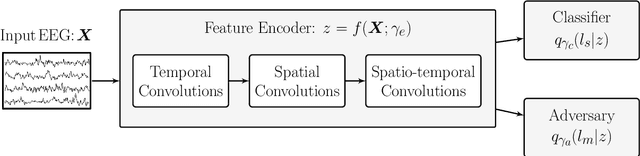

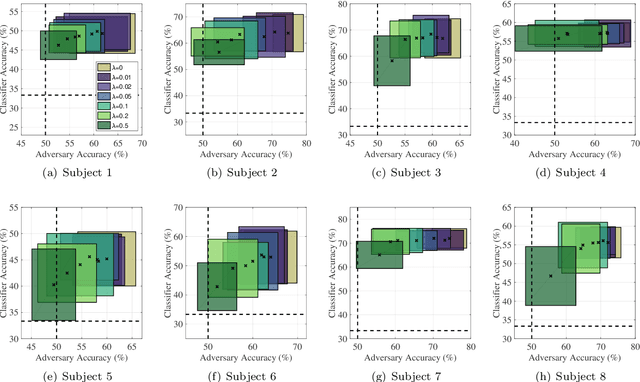

EEG-based Texture Roughness Classification in Active Tactile Exploration with Invariant Representation Learning Networks

Mar 05, 2021

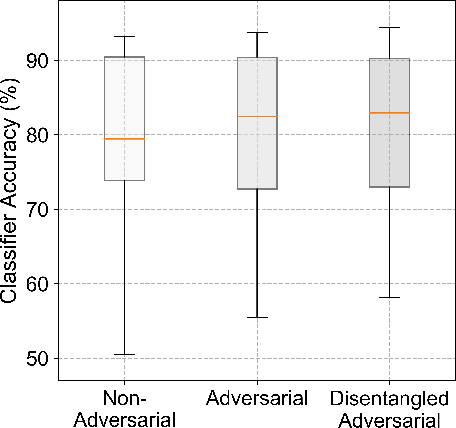

Abstract: During daily activities, humans use their hands to grasp surrounding objects and perceive sensory information, which is also employed for perceptual and motor goals. Multiple cortical brain regions are known to be responsible for sensory recognition, perception and motor execution during sensorimotor processing. While various research studies particularly focus on the domain of human sensorimotor control, the relation between motor execution and sensory processing is not yet fully understood. The main goal of our work is to discriminate textured surfaces varying in their roughness levels during active tactile exploration using simultaneously recorded electroencephalogram (EEG) data, while minimizing the variance of distinct motor exploration movement patterns. We perform an experimental study with eight healthy participants who were instructed to use the tip of the index finger of their dominant hand while rubbing or tapping three different textured surfaces with varying levels of roughness. We use an adversarial invariant representation learning neural network architecture that performs EEG-based classification of different textured surfaces, while simultaneously minimizing the discriminability of motor movement conditions (i.e., rub or tap). Results show that the proposed approach can discriminate between three different textured surfaces with accuracies up to 70%, while suppressing movement-related variability from learned representations.

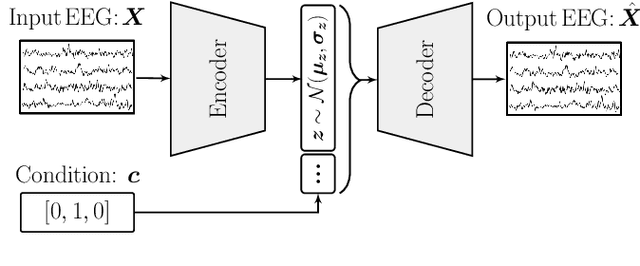

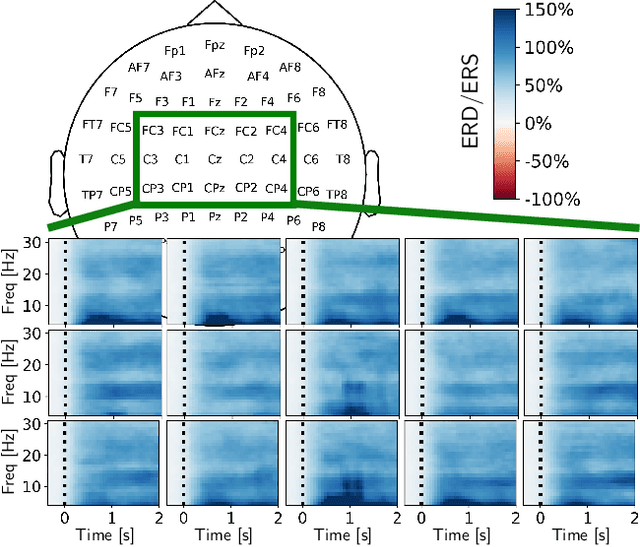

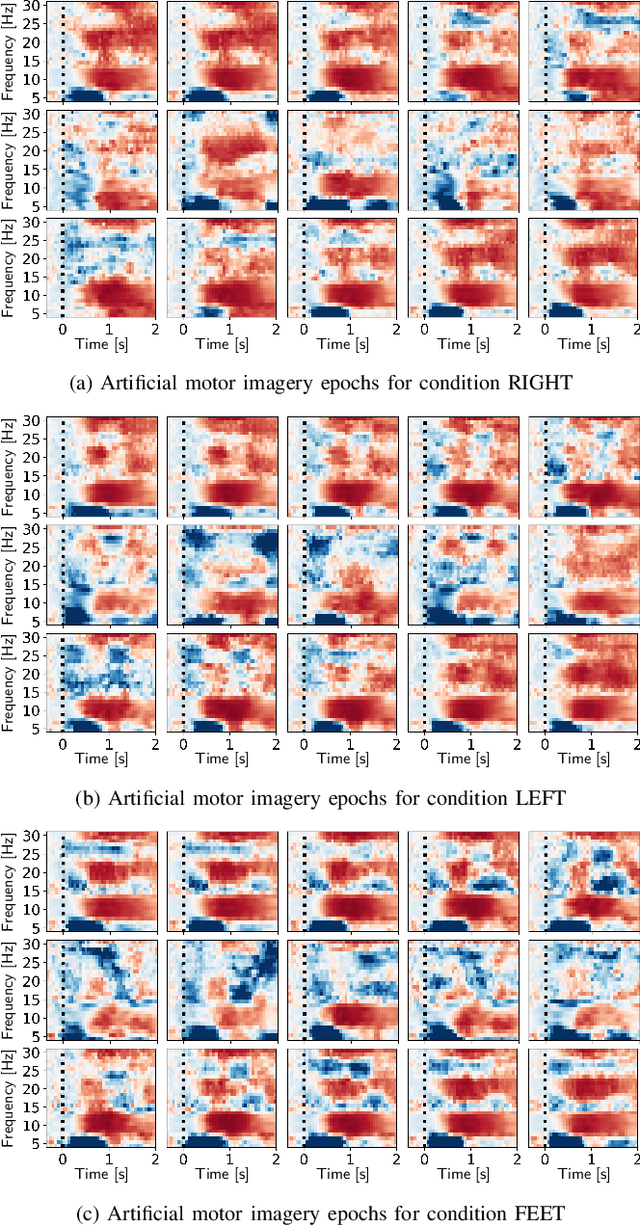

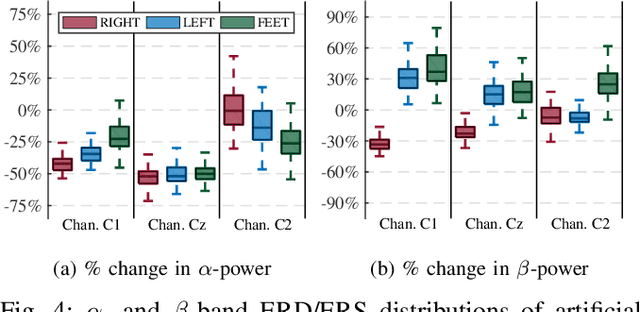

On the use of generative deep neural networks to synthesize artificial multichannel EEG signals

Feb 16, 2021

Abstract: The recent promise of generative deep learning has brought interest to its potential uses in neural engineering. In this paper, we first review recently emerging studies on generating artificial electroencephalography (EEG) signals with deep neural networks. Subsequently, we present our feasibility experiments on generating condition-specific multichannel EEG signals using conditional variational autoencoders. By manipulating real resting-state EEG epochs, we present an approach to synthetically generate time-series multichannel signals that show spectro-temporal EEG patterns which are expected to be observed during distinct motor imagery conditions.

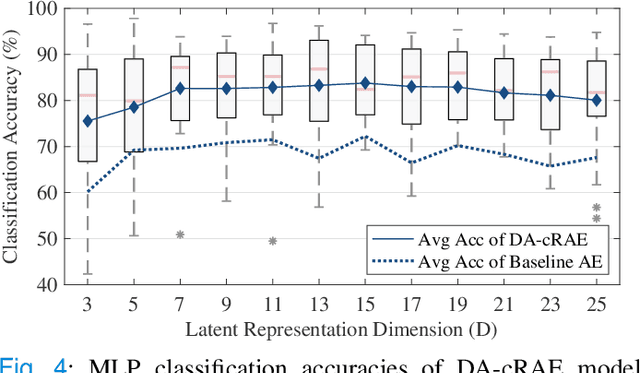

Universal Physiological Representation Learning with Soft-Disentangled Rateless Autoencoders

Sep 28, 2020

Abstract: Human computer interaction (HCI) involves a multidisciplinary fusion of technologies, through which the control of external devices could be achieved by monitoring the physiological status of users. However, physiological biosignals often vary across users and recording sessions due to unstable physical/mental conditions and task-irrelevant activities. To deal with this challenge, we propose a method of adversarial feature encoding with the concept of a Rateless Autoencoder (RAE), in order to exploit disentangled, nuisance-robust, and universal representations. We achieve a good trade-off between user-specific and task-relevant features through stochastic disentanglement of the latent representations using additional adversarial networks. The proposed model is applicable to a wider range of unknown users and tasks as well as different classifiers. Results on cross-subject transfer evaluations show the advantages of the proposed framework, with up to an 11.6% improvement in the average subject-transfer classification accuracy.

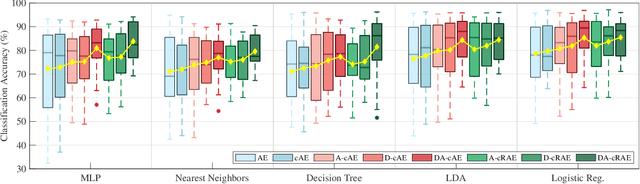

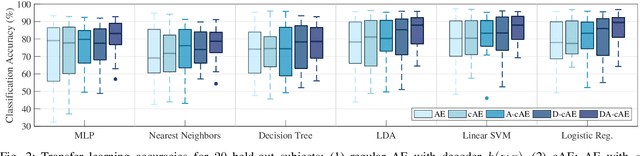

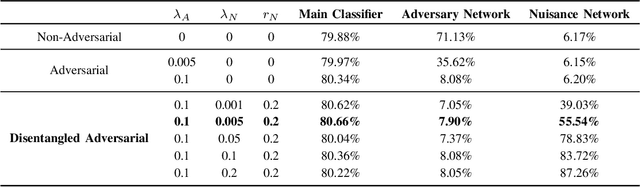

Disentangled Adversarial Autoencoder for Subject-Invariant Physiological Feature Extraction

Aug 26, 2020

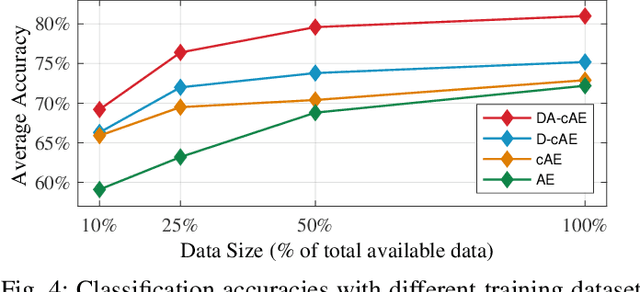

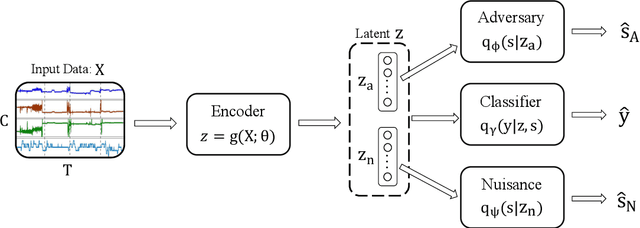

Abstract: Recent developments in biosignal processing have enabled users to exploit their physiological status for manipulating devices in a reliable and safe manner. One major challenge of physiological sensing lies in the variability of biosignals across different users and tasks. To address this issue, we propose an adversarial feature extractor for transfer learning to exploit disentangled universal representations. We consider the trade-off between task-relevant features and user-discriminative information by introducing additional adversary and nuisance networks in order to manipulate the latent representations such that the learned feature extractor is applicable to unknown users and various tasks. Results on cross-subject transfer evaluations exhibit the benefits of the proposed framework, with up to an 8.8% improvement in average classification accuracy, and demonstrate adaptability to a broader range of subjects.

* Accepted for publication by IEEE Signal Processing Letters

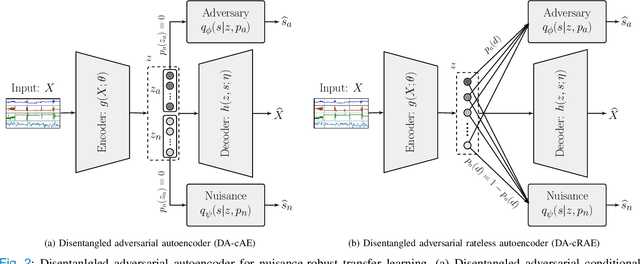

Disentangled Adversarial Transfer Learning for Physiological Biosignals

Apr 15, 2020

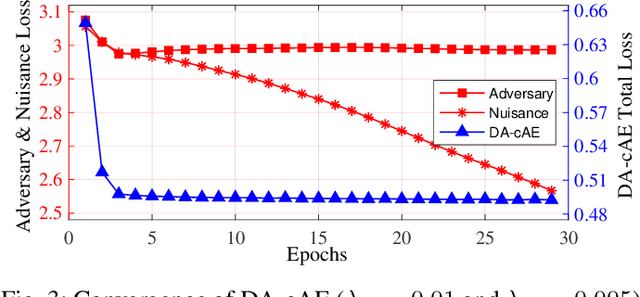

Abstract: Recent developments in wearable sensors demonstrate promising results for monitoring physiological status in effective and comfortable ways. One major challenge of physiological status assessment is the problem of transfer learning caused by the domain inconsistency of biosignals across users or different recording sessions from the same user. We propose an adversarial inference approach for transfer learning to extract disentangled nuisance-robust representations from physiological biosignal data in stress status level assessment. We exploit the trade-off between task-related features and person-discriminative information by using both an adversary network and a nuisance network to jointly manipulate and disentangle the latent representations learned by the encoder, which are then input to a discriminative classifier. Results on cross-subject transfer evaluations demonstrate the benefits of the proposed adversarial framework, and thus show its capabilities to adapt to a broader range of subjects. Finally, we highlight that our proposed adversarial transfer learning approach is also applicable to other deep feature learning frameworks.

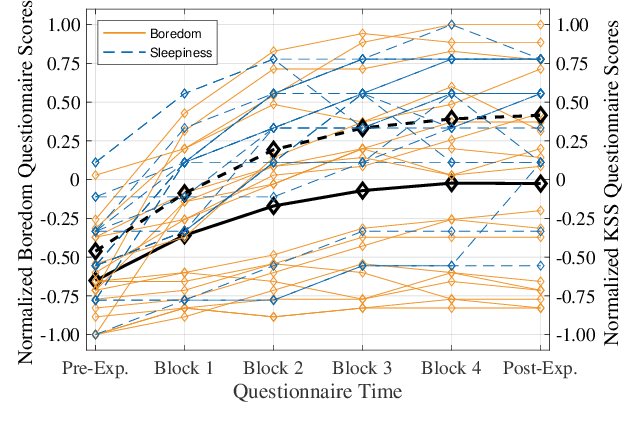

Adversarial Feature Learning in Brain Interfacing: An Experimental Study on Eliminating Drowsiness Effects

Jul 22, 2019

Abstract: Across- and within-recording variabilities in electroencephalographic (EEG) activity are a major limitation in EEG-based brain-computer interfaces (BCIs). Specifically, gradual changes in fatigue and vigilance levels during long EEG recording durations and BCI system usage bring along significant fluctuations in BCI performance even when these systems are calibrated daily. We address this in an experimental offline study using EEG-based BCI speller usage data acquired over a one-hour duration. As the main part of our methodological approach, we propose the concept of adversarial invariant feature learning for BCIs as a regularization approach on recently expanding EEG deep learning architectures, to learn nuisance-invariant discriminative features. We empirically demonstrate the feasibility of adversarial feature learning for eliminating drowsiness effects from event-related EEG activity features, by using temporal recording block ordering as the source of drowsiness variability.
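One common way to write adversarial invariant feature learning as a regularizer is sketched below. This is a generic formulation under stated assumptions, not necessarily the exact objective used in the paper: $f_{\theta_e}$ is an encoder, $q_{\theta_c}$ a classifier for the task label $y$, $q_{\theta_a}$ an adversary predicting the nuisance variable $s$ (here, for example, the temporal recording block), and $\lambda \ge 0$ a hypothetical weight that controls the strength of the regularization.

$$
\min_{\theta_e,\,\theta_c}\;\max_{\theta_a}\;\;
\mathbb{E}\big[-\log q_{\theta_c}(y \mid h)\big]
\;-\;\lambda\,\mathbb{E}\big[-\log q_{\theta_a}(s \mid h)\big],
\qquad h = f_{\theta_e}(x)
$$

The adversary maximizes the objective by minimizing its own cross-entropy on $s$, while the encoder is pushed to make $s$ hard to predict from $h$ without giving up accuracy on $y$. Setting $\lambda = 0$ recovers ordinary discriminative training.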

Information Theoretic Feature Transformation Learning for Brain Interfaces

Apr 05, 2019

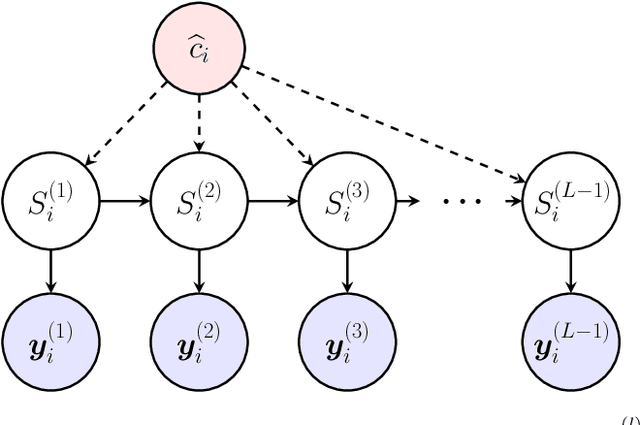

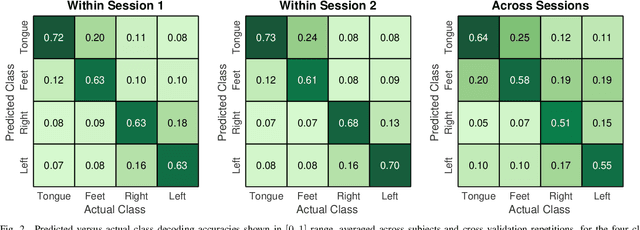

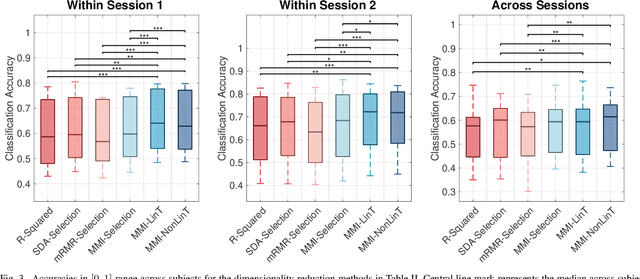

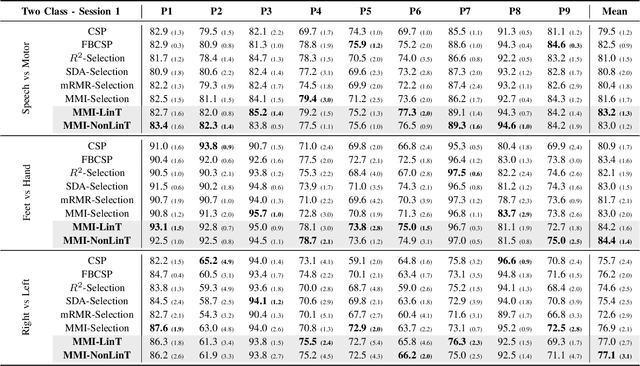

Abstract: Objective: A variety of pattern analysis techniques for model training in brain interfaces exploit neural feature dimensionality reduction based on feature ranking and selection heuristics. In light of broad evidence demonstrating the potential sub-optimality of ranking-based feature selection by any criterion, we propose to extend this focus with an information theoretic learning driven feature transformation concept. Methods: We present a maximum mutual information linear transformation (MMI-LinT), and a nonlinear transformation (MMI-NonLinT) framework derived from a general definition of the feature transformation learning problem. Empirical assessments are performed based on electroencephalographic (EEG) data recorded during a four-class motor imagery brain-computer interface (BCI) task. Exploiting state-of-the-art methods for initial feature vector construction, we compare the proposed approaches with conventional feature-selection-based dimensionality reduction techniques which are widely used in brain interfaces. Furthermore, for the multi-class problem, we present and exploit a hierarchical graphical model based BCI decoding system. Results: Both binary and multi-class decoding analyses demonstrate significantly better performance with the proposed methods. Conclusion: Information theoretic feature transformations are capable of tackling potential confounders of conventional approaches in various settings. Significance: We argue that this concept provides significant insights to extend the focus on feature selection heuristics to a broader definition of feature transformation learning in brain interfaces.

* Accepted for publication by IEEE Transactions on Biomedical Engineering

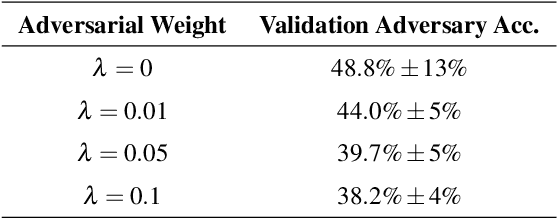

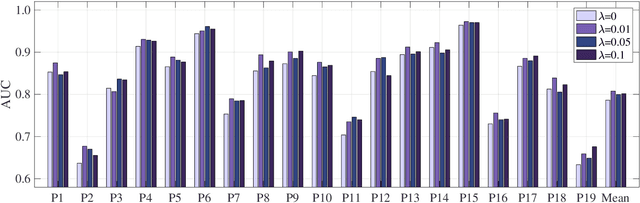

Adversarial Deep Learning in EEG Biometrics

Mar 27, 2019

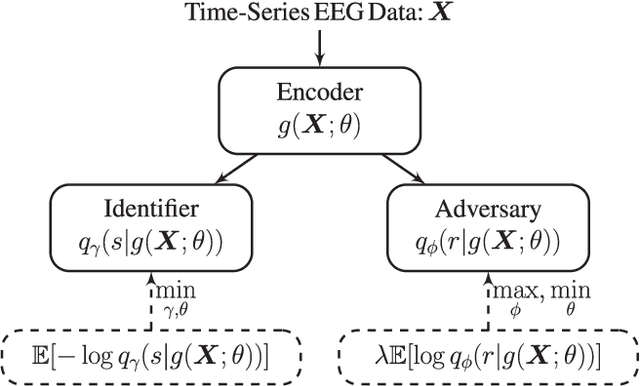

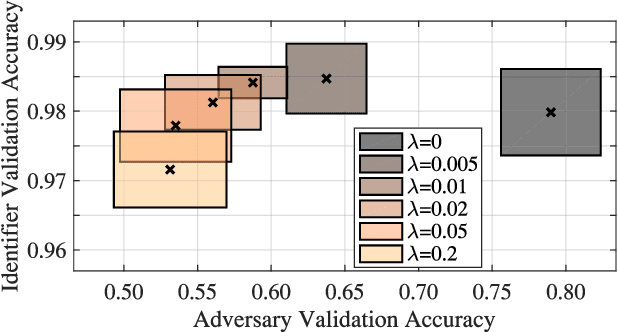

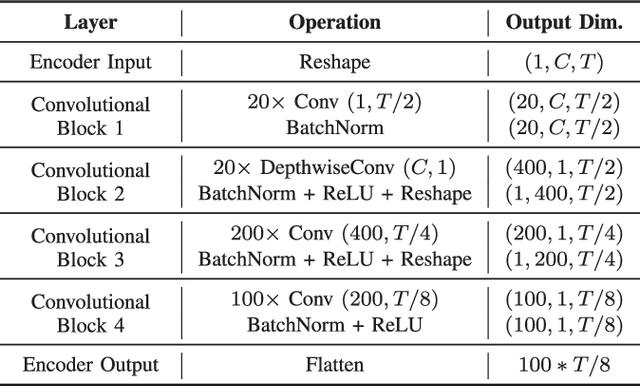

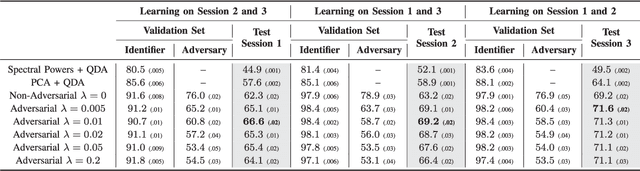

Abstract: Deep learning methods for person identification based on electroencephalographic (EEG) brain activity encounter the problem of exploiting the temporally correlated structures or recording-session-specific variability within EEG. Furthermore, recent methods have mostly been trained and evaluated based on single session EEG data. We address this problem from an invariant representation learning perspective. We propose an adversarial inference approach to extend such deep learning models to learn session-invariant person-discriminative representations that can provide robustness in terms of longitudinal usability. Using adversarial learning within a deep convolutional network, we empirically assess and show improvements with our approach based on longitudinally collected EEG data for person identification from half-second EEG epochs.

* Accepted for publication by IEEE Signal Processing Letters

Transfer Learning in Brain-Computer Interfaces with Adversarial Variational Autoencoders

Dec 17, 2018

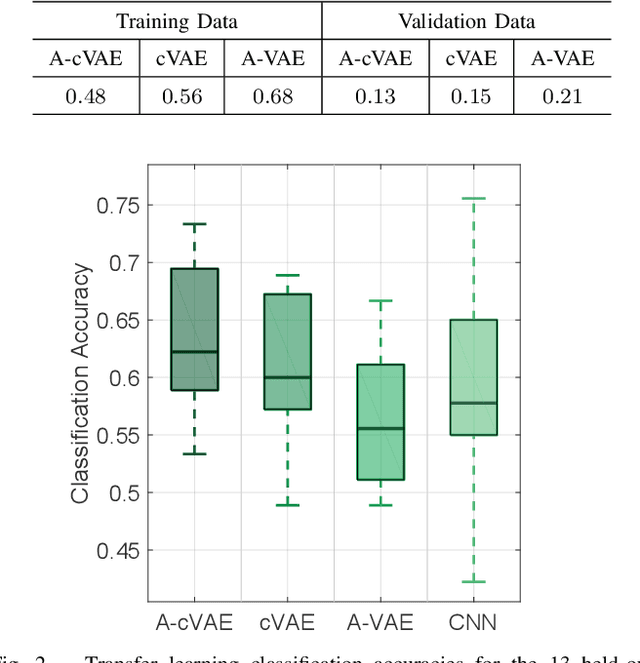

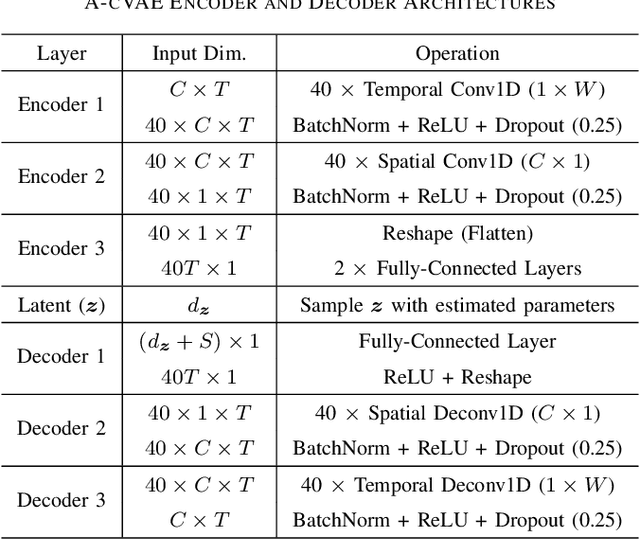

Abstract: We introduce adversarial neural networks for representation learning as a novel approach to transfer learning in brain-computer interfaces (BCIs). The proposed approach aims to learn subject-invariant representations by simultaneously training a conditional variational autoencoder (cVAE) and an adversarial network. We use shallow convolutional architectures to realize the cVAE, and the learned encoder is transferred to extract subject-invariant features from unseen BCI users' data for decoding. We demonstrate a proof-of-concept of our approach based on analyses of electroencephalographic (EEG) data recorded during a motor imagery BCI experiment.
