Ovanes Petrosian

Driver Assistance System Based on Multimodal Data Hazard Detection

Feb 05, 2025

Abstract: Autonomous driving technology has advanced significantly, yet detecting driving anomalies remains a major challenge due to the long-tailed distribution of driving events. Existing methods primarily rely on single-modal road condition video data, which limits their ability to capture rare and unpredictable driving incidents. This paper proposes a multimodal driver assistance detection system that integrates road condition video, driver facial video, and audio data to enhance incident recognition accuracy. Our model employs an attention-based intermediate fusion strategy, enabling end-to-end learning without separate feature extraction. To support this approach, we develop a new three-modality dataset using a driving simulator. Experimental results demonstrate that our method effectively captures cross-modal correlations, reducing misjudgments and improving driving safety.
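To make the idea of attention-based intermediate fusion concrete, here is a minimal sketch, not the authors' architecture. It assumes each modality has already been encoded into a fixed-length feature vector and that a query vector scores the modalities; in a trained model the query and encoders would be learned jointly. The names `attention_fuse`, `road`, `face`, and `audio` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(features, query):
    """Fuse per-modality feature vectors with scaled dot-product attention.

    features: dict mapping a modality name to a list of floats (equal lengths).
    query:    list of floats of the same length (assumed learned elsewhere).
    Returns the fused vector and the attention weight given to each modality.
    """
    names = list(features)
    dim = len(query)
    scale = math.sqrt(dim)
    # One relevance score per modality.
    scores = [
        sum(q * x for q, x in zip(query, features[name])) / scale
        for name in names
    ]
    weights = softmax(scores)
    # Weighted sum of the modality vectors, computed per dimension.
    fused = [
        sum(w * features[name][i] for w, name in zip(weights, names))
        for i in range(dim)
    ]
    return fused, dict(zip(names, weights))


# Hypothetical 4-dimensional embeddings for the three modalities.
features = {
    "road": [0.9, 0.1, 0.0, 0.3],
    "face": [0.2, 0.8, 0.1, 0.0],
    "audio": [0.0, 0.1, 0.7, 0.2],
}
query = [1.0, 0.5, 0.0, 0.0]
fused, weights = attention_fuse(features, query)
```

Because the fusion happens on intermediate features rather than on raw inputs or final predictions, gradients can flow back through the weights into every modality's encoder, which is what allows end-to-end training.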

Stationary Point Losses for Robust Model

Feb 19, 2023

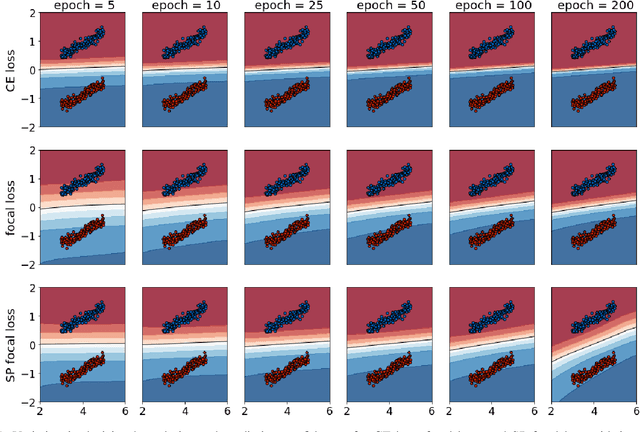

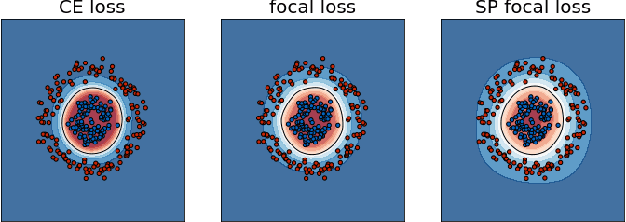

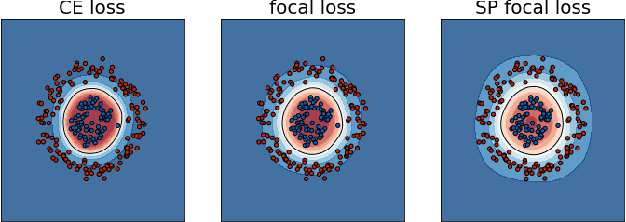

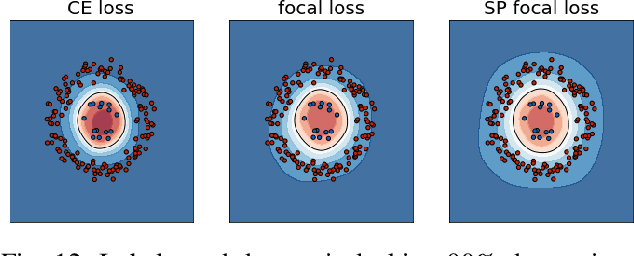

Abstract: The inability to guarantee robustness is one of the major obstacles to applying deep learning models in security-demanding domains. We identify that the most commonly used loss, cross-entropy (CE), does not guarantee a robust boundary for neural networks. CE loss sharpens the neural network at the decision boundary to achieve a lower loss, rather than pushing the boundary to a more robust position. A robust boundary should lie midway between samples from different classes, thus maximizing the margins from the boundary to the samples. We believe this shortcoming arises because CE loss has no stationary point. In this paper, we propose a family of new losses, called stationary point (SP) losses, each of which has at least one stationary point on the correct classification side. We prove that a robust boundary can be guaranteed by SP loss without losing much accuracy. With SP loss, larger perturbations are required to generate adversarial examples. We demonstrate that applying SP loss improves robustness under a variety of adversarial attacks. Moreover, the robust boundary learned by SP loss also performs well on imbalanced datasets.
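The claim that CE loss has no stationary point can be checked directly in the simplest setting. As an illustrative assumption (the paper's own formulation may differ), take binary classification and write $z$ for the signed logit margin, positive when the sample is classified correctly:

$$
\ell_{\mathrm{CE}}(z) = \log\left(1 + e^{-z}\right),
\qquad
\frac{d\ell_{\mathrm{CE}}}{dz} = -\frac{1}{1 + e^{z}} < 0 \quad \text{for all finite } z .
$$

The derivative never vanishes, so the loss can always be lowered by increasing the margin $z$, for example by scaling up the logits, without moving the decision boundary. A loss with a stationary point on the correct classification side would instead satisfy $\ell'(z^{*}) = 0$ for some $z^{*} > 0$, removing the incentive to keep increasing the margin beyond $z^{*}$.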
