Ou Ma

Comparison Between Genetic Fuzzy Methodology and Q-learning for Collaborative Control Design

Aug 28, 2020

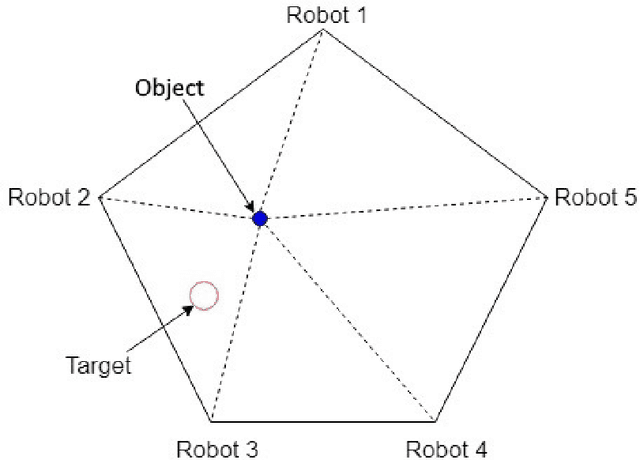

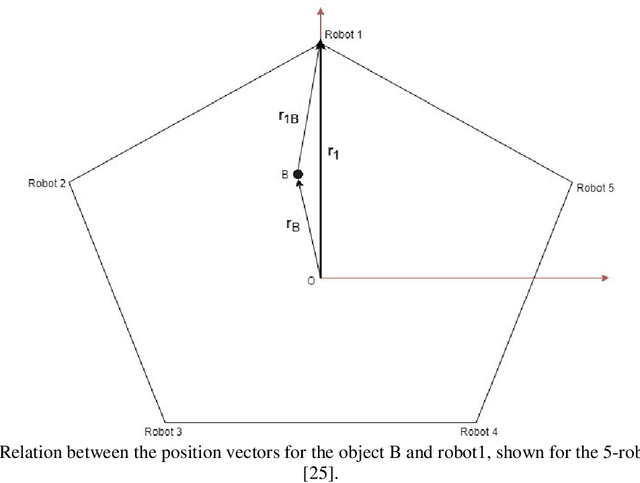

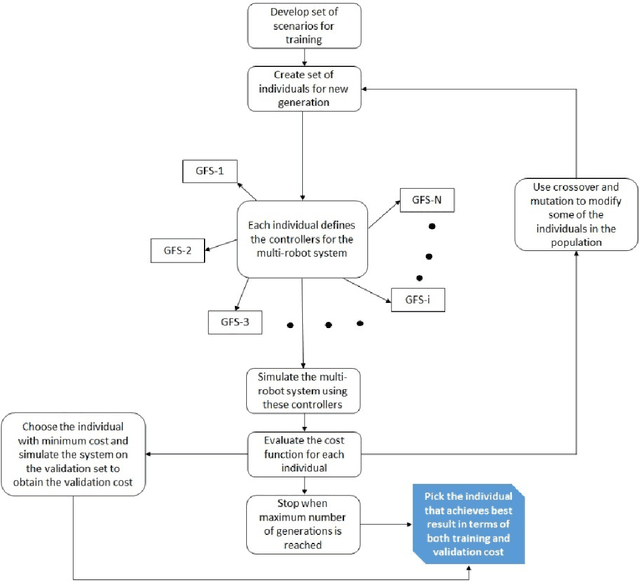

Abstract: A comparison between two machine learning approaches, viz., Genetic Fuzzy Methodology and Q-learning, is presented in this paper. The approaches are used to model controllers for a set of collaborative robots that need to work together to bring an object to a target position. The robots are fixed and are attached to the object through elastic cables. A major constraint considered in this problem is that the robots cannot communicate with each other. This means that at any instant, each robot has no motion or control information about the other robots and can pull or release its cable based only on the motion states of the object. This decentralized control problem provides a good example for testing the capabilities and restrictions of these two machine learning approaches. The system is first trained using a set of training scenarios and then applied to an extensive test set to check the generalization achieved by each method.

* 15 pages, 9 figures
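The Q-learning side of this comparison can be illustrated with a minimal tabular sketch. It covers a single robot that observes only a discretized object state, which mirrors the no-communication constraint. The action set (`pull`, `hold`, `release`), the state encoding, the reward, and the parameter values are hypothetical assumptions for illustration. They are not the paper's actual setup.

```python
import random
from collections import defaultdict

# Hypothetical action set for one cable-driven robot (assumption, not from the paper).
ACTIONS = ("pull", "hold", "release")


class CableRobotAgent:
    """Tabular Q-learning agent for ONE robot (illustrative sketch).

    The agent sees only the object's (discretized) motion state, never the
    other robots' states or actions, mirroring the no-communication constraint.
    """

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # (state, action) -> Q-value, defaults to 0.0
        self.alpha = alpha           # learning rate
        self.gamma = gamma           # discount factor
        self.epsilon = epsilon       # exploration rate for epsilon-greedy selection
        self.rng = random.Random(seed)

    def act(self, state):
        # Epsilon-greedy selection over this robot's own Q-table only.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        # Q-learning update toward the target r + gamma * max_a' Q(s', a').
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        td_error = reward + self.gamma * best_next - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error
        return self.q[(state, action)]
```

Each robot owns its own Q-table and observes only the object, so several such agents could be trained side by side with no message passing. The Genetic Fuzzy Methodology would instead tune fuzzy rule bases with a genetic algorithm, which this sketch does not cover.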

ConvGRU in Fine-grained Pitching Action Recognition for Action Outcome Prediction

Aug 18, 2020

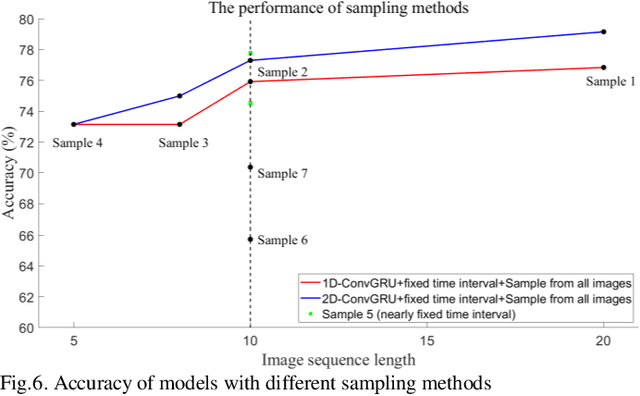

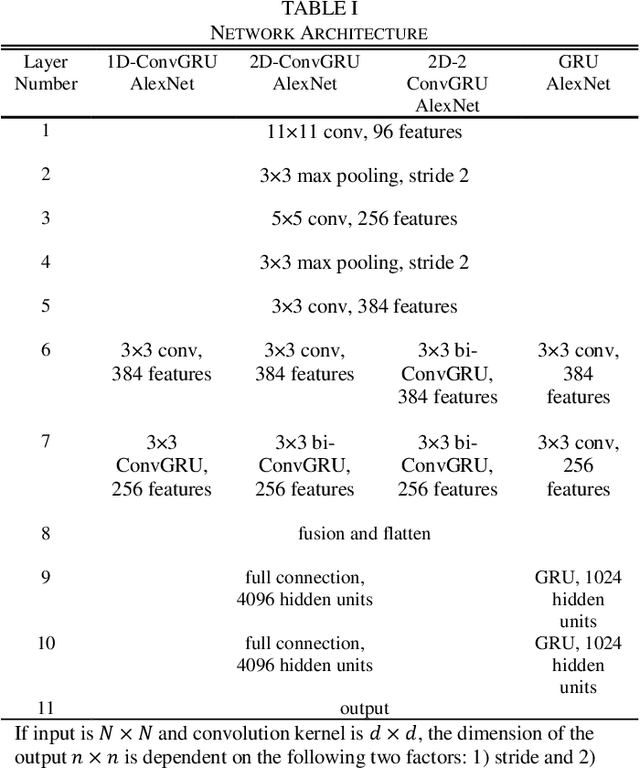

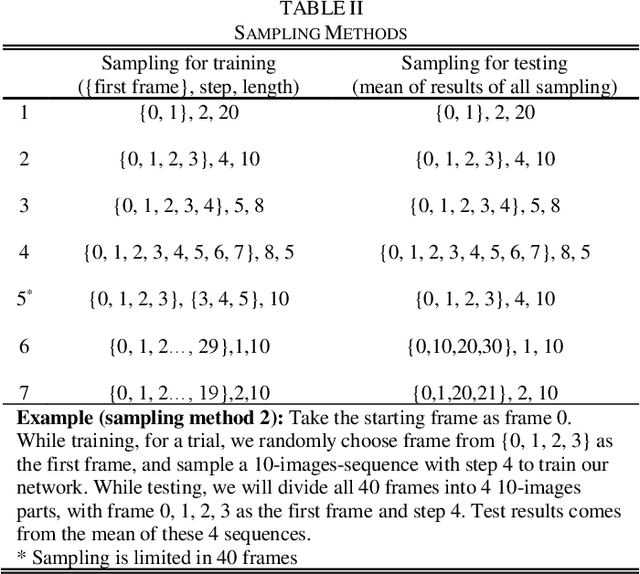

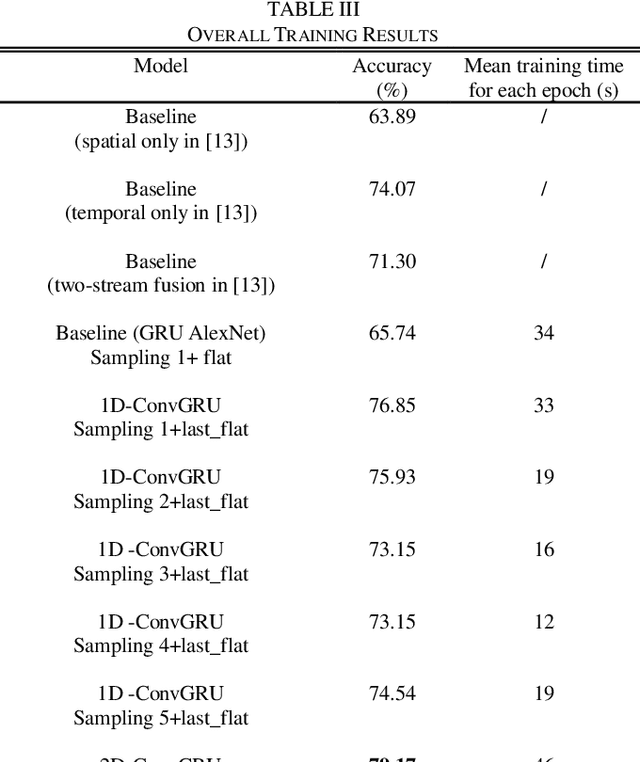

Abstract: Predicting the outcome of an action is a new challenge for a robot working collaboratively with humans. With the impressive progress in video action recognition in recent years, fine-grained action recognition from video data has become a new concern. Fine-grained action recognition detects subtle differences between actions at a finer granularity and is significant in many fields, such as human-robot interaction, intelligent traffic management, sports training, and health care. Because different outcomes are closely connected to subtle differences in actions, fine-grained action recognition is a practical method for action outcome prediction. In this paper, we explore the performance of the convolutional gated recurrent unit (ConvGRU) method on a fine-grained action recognition task: predicting the outcomes of ball pitching. Based on sequences of RGB images of human actions, the proposed approach achieved 79.17% accuracy, which exceeds the current state-of-the-art result. We also compared different network implementations and showed the influence of different image sampling methods, fusion methods, pre-training, etc. Finally, we discussed the advantages and limitations of ConvGRU in such action outcome prediction and fine-grained action recognition tasks.
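A ConvGRU replaces the matrix multiplications of a standard GRU with convolutions, so the hidden state keeps its spatial layout across frames. One common formulation is shown below, with bias terms omitted; the exact variant used in this work may differ. Here $*$ denotes convolution, $\odot$ the element-wise product, $\sigma$ the logistic sigmoid, $x_t$ the input feature map at frame $t$, and $h_t$ the hidden state:

$$
\begin{aligned}
z_t &= \sigma\left(W_z * x_t + U_z * h_{t-1}\right) \\
r_t &= \sigma\left(W_r * x_t + U_r * h_{t-1}\right) \\
\tilde{h}_t &= \tanh\left(W_h * x_t + U_h * (r_t \odot h_{t-1})\right) \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Because every gate is computed by convolution, each spatial location of $h_t$ is updated from a local neighborhood of the input and of the previous hidden state.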

Multi-robot Cooperative Object Transportation using Decentralized Deep Reinforcement Learning

Jul 17, 2020

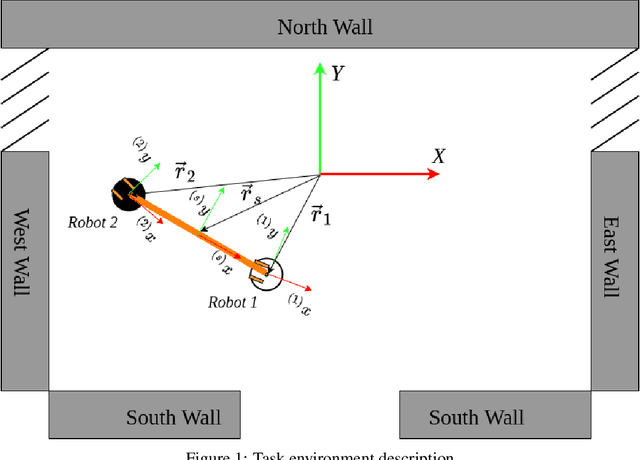

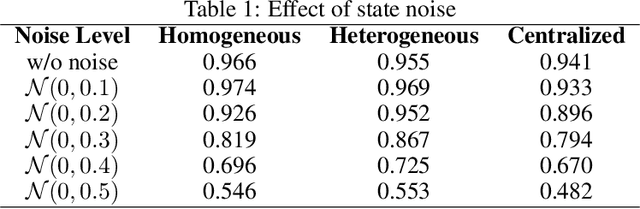

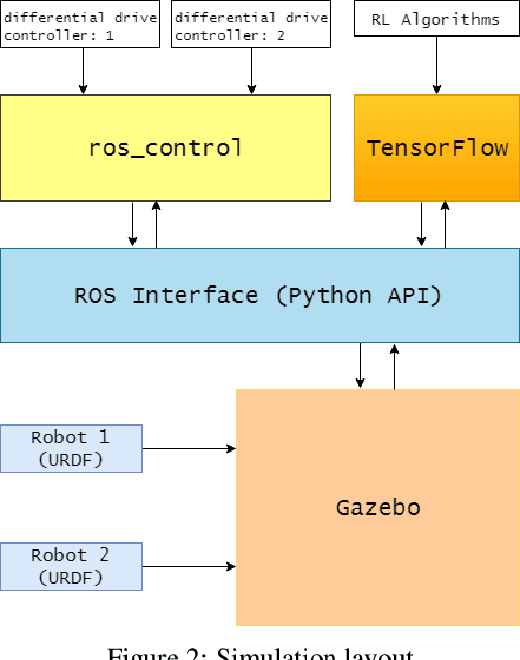

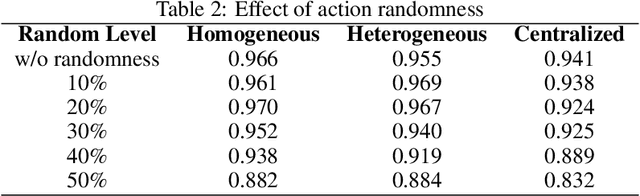

Abstract: Object transportation can be a challenging problem for a single robot when the object is oversized and/or overweight. A multi-robot system can take advantage of increased driving power and a more flexible configuration to solve such a problem. However, an increased number of individuals also changes the dynamics of the system, which makes control of a multi-robot system more complicated. Even worse, if the whole system relies on a centralized decision-making unit, the data flow can easily be overloaded as the system scales up. In this research, we propose a decentralized control scheme for a multi-robot system in which each individual is equipped with a deep Q-network (DQN) controller to perform an oversized object transportation task. DQN is a deep reinforcement learning algorithm and thus does not require knowledge of the system dynamics; instead, it enables the robots to learn appropriate control strategies through trial-and-error interactions with the task environment. Since analogous controllers are distributed across the individuals, the computational bottleneck is avoided systematically. We demonstrate such a system in a scenario in which a two-robot team carries an oversized rod through a doorway. The presented multi-robot system learns abstract features of the task, and cooperative behaviors are observed. The decentralized DQN-style controller shows strong robustness against uncertainties. In addition, we propose a universal metric to assess the cooperation quantitatively.
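The core quantity in a DQN update can be illustrated without a neural network. The sketch below computes the Bellman targets that each robot's Q-network would be regressed toward, for a minibatch of transitions. The `Transition` type, the hard-coded Q-values, and the function names are hypothetical. In a full DQN, `next_q_target` would come from a separate, periodically synchronized target network and the transitions from a replay buffer. How this paper structures its networks and rewards is not described here.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Transition:
    """One hypothetical experience tuple seen by a single robot."""
    reward: float
    next_q_target: Sequence[float]  # target-network Q(s', a') for every action a'
    done: bool                      # True if s' is terminal


def dqn_targets(batch: List[Transition], gamma: float = 0.99) -> List[float]:
    """Compute y = r + gamma * max_a' Q_target(s', a'), or y = r at terminal states."""
    targets = []
    for t in batch:
        bootstrap = 0.0 if t.done else gamma * max(t.next_q_target)
        targets.append(t.reward + bootstrap)
    return targets


def mse_loss(q_pred: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between online-network Q(s, a) and the DQN targets."""
    return sum((p - y) ** 2 for p, y in zip(q_pred, targets)) / len(targets)
```

In a decentralized scheme, each robot would run this computation on its own experience and its own networks, so no central unit has to aggregate every robot's data.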