Oskar Elek

Monte Carlo Physarum Machine: Characteristics of Pattern Formation in Continuous Stochastic Transport Networks

Apr 04, 2022

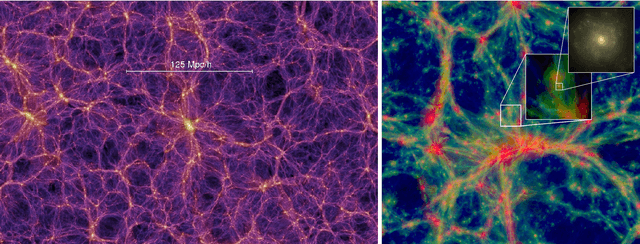

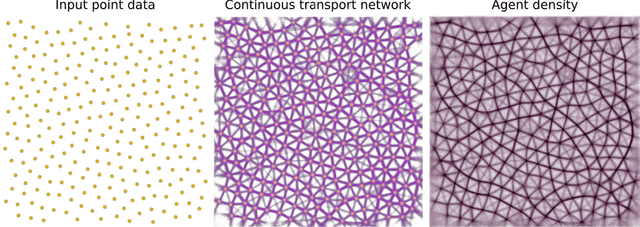

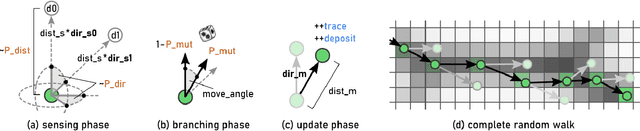

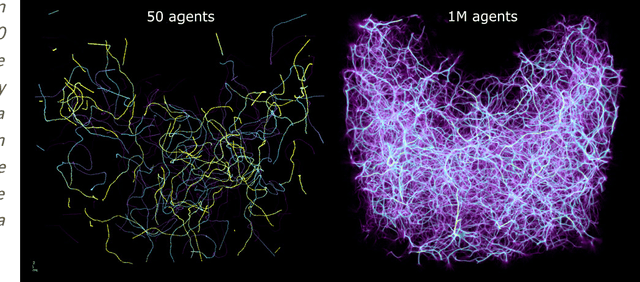

Abstract: We present Monte Carlo Physarum Machine (MCPM): a computational model suitable for reconstructing continuous transport networks from sparse 2D and 3D data. MCPM is a probabilistic generalization of Jones's 2010 agent-based model for simulating the growth of Physarum polycephalum slime mold. We compare MCPM to Jones's work on theoretical grounds, and describe a task-specific variant designed for reconstructing the large-scale distribution of gas and dark matter in the Universe, known as the Cosmic web. To analyze the new model, we first explore MCPM's self-patterning behavior, showing a wide range of continuous network-like morphologies -- called "polyphorms" -- that the model produces from geometrically intuitive parameters. Applying MCPM to both simulated and observational cosmological datasets, we then evaluate its ability to produce consistent 3D density maps of the Cosmic web. Finally, we examine other possible tasks where MCPM could be useful, along with several examples of fitting to domain-specific data as proofs of concept.

Bio-inspired Structure Identification in Language Embeddings

Sep 15, 2020

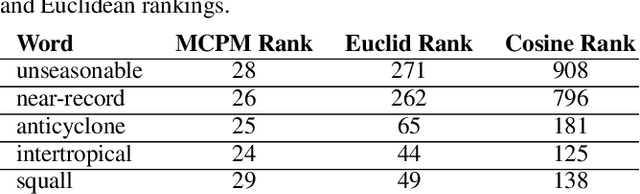

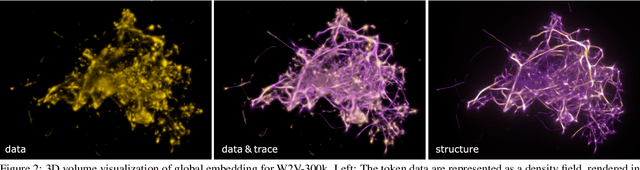

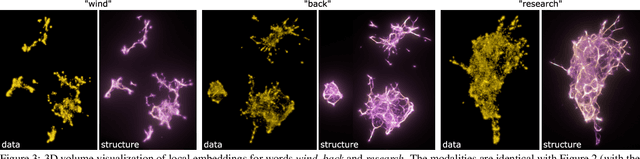

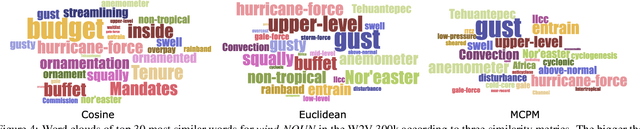

Abstract: Word embeddings are a popular way to improve downstream performance in contemporary language modeling. However, the underlying geometric structure of the embedding space is not well understood. We present a series of explorations using bio-inspired methodology to traverse and visualize word embeddings, demonstrating evidence of discernible structure. Moreover, our model also produces word similarity rankings that are plausible yet very different from common similarity metrics, mainly cosine similarity and Euclidean distance. We show that our bio-inspired model can be used to investigate how different word embedding techniques result in different semantic outputs, which can emphasize or obscure particular interpretations in textual data.

Learning Patterns in Sample Distributions for Monte Carlo Variance Reduction

Jun 01, 2019

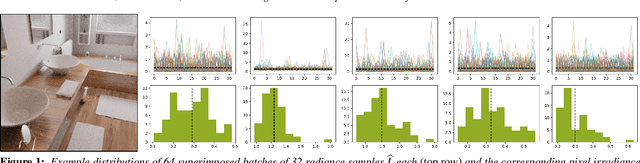

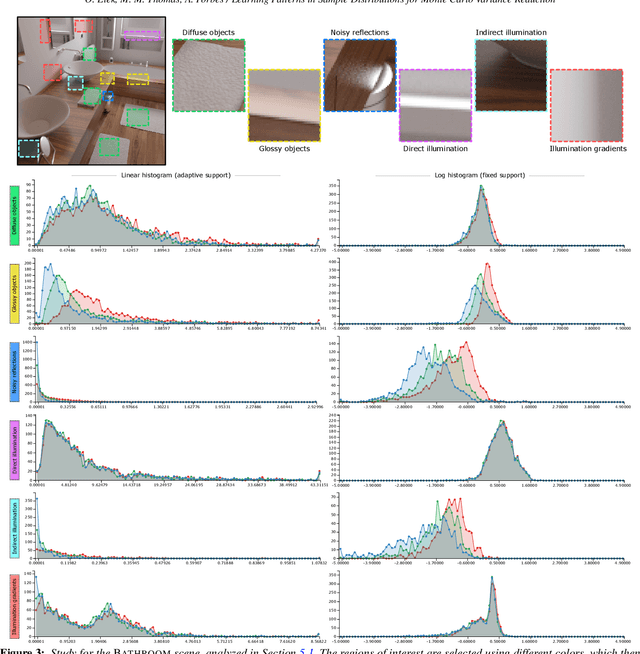

Abstract: This paper investigates a novel a posteriori variance reduction approach in Monte Carlo image synthesis. Unlike most established methods based on lateral filtering in the image space, our proposition is to produce the best possible estimate for each pixel separately, from all the samples drawn for it. To enable this, we systematically study the per-pixel sample distributions for diverse scene configurations. Noting that these are too complex to be characterized by standard statistical distributions (e.g. Gaussians), we identify patterns recurring in them and exploit those for training a variance-reduction model based on neural nets. As a result, we obtain numerically better estimates compared to simple averaging of samples. This method is compatible with existing image-space denoising methods, as the improved estimates of our model can be used for further processing. We conclude by discussing how the proposed model could in the future be extended for fully progressive rendering with constant memory footprint and scene-sensitive output.
