Oscar Dilley

Profiling AI Models: Towards Efficient Computation Offloading in Heterogeneous Edge AI Systems

Oct 30, 2024

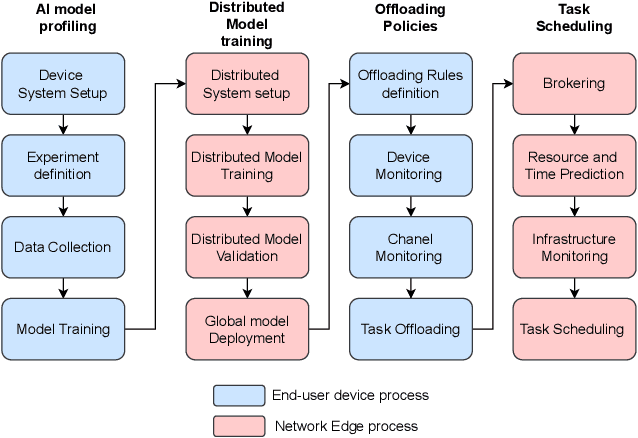

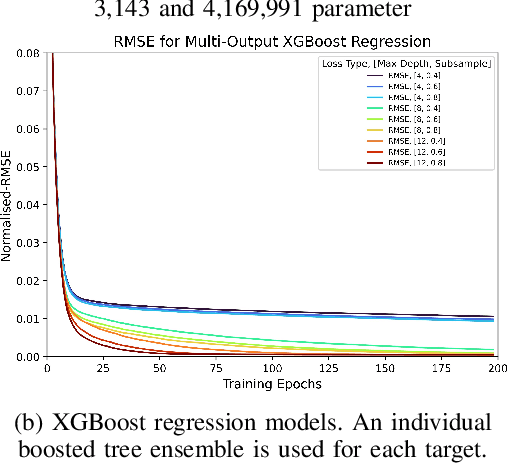

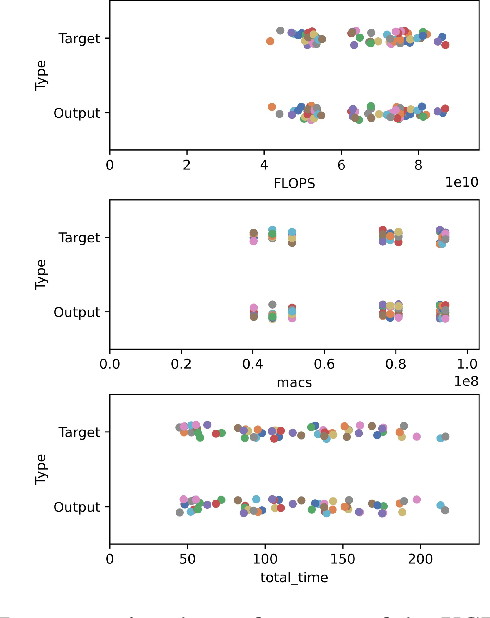

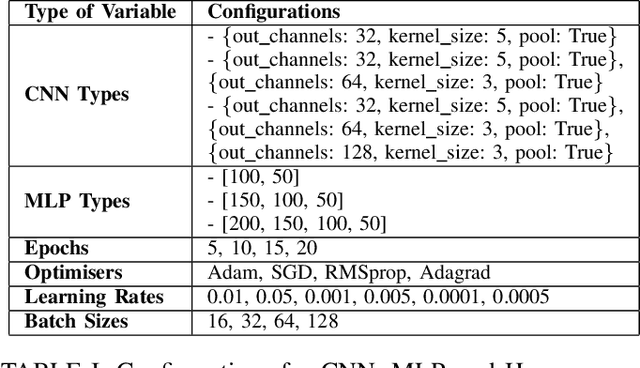

Abstract: The rapid growth of end-user AI applications, such as computer vision and generative AI, has led to immense data and processing demands that often exceed the capabilities of user devices. Edge AI addresses this by offloading computation to the network edge, which is crucial for future services in 6G networks. However, it faces challenges such as limited resources during simultaneous offloads and the unrealistic assumption of a homogeneous system architecture. To address these, we propose a research roadmap focused on profiling AI models: capturing data about model types, hyperparameters, and underlying hardware to predict resource utilisation and task completion time. Initial experiments with over 3,000 runs show promise in optimising resource allocation and enhancing Edge AI performance.

Federated Fairness Analytics: Quantifying Fairness in Federated Learning

Aug 15, 2024

Abstract: Federated Learning (FL) is a privacy-enhancing technology for distributed ML. By training models locally and aggregating updates, a federation learns together while bypassing centralised data collection. FL is increasingly popular in healthcare, finance and personal computing. However, it inherits fairness challenges from classical ML and introduces new ones, resulting from differences in data quality, client participation, communication constraints, aggregation methods and underlying hardware. Fairness remains an unresolved issue in FL, and the community has identified an absence of succinct definitions and metrics to quantify it. To address this, we propose Federated Fairness Analytics, a methodology for measuring fairness. Our definition of fairness comprises four notions with novel, corresponding metrics. They are symptomatically defined and leverage techniques originating from XAI, cooperative game theory and networking engineering. We tested a range of experimental settings, varying the FL approach, ML task and data settings. The results show that statistical heterogeneity and client participation affect fairness, and that fairness-conscious approaches such as Ditto and q-FedAvg marginally improve fairness-performance trade-offs. Using our techniques, FL practitioners can uncover previously unobtainable insights into their system's fairness at differing levels of granularity, in order to address fairness challenges in FL. We have open-sourced our work at: https://github.com/oscardilley/federated-fairness.
