Oren Spector

InsertionNet 2.0: Minimal Contact Multi-Step Insertion Using Multimodal Multiview Sensory Input

Mar 02, 2022

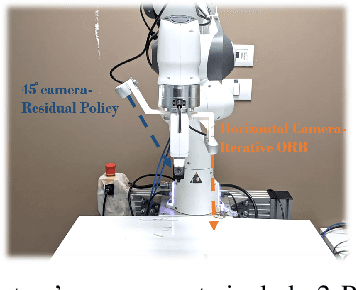

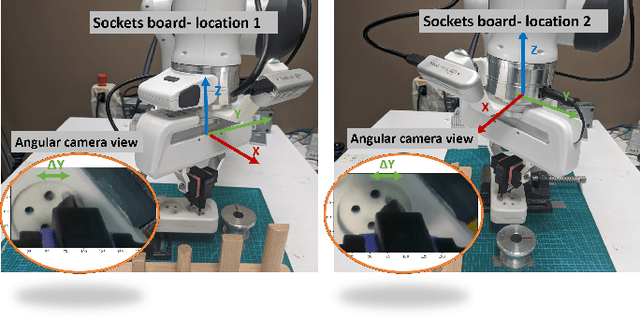

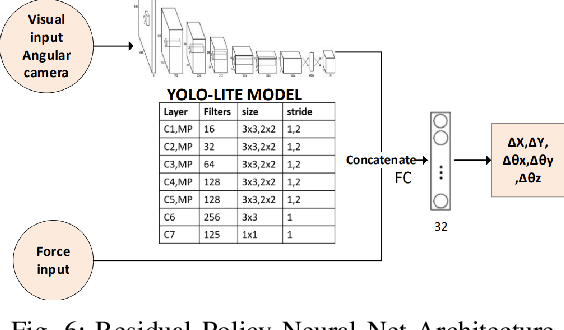

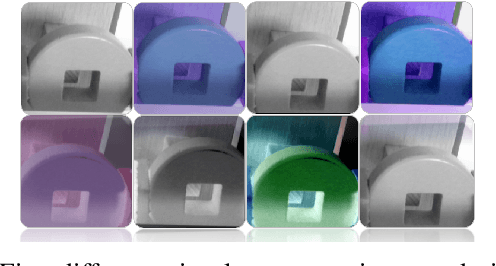

Abstract: We address the problem of devising the means for a robot to rapidly and safely learn insertion skills with just a few human interventions and without hand-crafted rewards or demonstrations. Our InsertionNet version 2.0 provides an improved technique to robustly cope with a wide range of use-cases featuring different shapes, colors, initial poses, etc. In particular, we present a regression-based method based on multimodal input from stereo perception and force, augmented with contrastive learning for the efficient learning of valuable features. In addition, we introduce a one-shot learning technique for insertion, which relies on a relation network scheme to better exploit the collected data and to support multi-step insertion tasks. Our method improves on the results obtained with the original InsertionNet, achieving an almost perfect score (above 97.5% on 200 trials) in 16 real-life insertion tasks while minimizing the execution time and contact during insertion. We further demonstrate our method's ability to tackle a real-life 3-step insertion task and perfectly solve an unseen insertion task without learning.

InsertionNet -- A Scalable Solution for Insertion

Apr 29, 2021

Abstract: Complicated assembly processes can be described as a sequence of two main activities: grasping and insertion. While general grasping solutions are common in industry, insertion is still only applicable to small subsets of problems, mainly ones involving simple shapes in fixed locations and in which the variations are not taken into consideration. Recently, RL approaches with prior knowledge (e.g., LfD or residual policy) have been adopted. However, these approaches might be problematic in contact-rich tasks since interaction might endanger the robot and its equipment. In this paper, we tackle this challenge by formulating the problem as a regression problem. By combining visual and force inputs, we demonstrate that our method can scale to 16 different insertion tasks in less than 10 minutes. The resulting policies are robust to changes in the socket position, orientation or peg color, as well as to small differences in peg shape. Finally, we demonstrate an end-to-end solution for 2 complex assembly tasks with multi-insertion objectives when the assembly board is randomly placed on a table.

Deep Reinforcement Learning for Contact-Rich Skills Using Compliant Movement Primitives

Aug 30, 2020

Abstract: In recent years, industrial robots have been installed in various industries to handle advanced manufacturing and high precision tasks. However, further integration of industrial robots is hampered by their limited flexibility, adaptability and decision making skills compared to human operators. Assembly tasks are especially challenging for robots since they are contact-rich and sensitive to even small uncertainties. While reinforcement learning (RL) offers a promising framework to learn contact-rich control policies from scratch, its applicability to high-dimensional continuous state-action spaces remains rather limited due to high brittleness and sample complexity. To address those issues, we propose different pruning methods that facilitate convergence and generalization. In particular, we divide the task into free and contact-rich sub-tasks, perform the control in Cartesian rather than joint space, and parameterize the control policy. Those pruning methods are naturally implemented within the framework of dynamic movement primitives (DMP). To handle contact-rich tasks, we extend the DMP framework by introducing a coupling term that acts like the human wrist and provides active compliance under contact with the environment. We demonstrate that the proposed method can learn insertion skills that are invariant to space, size, shape, and closely related scenarios, while handling large uncertainties. Finally, we demonstrate that the learned policy can be easily transferred from simulation to the real world and achieves similar performance on a UR5e robot.
