Or Berebi

Loss functions incorporating auditory spatial perception in deep learning -- a review

Jun 24, 2025

Abstract: Binaural reproduction aims to deliver immersive spatial audio with high perceptual realism over headphones. Loss functions play a central role in optimizing and evaluating algorithms that generate binaural signals. However, traditional signal-related difference measures often fail to capture the perceptual properties that are essential to spatial audio quality. This review paper surveys recent loss functions that incorporate spatial perception cues relevant to binaural reproduction. It focuses on losses applied to binaural signals, which are often derived from microphone recordings or Ambisonics signals, while excluding those based on room impulse responses. Guided by the Spatial Audio Quality Inventory (SAQI), the review emphasizes perceptual dimensions related to source localization and room response, while excluding general spectral-temporal attributes. The literature survey reveals a strong focus on localization cues, such as interaural time and level differences (ITDs, ILDs), while reverberation and other room acoustic attributes remain less explored in loss function design. Recent works that estimate room acoustic parameters and develop embeddings that capture room characteristics indicate their potential for future integration into neural network training. The paper concludes by highlighting future research directions toward more perceptually grounded loss functions that better capture the listener's spatial experience.
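
As a rough illustration of the localization cues named in this abstract, the sketch below estimates a broadband ITD and ILD from a pair of ear signals and combines their errors into a single score. It is not taken from the paper: the function names, the cross-correlation ITD estimator, the broadband (single-band) ILD, and the weights are all assumptions made for illustration. The `argmax` step is not differentiable, so this form suits evaluation; a training loss would need a differentiable surrogate.

```python
import numpy as np

def estimate_itd(left, right, fs):
    """ITD in seconds from the cross-correlation peak; positive when the left ear leads."""
    xcorr = np.correlate(left, right, mode="full")
    # Index 0 of the "full" output corresponds to lag -(len(right) - 1).
    lag = np.argmax(xcorr) - (len(right) - 1)
    return -lag / fs

def estimate_ild(left, right, eps=1e-12):
    """Broadband ILD in dB; positive when the left ear carries more energy."""
    return 10.0 * np.log10((np.sum(left ** 2) + eps) / (np.sum(right ** 2) + eps))

def localization_cue_loss(ref, est, fs, itd_weight=1.0, ild_weight=1.0):
    """ref, est: real arrays of shape (2, samples) holding left/right ear signals.

    Hypothetical combination: absolute ITD error in milliseconds plus
    absolute ILD error in dB, each scaled by an illustrative weight.
    """
    itd_err_ms = 1e3 * abs(estimate_itd(ref[0], ref[1], fs) - estimate_itd(est[0], est[1], fs))
    ild_err_db = abs(estimate_ild(ref[0], ref[1]) - estimate_ild(est[0], est[1]))
    return itd_weight * itd_err_ms + ild_weight * ild_err_db
```

Perceptually motivated variants typically compute these cues per auditory frequency band rather than broadband; the single-band version here only keeps the example short.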

BSM-iMagLS: ILD Informed Binaural Signal Matching for Reproduction with Head-Mounted Microphone Arrays

Jan 30, 2025

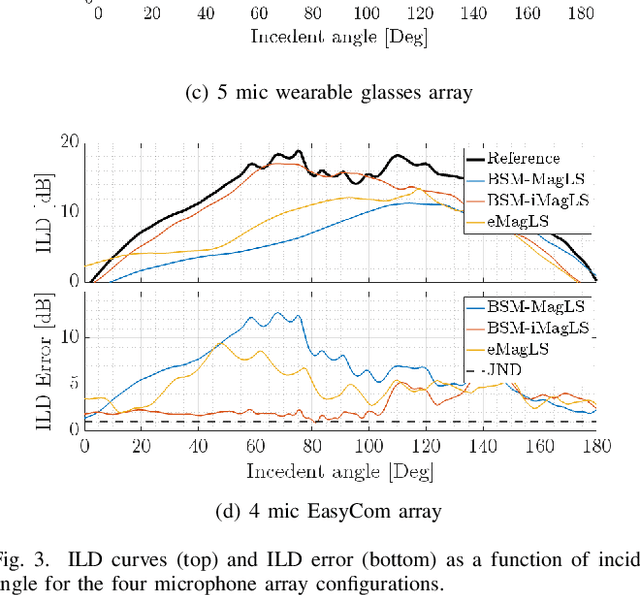

Abstract: Headphone listening in applications such as augmented and virtual reality (AR and VR) relies on high-quality spatial audio to ensure immersion, making accurate binaural reproduction a critical component. As capture devices, wearable arrays with only a few irregularly arranged microphones face challenges in achieving a reproduction quality comparable to that of arrays with a large number of microphones. Binaural signal matching (BSM) has recently been presented as a signal-independent approach for generating high-quality binaural signals using only a few microphones, which is further improved using magnitude least-squares (MagLS) optimization at high frequencies. This paper extends BSM with MagLS by introducing an interaural level difference (ILD) term into the MagLS objective, integrated into BSM (BSM-iMagLS). Using a deep neural network (DNN)-based solver, BSM-iMagLS achieves joint optimization of magnitude, ILD, and magnitude derivatives, improving spatial fidelity. Performance is validated through theoretical analysis, numerical simulations with diverse HRTFs and head-mounted array geometries, and listening experiments, demonstrating a substantial reduction in ILD errors while maintaining magnitude accuracy comparable to state-of-the-art solutions. The results highlight the potential of BSM-iMagLS to enhance binaural reproduction for wearable and portable devices.

Ambisonics Binaural Rendering via Masked Magnitude Least Squares

Jan 30, 2025

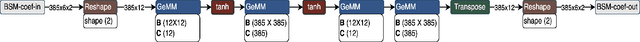

Abstract: Ambisonics rendering has become an integral part of 3D audio for headphones. It works well with existing recording hardware, the processing cost is mostly independent of the number of sound sources, and it elegantly allows for rotating the scene and listener. One challenge in Ambisonics headphone rendering is to find a perceptually well-behaved low-order representation of the Head-Related Transfer Functions (HRTFs) that are contained in the rendering pipeline. Low-order rendering is of interest when working with microphone arrays containing only a few sensors, or for reducing the bandwidth for signal transmission. Magnitude Least Squares (MagLS) rendering, which discards high-frequency interaural phase information in favor of reducing magnitude errors, has become the de facto standard for this. Building upon this idea, we suggest Masked Magnitude Least Squares, which optimizes the Ambisonics coefficients with a neural network and employs a spatio-spectral weighting mask to control the accuracy of the magnitude reconstruction. In the tested case, the weighting mask helped to maintain high-frequency notches in the low-order HRTFs and improved the modeled median plane localization performance in comparison to MagLS, while only marginally affecting the overall accuracy of the magnitude reconstruction.
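
The idea of a spatio-spectral weighting mask can be sketched as a weighted magnitude error over directions and frequency bins. The abstract does not specify how the mask is built or whether magnitudes are compared on a linear or dB scale, so the linear-magnitude error, the normalization by the mask sum, and the name `masked_magnitude_loss` are assumptions for illustration only.

```python
import numpy as np

def masked_magnitude_loss(h_ref, h_hat, mask):
    """Weighted mean squared magnitude error.

    h_ref, h_hat: complex arrays of shape (directions, freqs) holding the
    reference and low-order HRTFs for one ear.
    mask: non-negative weights of the same shape (hypothetical); larger values
    ask for tighter magnitude matching at that direction and frequency.
    """
    sq_err = (np.abs(h_ref) - np.abs(h_hat)) ** 2
    return np.sum(mask * sq_err) / np.sum(mask)
```

With a uniform mask this reduces to a plain mean squared magnitude error, and because only magnitudes enter, phase differences between `h_ref` and `h_hat` do not contribute.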

Feasibility of iMagLS-BSM -- ILD Informed Binaural Signal Matching with Arbitrary Microphone Arrays

Aug 07, 2024

Abstract: Binaural reproduction for headphone-centric listening has become a focal point in ongoing research, particularly within the realm of advancing technologies such as augmented and virtual reality (AR and VR). The demand for high-quality spatial audio in these applications is essential to uphold a seamless sense of immersion. However, challenges arise from wearable recording devices equipped with only a limited number of microphones and irregular microphone placements due to design constraints. These factors contribute to limited reproduction quality compared to reference signals captured by high-order microphone arrays. This paper introduces a novel optimization loss tailored for a beamforming-based, signal-independent binaural reproduction scheme. This method, named iMagLS-BSM, incorporates an interaural level difference (ILD) error term into the previously proposed binaural signal matching (BSM) magnitude least squares (MagLS) rendering loss for lateral plane angles. The method leverages nonlinear programming to minimize the introduced loss. Preliminary results show a substantial reduction in ILD error, while maintaining a binaural magnitude error comparable to that achieved with a MagLS BSM solution. These findings hold promise for enhancing the overall spatial quality of the resulting binaural signals.

iMagLS: Interaural Level Difference with Magnitude Least-Squares Loss for Optimized First-Order Head-Related Transfer Function

Nov 28, 2023

Abstract: Binaural reproduction for headphone-based listening is an active research area due to its widespread use in evolving technologies such as augmented and virtual reality (AR and VR). On the one hand, these applications demand high-quality spatial audio perception to preserve the sense of immersion. On the other hand, recording devices may only have a few microphones, leading to low-order representations such as first-order Ambisonics (FOA). However, first-order Ambisonics leads to limited externalization and spatial resolution. In this paper, a novel head-related transfer function (HRTF) preprocessing optimization loss is proposed, and is minimized using nonlinear programming. The new method, denoted iMagLS, involves the introduction of an interaural level difference (ILD) error term to the now widely used MagLS optimization loss for the lateral plane angles. Results indicate that the ILD error could be substantially reduced, while the HRTF magnitude error remains similar to that obtained with MagLS. These results could prove beneficial to the overall spatial quality of first-order Ambisonics, while other reproduction methods could also benefit from considering this modified loss.
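
The structure of such a loss, a magnitude error plus an ILD error term, can be sketched as follows. This is not the paper's formulation: the broadband ILD (energy summed over all frequency bins), the absolute-error ILD term, the weight `ild_weight`, and the function names are assumptions chosen to keep the example short. The abstract also restricts the ILD term to lateral plane angles, which here would correspond to passing only those directions.

```python
import numpy as np

def ild_db(h_left, h_right, eps=1e-12):
    """ILD in dB per direction, from energy summed over frequency bins.

    h_left, h_right: complex arrays of shape (directions, freqs).
    """
    e_left = np.sum(np.abs(h_left) ** 2, axis=-1)
    e_right = np.sum(np.abs(h_right) ** 2, axis=-1)
    return 10.0 * np.log10((e_left + eps) / (e_right + eps))

def imagls_style_loss(h_ref, h_hat, ild_weight=1.0):
    """Magnitude error plus weighted ILD error (illustrative only).

    h_ref, h_hat: complex arrays of shape (2, directions, freqs), with
    index 0 the left ear and index 1 the right ear.
    """
    mag_err = np.mean((np.abs(h_ref) - np.abs(h_hat)) ** 2)
    ild_err = np.mean(np.abs(ild_db(h_ref[0], h_ref[1]) - ild_db(h_hat[0], h_hat[1])))
    return mag_err + ild_weight * ild_err
```

Both terms depend only on magnitudes, so a candidate that differs from the reference purely in phase scores zero, which matches the MagLS idea of trading phase accuracy for magnitude accuracy.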
