Onur Berk Töre

Frustum Fusion: Pseudo-LiDAR and LiDAR Fusion for 3D Detection

Nov 08, 2021

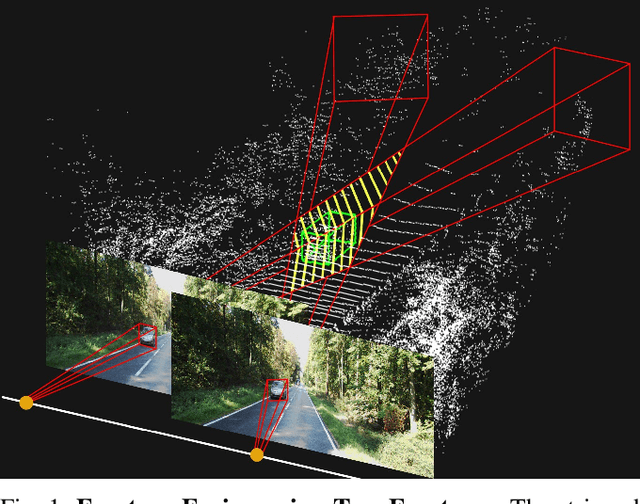

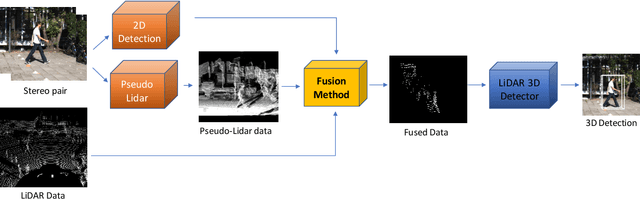

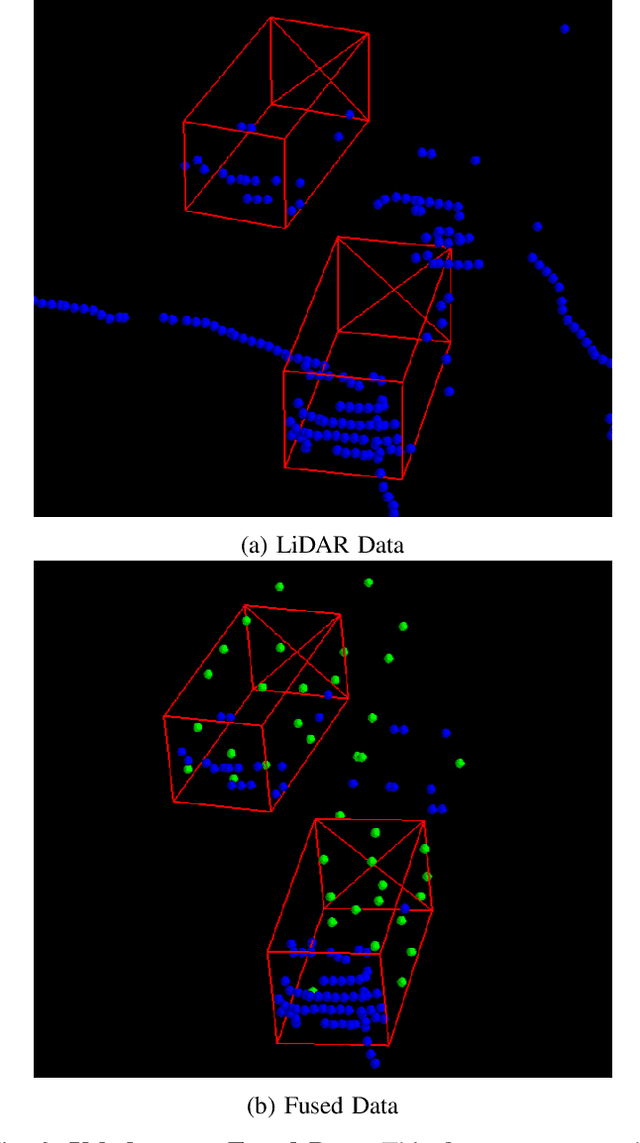

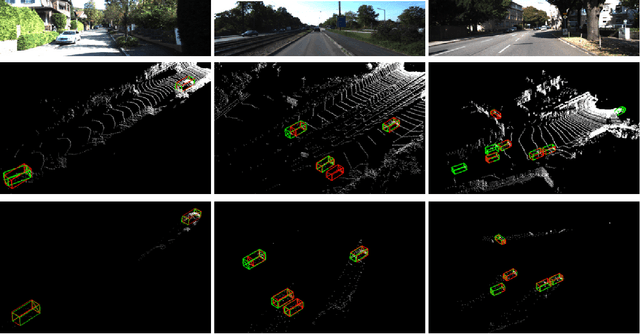

Abstract: Most autonomous vehicles are equipped with LiDAR sensors and stereo cameras. LiDAR is very accurate but generates sparse data, whereas stereo imagery is dense and rich in texture and color information, but robust 3D representations are difficult to extract from it. In this paper, we propose a novel data fusion algorithm that combines accurate LiDAR point clouds with dense but less accurate point clouds obtained from stereo pairs. We develop a framework to integrate this algorithm into various 3D object detection methods. Our framework starts with 2D detections from both RGB images, calculates the corresponding frustums and their intersection, creates Pseudo-LiDAR data from the stereo images, and fills in the parts of the intersection region where LiDAR data is lacking with the dense Pseudo-LiDAR points. We train multiple 3D object detection methods and show that our fusion strategy consistently improves detector performance.
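The fill-in step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, a depth map already estimated from the stereo pair, LiDAR points already expressed in the camera frame, a single-camera frustum in place of the two-frustum intersection, and a simple voxel-occupancy test (with an arbitrary `voxel` size) as the criterion for "where LiDAR data is lacking". All function names are illustrative.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (H, W) into camera-frame points (N, 3)."""
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]          # v: row index, u: column index
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    pts = np.stack([x, y, depth], axis=-1).reshape(-1, 3)
    return pts[pts[:, 2] > 0]          # drop pixels without valid depth

def in_frustum(points, box, fx, fy, cx, cy):
    """Mask of points that project inside box = (u_min, v_min, u_max, v_max)."""
    z = points[:, 2]
    valid = z > 0
    safe_z = np.where(valid, z, 1.0)   # avoid division by zero
    u = fx * points[:, 0] / safe_z + cx
    v = fy * points[:, 1] / safe_z + cy
    u_min, v_min, u_max, v_max = box
    return valid & (u >= u_min) & (u <= u_max) & (v >= v_min) & (v <= v_max)

def fuse(lidar, pseudo, box, intrinsics, voxel=0.2):
    """Add pseudo-LiDAR points inside the frustum only where no LiDAR point
    occupies the same voxel (an assumed stand-in for the paper's criterion)."""
    lidar_in = lidar[in_frustum(lidar, box, *intrinsics)]
    pseudo_in = pseudo[in_frustum(pseudo, box, *intrinsics)]
    occupied = {tuple(k) for k in np.floor(lidar_in / voxel).astype(int)}
    keys = np.floor(pseudo_in / voxel).astype(int)
    keep = np.array([tuple(k) not in occupied for k in keys], dtype=bool)
    # All original LiDAR points are kept; pseudo points only fill the gaps.
    return np.vstack([lidar, pseudo_in[keep]])
```

In this sketch the accurate LiDAR points are never replaced, so the dense stereo-derived points only contribute where the sparse sensor has no coverage within the detected object's frustum. The fused cloud can then be passed to any point-cloud-based 3D detector.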
