Omair Ali

Adaptive SpikeDeep-Classifier: Self-organizing and self-supervised machine learning algorithm for online spike sorting

Mar 30, 2023

Abstract: Objective. Research on brain-computer interfaces (BCIs) is advancing towards rehabilitating severely disabled patients in the real world. Two key factors for successful decoding of user intentions are the size of the implanted microelectrode arrays and the quality of the online spike sorting algorithm. A small but dense microelectrode array with 3072 channels was recently developed for decoding user intentions. Spike sorting determines the spike activity (SA) of different sources (neurons) from recorded neural data. Unfortunately, current spike sorting algorithms cannot handle the rapidly growing volume of data from dense microelectrode arrays, which makes spike sorting a fragile component of the online BCI decoding framework. Approach. We propose an adaptive and self-organized algorithm for online spike sorting, named Adaptive SpikeDeep-Classifier (Ada-SpikeDeepClassifier), which uses SpikeDeeptector for channel selection, an adaptive background activity rejector (Ada-BAR) for discarding background events, and an adaptive spike classifier (Ada-Spike classifier) for classifying the SA of different neural units. Results. Our algorithm outperformed our previously published SpikeDeep-Classifier and eight other spike sorting algorithms, as evaluated on a human dataset and a publicly available simulated dataset. Significance. The proposed algorithm is the first spike sorting algorithm that automatically learns abrupt changes in the distribution of noise and SA. It is an artificial neural network-based algorithm that is well suited for hardware implementation on neuromorphic chips that can be used for wearable invasive BCIs.
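The three-stage structure described in the abstract (channel selection, background rejection, spike classification) can be sketched as a simple composition of stages. This is only an illustration of the data flow, not the authors' implementation: the function `sort_spikes` and the threshold-based stand-ins `has_neural_data`, `is_spike`, and `assign_unit` are hypothetical placeholders for SpikeDeeptector, Ada-BAR, and the Ada-Spike classifier, which in the paper are learned, adaptive models.

```python
import numpy as np

def peak(event):
    """Return the sample with the largest absolute amplitude in a waveform."""
    return event[np.argmax(np.abs(event))]

def sort_spikes(events_per_channel, has_neural_data, is_spike, assign_unit):
    """Run three caller-supplied stages in order (hypothetical sketch)."""
    results = {}
    for channel, events in events_per_channel.items():
        # Stage 1: channel selection, skip channels without neural activity.
        if not has_neural_data(events):
            continue
        # Stage 2: background rejection, keep only spike events.
        spikes = [e for e in events if is_spike(e)]
        # Stage 3: spike classification, assign each spike to a unit.
        results[channel] = [assign_unit(e) for e in spikes]
    return results

# Toy stand-ins based on fixed thresholds (assumptions, not the paper's models).
has_neural_data = lambda events: any(abs(peak(e)) >= 1.0 for e in events)
is_spike = lambda e: abs(peak(e)) >= 3.0
assign_unit = lambda e: 0 if peak(e) > 0 else 1

events_per_channel = {
    "ch0": [np.array([0.1, -0.2, 0.1]), np.array([0.0, 0.1, -0.1])],
    "ch1": [np.array([0.0, 5.0, 0.0]),
            np.array([0.1, 0.2, 0.1]),
            np.array([0.0, -4.0, 0.0])],
}
result = sort_spikes(events_per_channel, has_neural_data, is_spike, assign_unit)
print(result)
```

In this toy run, `ch0` is dropped at the first stage, and the low-amplitude event on `ch1` is discarded at the second stage before the remaining two events are assigned to units.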

ConTraNet: A single end-to-end hybrid network for EEG-based and EMG-based human machine interfaces

Jun 21, 2022

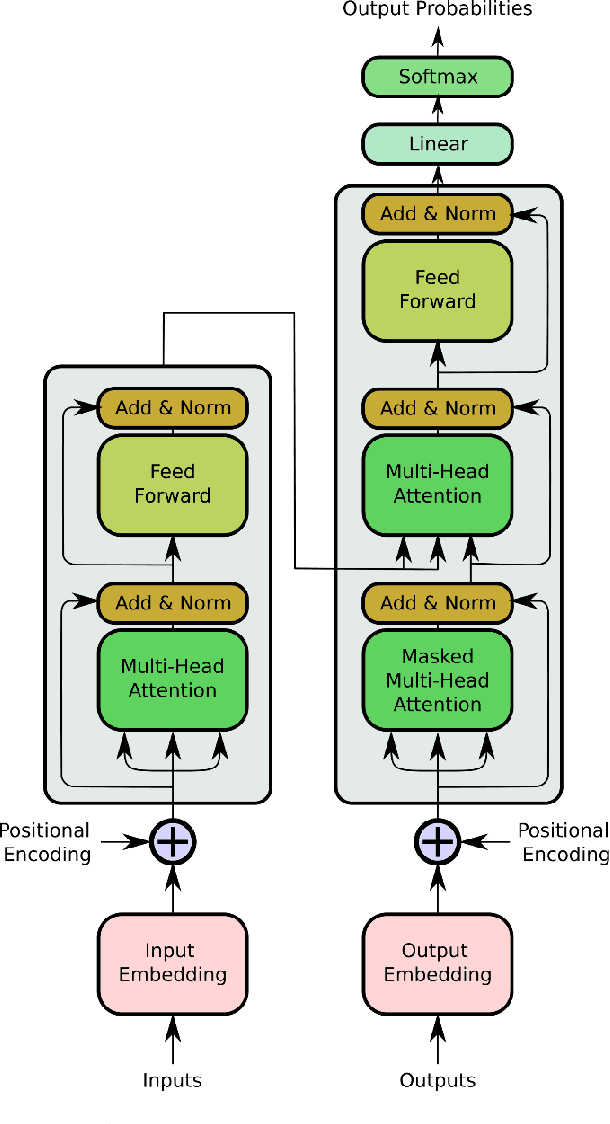

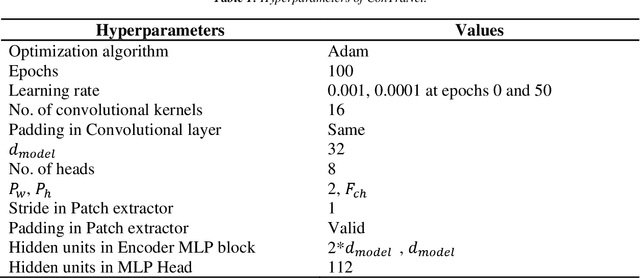

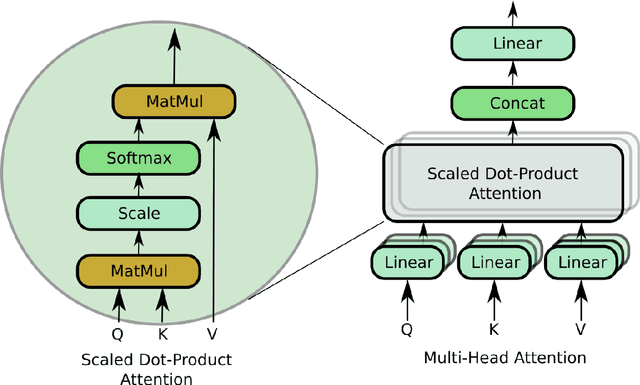

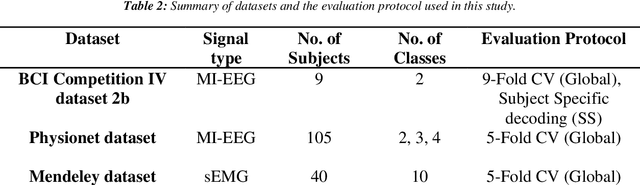

Abstract: Objective: Electroencephalography (EEG) and electromyography (EMG) are two non-invasive bio-signals that are widely used in human machine interface (HMI) technologies (the EEG-HMI and EMG-HMI paradigms) for the rehabilitation of physically disabled people. Successful decoding of EEG and EMG signals into their respective control commands is a pivotal step in the rehabilitation process. Recently, several convolutional neural network (CNN) based architectures have been proposed that map the raw time-series signal directly into decision space, performing feature extraction and classification simultaneously. However, these networks are tailored to learn the expected characteristics of a given bio-signal and are limited to a single paradigm. In this work, we ask whether a single architecture can learn distinct features from different HMI paradigms and still classify them successfully. Approach: We introduce a single hybrid model called ConTraNet, based on CNN and Transformer architectures, that is equally useful for the EEG-HMI and EMG-HMI paradigms. ConTraNet uses a CNN block to introduce inductive bias into the model and learn local dependencies, whereas the Transformer block uses the self-attention mechanism to learn the long-range dependencies in the signal, which are crucial for the classification of EEG and EMG signals. Main results: We evaluated ConTraNet and compared it with state-of-the-art methods on three publicly available datasets from the EEG-HMI and EMG-HMI paradigms. ConTraNet outperformed its counterparts in all task categories (2-class, 3-class, 4-class, and 10-class decoding tasks). Significance: The results suggest that ConTraNet robustly learns distinct features from different HMI paradigms and generalizes well compared to current state-of-the-art algorithms.
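The division of labor between the two blocks can be illustrated with a minimal NumPy sketch: a 1D convolution that sees only a short local window, followed by scaled dot-product self-attention in which every time step can attend to every other. This is not the ConTraNet architecture. The single-channel input, the layer sizes, the random weights, and the function names `conv1d` and `self_attention` are all assumptions chosen for illustration.

```python
import numpy as np

def conv1d(x, kernels):
    """Valid 1D cross-correlation: x is (T,), kernels is (F, K) -> (T-K+1, F)."""
    k = kernels.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(x, k)  # (T-K+1, K)
    return windows @ kernels.T

def self_attention(tokens, wq, wk, wv):
    """Scaled dot-product self-attention over a sequence of feature vectors."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
x = rng.standard_normal(64)              # toy single-channel signal
kernels = rng.standard_normal((4, 5))    # 4 filters, each spanning 5 samples
wq, wk, wv = (rng.standard_normal((4, 4)) for _ in range(3))

features = conv1d(x, kernels)            # local dependencies
attended, weights = self_attention(features, wq, wk, wv)  # long-range mixing
print(features.shape, attended.shape, weights.shape)
```

Each row of `weights` is a distribution over all time steps, which is what lets the attention stage relate distant parts of the signal that the 5-sample convolution window cannot connect on its own.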

Improving the performance of EEG decoding using anchored-STFT in conjunction with gradient norm adversarial augmentation

Nov 30, 2020

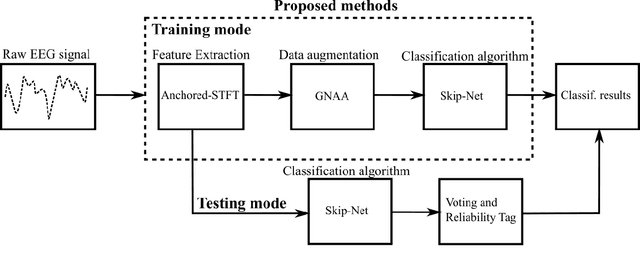

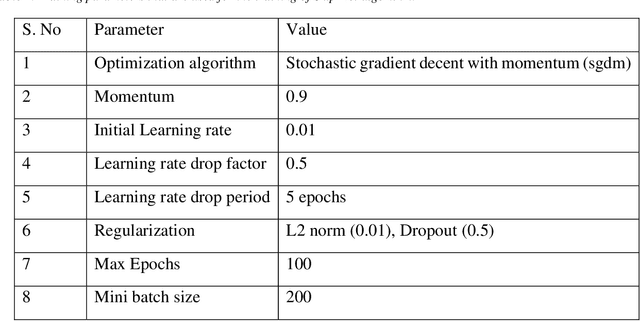

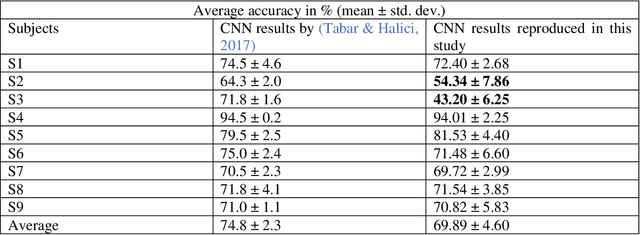

Abstract: Brain-computer interfaces (BCIs) enable direct communication between humans and machines by translating brain activity into control commands. EEG is one of the most common sources of neural signals because it is inexpensive and non-invasive. However, interpreting EEG signals is non-trivial because they have a low spatial resolution and are often distorted by noise and artifacts. As a result, the patterns that are meaningful for classifying EEG signals may be deeply hidden. State-of-the-art deep learning algorithms have proven to be quite efficient at learning hidden, meaningful patterns, but their performance depends on the quality and amount of the training data provided. Hence, a better input formation (feature extraction) technique and a generative model that produces high-quality data can help deep learning algorithms generalize better. In this study, we propose a novel input formation (feature extraction) method in conjunction with a novel deep learning based generative model for producing new training examples. First, the feature vectors are extracted using a modified Short Time Fourier Transform (STFT) called anchored-STFT. Anchored-STFT, inspired by the wavelet transform, tries to minimize the tradeoff between time and frequency resolution. As a result, it extracts inputs (feature vectors) with better time and frequency resolution than the standard STFT. Second, we introduce a novel generative adversarial data augmentation technique called gradient norm adversarial augmentation (GNAA) for generating more training data. Third, we investigate the existence and significance of adversarial inputs in EEG data. Our approach obtained a kappa value of 0.814 for BCI competition II dataset III and 0.755 for BCI competition IV dataset 2b for session-to-session transfer on test data.
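The time-frequency tradeoff that anchored-STFT aims to reduce can be seen with a standard STFT computed at two window lengths. The sketch below is a plain STFT, not anchored-STFT, whose details are not given in the abstract. The sampling rate, window lengths, hop size, test signal, and the helper name `stft_magnitude` are assumptions chosen for illustration.

```python
import numpy as np

def stft_magnitude(signal, window_length, hop):
    """Magnitude of a standard STFT using a Hann window (frames x frequency bins)."""
    window = np.hanning(window_length)
    starts = range(0, len(signal) - window_length + 1, hop)
    frames = np.stack([signal[s:s + window_length] * window for s in starts])
    return np.abs(np.fft.rfft(frames, axis=1))

fs = 250                                  # assumed sampling rate in Hz
t = np.arange(1000) / fs                  # 4 seconds of samples
signal = np.sin(2 * np.pi * 10 * t)       # toy 10 Hz oscillation

short = stft_magnitude(signal, window_length=64, hop=32)
long = stft_magnitude(signal, window_length=256, hop=32)

# Frequency resolution is fs / window_length: shorter windows localize
# events better in time but give coarser frequency bins.
print("short window:", short.shape, "bin width", fs / 64, "Hz")
print("long window: ", long.shape, "bin width", fs / 256, "Hz")

# Dominant frequency estimated from the long-window spectrogram.
peak_hz = np.argmax(long.mean(axis=0)) * fs / 256
print("estimated peak:", peak_hz, "Hz")
```

The short window covers about a quarter of a second per frame but resolves frequency only to about 3.9 Hz, while the long window resolves frequency to about 1 Hz at the cost of averaging over roughly one second of signal.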
