Oliver Tse

Evolution of Gaussians in the Hellinger-Kantorovich-Boltzmann gradient flow

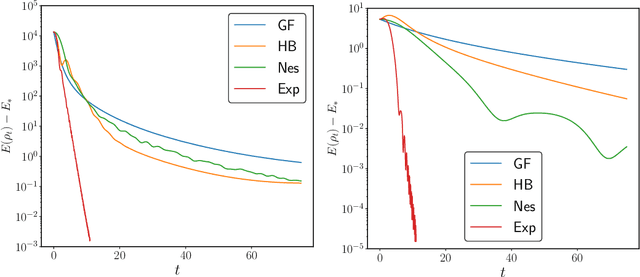

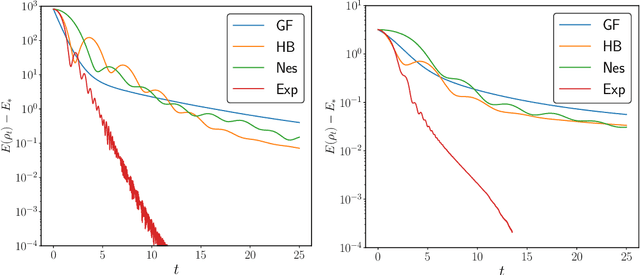

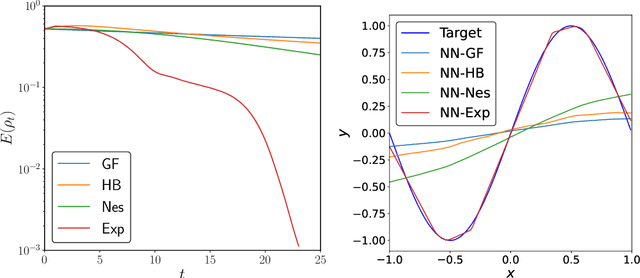

Apr 29, 2025

Abstract: This study leverages the basic insight that the gradient-flow equation associated with the relative Boltzmann entropy, in relation to a Gaussian reference measure within the Hellinger-Kantorovich (HK) geometry, preserves the class of Gaussian measures. This invariance serves as the foundation for constructing a reduced gradient structure on the parameter space characterizing Gaussian densities. We derive explicit ordinary differential equations that govern the evolution of mean, covariance, and mass under the HK-Boltzmann gradient flow. The reduced structure retains the additive form of the HK metric, facilitating a comprehensive analysis of the dynamics involved. We explore the geodesic convexity of the reduced system, revealing that global convexity is confined to the pure transport scenario, while a variant of sublevel semi-convexity is observed in the general case. Furthermore, we demonstrate exponential convergence to equilibrium through Polyak-Łojasiewicz-type inequalities, applicable both globally and on sublevel sets. By monitoring the evolution of covariance eigenvalues, we refine the decay rates associated with convergence. Additionally, we extend our analysis to non-Gaussian targets exhibiting strong log-lambda-concavity, corroborating our theoretical results with numerical experiments that encompass a Gaussian-target gradient flow and a Bayesian logistic regression application.

Accelerating optimization over the space of probability measures

Oct 09, 2023

Abstract: Acceleration of gradient-based optimization methods is an issue of significant practical and theoretical interest, particularly in machine learning applications. Most research has focused on optimization over Euclidean spaces, but given the need to optimize over spaces of probability measures in many machine learning problems, it is of interest to investigate accelerated gradient methods in this context too. To this end, we introduce a Hamiltonian-flow approach that is analogous to momentum-based approaches in Euclidean space. We demonstrate that algorithms based on this approach can achieve convergence rates of arbitrarily high order. Numerical examples illustrate our claim.
