Okan Koc

Phase Portraits as Movement Primitives for Fast Humanoid Robot Control

Dec 07, 2019

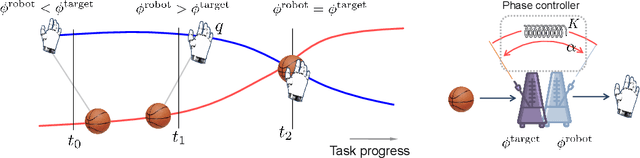

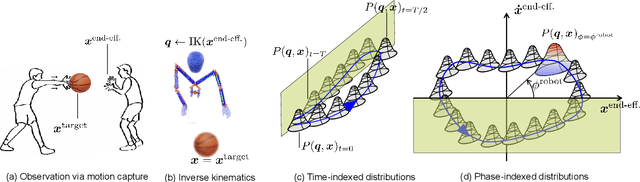

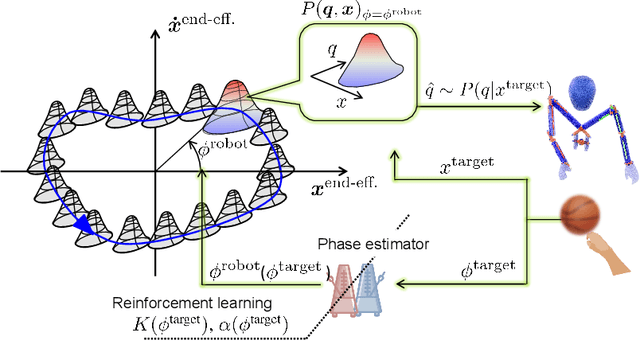

Abstract: Current approaches to fast robot control rely largely on solving optimal control problems online. Such methods are known to be computationally intensive and sensitive to model accuracy. Animals, on the other hand, plan complex motor actions not only quickly but seemingly with little effort, even on unseen tasks. This natural sense of time and coordination motivates us to approach robot control from a motor skill learning perspective, designing fast and computationally light controllers that the robot can learn autonomously under mild modeling assumptions. This article introduces Phase Portrait Movement Primitives (PPMP), a primitive that predicts dynamics on a low-dimensional phase space, which in turn is used to govern the high-dimensional kinematics of the task. The key difference from other primitive formulations is a built-in mechanism for phase prediction in the form of coupled oscillators, which replaces model-based state estimators such as Kalman filters. The policy is trained by optimizing the parameters of the oscillators, whose output is connected to a kinematic distribution in the form of a phase portrait. The drastic reduction in dimensionality allows us to efficiently train and execute PPMPs on a real human-sized, dual-arm humanoid upper body on a task involving 20 degrees of freedom. We demonstrate PPMPs in interactions that require fast reaction times while generating anticipative pose adaptation in both discrete and cyclic tasks.
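The abstract does not spell out the oscillator model, so the following is only a minimal sketch of the general idea of oscillator-based phase prediction, not the PPMP formulation itself. It assumes a single internal phase `phi` that is pulled toward an observed external phase `theta` through one-way Kuramoto-style sinusoidal coupling. The function name `entrain`, the frequencies, and the coupling gain are all hypothetical choices made for illustration.

```python
import math

def entrain(omega_ext, omega_0, coupling, dt=0.001, duration=10.0):
    """Euler-integrate d(phi)/dt = omega_0 + coupling * sin(theta - phi).

    theta is an external phase advancing at omega_ext (rad/s); phi is the
    internal phase with natural frequency omega_0. Returns the final phase
    lag theta - phi. All names and values are illustrative assumptions.
    """
    theta, phi = 0.0, 0.0
    for _ in range(int(duration / dt)):
        # Internal oscillator: natural frequency plus sinusoidal coupling.
        phi += dt * (omega_0 + coupling * math.sin(theta - phi))
        # External signal advances at its own constant frequency.
        theta += dt * omega_ext
    return theta - phi

omega_ext = 2 * math.pi * 1.0   # external rhythm at 1.0 Hz (assumed)
omega_0 = 2 * math.pi * 0.8     # internal natural frequency at 0.8 Hz (assumed)
coupling = 4.0                  # coupling gain (assumed)

lag = entrain(omega_ext, omega_0, coupling)
# When |omega_ext - omega_0| <= coupling, the phases lock with this constant lag.
expected_lag = math.asin((omega_ext - omega_0) / coupling)
```

In this toy setting the locked phase could then drive a kinematic map (for example, a joint angle as a function of `phi`), which is the role the abstract assigns to the phase portrait. How the paper couples its oscillators and trains their parameters is not stated in the abstract.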

Optimizing Execution of Dynamic Goal-Directed Robot Movements with Learning Control

Mar 17, 2019

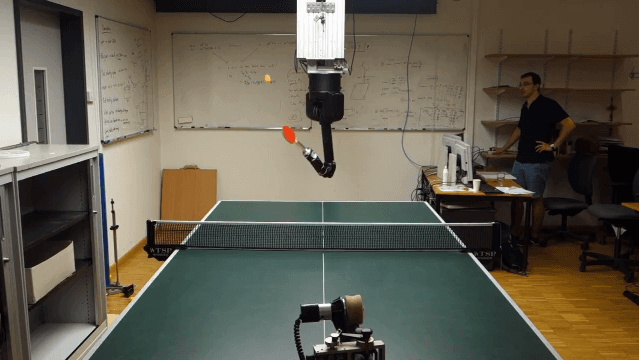

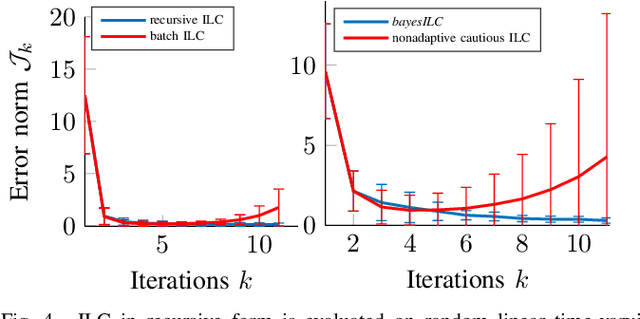

Abstract: Highly dynamic tasks that require large accelerations and precise tracking usually rely on accurate models and/or high-gain feedback. While kinematic optimization allows for efficient representation and online generation of hitting trajectories, learning to track such dynamic movements with inaccurate models remains an open problem. In particular, stability issues surrounding the learning performance in the iteration domain can prevent the successful implementation of model-based learning approaches. To achieve accurate tracking for such tasks in a stable and efficient way, we propose a new adaptive Iterative Learning Control (ILC) algorithm that is implemented efficiently using a recursive approach. Moreover, covariance estimates of model matrices are used to exercise caution during learning. We evaluate the performance of the proposed approach in extensive simulations and on our robotic table tennis platform, where we show how the striking performance of two seven-degree-of-freedom anthropomorphic robot arms can be optimized. Our implementation on the table tennis platform compares favorably with high-gain PD control, model-free ILC (simple PD feedback type), and model-based ILC without cautious adaptation.
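For readers unfamiliar with ILC, the following is a minimal sketch of the simplest model-free variant (a P-type update), closer to the baseline the abstract mentions than to the proposed adaptive, cautious algorithm. It assumes a toy scalar plant `x[t+1] = a*x[t] + b*u[t]`. The plant parameters, learning gain, and reference trajectory are hypothetical values chosen so that the tracking error shrinks from one trial to the next.

```python
import math

def simulate(u, a=0.3, b=1.0):
    """Roll out the toy plant x[t+1] = a*x[t] + b*u[t] from x[0] = 0."""
    x, ys = 0.0, []
    for u_t in u:
        x = a * x + b * u_t
        ys.append(x)  # output observed one step after the input is applied
    return ys

def p_type_ilc(reference, iterations=30, gain=0.5):
    """Repeat the same trial, correcting the input with the last trial's error."""
    u = [0.0] * len(reference)
    max_errors = []
    for _ in range(iterations):
        y = simulate(u)
        e = [r - y_t for r, y_t in zip(reference, y)]
        max_errors.append(max(abs(v) for v in e))
        # P-type update: u_{k+1}(t) = u_k(t) + gain * e_k(t)
        u = [u_t + gain * e_t for u_t, e_t in zip(u, e)]
    return u, max_errors

# One period of a sine wave as the trajectory to track (assumed reference).
reference = [math.sin(2 * math.pi * t / 50) for t in range(1, 51)]
u_learned, max_errors = p_type_ilc(reference)
```

With these values the worst-case tracking error decreases at every trial. In general a poorly chosen gain can make the error grow across iterations, which is the kind of iteration-domain stability issue the abstract refers to.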

Learning to serve: an experimental study for a new learning from demonstrations framework

Mar 17, 2019

Abstract: Learning from demonstrations is an easy and intuitive way to show examples of successful behavior to a robot. However, humans optimize for and take advantage of their own body rather than the robot's. This mismatch, usually called the embodiment problem in robotics, often prevents industrial robots from executing the task in a straightforward way. The demonstrated movements often do not or cannot use the robot's degrees of freedom efficiently, and moreover suffer from excessive execution errors. In this paper, we explore a variety of solutions that address these shortcomings. In particular, we learn sparse movement primitive parameters from several demonstrations of a successful table tennis serve. The number of parameters learned using our procedure is independent of the degrees of freedom of the robot. Moreover, they can be ranked according to their importance in the regression task. Learning a few ranked parameters is a desirable feature for combating the curse of dimensionality in Reinforcement Learning. Preliminary real-robot experiments on the Barrett WAM for a table tennis serve using the learned movement primitives show that the representation can successfully capture the style of the movement with few parameters.
