Nusrat Binta Nizam

BUET Multi-disease Heart Sound Dataset: A Comprehensive Auscultation Dataset for Developing Computer-Aided Diagnostic Systems

Sep 01, 2024

Abstract: Cardiac auscultation, an integral tool in diagnosing cardiovascular diseases (CVDs), often relies on the subjective interpretation of clinicians, presenting a limitation in consistency and accuracy. Addressing this, we introduce the BUET Multi-disease Heart Sound (BMD-HS) dataset - a comprehensive and meticulously curated collection of heart sound recordings. This dataset, encompassing 864 recordings across five distinct classes of common heart sounds, represents a broad spectrum of valvular heart diseases, with a focus on diagnostically challenging cases. The standout feature of the BMD-HS dataset is its innovative multi-label annotation system, which captures a diverse range of diseases and unique disease states. This system significantly enhances the dataset's utility for developing advanced machine learning models in automated heart sound classification and diagnosis. By bridging the gap between traditional auscultation practices and contemporary data-driven diagnostic methods, the BMD-HS dataset is poised to revolutionize CVD diagnosis and management, providing an invaluable resource for the advancement of cardiac health research. The dataset is publicly available at https://github.com/mHealthBuet/BMD-HS-Dataset.

Anatomy X-Net: A Semi-Supervised Anatomy Aware Convolutional Neural Network for Thoracic Disease Classification

Jun 10, 2021

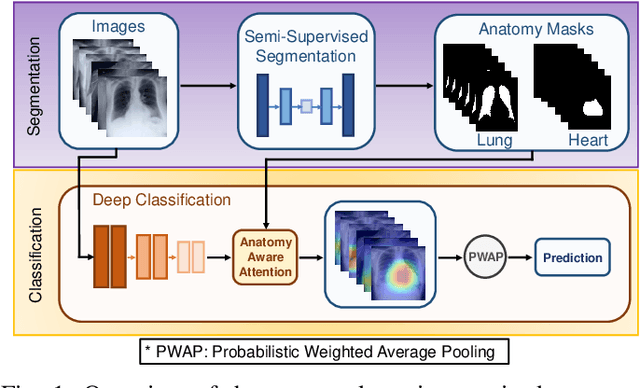

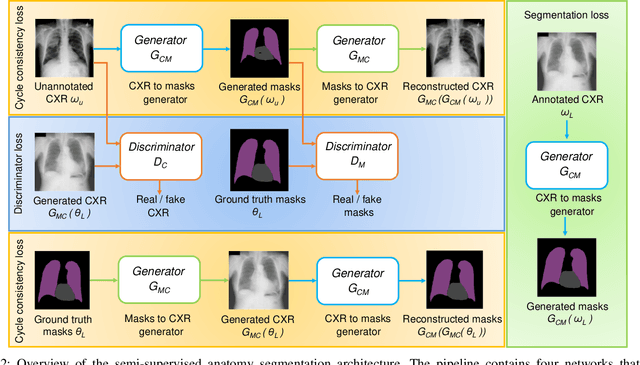

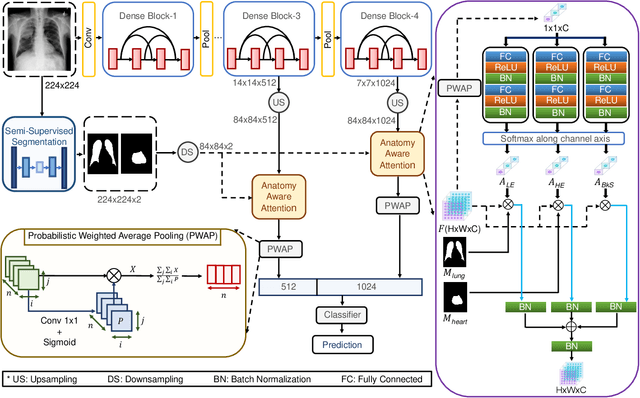

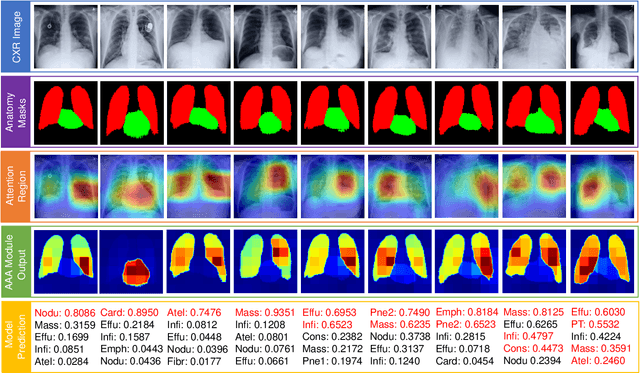

Abstract: Thoracic disease detection from chest radiographs using deep learning methods has been an active area of research in the last decade. Most previous methods attempt to focus on the diseased organs of the image by identifying spatial regions responsible for significant contributions to the model's prediction. In contrast, expert radiologists first locate the prominent anatomical structures before determining if those regions are anomalous. Therefore, integrating anatomical knowledge within deep learning models could bring substantial improvement in automatic disease classification. This work proposes an anatomy-aware attention-based architecture named Anatomy X-Net that prioritizes the spatial features guided by the pre-identified anatomy regions. We leverage a semi-supervised learning method using the JSRT dataset containing organ-level annotation to obtain the anatomical segmentation masks (for lungs and heart) for the NIH and CheXpert datasets. The proposed Anatomy X-Net uses the pre-trained DenseNet-121 as the backbone network with two corresponding structured modules, the Anatomy Aware Attention (AAA) and Probabilistic Weighted Average Pooling (PWAP), in a cohesive framework for anatomical attention learning. Our proposed method sets new state-of-the-art performance on the official NIH test set with an AUC score of 0.8439, proving the efficacy of utilizing the anatomy segmentation knowledge to improve the thoracic disease classification. Furthermore, the Anatomy X-Net yields an averaged AUC of 0.9020 on the Stanford CheXpert dataset, improving on existing methods and demonstrating the generalizability of the proposed framework.
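
The abstract does not define the AAA or PWAP modules, so the following is only a minimal sketch of the general idea of pooling spatial features under the guidance of an anatomy mask. It is not the authors' implementation. The function name `mask_weighted_pool`, the `scores` saliency map, and the softmax weighting are all assumptions made for illustration.

```python
import math


def mask_weighted_pool(features, scores, mask):
    """Pool each channel with softmax weights restricted to a mask.

    Hypothetical illustration only; not the paper's PWAP definition.

    features: list of C channels, each an H x W nested list of floats
    scores:   H x W nested list of per-location scores (assumed input)
    mask:     H x W nested list of 0/1 anatomy-mask values
    """
    # Collect the locations that fall inside the anatomy mask.
    inside = [(i, j) for i, row in enumerate(mask)
              for j, m in enumerate(row) if m]
    if not inside:
        raise ValueError("mask selects no locations")

    # Numerically stable softmax over the masked locations only.
    peak = max(scores[i][j] for i, j in inside)
    exps = {(i, j): math.exp(scores[i][j] - peak) for i, j in inside}
    total = sum(exps.values())
    weights = {loc: e / total for loc, e in exps.items()}

    # Weighted average per channel; the weights sum to 1.
    return [sum(w * ch[i][j] for (i, j), w in weights.items())
            for ch in features]
```

Under this sketch, locations outside the mask (for example, outside the lungs and heart) contribute nothing to the pooled feature vector, which is one simple way to make pooling anatomy-aware.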
