Nuri Cingillioglu

Neuro-symbolic Rule Learning in Real-world Classification Tasks

Mar 29, 2023

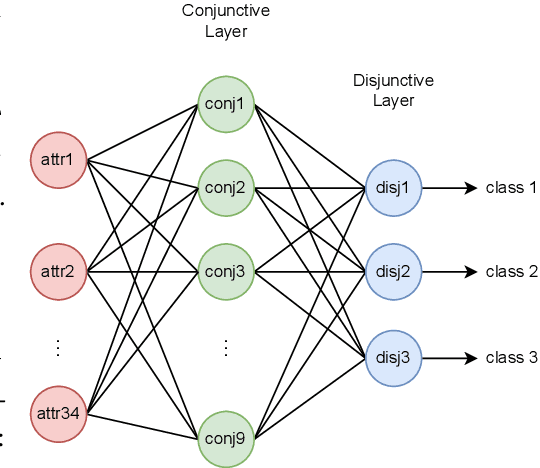

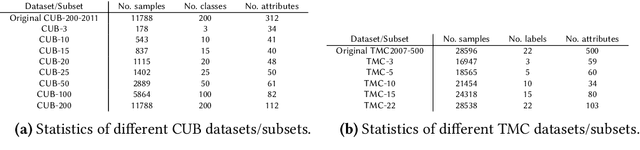

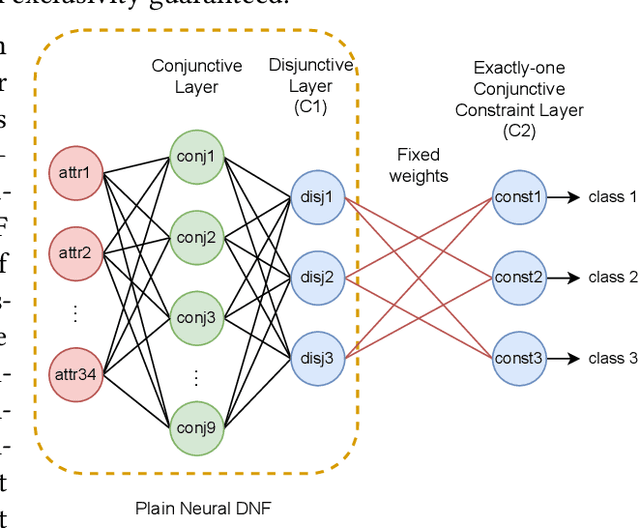

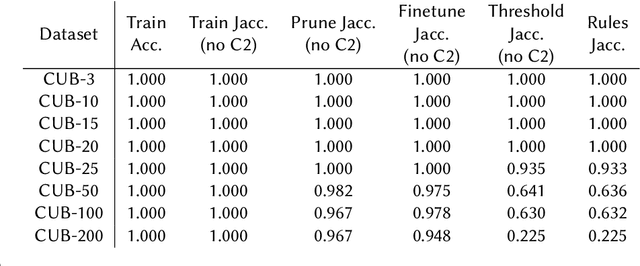

Abstract: Neuro-symbolic rule learning has attracted lots of attention as it offers better interpretability than pure neural models and scales better than symbolic rule learning. A recent approach named pix2rule proposes a neural Disjunctive Normal Form (neural DNF) module to learn symbolic rules with feed-forward layers. Although proved to be effective in synthetic binary classification, pix2rule has not been applied to more challenging tasks such as multi-label and multi-class classifications over real-world data. In this paper, we address this limitation by extending the neural DNF module to (i) support rule learning in real-world multi-class and multi-label classification tasks, (ii) enforce the symbolic property of mutual exclusivity (i.e. predicting exactly one class) in multi-class classification, and (iii) explore its scalability over large inputs and outputs. We train a vanilla neural DNF model similar to pix2rule's neural DNF module for multi-label classification, and we propose a novel extended model called neural DNF-EO (Exactly One) which enforces mutual exclusivity in multi-class classification. We evaluate the classification performance, scalability and interpretability of our neural DNF-based models, and compare them against pure neural models and a state-of-the-art symbolic rule learner named FastLAS. We demonstrate that our neural DNF-based models perform similarly to neural networks, but provide better interpretability by enabling the extraction of logical rules. Our models also scale well when the rule search space grows in size, in contrast to FastLAS, which fails to learn in multi-class classification tasks with 200 classes and in all multi-label settings.

pix2rule: End-to-end Neuro-symbolic Rule Learning

Jun 14, 2021

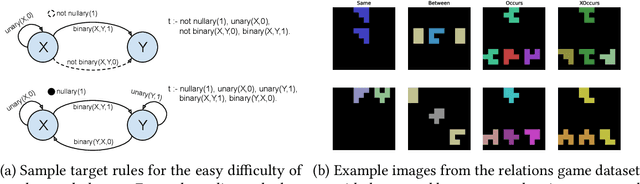

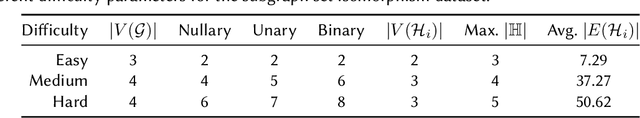

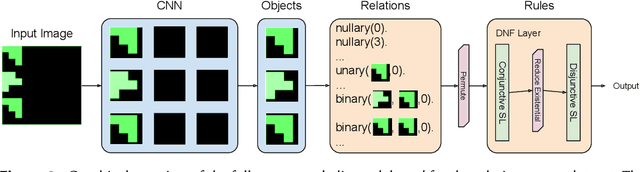

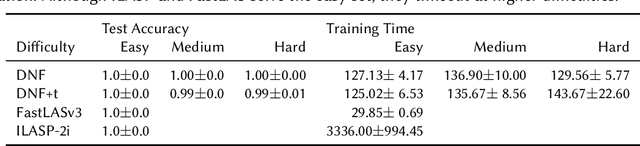

Abstract: Humans have the ability to seamlessly combine low-level visual input with high-level symbolic reasoning, often in the form of recognising objects, learning relations between them and applying rules. Neuro-symbolic systems aim to bring a unifying approach to connectionist and logic-based principles for visual processing and abstract reasoning respectively. This paper presents a complete neuro-symbolic method for processing images into objects, learning relations and logical rules in an end-to-end fashion. The main contribution is a differentiable layer in a deep learning architecture from which symbolic relations and rules can be extracted by pruning and thresholding. We evaluate our model using two datasets: a subgraph isomorphism task for symbolic rule learning and an image classification domain with compound relations for learning objects, relations and rules. We demonstrate that our model scales beyond state-of-the-art symbolic learners and outperforms deep relational neural network architectures.

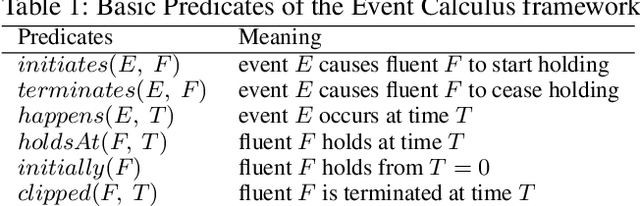

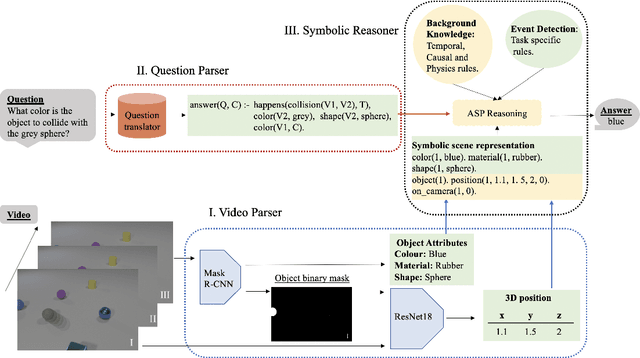

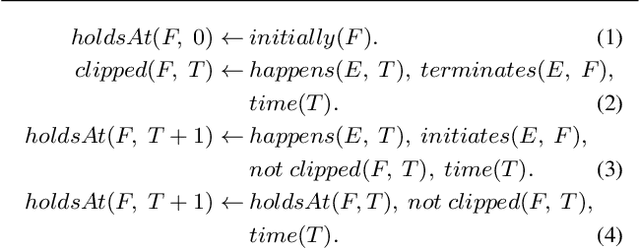

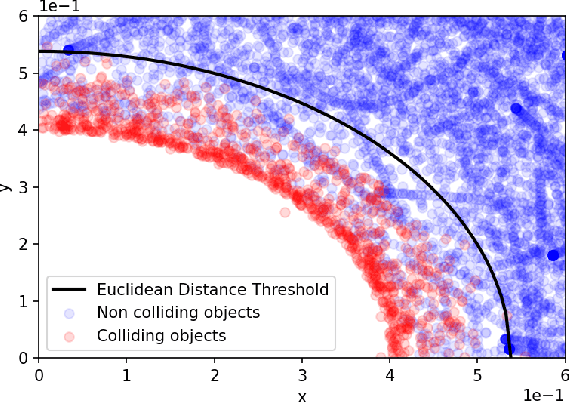

HySTER: A Hybrid Spatio-Temporal Event Reasoner

Jan 17, 2021

Abstract: The task of Video Question Answering (VideoQA) consists in answering natural language questions about a video and serves as a proxy to evaluate the performance of a model in scene sequence understanding. Most methods designed for VideoQA to date are end-to-end deep learning architectures which struggle at complex temporal and causal reasoning and provide limited transparency in reasoning steps. We present HySTER: a Hybrid Spatio-Temporal Event Reasoner to reason over physical events in videos. Our model combines the strength of deep learning methods in extracting information from video frames with the reasoning capabilities and explainability of symbolic artificial intelligence in an answer set programming framework. We define a method based on general temporal, causal and physics rules which can be transferred across tasks. We apply our model to the CLEVRER dataset and demonstrate state-of-the-art results in question answering accuracy. This work sets the foundations for the incorporation of inductive logic programming in the field of VideoQA.

Learning Invariants through Soft Unification

Sep 16, 2019

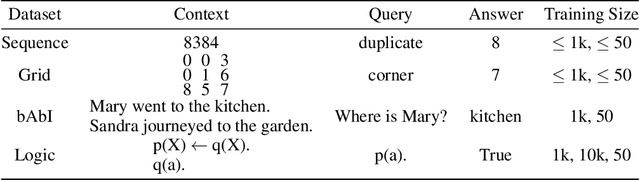

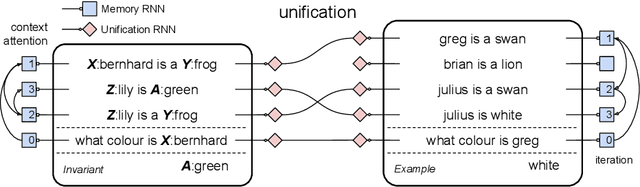

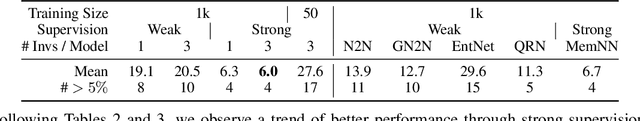

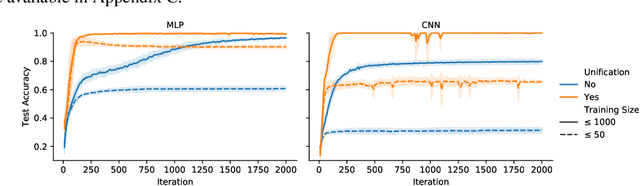

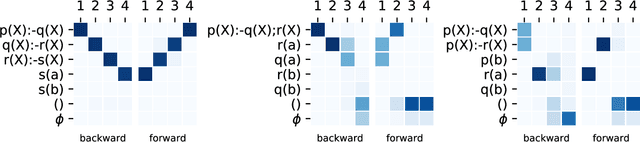

Abstract: Human reasoning involves recognising common underlying principles across many examples by utilising variables. The by-products of such reasoning are invariants that capture patterns across examples such as "if someone went somewhere then they are there" without mentioning specific people or places. Humans learn what variables are and how to use them at a young age, and the question this paper addresses is whether machines can also learn and use variables solely from examples without requiring human pre-engineering. We propose Unification Networks that incorporate soft unification into neural networks to learn variables and by doing so lift examples into invariants that can then be used to solve a given task. We evaluate our approach on four datasets to demonstrate that learning invariants captures patterns in the data and can improve performance over baselines.

DeepLogic: End-to-End Logical Reasoning

May 18, 2018

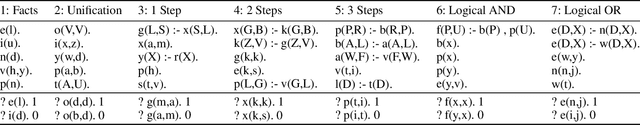

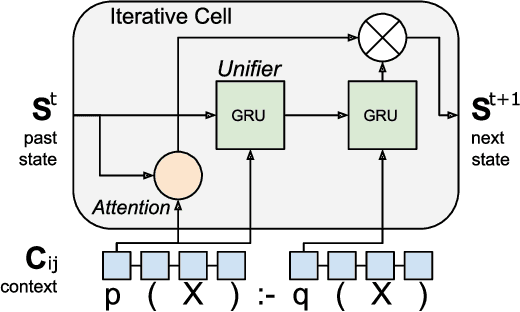

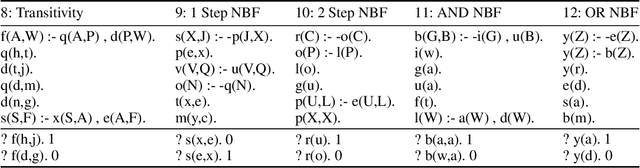

Abstract: Neural networks have been learning complex multi-hop reasoning in various domains. One such formal setting for reasoning, logic, provides a challenging case for neural networks. In this article, we propose a Neural Inference Network (NIN) for learning logical inference over classes of logic programs. Trained in an end-to-end fashion, NIN learns representations of normal logic programs, by processing them at a character level, and the reasoning algorithm for checking whether a logic program entails a given query. We define 12 classes of logic programs that exemplify increasing levels of complexity of the inference process (multi-hop and default reasoning) and show that our NIN passes 10 out of the 12 tasks. We also analyse the learnt representations of logic programs that NIN uses to perform the logical inference.
