Norberto Pires

High-level programming and control for industrial robotics: using a hand-held accelerometer-based input device for gesture and posture recognition

Sep 09, 2013

Abstract: Purpose - Most industrial robots are still programmed through the typical teaching process, using the robot teach pendant. This is a tedious and time-consuming task that requires some technical expertise, so new approaches to robot programming are needed. The purpose of this paper is to present a robotic system that allows users to instruct and program a robot with a high level of abstraction from the robot language. Design/methodology/approach - The paper presents in detail a robotic system that allows users, especially non-expert programmers, to instruct and program a robot simply by showing it what it should do, in an intuitive way. This is done using the two most natural human interfaces (gestures and speech), a force control system and several code generation techniques. Special attention is given to the recognition of gestures, where data from a motion sensor (a three-axis accelerometer) embedded in the Wii remote controller is used to capture human hand behaviours. Gestures (dynamic hand positions) as well as manual postures (static hand positions) are recognized using a statistical approach and artificial neural networks. Practical implications - The key contribution of this paper is that it offers a practical method to program robots by means of gestures and speech, improving work efficiency and saving time. Originality/value - This paper presents an alternative to the typical robot teaching process, extending the concept of human-robot interaction and the co-worker scenario. Since most companies do not have the engineering resources to make changes or add new functionalities to their robotic manufacturing systems, this system constitutes a major advantage for small- to medium-sized enterprises.

* Industrial Robot: An International Journal

Accelerometer-based control of an industrial robotic arm

Sep 09, 2013

Abstract: Most industrial robots are still programmed through the typical teaching process, using the robot teach pendant. This paper proposes an accelerometer-based system to control an industrial robot using two small, low-cost, wireless 3-axis accelerometers. The accelerometers are attached to the user's arms and capture their behavior (gestures and postures). An Artificial Neural Network (ANN) trained with a back-propagation algorithm is used to recognize arm gestures and postures, which are then used as input to control the robot. The aim is for the robot to start moving almost at the same time as the user starts to perform a gesture or posture (low response time). The results show that the system allows an industrial robot to be controlled in an intuitive way. However, the achieved recognition rate for gestures and postures (92%) should be improved in the future while preserving the trade-off with the system response time (160 milliseconds). Finally, the results of some tests performed with an industrial robot are presented and discussed.
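As a rough illustration of the recognition step described in this abstract, the following is a minimal sketch of a small feed-forward network trained with back-propagation to classify static postures from single 3-axis accelerometer readings. It is not the authors' network: the posture labels, the synthetic gravity-vector data, the layer sizes and the learning rate are all assumptions chosen for illustration.

```python
import math
import random

# Hypothetical posture labels; each posture is assumed to align gravity
# (about 1 g) with a different sensor axis.
POSTURES = ["palm_down", "palm_side", "pointing_up"]


def make_samples(n_per_class=30, noise=0.05, seed=1):
    """Generate synthetic accelerometer readings (in g), interleaving classes."""
    rng = random.Random(seed)
    samples, labels = [], []
    for _ in range(n_per_class):
        for label in range(len(POSTURES)):
            reading = [rng.gauss(1.0 if axis == label else 0.0, noise)
                       for axis in range(3)]
            samples.append(reading)
            labels.append(label)
    return samples, labels


def forward(w1, w2, x):
    """Forward pass: tanh hidden layer, softmax output. Last weight is the bias."""
    h = [math.tanh(sum(w[i] * x[i] for i in range(len(x))) + w[-1]) for w in w1]
    z = [sum(w[j] * h[j] for j in range(len(h))) + w[-1] for w in w2]
    z_max = max(z)
    exps = [math.exp(v - z_max) for v in z]
    total = sum(exps)
    return h, [v / total for v in exps]


def train(samples, labels, n_hidden=6, lr=0.1, epochs=200, seed=0):
    """Train with per-sample gradient descent using back-propagated errors."""
    rng = random.Random(seed)
    n_in, n_out = len(samples[0]), len(POSTURES)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            h, p = forward(w1, w2, x)
            # Output error for softmax with cross-entropy loss: p - one_hot(y).
            d_out = [p[k] - (1.0 if k == y else 0.0) for k in range(n_out)]
            # Propagate the error back through w2 and the tanh derivative.
            d_hid = [(1.0 - h[j] ** 2) * sum(d_out[k] * w2[k][j] for k in range(n_out))
                     for j in range(n_hidden)]
            for k in range(n_out):
                for j in range(n_hidden):
                    w2[k][j] -= lr * d_out[k] * h[j]
                w2[k][n_hidden] -= lr * d_out[k]
            for j in range(n_hidden):
                for i in range(n_in):
                    w1[j][i] -= lr * d_hid[j] * x[i]
                w1[j][n_in] -= lr * d_hid[j]
    return w1, w2


def predict(w1, w2, x):
    """Return the index of the most probable posture."""
    _, p = forward(w1, w2, x)
    return p.index(max(p))


def accuracy(w1, w2, samples, labels):
    """Fraction of samples whose predicted posture matches the label."""
    hits = sum(predict(w1, w2, x) == y for x, y in zip(samples, labels))
    return hits / len(samples)
```

Calling `train(*make_samples())` returns the two weight matrices, and `POSTURES[predict(w1, w2, reading)]` maps a new reading to a label. Dynamic gestures would need a window of readings rather than a single sample, which this sketch does not cover.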

Real-Time and Continuous Hand Gesture Spotting: an Approach Based on Artificial Neural Networks

Sep 09, 2013

Abstract: New and more natural human-robot interfaces are of crucial interest to the evolution of robotics. This paper addresses continuous and real-time hand gesture spotting, i.e., gesture segmentation plus gesture recognition. Gesture patterns are recognized using artificial neural networks (ANNs) specifically adapted to the process of controlling an industrial robot. Since in continuous gesture recognition the communicative gestures appear intermittently among the noncommunicative ones, we propose a new architecture with two ANNs in series to recognize both kinds of gesture. A data glove is used as the interface technology. Experimental results demonstrate that the proposed solution achieves high recognition rates (over 99% for a library of ten gestures and over 96% for a library of thirty gestures), low training and learning time, and a good capacity to generalize from particular situations.

Discretization and fitting of nominal data for autonomous robots

Sep 09, 2013

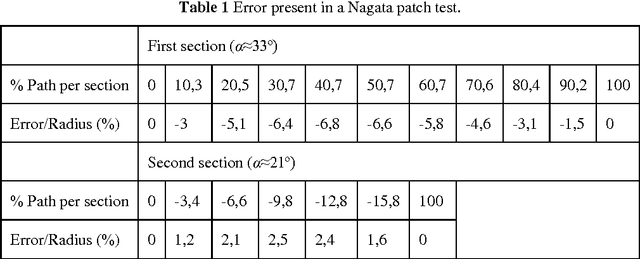

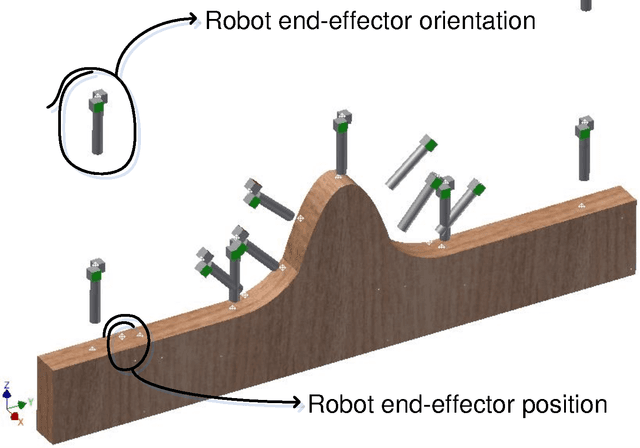

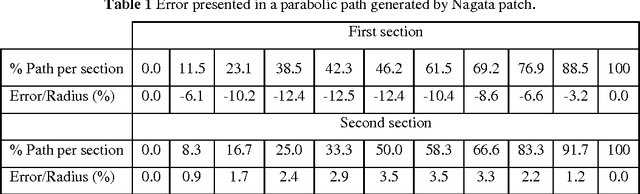

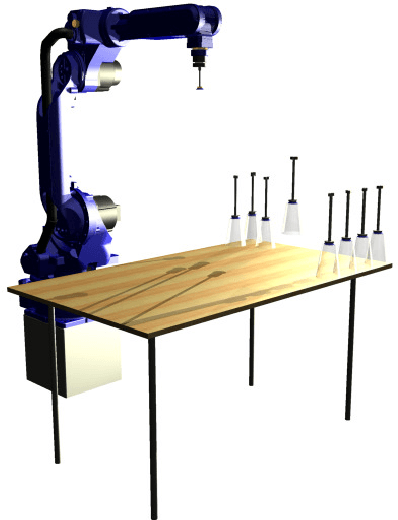

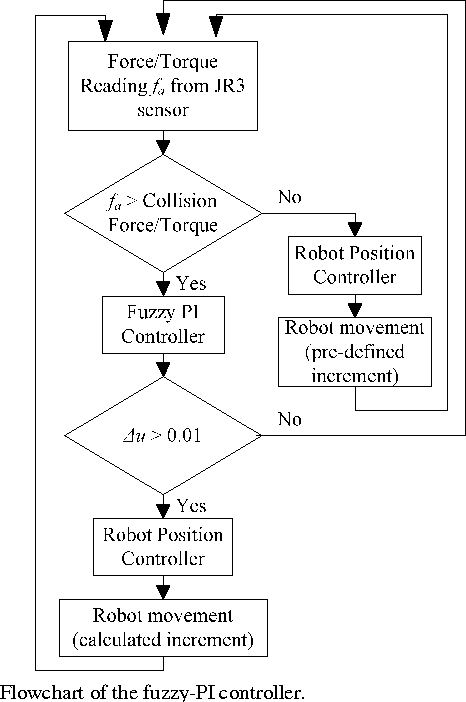

Abstract: This paper presents methodologies to discretize nominal robot paths extracted from 3-D CAD drawings. The motivation for robot path discretization is to let a robot adjust the paths it traverses so that contact between the robot tool and the work-piece is properly maintained. In addition, a hybrid force/motion control system based on Fuzzy-PI control is proposed to adjust robot paths with external sensory feedback. Together, these capabilities facilitate the robot programming process and increase the robot's autonomy.
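To make the idea of path discretization concrete, here is a minimal sketch that splits a nominal path, given as a list of 3-D waypoints, into points no farther apart than a chosen step. The abstract does not describe the paper's methodologies in detail, so the uniform linear interpolation, the function name `discretize_path` and the `max_step` parameter are assumptions for illustration only.

```python
import math


def discretize_path(waypoints, max_step):
    """Insert intermediate points so consecutive points are at most max_step apart.

    waypoints: sequence of (x, y, z) tuples describing a nominal polyline path.
    max_step:  maximum allowed distance between consecutive output points.
    """
    if max_step <= 0:
        raise ValueError("max_step must be positive")
    if not waypoints:
        return []
    path = [tuple(waypoints[0])]
    for start, end in zip(waypoints, waypoints[1:]):
        length = math.dist(start, end)
        if length == 0.0:
            continue  # skip repeated waypoints
        # Number of equal sub-segments needed to respect max_step.
        n = math.ceil(length / max_step)
        for i in range(1, n + 1):
            t = i / n
            path.append(tuple(a + t * (b - a) for a, b in zip(start, end)))
    return path
```

Each discretized point is a place where a controller could correct the nominal position using sensor feedback; a smaller `max_step` gives more correction points at the cost of a longer program.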

* Expert Systems with Applications

CAD-based robot programming: The role of Fuzzy-PI force control in unstructured environments

Sep 09, 2013

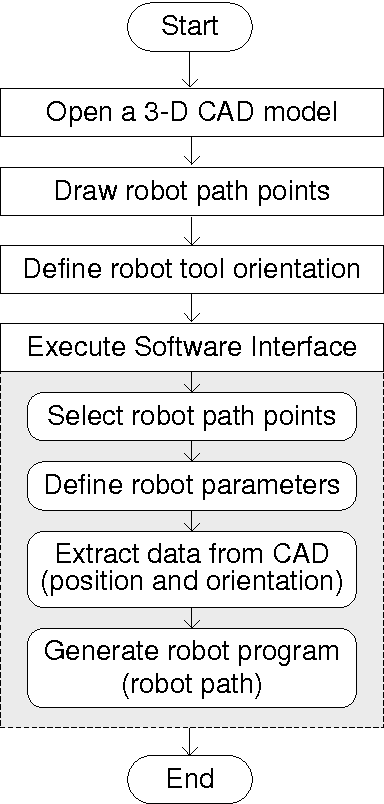

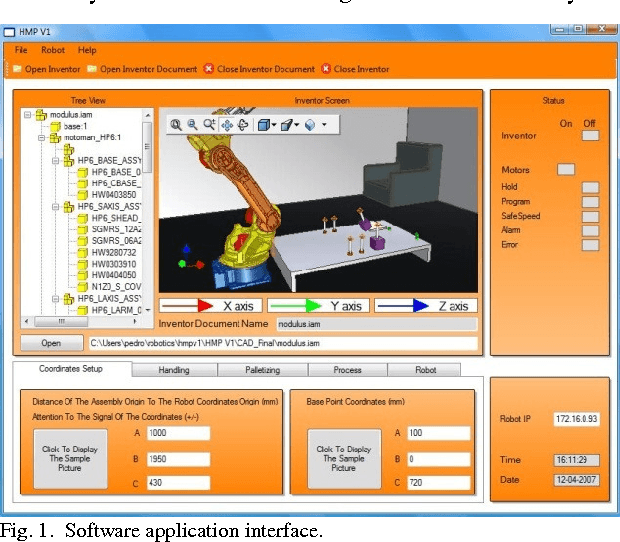

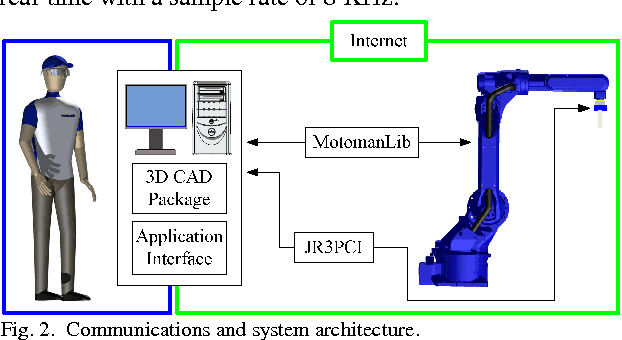

Abstract: There is a growing demand for new ways of interaction between humans and robots that allow a robot to be programmed intuitively, quickly and with a high level of abstraction from the robot language. This paper presents a CAD-based system that allows users with basic CAD skills and no robot programming skills to generate robot programs from a CAD model of a robotic cell. When the CAD model reproduces the real scenario exactly, the system performs satisfactorily. In contrast, when the CAD model does not reproduce the real scenario exactly, or the calibration process is poorly done, we are dealing with uncertainty (an unstructured environment). To minimize or eliminate these problems, sensory feedback (force and torque sensing) was introduced into the robotic framework. By controlling the end-effector pose and specifying its relationship to the interaction/contact forces, robot programmers can ensure that the robot maneuvers in an unstructured environment, damping possible impacts and also increasing the tolerance to positioning errors from the calibration process. Fuzzy-PI reasoning was used as the force control technique. The effectiveness of the proposed approach was evaluated in a series of experiments.
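The abstract does not give the controller design, so the following is only a minimal sketch of one common PI-type fuzzy structure: the force error and its change are fuzzified, a rule table produces an increment, and the increments are accumulated into a position correction. The three triangular membership sets, the rule table, the scaling gains `ge`, `gde`, `gu` and the class name are all assumptions, not the paper's tuning.

```python
def memberships(x):
    """Triangular memberships for Negative (-1), Zero (0), Positive (+1)."""
    x = max(-1.0, min(1.0, x))  # saturate the normalized input
    return {-1: max(0.0, -x), 0: 1.0 - abs(x), 1: max(0.0, x)}


def fuzzy_pi_increment(error, d_error, ge=1.0, gde=1.0, gu=1.0):
    """Return the control increment for a force error and its change.

    Rule table (assumed): the output set is the saturated sum of the two
    input sets, e.g. (Positive, Zero) -> Positive, (Positive, Negative) -> Zero.
    Inference uses the product, defuzzification a weighted average of singletons.
    """
    mu_e = memberships(ge * error)
    mu_de = memberships(gde * d_error)
    num = 0.0
    den = 0.0
    for c_e, w_e in mu_e.items():
        for c_de, w_de in mu_de.items():
            weight = w_e * w_de
            output = max(-1, min(1, c_e + c_de))
            num += weight * output
            den += weight
    return gu * num / den


class FuzzyPIForceController:
    """Accumulates fuzzy increments into a position correction along one axis."""

    def __init__(self, ge=1.0, gde=1.0, gu=1.0):
        self.gains = (ge, gde, gu)
        self.prev_error = 0.0
        self.correction = 0.0

    def step(self, force_desired, force_measured):
        """Update and return the correction for one control cycle."""
        error = force_desired - force_measured
        d_error = error - self.prev_error
        self.prev_error = error
        ge, gde, gu = self.gains
        self.correction += fuzzy_pi_increment(error, d_error, ge, gde, gu)
        return self.correction
```

Because the rule output is an increment that depends on both the error and its change, summing the increments over time yields proportional-plus-integral behaviour, which is why this structure is usually called PI-type. In practice the gains would be chosen so that typical force errors map into the range [-1, 1].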
