Niteesh Balachandra Midlagajni

MoGaze: A Dataset of Full-Body Motions that Includes Workspace Geometry and Eye-Gaze

Nov 23, 2020

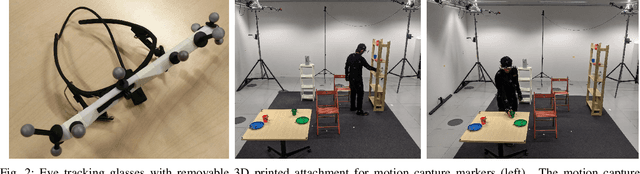

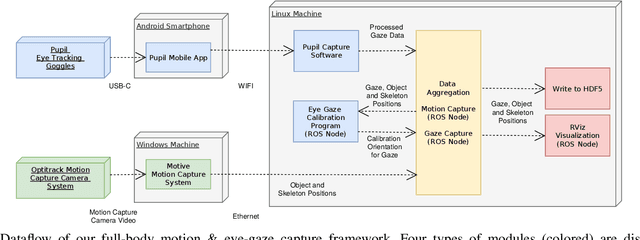

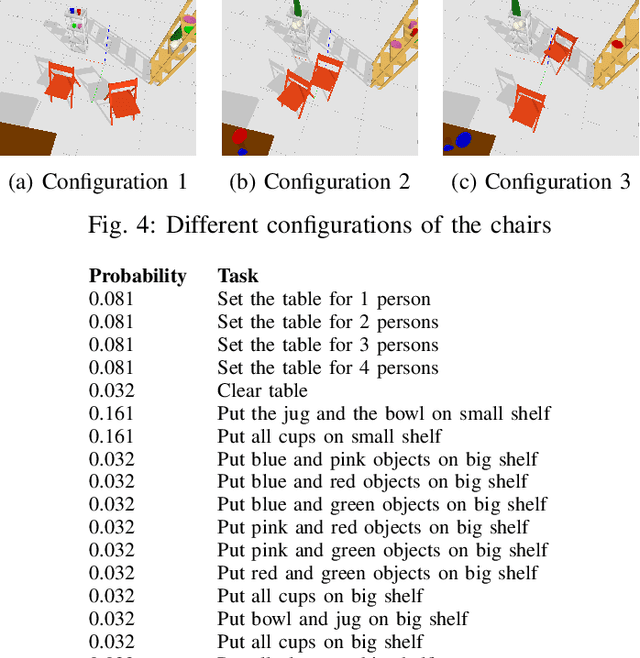

Abstract: As robots become more present in open human environments, it will become crucial for robotic systems to understand and predict human motion. Such capabilities depend heavily on the quality and availability of motion capture data. However, existing datasets of full-body motion rarely include 1) long sequences of manipulation tasks, 2) the 3D model of the workspace geometry, and 3) eye-gaze, which are all important when a robot needs to predict the movements of humans in close proximity. Hence, in this paper, we present a novel dataset of full-body motion for everyday manipulation tasks, which includes the above. The motion data was captured using a traditional motion capture system based on reflective markers. We additionally captured eye-gaze using a wearable pupil-tracking device. As we show in experiments, the dataset can be used for the design and evaluation of full-body motion prediction algorithms. Furthermore, our experiments show that eye-gaze is a powerful predictor of human intent. The dataset includes 180 min of motion capture data covering 1627 pick-and-place actions. It is available at https://humans-to-robots-motion.github.io/mogaze and is planned to be extended to collaborative tasks with two humans in the near future.

Anticipating Human Intention for Full-Body Motion Prediction in Object Grasping and Placing Tasks

Jul 20, 2020

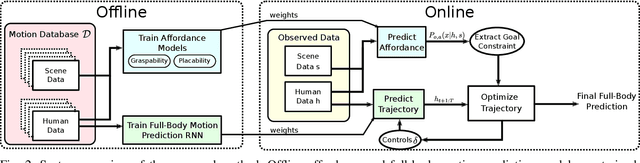

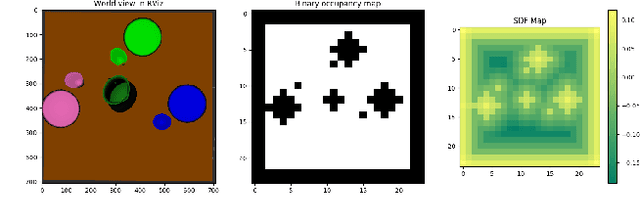

Abstract: Motion prediction in unstructured environments is a difficult problem and is essential for safe and efficient human-robot space sharing and collaboration. In this work, we focus on manipulation movements in environments such as homes, workplaces or restaurants, where the overall task and environment can be leveraged to produce accurate motion prediction. For these cases, we propose an algorithmic framework that explicitly accounts for the environment geometry, based on a model of affordances and a model of short-term human dynamics, both trained on motion capture data. We propose dedicated function networks for graspability and placeability affordances, and we make use of a dedicated RNN for short-term motion prediction. The predicted grasp and placement probability densities are used by a constraint-based trajectory optimizer to produce a full-body motion prediction over the entire horizon. We show, by comparing to ground-truth data, that our full-body motion predictions achieve performance similar to that obtained with oracle grasp and place locations.
