Ninad Hogade

Sustainable Carbon-Aware and Water-Efficient LLM Scheduling in Geo-Distributed Cloud Datacenters

May 29, 2025

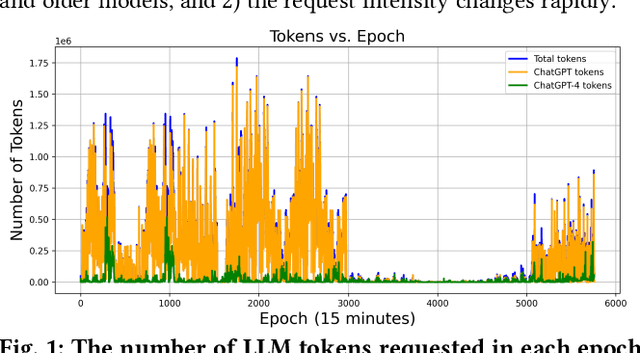

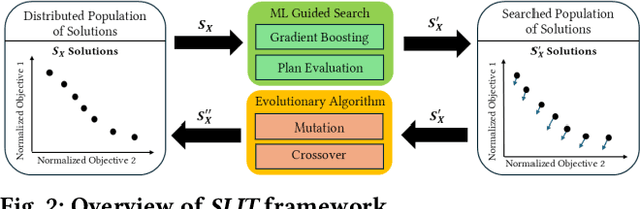

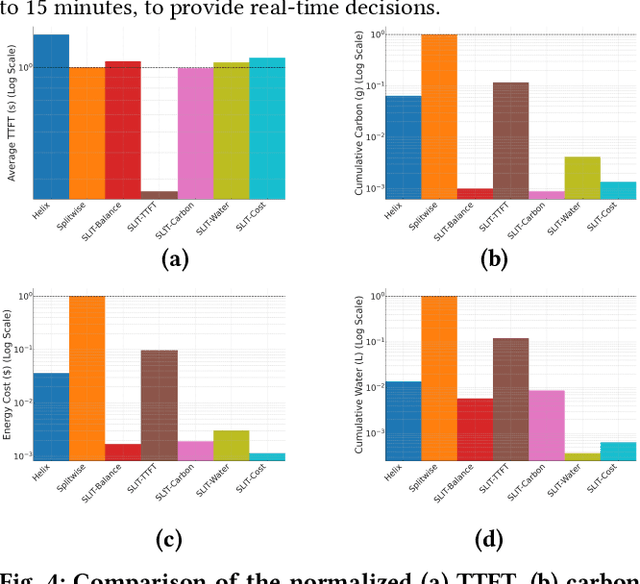

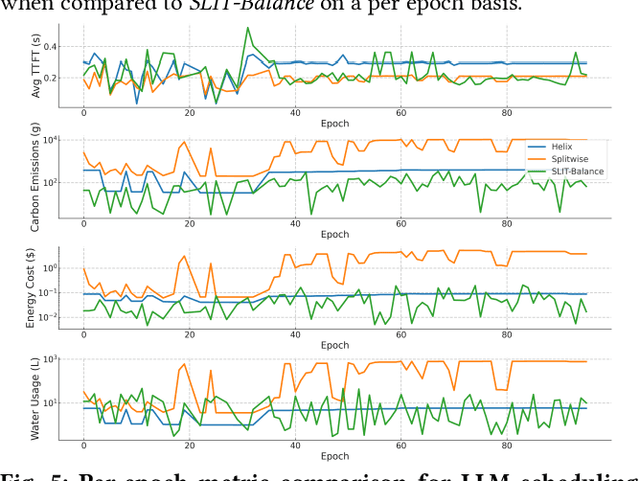

Abstract: In recent years, Large Language Models (LLMs) such as ChatGPT, CoPilot, and Gemini have been widely adopted in different areas. As the use of LLMs continues to grow, many efforts have focused on reducing the massive training overheads of these models. But it is the environmental impact of handling user requests to LLMs that is increasingly becoming a concern. Recent studies estimate that the costs of operating LLMs in their inference phase can exceed training costs by 25x per year. As LLMs are queried incessantly, the cumulative carbon footprint for the operational phase has been shown to far exceed the footprint during the training phase. Further, estimates indicate that 500 ml of fresh water is expended for every 20-50 requests to LLMs during inference. To address these important sustainability issues with LLMs, we propose a novel framework called SLIT to co-optimize LLM quality of service (time-to-first-token), carbon emissions, water usage, and energy costs. The framework utilizes a machine learning (ML) based metaheuristic to enhance the sustainability of LLM hosting across geo-distributed cloud datacenters. Such a framework will become increasingly vital as LLMs proliferate.

A Framework for SLO, Carbon, and Wastewater-Aware Sustainable FaaS Cloud Platform Management

Oct 09, 2024

Abstract: Function-as-a-Service (FaaS) is a growing cloud computing paradigm that is expected to reduce the user cost of service over traditional serverful approaches. However, the environmental impact of FaaS has not received much attention. We investigate FaaS scheduling and scaling from a sustainability perspective in this work. We find that the service-level objectives (SLOs) of FaaS and carbon emissions conflict with each other. We also find that SLO-focused FaaS scheduling can exacerbate water use in a datacenter. We propose a novel sustainability-focused FaaS scheduling and scaling framework to co-optimize SLO performance, carbon emissions, and wastewater generation.

Game-Theoretic Deep Reinforcement Learning to Minimize Carbon Emissions and Energy Costs for AI Inference Workloads in Geo-Distributed Data Centers

Apr 01, 2024

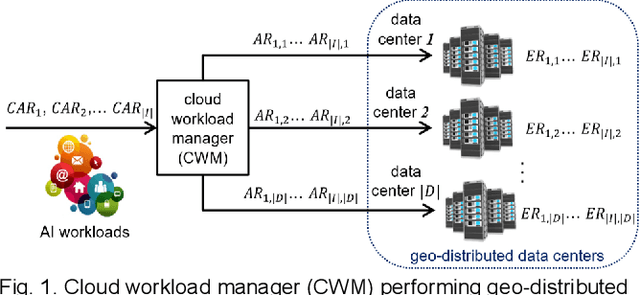

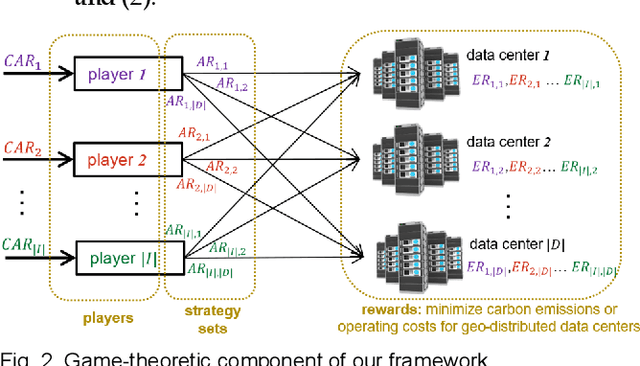

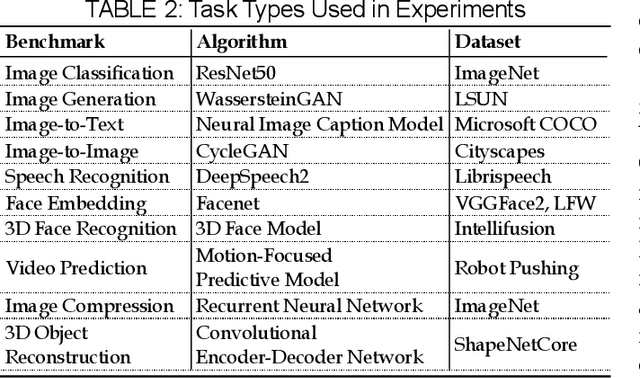

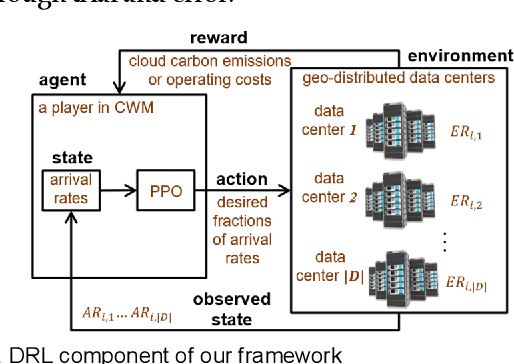

Abstract: Data centers are increasingly using more energy due to the rise in Artificial Intelligence (AI) workloads, which negatively impacts the environment and raises operational costs. Reducing operating expenses and carbon emissions while maintaining performance in data centers is a challenging problem. This work introduces a unique approach combining Game Theory (GT) and Deep Reinforcement Learning (DRL) for optimizing the distribution of AI inference workloads in geo-distributed data centers to reduce carbon emissions and cloud operating (energy + data transfer) costs. The proposed technique integrates the principles of non-cooperative Game Theory into a DRL framework, enabling data centers to make intelligent decisions regarding workload allocation while considering the heterogeneity of hardware resources, the dynamic nature of electricity prices, inter-data center data transfer costs, and carbon footprints. We conducted extensive experiments comparing our game-theoretic DRL (GT-DRL) approach with current DRL-based and other optimization techniques. The results demonstrate that our strategy outperforms the state-of-the-art in reducing carbon emissions and minimizing cloud operating costs without compromising computational performance. This work has significant implications for achieving sustainability and cost-efficiency in data centers handling AI inference workloads across diverse geographic locations.

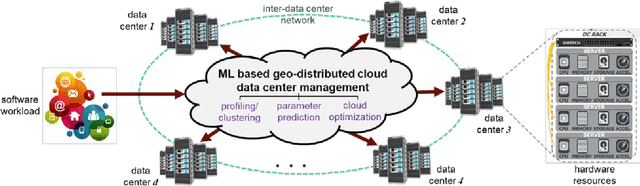

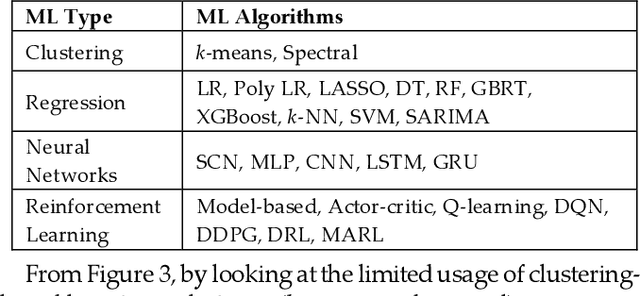

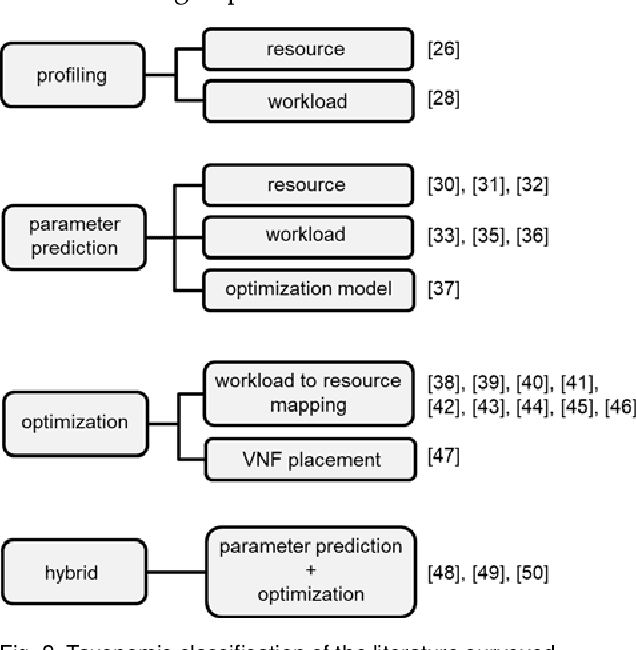

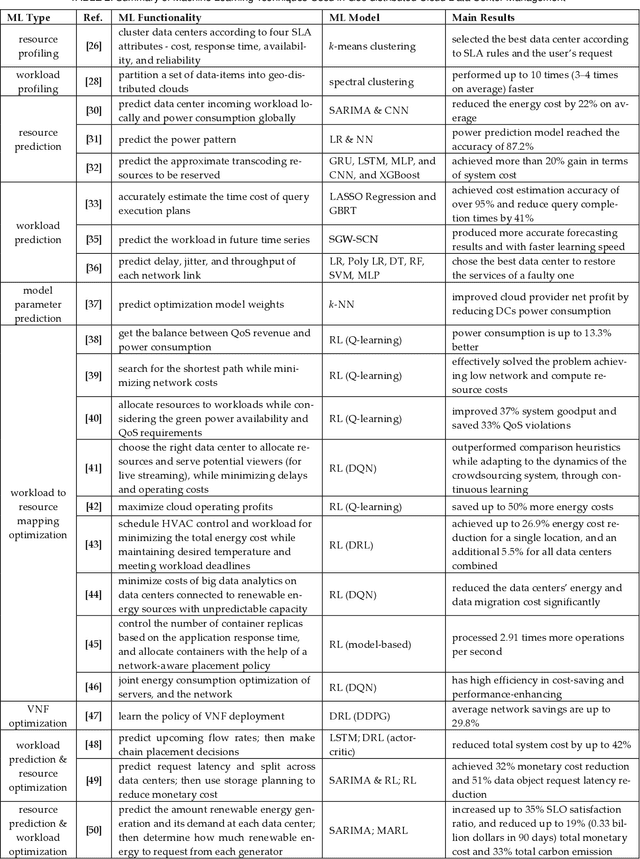

A Survey on Machine Learning for Geo-Distributed Cloud Data Center Management

May 17, 2022

Abstract: Cloud workloads today are typically managed in a distributed environment and processed across geographically distributed data centers. Cloud service providers have been distributing data centers globally to reduce operating costs while also improving quality of service by using intelligent workload and resource management strategies. Such large-scale and complex orchestration of software workloads and hardware resources remains a difficult problem to solve efficiently. Researchers and practitioners have been trying to address this problem by proposing a variety of cloud management techniques. Mathematical optimization techniques have historically been used to address cloud management issues. But these techniques are difficult to scale to geo-distributed problem sizes and have limited applicability in dynamic heterogeneous system environments, forcing cloud service providers to explore intelligent data-driven and Machine Learning (ML) based alternatives. The characterization, prediction, control, and optimization of complex, heterogeneous, and ever-changing distributed cloud resources and workloads employing ML methodologies have received much attention in recent years. In this article, we review the state-of-the-art ML techniques for the cloud data center management problem. We examine the challenges and the issues in current research focused on ML for cloud management and explore strategies for addressing these issues. We also discuss advantages and disadvantages of ML techniques presented in the recent literature and make recommendations for future research directions.
