Game-Theoretic Deep Reinforcement Learning to Minimize Carbon Emissions and Energy Costs for AI Inference Workloads in Geo-Distributed Data Centers


Apr 01, 2024

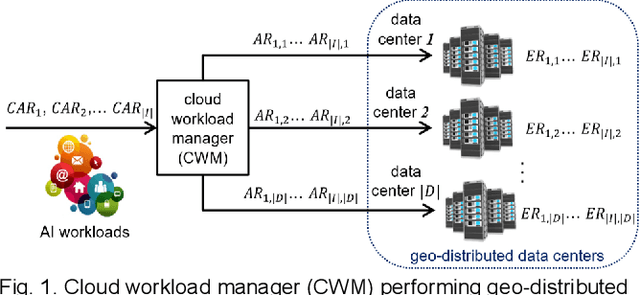

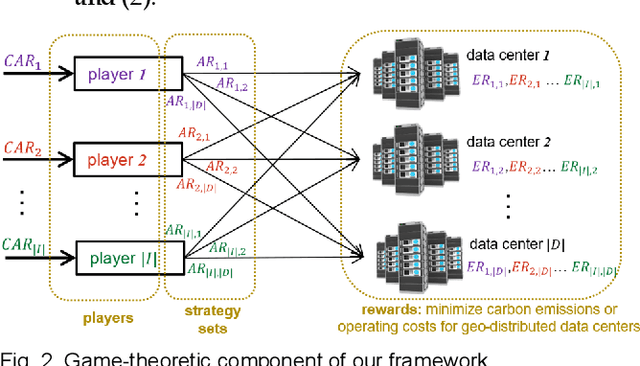

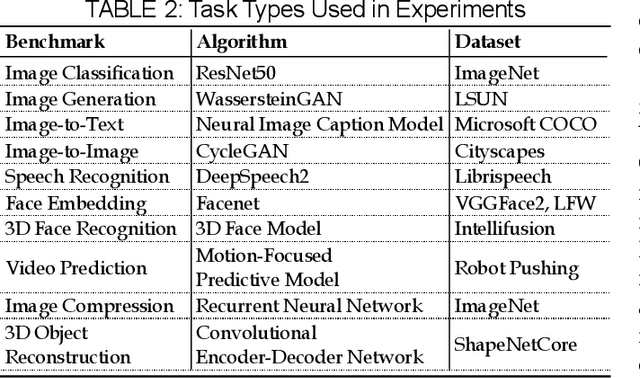

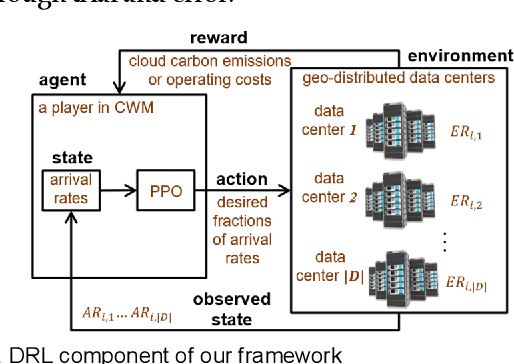

Data centers are consuming more energy as Artificial Intelligence (AI) workloads grow, which harms the environment and raises operational costs. Reducing operating expenses and carbon emissions while maintaining performance is a challenging problem. This work introduces an approach that combines Game Theory (GT) and Deep Reinforcement Learning (DRL) to optimize the distribution of AI inference workloads across geo-distributed data centers, with the goal of reducing carbon emissions and cloud operating costs (energy plus data transfer). The proposed technique integrates the principles of non-cooperative Game Theory into a DRL framework, enabling data centers to make workload allocation decisions that account for the heterogeneity of hardware resources, the dynamic nature of electricity prices, inter-data center data transfer costs, and carbon footprints. We conducted extensive experiments comparing our game-theoretic DRL (GT-DRL) approach with current DRL-based and other optimization techniques. The results demonstrate that our strategy outperforms the state of the art in reducing carbon emissions and minimizing cloud operating costs without compromising computational performance. This work has significant implications for achieving sustainability and cost-efficiency in data centers handling AI inference workloads across diverse geographic locations.
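To make the trade-off in the abstract concrete, the following is a minimal sketch of a per-request cost that combines energy cost, data transfer cost, and a priced carbon term. It is not the GT-DRL method itself, which the abstract does not specify in detail. The `DataCenter` fields, the `carbon_weight` parameter, and the helper names `request_cost` and `cheapest_site` are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenter:
    """Hypothetical per-site parameters; none of these names come from the paper."""
    name: str
    energy_per_request_kwh: float     # reflects hardware heterogeneity
    price_per_kwh: float              # local electricity price
    carbon_kg_per_kwh: float          # grid carbon intensity
    transfer_cost_per_request: float  # inter-data center data transfer cost


def request_cost(dc: DataCenter, carbon_weight: float) -> float:
    """Monetary cost of one request plus carbon priced at carbon_weight per kg."""
    energy_cost = dc.energy_per_request_kwh * dc.price_per_kwh
    carbon_kg = dc.energy_per_request_kwh * dc.carbon_kg_per_kwh
    return energy_cost + dc.transfer_cost_per_request + carbon_weight * carbon_kg


def cheapest_site(sites: list[DataCenter], carbon_weight: float) -> DataCenter:
    """Greedy choice of the site with the lowest weighted cost (assumed baseline)."""
    return min(sites, key=lambda dc: request_cost(dc, carbon_weight))


if __name__ == "__main__":
    sites = [
        DataCenter("A", 0.002, 0.10, 0.5, 0.0001),
        DataCenter("B", 0.003, 0.05, 0.1, 0.0002),
    ]
    # Ignoring carbon favors one site; pricing carbon can favor another.
    print(cheapest_site(sites, carbon_weight=0.0).name)
    print(cheapest_site(sites, carbon_weight=1.0).name)
```

A greedy rule like this ignores capacity limits and the interaction between sites, which is presumably where the non-cooperative game and the learned policy described in the abstract come in.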
