Nikola Surjanovic

AutoSGD: Automatic Learning Rate Selection for Stochastic Gradient Descent

May 27, 2025

Abstract: The learning rate is an important tuning parameter for stochastic gradient descent (SGD) and can greatly influence its performance. However, appropriate selection of a learning rate schedule across all iterations typically requires a non-trivial amount of user tuning effort. To address this, we introduce AutoSGD: an SGD method that automatically determines whether to increase or decrease the learning rate at a given iteration and then takes appropriate action. We introduce theory supporting the convergence of AutoSGD, along with its deterministic counterpart for standard gradient descent. Empirical results suggest strong performance of the method on a variety of traditional optimization problems and machine learning tasks.

AutoStep: Locally adaptive involutive MCMC

Oct 24, 2024

Abstract: Many common Markov chain Monte Carlo (MCMC) kernels can be formulated using a deterministic involutive proposal with a step size parameter. Selecting an appropriate step size is often a challenging task in practice, and for complex multiscale targets, there may not be one choice of step size that works well globally. In this work, we address this problem with a novel class of involutive MCMC methods, AutoStep MCMC, that selects an appropriate step size at each iteration adapted to the local geometry of the target distribution. We prove that AutoStep MCMC is $\pi$-invariant and has other desirable properties under mild assumptions on the target distribution $\pi$ and involutive proposal. Empirical results examine the effect of various step size selection design choices, and show that AutoStep MCMC is competitive with state-of-the-art methods in terms of effective sample size per unit cost on a range of challenging target distributions.

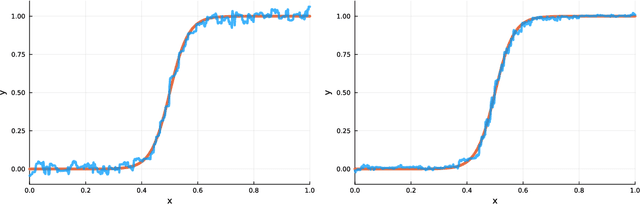

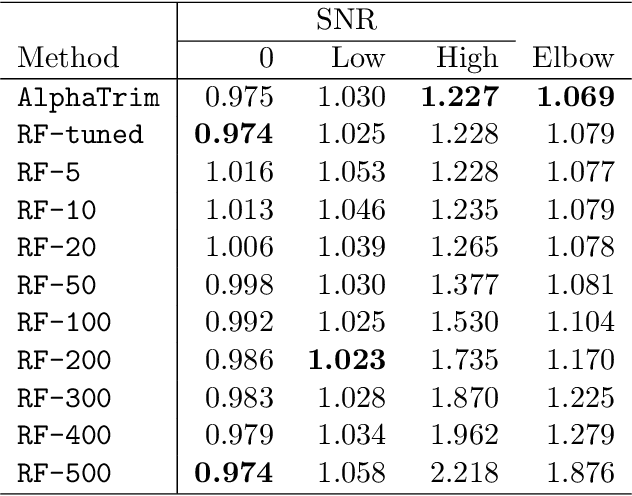

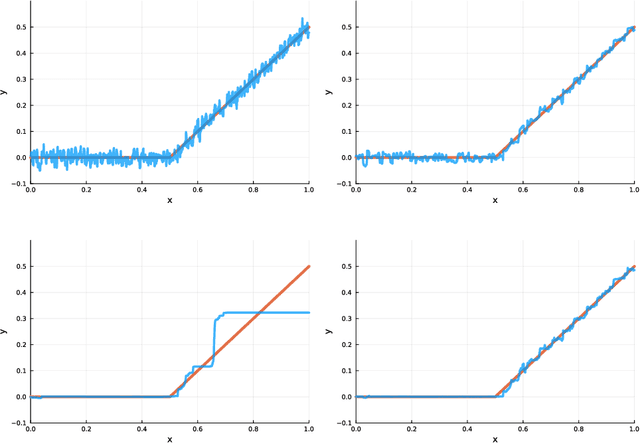

Alpha-Trimming: Locally Adaptive Tree Pruning for Random Forests

Aug 13, 2024

Abstract: We demonstrate that adaptively controlling the size of individual regression trees in a random forest can improve predictive performance, contrary to the conventional wisdom that trees should be fully grown. A fast pruning algorithm, alpha-trimming, is proposed as an effective approach to pruning trees within a random forest, where more aggressive pruning is performed in regions with a low signal-to-noise ratio. The amount of overall pruning is controlled by adjusting the weight on an information criterion penalty as a tuning parameter, with the standard random forest being a special case of our alpha-trimmed random forest. A remarkable feature of alpha-trimming is that its tuning parameter can be adjusted without refitting the trees in the random forest after they have been fully grown once. In a benchmark suite of 46 example data sets, mean squared prediction error is often substantially lowered by using our pruning algorithm and is never substantially increased compared to a random forest with fully-grown trees at default parameter settings.

MCMC-driven learning

Feb 14, 2024

Abstract: This paper is intended to appear as a chapter for the Handbook of Markov Chain Monte Carlo. The goal of this chapter is to unify various problems at the intersection of Markov chain Monte Carlo (MCMC) and machine learning (which include black-box variational inference, adaptive MCMC, normalizing flow construction and transport-assisted MCMC, surrogate-likelihood MCMC, coreset construction for MCMC with big data, Markov chain gradient descent, Markovian score climbing, and more) within one common framework. By doing so, the theory and methods developed for each may be translated and generalized.
