Niklas Corell

ASDF: Assembly State Detection Utilizing Late Fusion by Integrating 6D Pose Estimation

Mar 25, 2024

Abstract: In medical and industrial domains, providing guidance for assembly processes is critical to ensure efficiency and safety. Errors in assembly can lead to significant consequences such as extended surgery times and prolonged manufacturing or maintenance times in industry. Assembly scenarios can benefit from in-situ AR visualization to provide guidance, reduce assembly times, and minimize errors. To enable in-situ visualization, 6D pose estimation can be leveraged. Existing 6D pose estimation techniques primarily focus on individual objects and static captures. However, assembly scenarios have various dynamics, including occlusion during assembly and changes in the assembly objects' appearance. Existing work combining object detection/6D pose estimation and assembly state detection either focuses on pure deep learning-based approaches or limits the assembly state detection to building blocks. To address the challenges of 6D pose estimation in combination with assembly state detection, our approach ASDF builds upon the strengths of YOLOv8, a real-time capable object detection framework. We extend this framework, refine the object pose, and fuse pose knowledge with network-detected pose information. Utilizing late fusion in our Pose2State module results in refined 6D pose estimation and assembly state detection. By combining both pose and state information, our Pose2State module predicts the final assembly state with precision. Our evaluation on our ASDF dataset shows that our Pose2State module leads to improved assembly state detection and that the improved assembly state detection in turn leads to more robust 6D pose estimation. Moreover, on the GBOT dataset, we outperform the pure deep learning-based network, and even outperform the hybrid and pure tracking-based approaches.

GBOT: Graph-Based 3D Object Tracking for Augmented Reality-Assisted Assembly Guidance

Feb 12, 2024

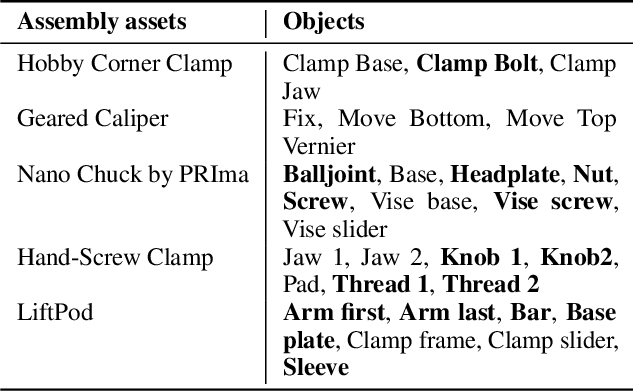

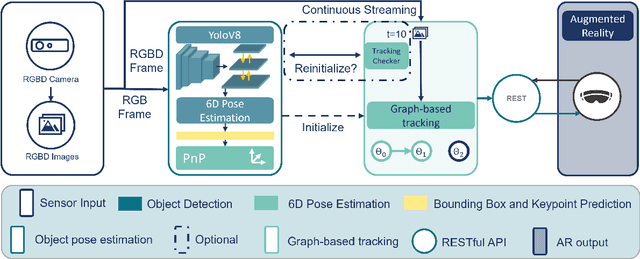

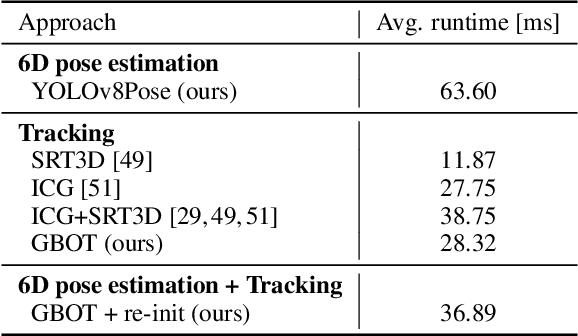

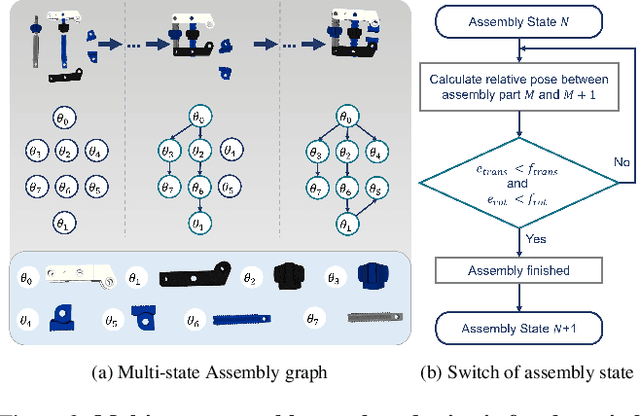

Abstract: Guidance for assemblable parts is a promising field for augmented reality. Augmented reality assembly guidance requires 6D object poses of target objects in real time. Especially in time-critical medical or industrial settings, continuous and markerless tracking of individual parts is essential to visualize instructions superimposed on or next to the target object parts. In this regard, occlusions by the user's hand or other objects and the complexity of different assembly states complicate robust and real-time markerless multi-object tracking. To address this problem, we present Graph-based Object Tracking (GBOT), a novel graph-based single-view RGB-D tracking approach. The real-time markerless multi-object tracking is initialized via 6D pose estimation and updates the graph-based assembly poses. The tracking through various assembly states is achieved by our novel multi-state assembly graph. We update the multi-state assembly graph by utilizing the relative poses of the individual assembly parts. Linking the individual objects in this graph enables more robust object tracking during the assembly process. For evaluation, we introduce a synthetic dataset of publicly available and 3D printable assembly assets as a benchmark for future work. Quantitative experiments on synthetic data and a further qualitative study on real test data show that GBOT can outperform existing work towards enabling context-aware augmented reality assembly guidance. Dataset and code will be made publicly available.
