Nihad Karim Chowdhury

Robust COVID-19 Detection from Cough Sounds using Deep Neural Decision Tree and Forest: A Comprehensive Cross-Datasets Evaluation

Jan 02, 2025

Abstract: This research presents a robust approach to classifying COVID-19 cough sounds using cutting-edge machine-learning techniques. Leveraging deep neural decision trees and deep neural decision forests, our methodology demonstrates consistent performance across diverse cough sound datasets. We begin with comprehensive feature extraction to capture a wide range of audio features from individuals, whether COVID-19 positive or negative. To determine the most important features, we use recursive feature elimination with cross-validation. Bayesian optimization fine-tunes the hyper-parameters of the deep neural decision tree and deep neural decision forest models. Additionally, we integrate SMOTE during training to ensure a balanced representation of positive and negative data. Model performance is refined through threshold optimization, maximizing the ROC-AUC score. Our approach undergoes a comprehensive evaluation on five datasets: Cambridge, Coswara, COUGHVID, Virufy, and the combined Virufy with the NoCoCoDa dataset. Consistently outperforming state-of-the-art methods, our proposed approach yields notable AUC scores of 0.97, 0.98, 0.92, 0.93, 0.99, and 0.99 across the respective datasets. Merging all datasets into a combined dataset, our method, using a deep neural decision forest classifier, achieves an AUC of 0.97. Our study also includes a comprehensive cross-dataset analysis, revealing demographic and geographic differences in the cough sounds associated with COVID-19. These differences highlight the challenges in transferring learned features across diverse datasets and underscore the potential benefits of dataset integration, improving generalizability and enhancing COVID-19 detection from audio signals.
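The threshold optimization step mentioned in the abstract can be illustrated with a small sketch. The abstract does not state which criterion is used to pick the threshold, so the example below assumes Youden's J statistic (TPR minus FPR) as one common ROC-based choice; the function name `best_threshold` and the toy scores are hypothetical and not taken from the paper.

```python
def best_threshold(y_true, scores):
    """Return (threshold, J) maximizing Youden's J = TPR - FPR.

    Assumption: labels are 0/1 and a sample is predicted positive
    when its score is >= threshold. This criterion is illustrative;
    the paper's exact selection rule is not given in the abstract.
    """
    positives = sum(1 for y in y_true if y == 1)
    negatives = len(y_true) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative sample")

    best_t, best_j = None, float("-inf")
    # Every distinct score is a candidate threshold.
    for t in sorted(set(scores)):
        tp = sum(1 for y, s in zip(y_true, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(y_true, scores) if y == 0 and s >= t)
        j = tp / positives - fp / negatives
        if j > best_j:  # strict ">" keeps the lowest threshold on ties
            best_t, best_j = t, j
    return best_t, best_j


# Toy validation scores (made up for illustration only).
labels = [0, 0, 1, 1]
probs = [0.1, 0.2, 0.8, 0.9]
threshold, j_stat = best_threshold(labels, probs)
```

In practice the threshold would be chosen on a validation split and then applied unchanged to the test data, so that the reported scores are not tuned on the evaluation set.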

MMTF-DES: A Fusion of Multimodal Transformer Models for Desire, Emotion, and Sentiment Analysis of Social Media Data

Oct 22, 2023

Abstract: Desire is a set of human aspirations and wishes that comprise verbal and cognitive aspects that drive human feelings and behaviors, distinguishing humans from other animals. Understanding human desire has the potential to be one of the most fascinating and challenging research domains. It is tightly coupled with sentiment analysis and emotion recognition tasks. It is beneficial for improving human-computer interaction, recognizing human emotional intelligence, understanding interpersonal relationships, and making decisions. However, understanding human desire is challenging and under-explored because ways of eliciting desire might differ among humans. The task becomes more difficult due to diverse cultures, countries, and languages. Prior studies overlooked the use of image-text pairwise feature representation, which is crucial for the task of human desire understanding. In this research, we propose a unified multimodal transformer-based framework with image-text pair settings to identify human desire, sentiment, and emotion. The core of our proposed method lies in the encoder module, which is built using two state-of-the-art multimodal transformer models. These models allow us to extract diverse features. To effectively extract visual and contextualized embedding features from social media image and text pairs, we conduct joint fine-tuning of two pre-trained multimodal transformer models: Vision-and-Language Transformer (ViLT) and Vision-and-Augmented-Language Transformer (VAuLT). Subsequently, we use an early fusion strategy on these embedding features to obtain combined diverse feature representations of the image-text pair. This consolidation incorporates diverse information about this task, enabling us to robustly perceive the context and image pair from multiple perspectives.

An Ensemble-based Multi-Criteria Decision Making Method for COVID-19 Cough Classification

Oct 01, 2021

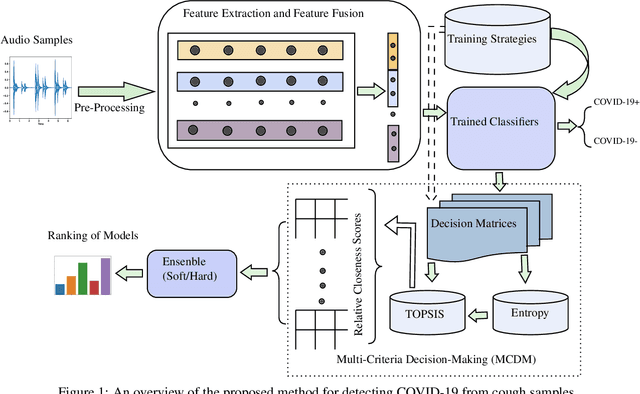

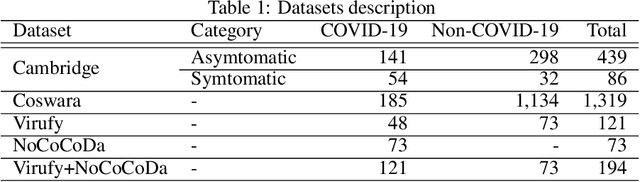

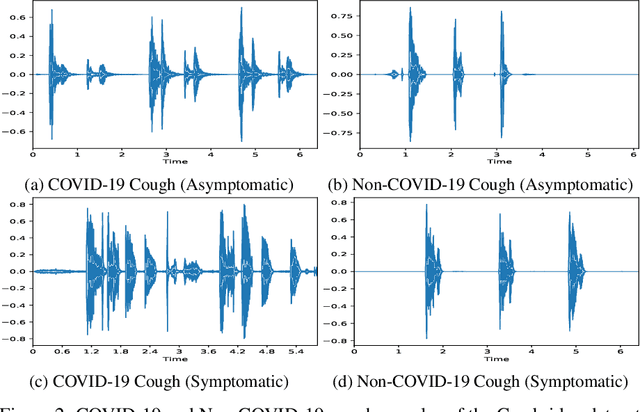

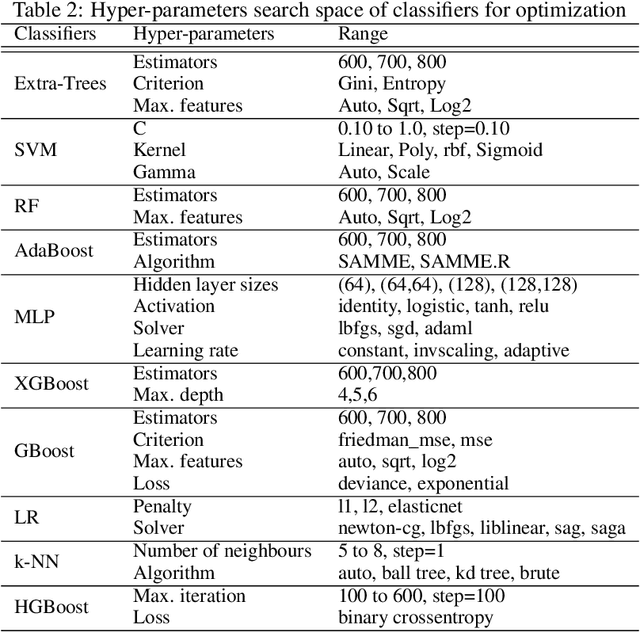

Abstract: The objectives of this research are to analyse the performance of state-of-the-art machine learning techniques for classifying COVID-19 from cough sounds and to identify the model(s) that consistently perform well across different cough datasets. Different performance evaluation metrics (such as precision, sensitivity, specificity, AUC, and accuracy) make it difficult to select the best-performing model. To address this issue, in this paper, we propose an ensemble-based multi-criteria decision making (MCDM) method for selecting the top-performing machine learning technique(s) for COVID-19 cough classification. We use four cough datasets, namely Cambridge, Coswara, Virufy, and NoCoCoDa, to verify the proposed method. First, our proposed method uses the audio features of cough samples and then applies machine learning (ML) techniques to classify them as COVID-19 or non-COVID-19. Then, we consider an MCDM method that combines ensemble technologies (i.e., soft and hard) to select the best model. In MCDM, we use the technique for order preference by similarity to ideal solution (TOPSIS) for ranking purposes, while entropy is applied to calculate evaluation criteria weights. In addition, we apply feature reduction through recursive feature elimination with cross-validation under different estimators. The results of our empirical evaluations show that the proposed method outperforms the state-of-the-art models.
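The combination of entropy-based criteria weights and TOPSIS ranking described in the abstract can be sketched as follows. This is a minimal illustration of the standard textbook forms of both techniques, not the authors' implementation: it assumes every metric is a benefit criterion (higher is better) with strictly positive values, and the function names and the toy decision matrix are hypothetical.

```python
import math


def entropy_weights(matrix):
    """Entropy weights for a decision matrix (rows: models, columns: metrics).

    Assumes at least two rows and strictly positive entries.
    """
    m, n = len(matrix), len(matrix[0])
    divergences = []
    for j in range(n):
        column = [row[j] for row in matrix]
        total = sum(column)
        probs = [x / total for x in column]
        entropy = -sum(p * math.log(p) for p in probs if p > 0) / math.log(m)
        # A metric that separates the models more has lower entropy
        # and therefore receives a larger weight.
        divergences.append(max(0.0, 1.0 - entropy))
    total_div = sum(divergences)
    if total_div <= 1e-12:  # all metrics uninformative: fall back to equal weights
        return [1.0 / n] * n
    return [d / total_div for d in divergences]


def topsis(matrix, weights):
    """Closeness of each row to the ideal solution (1.0 is best)."""
    n = len(weights)
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n)]
    weighted = [[weights[j] * row[j] / norms[j] for j in range(n)] for row in matrix]
    ideal = [max(row[j] for row in weighted) for j in range(n)]
    anti_ideal = [min(row[j] for row in weighted) for j in range(n)]
    scores = []
    for row in weighted:
        d_best = math.dist(row, ideal)
        d_worst = math.dist(row, anti_ideal)
        denom = d_best + d_worst
        scores.append(d_worst / denom if denom > 0 else 0.5)
    return scores


# Toy metrics (made up): columns are accuracy, sensitivity, AUC.
decision_matrix = [
    [0.95, 0.90, 0.97],  # model A
    [0.80, 0.85, 0.88],  # model B
    [0.70, 0.60, 0.75],  # model C
]
weights = entropy_weights(decision_matrix)
closeness = topsis(decision_matrix, weights)
ranking = sorted(range(len(closeness)), key=lambda i: closeness[i], reverse=True)
```

A cost criterion (lower is better) would need the ideal and anti-ideal values swapped for that column; the sketch omits this for brevity.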

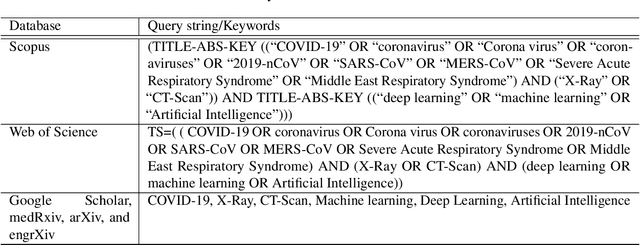

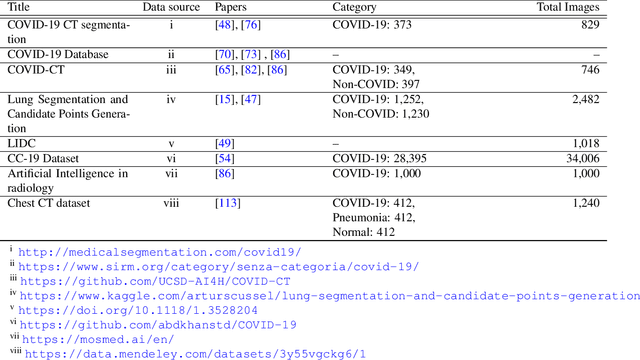

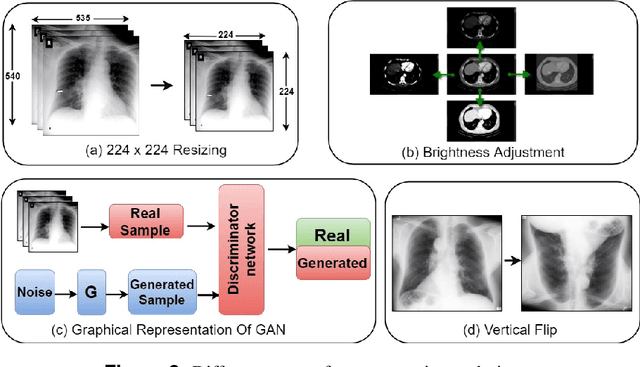

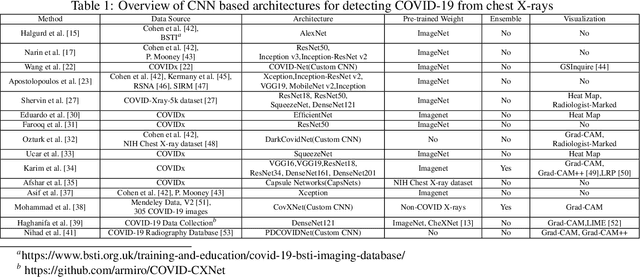

A Survey of Machine Learning Techniques for Detecting and Diagnosing COVID-19 from Imaging

Jul 25, 2021

Abstract: Due to the limited availability and high cost of the reverse transcription-polymerase chain reaction (RT-PCR) test, many studies have proposed machine learning techniques for detecting COVID-19 from medical imaging. The purpose of this study is to systematically review, assess, and synthesize research articles that have used different machine learning techniques to detect and diagnose COVID-19 from chest X-ray and CT scan images. A structured literature search was conducted in the relevant bibliographic databases to ensure that the survey solely centered on reproducible and high-quality research. In this survey, we reviewed 98 articles that fulfilled our inclusion criteria. We have surveyed a complete pipeline of chest imaging analysis techniques related to COVID-19, including data collection, pre-processing, feature extraction, classification, and visualization. We have considered CT scans and X-rays, as both are widely used, to describe the latest developments in medical imaging for detecting COVID-19. This survey provides researchers with valuable insights into different machine learning techniques and their performance in the detection and diagnosis of COVID-19 from chest imaging. Finally, the challenges and limitations in detecting COVID-19 using machine learning techniques and the future direction of research are discussed.

* 23 pages, 6 figures, accepted in Quantitative Biology

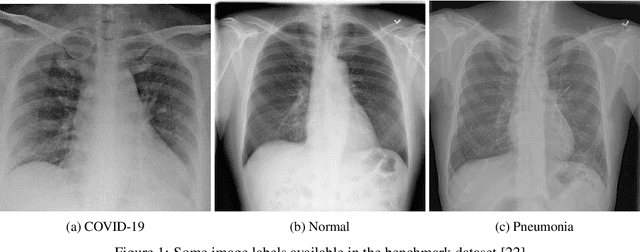

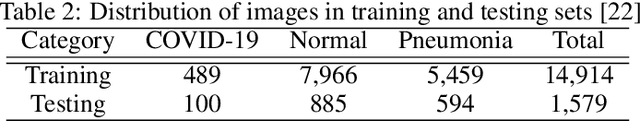

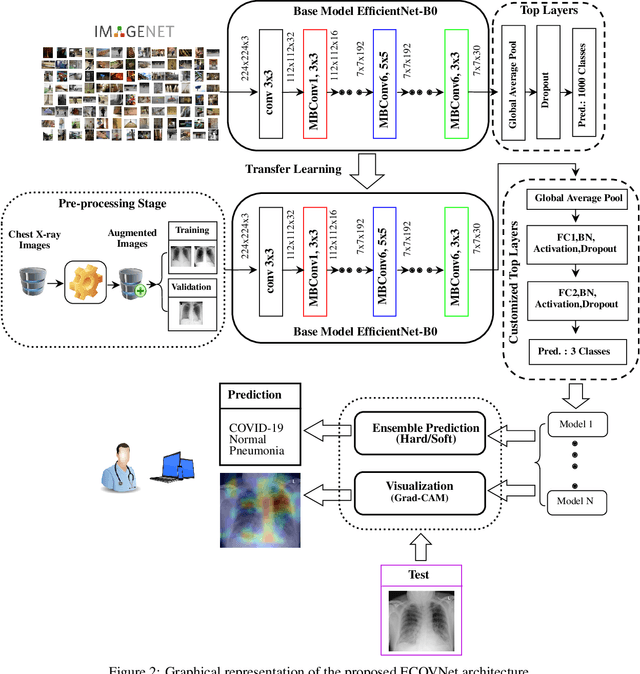

ECOVNet: An Ensemble of Deep Convolutional Neural Networks Based on EfficientNet to Detect COVID-19 From Chest X-rays

Oct 16, 2020

Abstract: This paper proposes an ensemble of deep convolutional neural networks (CNN) based on EfficientNet, named ECOVNet, to detect COVID-19 using a large chest X-ray data set. First, the open-access large chest X-ray collection is augmented. Then, ImageNet pre-trained weights for EfficientNet are transferred and customized top layers are fine-tuned, followed by an ensemble of model snapshots to classify chest X-rays as COVID-19, normal, or pneumonia. The predictions of the model snapshots, which are created during a single training run, are combined through two ensemble strategies, i.e., hard ensemble and soft ensemble, to improve classification performance and generalization in the related task of classifying chest X-rays.
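The difference between the two ensemble strategies named in the abstract can be shown with a short sketch. It assumes the usual definitions (soft ensemble averages the class probabilities of the snapshots, hard ensemble takes a majority vote over their predicted labels); the tie-breaking rule, the function names, and the toy probabilities are assumptions made for illustration and are not taken from the paper.

```python
from collections import Counter


def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=values.__getitem__)


def soft_ensemble(snapshot_probs):
    """Average class probabilities over snapshots, then pick the top class."""
    n_models = len(snapshot_probs)
    n_samples = len(snapshot_probs[0])
    n_classes = len(snapshot_probs[0][0])
    preds = []
    for i in range(n_samples):
        mean = [
            sum(probs[i][c] for probs in snapshot_probs) / n_models
            for c in range(n_classes)
        ]
        preds.append(argmax(mean))
    return preds


def hard_ensemble(snapshot_probs):
    """Majority vote over each snapshot's predicted class.

    Ties are broken in favour of the lowest class index (an assumption).
    """
    n_samples = len(snapshot_probs[0])
    preds = []
    for i in range(n_samples):
        votes = Counter(argmax(probs[i]) for probs in snapshot_probs)
        top = max(votes.values())
        preds.append(min(c for c, v in votes.items() if v == top))
    return preds


# Toy outputs of three snapshots for two images.
# Class order (assumed): 0 = COVID-19, 1 = normal, 2 = pneumonia.
snapshots = [
    [[0.90, 0.05, 0.05], [0.1, 0.2, 0.7]],
    [[0.40, 0.50, 0.10], [0.1, 0.2, 0.7]],
    [[0.40, 0.50, 0.10], [0.1, 0.2, 0.7]],
]
soft_labels = soft_ensemble(snapshots)  # one confident snapshot sways image 1
hard_labels = hard_ensemble(snapshots)  # two of three snapshots outvote it
```

For the first toy image the two strategies disagree: a single highly confident snapshot dominates the averaged probabilities, while the majority vote ignores confidence.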

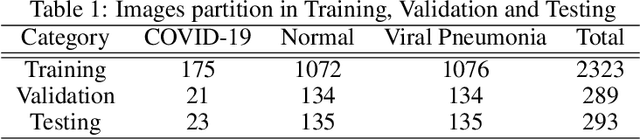

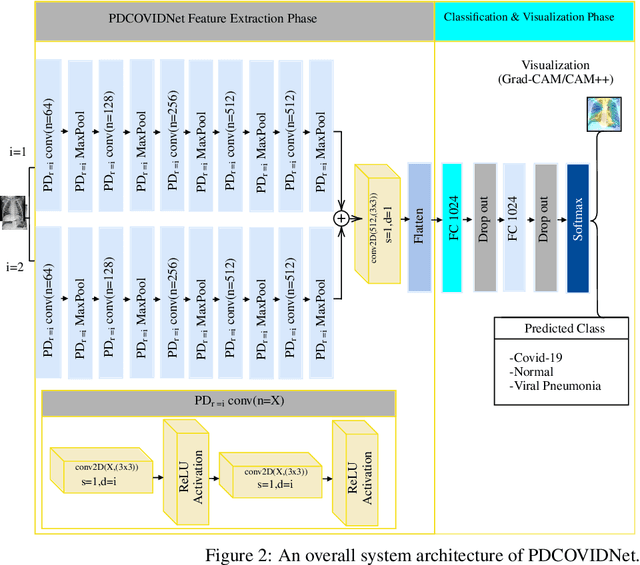

PDCOVIDNet: A Parallel-Dilated Convolutional Neural Network Architecture for Detecting COVID-19 from Chest X-Ray Images

Jul 29, 2020

Abstract: The COVID-19 pandemic continues to severely undermine the prosperity of the global health system. To combat this pandemic, effective screening techniques for infected patients are indispensable. There is no doubt that the use of chest X-ray images for radiological assessment is one of the essential screening techniques. Some of the early studies revealed that the patient's chest X-ray images showed abnormalities, which is natural for patients infected with COVID-19. In this paper, we propose a parallel-dilated convolutional neural network (CNN) based COVID-19 detection system for chest X-ray images, named Parallel-Dilated COVIDNet (PDCOVIDNet). First, the publicly available chest X-ray collection is fully preloaded and enhanced, and then classified by the proposed method. Using different convolution dilation rates in parallel demonstrates the proof-of-principle for using PDCOVIDNet to extract radiological features for COVID-19 detection. Accordingly, we complement our method with two visualization methods, which are specifically designed to increase understanding of the key components associated with COVID-19 infection. Both visualization methods compute gradients for a given image category with respect to the feature maps of the last convolutional layer to create a class-discriminative region. In our experiment, we used a total of 2,905 chest X-ray images, comprising three classes (COVID-19, normal, and viral pneumonia), and empirical evaluations revealed that the proposed method efficiently extracted more significant features related to the suspected disease. The experimental results demonstrate that our proposed method significantly improves performance metrics: accuracy, precision, recall, and F1 scores reach 96.58%, 96.58%, 96.59%, and 96.58%, respectively, which is comparable to or better than the state-of-the-art methods.
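The idea of applying different dilation rates in parallel can be illustrated with a toy one-dimensional sketch. PDCOVIDNet itself operates on 2D images and its layer configuration is not given in the abstract, so the signal, kernel, dilation rates, and function names below are all assumptions chosen only to show how dilation widens the receptive field without adding kernel weights.

```python
def dilated_conv1d(signal, kernel, dilation):
    """'Valid' 1D cross-correlation with gaps of `dilation` between kernel taps."""
    span = (len(kernel) - 1) * dilation  # receptive field size minus one
    return [
        sum(kernel[k] * signal[i + k * dilation] for k in range(len(kernel)))
        for i in range(len(signal) - span)
    ]


def parallel_dilated_branches(signal, kernel, dilations):
    """Apply the same kernel at several dilation rates, one branch per rate.

    In a real network each branch would have its own learned kernel and the
    branch outputs would be padded to equal size before being merged.
    """
    return {d: dilated_conv1d(signal, kernel, d) for d in dilations}


# Toy ramp signal and a simple difference kernel (illustrative values).
ramp = [1, 2, 3, 4, 5, 6, 7]
edge_kernel = [1, 0, -1]
branches = parallel_dilated_branches(ramp, edge_kernel, dilations=(1, 2))
# Dilation 1 compares samples 2 apart; dilation 2 compares samples 4 apart.
```

With the same three kernel weights, the dilation-2 branch covers five input samples instead of three, which is why parallel branches with different rates capture features at several scales.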
