Nicolas Szczepanski

Computing Abductive Explanations for Boosted Trees

Sep 16, 2022

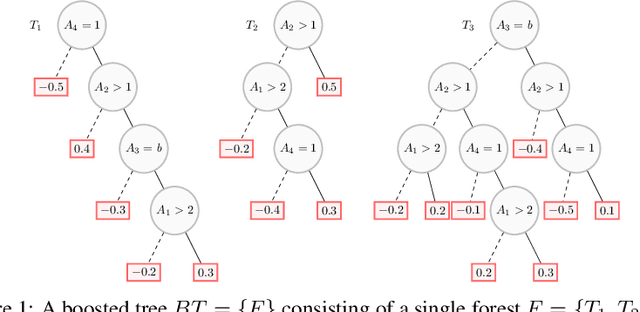

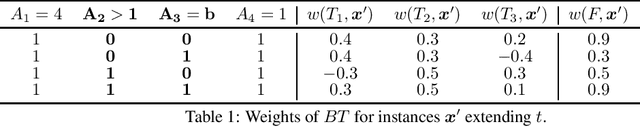

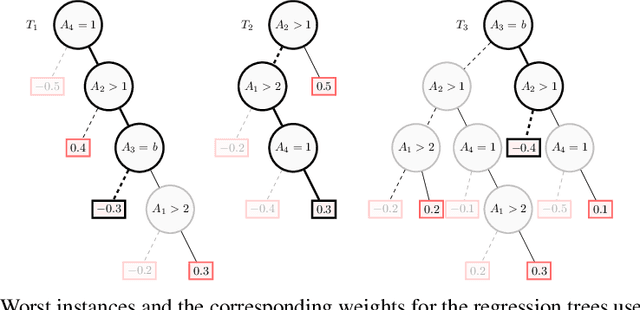

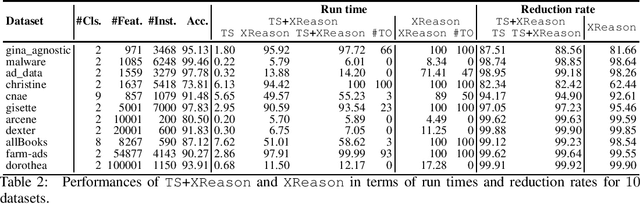

Abstract: Boosted trees are a dominant ML model, exhibiting high accuracy. However, boosted trees are hardly intelligible, and this is a problem whenever they are used in safety-critical applications. Indeed, in such a context, rigorous explanations of the predictions made are expected. Recent work has shown how subset-minimal abductive explanations can be derived for boosted trees, using automated reasoning techniques. However, the generation of such well-founded explanations is intractable in the general case. To improve the scalability of their generation, we introduce the notion of tree-specific explanation for a boosted tree. We show that tree-specific explanations are abductive explanations that can be computed in polynomial time. We also explain how to derive a subset-minimal abductive explanation from a tree-specific explanation. Experiments on various datasets show the computational benefits of leveraging tree-specific explanations for deriving subset-minimal abductive explanations.
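The idea of a polynomial-time, tree-by-tree check can be illustrated with a small sketch. It is not the authors' implementation. It assumes a binary classifier that predicts the positive class when the sum of leaf weights is strictly positive, and a hypothetical minimal tree representation (`Leaf`, `Node`). All function names are illustrative. A set of fixed features is accepted when the sum of per-tree worst-case weights still yields the same class, and features are then dropped greedily.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Union


@dataclass
class Leaf:
    weight: float


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # followed when x[feature] < threshold
    right: "Tree"  # followed otherwise


Tree = Union[Leaf, Node]


def extreme_weight(tree: Tree, x: Dict[str, float], fixed: Set[str],
                   pick: Callable[[float, float], float]) -> float:
    """Extreme (min or max, per `pick`) leaf weight reachable in one tree
    when only the features in `fixed` are constrained to their value in x."""
    if isinstance(tree, Leaf):
        return tree.weight
    if tree.feature in fixed:
        branch = tree.left if x[tree.feature] < tree.threshold else tree.right
        return extreme_weight(branch, x, fixed, pick)
    # Free feature: both branches are reachable.
    return pick(extreme_weight(tree.left, x, fixed, pick),
                extreme_weight(tree.right, x, fixed, pick))


def is_sufficient(forest: List[Tree], x: Dict[str, float], fixed: Set[str]) -> bool:
    """Sound but incomplete test: trees are treated independently, so the
    per-tree worst cases need not be jointly realizable by one instance."""
    predicted_positive = sum(extreme_weight(t, x, set(x), min) for t in forest) > 0
    if predicted_positive:
        return sum(extreme_weight(t, x, fixed, min) for t in forest) > 0
    return sum(extreme_weight(t, x, fixed, max) for t in forest) <= 0


def tree_specific_explanation(forest: List[Tree], x: Dict[str, float]) -> Set[str]:
    """Greedily drop features while the tree-by-tree test still succeeds."""
    fixed = set(x)
    for feature in sorted(x):
        if is_sufficient(forest, x, fixed - {feature}):
            fixed.discard(feature)
    return fixed


# Toy forest with two trees over features a, b, c (made-up weights).
forest = [
    Node("a", 0.5, Leaf(-1.0), Leaf(2.0)),
    Node("b", 0.5, Leaf(-0.5), Node("c", 0.5, Leaf(0.5), Leaf(1.0))),
]
x = {"a": 1, "b": 1, "c": 0}
print(sorted(tree_specific_explanation(forest, x)))  # ['a']
```

Because the test treats trees independently, the result is a sufficient reason for the prediction but need not be subset-minimal among abductive explanations. That gap is what the final derivation step mentioned in the abstract addresses.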

PyCSP3: Modeling Combinatorial Constrained Problems in Python

Sep 01, 2020

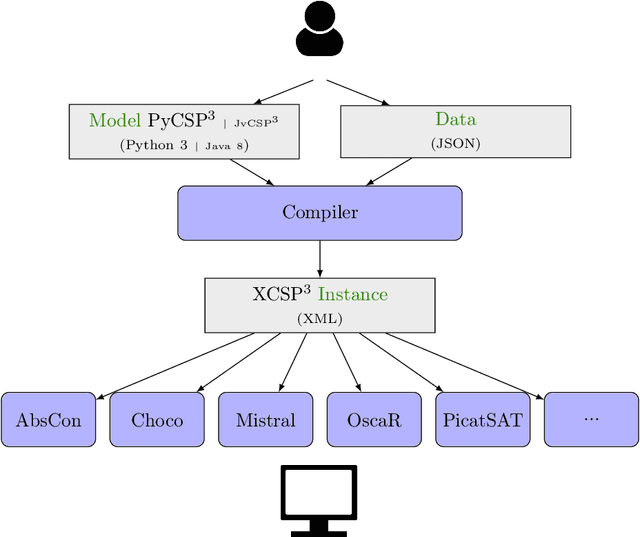

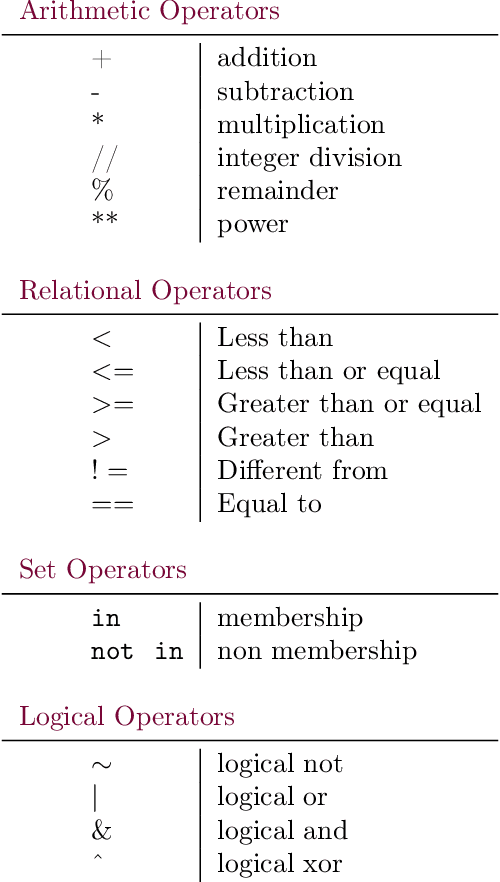

Abstract: In this document, we introduce PyCSP$^3$, a Python library that allows us to write models of combinatorial constrained problems in a simple and declarative way. Currently, with PyCSP$^3$, you can write models of constraint satisfaction and optimization problems. More specifically, you can build CSP (Constraint Satisfaction Problem) and COP (Constraint Optimization Problem) models. Importantly, there is a complete separation between modeling and solving phases: you write a model, you compile it (while providing some data) in order to generate an XCSP3 instance (file), and you solve that problem instance by means of a constraint solver. In this document, you will find all that you need to know about PyCSP$^3$, with more than 40 illustrative models.
