Nicolai Spicher

Benchmarking the Impact of Noise on Deep Learning-based Classification of Atrial Fibrillation in 12-Lead ECG

Mar 24, 2023

Abstract: Electrocardiography analysis is widely used in various clinical applications, and Deep Learning models for classification tasks are currently a focus of research. Due to their data-driven character, they bear the potential to handle signal noise efficiently, but the influence of noise on the accuracy of these methods is still unclear. Therefore, we benchmark the influence of four types of noise on the accuracy of a Deep Learning-based method for atrial fibrillation detection in 12-lead electrocardiograms. We use a subset of a publicly available dataset (PTB-XL) and use the metadata provided by human experts regarding noise to assign a signal quality to each electrocardiogram. Furthermore, we compute a quantitative signal-to-noise ratio for each electrocardiogram. We analyze the accuracy of the Deep Learning model with respect to both metrics and observe that the method can robustly identify atrial fibrillation, even in cases where signals are labelled by human experts as being noisy on multiple leads. False positive and false negative rates are slightly worse for data labelled as noisy. Interestingly, data annotated as showing baseline drift noise results in an accuracy very similar to data without it. We conclude that the issue of processing noisy electrocardiography data can be addressed successfully by Deep Learning methods that might not need preprocessing as many conventional methods do.
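
The abstract does not say how the signal-to-noise ratio is computed. As a minimal sketch only, assuming the common power-ratio definition in decibels and assuming a separate noise estimate is already available for each lead, the calculation could look like this (the function name `snr_db` and the toy values are hypothetical):

```python
import math


def snr_db(signal, noise):
    """Return 10 * log10(P_signal / P_noise) for two equal-length sequences.

    Assumption: `noise` is an already-available estimate of the noise
    component; the abstract does not describe how the authors obtain it.
    """
    if not signal or len(signal) != len(noise):
        raise ValueError("signal and noise must be non-empty and equal in length")
    # Mean power of each sequence (mean of squared samples).
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    if p_noise == 0:
        return math.inf  # no noise power at all
    return 10 * math.log10(p_signal / p_noise)


# Toy example: noise amplitude is one tenth of the signal amplitude.
clean = [1.0, -1.0, 1.0, -1.0]
residual = [0.1, -0.1, 0.1, -0.1]
print(round(snr_db(clean, residual), 2))
```

An amplitude ratio of 10 corresponds to a power ratio of 100, which is 20 dB.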

Analysis of a Deep Learning Model for 12-Lead ECG Classification Reveals Learned Features Similar to Diagnostic Criteria

Nov 03, 2022

Abstract: Despite their remarkable performance, deep neural networks remain unadopted in clinical practice, which is considered to be partially due to their lack of explainability. In this work, we apply attribution methods to a pre-trained deep neural network (DNN) for 12-lead electrocardiography classification to open this "black box" and understand the relationship between model prediction and learned features. We classify data from a public data set, and the attribution methods assign a "relevance score" to each sample of the classified signals. This allows analyzing what the network learned during training, for which we propose quantitative methods: average relevance scores over a) classes, b) leads, and c) average beats. The analyses of relevance scores for atrial fibrillation (AF) and left bundle branch block (LBBB) compared to healthy controls show that their mean values a) increase with higher classification probability and correspond to false classifications when around zero, and b) correspond to clinical recommendations regarding which lead to consider. Furthermore, c) visible P-waves and concordant T-waves result in clearly negative relevance scores in AF and LBBB classification, respectively. In summary, our analysis suggests that the DNN learned features similar to cardiology textbook knowledge.
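
The averaging over classes and leads can be illustrated with a small sketch. It assumes a hypothetical data layout in which each recording is a list of leads and each lead is a list of per-sample relevance scores; the function names and toy values are illustrative and not taken from the paper:

```python
from statistics import fmean


def mean_relevance_per_lead(recordings):
    """Average relevance per lead across all recordings.

    recordings: list of recordings; each recording is a list of leads;
    each lead is a list of per-sample relevance scores (assumed layout).
    """
    n_leads = len(recordings[0])
    per_lead = []
    for lead in range(n_leads):
        # Pool every sample of this lead over all recordings.
        samples = [score for rec in recordings for score in rec[lead]]
        per_lead.append(fmean(samples))
    return per_lead


def mean_relevance_per_class(by_class):
    """Average relevance over all leads and samples, grouped by class label."""
    return {
        label: fmean([score for rec in recs for lead in rec for score in lead])
        for label, recs in by_class.items()
    }


# Toy data: two recordings with two leads and two samples per lead.
af_recordings = [
    [[1.0, 3.0], [0.0, 0.0]],
    [[2.0, 2.0], [-1.0, 1.0]],
]
print(mean_relevance_per_lead(af_recordings))
print(mean_relevance_per_class({"AF": af_recordings}))
```

In this toy data, the first lead carries all of the positive relevance, which is the kind of pattern a per-lead average is meant to expose.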

Feasibility Analysis of Fifth-generation (5G) Mobile Networks for Transmission of Medical Imaging Data

Jul 30, 2021

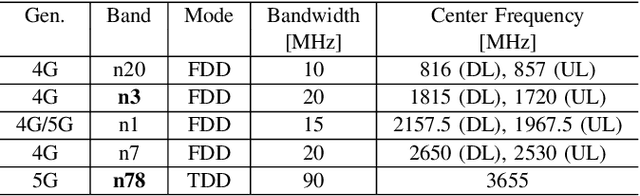

Abstract: Next to higher data rates and lower latency, the upcoming fifth-generation mobile network standard will introduce a new service ecosystem. Concepts such as multi-access edge computing or network slicing will enable tailoring service level requirements to specific use-cases. In medical imaging, researchers and clinicians are currently working towards higher portability of scanners. This includes i) small scanners to be wheeled inside the hospital to the bedside and ii) conventional scanners provided via trucks to remote areas. Both use-cases introduce the need for mobile networks adhering to high safety standards and providing high data rates. These requirements could be met by fifth-generation mobile networks. In this work, we analyze the feasibility of transferring medical imaging data using the current state of development of fifth-generation mobile networks (3GPP Release 15). We demonstrate the potential of reaching 100 Mbit/s upload rates using already available consumer-grade hardware. Furthermore, we show an effective average data throughput of 50 Mbit/s when transferring medical images using out-of-the-box open-source software based on the Digital Imaging and Communications in Medicine (DICOM) standard. During transmissions, we sample the radio frequency bands to analyze the characteristics of the mobile radio network. Additionally, we discuss the potential of new features such as network slicing that will be introduced in forthcoming releases.
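
To put the reported rates into perspective, the following sketch converts a throughput into a transfer time. The 500 MB study size is a hypothetical value chosen for illustration, and 1 MB is taken as 10^6 bytes; only the 50 Mbit/s and 100 Mbit/s figures come from the abstract:

```python
def transfer_seconds(size_megabytes, rate_mbit_per_s):
    """Time in seconds to move `size_megabytes` (10^6 bytes each) at a given rate."""
    if rate_mbit_per_s <= 0:
        raise ValueError("rate must be positive")
    size_megabits = size_megabytes * 8  # 8 bits per byte
    return size_megabits / rate_mbit_per_s


study_mb = 500  # hypothetical imaging study size, not from the paper
print(transfer_seconds(study_mb, 50))   # effective DICOM throughput
print(transfer_seconds(study_mb, 100))  # potential raw upload rate
```

Under these assumptions, the study would take 80 seconds at the effective DICOM throughput and 40 seconds at the potential upload rate.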

Edge computing in 5G cellular networks for real-time analysis of electrocardiography recorded with wearable textile sensors

Jul 29, 2021

Abstract: Fifth-generation (5G) cellular networks promise higher data rates, lower latency, and large numbers of interconnected devices. Thereby, 5G will provide important steps towards unlocking the full potential of the Internet of Things (IoT). In this work, we propose a lightweight IoT platform for continuous vital sign analysis. Electrocardiography (ECG) is acquired via textile sensors and continuously sent from a smartphone to an edge device using cellular networks. The edge device applies a state-of-the-art deep learning model to provide a binary end-to-end classification of whether a myocardial infarction is present. Using this infrastructure, experiments with four volunteers were conducted. We compare 3rd-, 4th-, and 5th-generation cellular networks (release 15) with respect to transmission latency, data corruption, and duration of machine learning inference. The best performance is achieved using 5G, showing an average transmission latency of 110 ms and data corruption in 0.07% of ECG samples. Deep learning inference took approximately 170 ms. In conclusion, 5G cellular networks in combination with edge devices are a suitable infrastructure for continuous vital sign analysis using deep learning models. Future 5G releases will introduce multi-access edge computing (MEC) as a paradigm for bringing edge devices nearer to mobile clients. This will decrease transmission latency and eventually enable automatic emergency alerting in near real-time.
