Nick Saw

SIGMA: An Open-Source Interactive System for Mixed-Reality Task Assistance Research

May 16, 2024

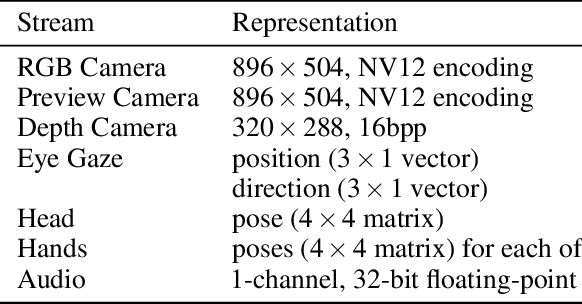

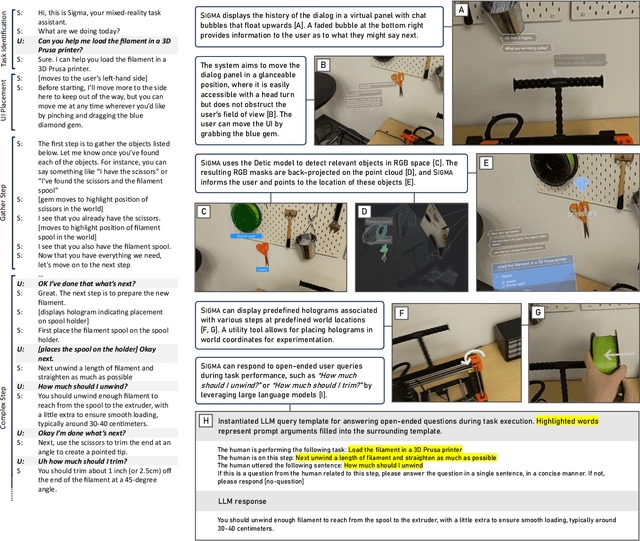

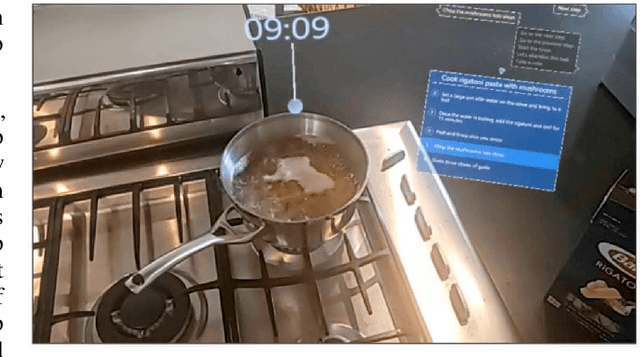

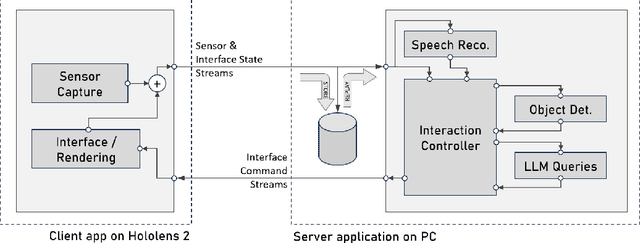

Abstract: We introduce an open-source system called SIGMA (short for "Situated Interactive Guidance, Monitoring, and Assistance") as a platform for conducting research on task-assistive agents in mixed-reality scenarios. The system leverages the sensing and rendering affordances of a head-mounted mixed-reality device in conjunction with large language and vision models to guide users step by step through procedural tasks. We present the system's core capabilities, discuss its overall design and implementation, and outline directions for future research enabled by the system. SIGMA is easily extensible and provides a useful basis for future research at the intersection of mixed reality and AI. By open-sourcing an end-to-end implementation, we aim to lower the barrier to entry, accelerate research in this space, and chart a path towards community-driven end-to-end evaluation of large language, vision, and multimodal models in the context of real-world interactive applications.

Platform for Situated Intelligence

Mar 29, 2021

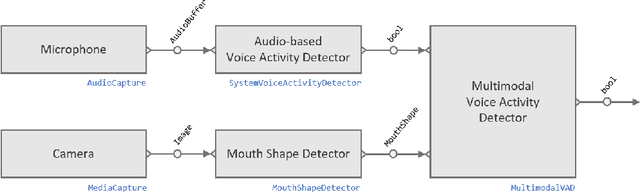

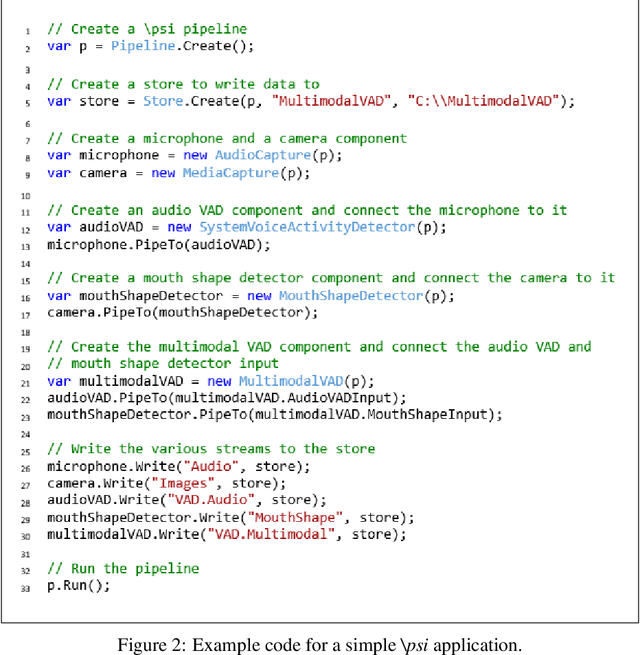

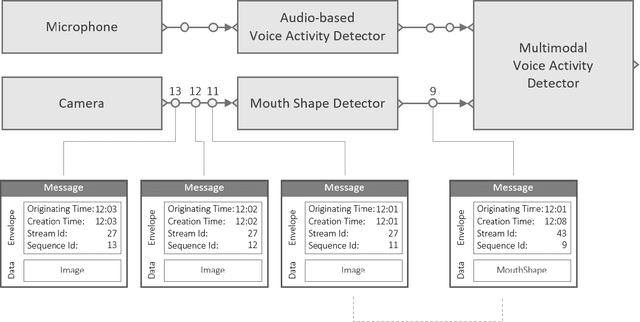

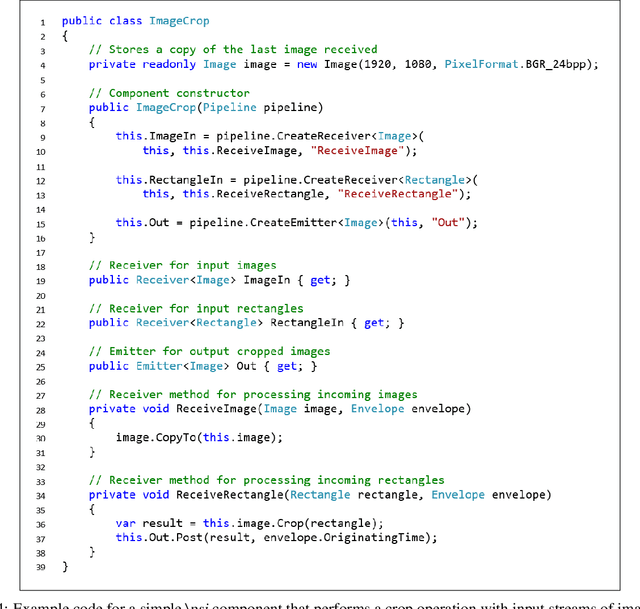

Abstract: We introduce Platform for Situated Intelligence, an open-source framework created to support the rapid development and study of multimodal, integrative-AI systems. The framework provides infrastructure for sensing, fusing, and making inferences from temporal streams of data across different modalities, a set of tools that enable visualization and debugging, and an ecosystem of components that encapsulate a variety of perception and processing technologies. These assets jointly provide the means for rapidly constructing and refining multimodal, integrative-AI systems, while retaining the efficiency and performance characteristics required for deployment in open-world settings.
