Nestor Gonzalez Lopez

gym-gazebo2, a toolkit for reinforcement learning using ROS 2 and Gazebo

Mar 18, 2019

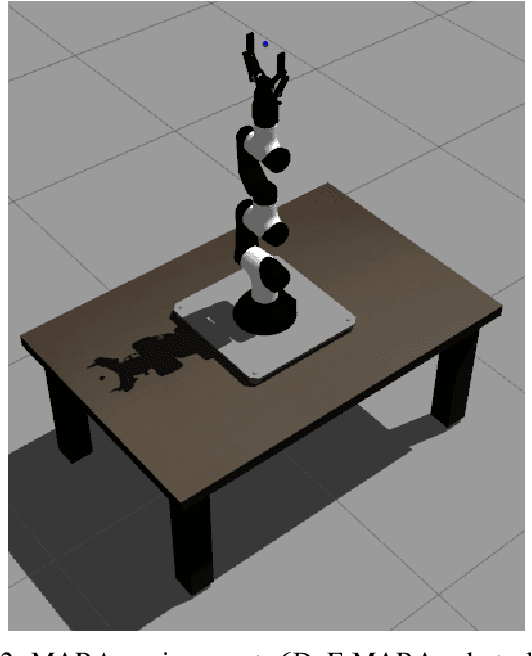

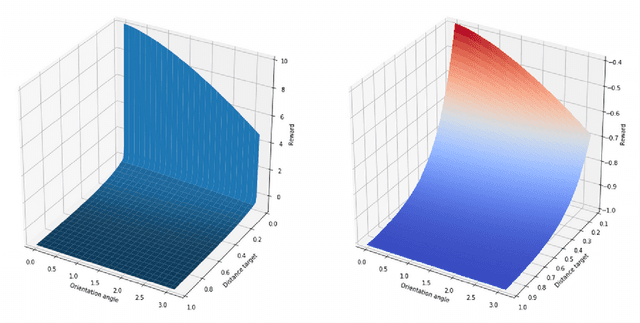

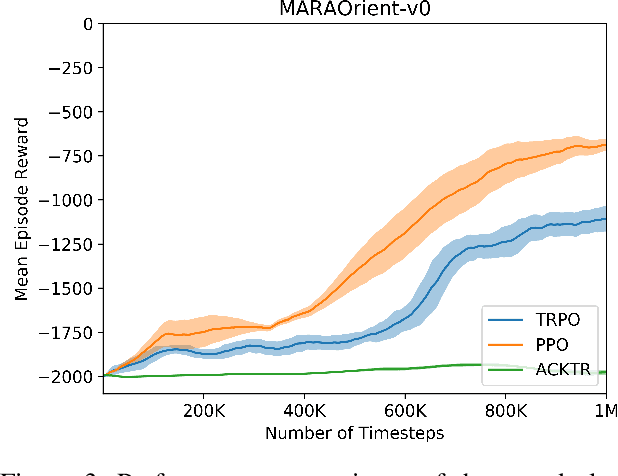

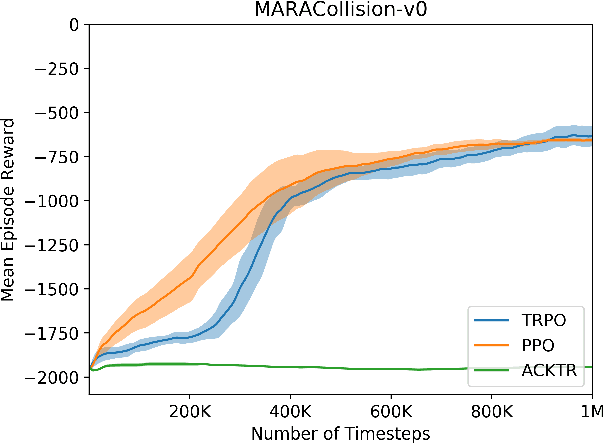

Abstract: This paper presents an upgraded, real-world, application-oriented version of gym-gazebo, the Robot Operating System (ROS) and Gazebo based Reinforcement Learning (RL) toolkit, which complies with OpenAI Gym. The content discusses the new ROS 2 based software architecture and summarizes the results obtained using Proximal Policy Optimization (PPO). Ultimately, the output of this work presents a benchmarking system for robotics that allows different techniques and algorithms to be compared using the same virtual conditions. We have evaluated environments with different levels of complexity of the Modular Articulated Robotic Arm (MARA), reaching accuracies in the millimeter scale. The converged results show the feasibility and usefulness of the gym-gazebo2 toolkit, as well as its potential and applicability in industrial use cases using modular robots.

ROS2Learn: a reinforcement learning framework for ROS 2

Mar 18, 2019

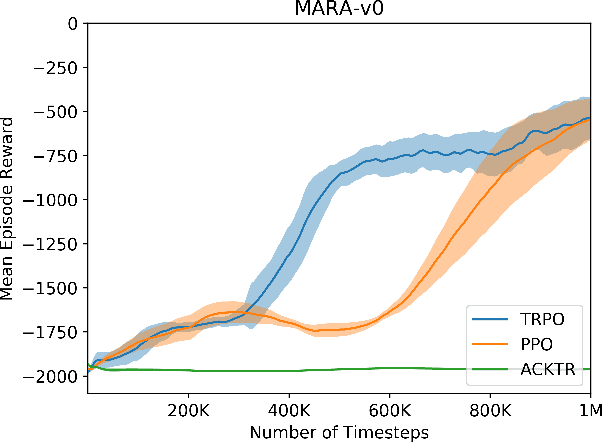

Abstract: We propose a novel framework for Deep Reinforcement Learning (DRL) in modular robotics to train a robot directly from joint states, using traditional robotic tools. We use state-of-the-art implementations of the Proximal Policy Optimization, Trust Region Policy Optimization and Actor-Critic Kronecker-Factored Trust Region algorithms to learn policies in four different Modular Articulated Robotic Arm (MARA) environments. We support this process using a framework that communicates with typical tools used in robotics, such as Gazebo and Robot Operating System 2 (ROS 2). We evaluate several algorithms in modular robots with an empirical study in simulation.

Extending the OpenAI Gym for robotics: a toolkit for reinforcement learning using ROS and Gazebo

Feb 07, 2017

Abstract: This paper presents an extension of the OpenAI Gym for robotics using the Robot Operating System (ROS) and the Gazebo simulator. The content discusses the software architecture proposed and the results obtained by using two Reinforcement Learning techniques: Q-Learning and Sarsa. Ultimately, the output of this work presents a benchmarking system for robotics that allows different techniques and algorithms to be compared using the same virtual conditions.
