Nesrine Chehata

Multi-Layer Modeling of Dense Vegetation from Aerial LiDAR Scans

Apr 25, 2022

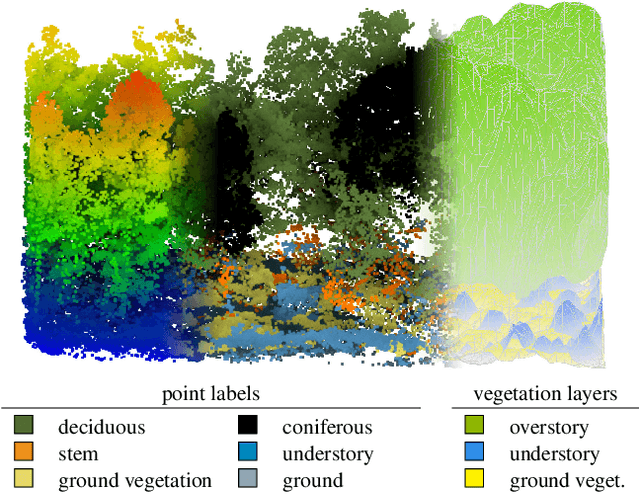

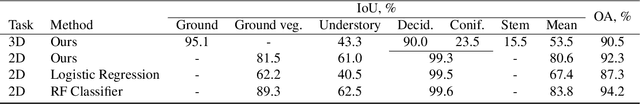

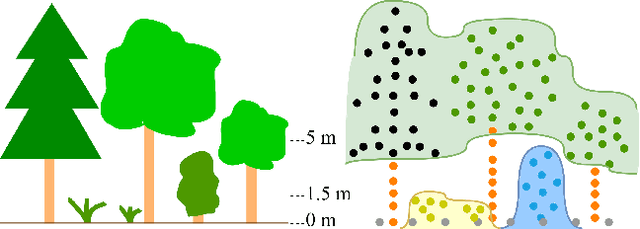

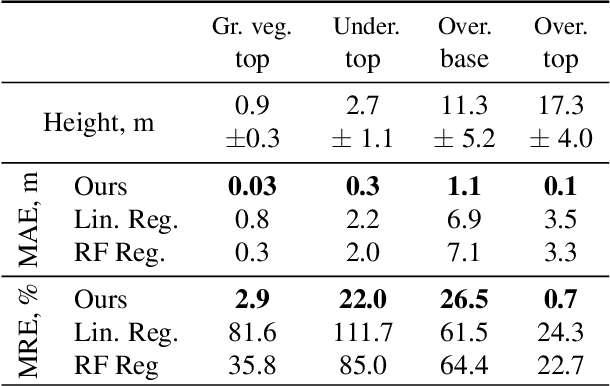

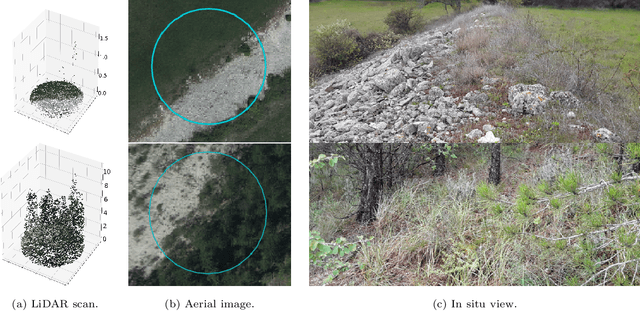

Abstract: The analysis of the multi-layer structure of wild forests is an important challenge of automated large-scale forestry. While modern aerial LiDARs offer geometric information across all vegetation layers, most datasets and methods focus only on the segmentation and reconstruction of the top of canopy. We release WildForest3D, which consists of 29 study plots and over 2000 individual trees across 47 000 m² with dense 3D annotation, along with occupancy and height maps for 3 vegetation layers: ground vegetation, understory, and overstory. We propose a 3D deep network architecture predicting for the first time both 3D point-wise labels and high-resolution layer occupancy rasters simultaneously. This allows us to produce a precise estimation of the thickness of each vegetation layer as well as the corresponding watertight meshes, therefore meeting most forestry purposes. Both the dataset and the model are released in open access: https://github.com/ekalinicheva/multi_layer_vegetation.

Predicting Vegetation Stratum Occupancy from Airborne LiDAR Data with Deep Learning

Jan 20, 2022

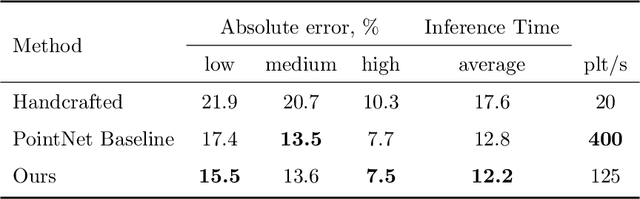

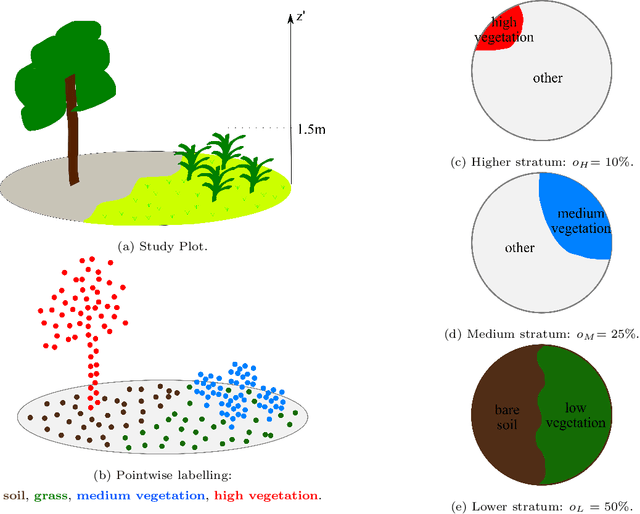

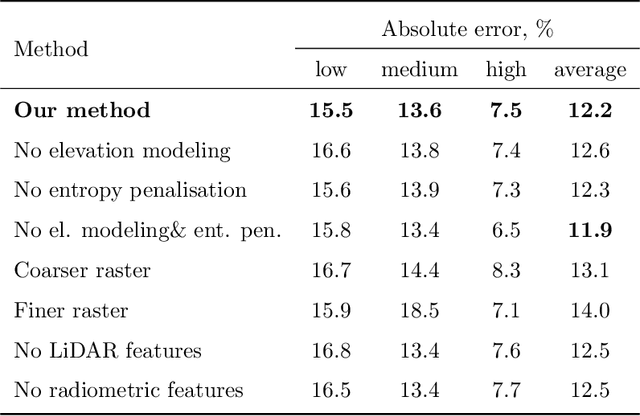

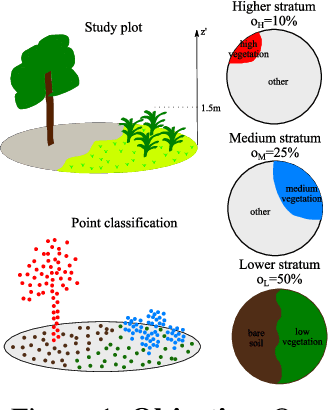

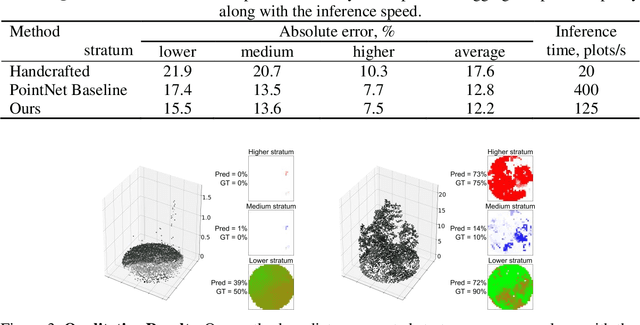

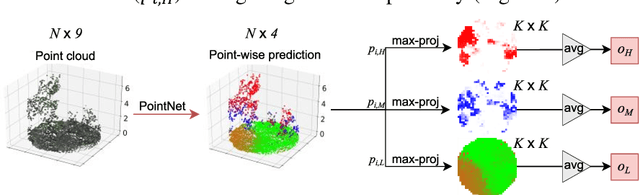

Abstract: We propose a new deep learning-based method for estimating the occupancy of vegetation strata from airborne 3D LiDAR point clouds. Our model predicts rasterized occupancy maps for three vegetation strata corresponding to lower, medium, and higher cover. Our weakly-supervised training scheme allows our network to only be supervised with vegetation occupancy values aggregated over cylindrical plots containing thousands of points. Such ground truth is easier to produce than pixel-wise or point-wise annotations. Our method outperforms handcrafted and deep learning baselines in terms of precision by up to 30%, while simultaneously providing visual and interpretable predictions. We provide an open-source implementation along with a dataset of 199 agricultural plots to train and evaluate weakly supervised occupancy regression algorithms.
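The weak supervision described in this abstract can be illustrated with a minimal sketch: a per-pixel occupancy raster is averaged over the disc covered by the plot, and only that single aggregated value is compared with the plot-level label. The function names (`plot_occupancy`, `weak_loss`), the mean aggregation, and the absolute-error loss are assumptions made for illustration; the abstract does not specify the aggregation or the loss used by the authors.

```python
import math


def plot_occupancy(raster, radius_px):
    """Average the per-pixel occupancy over the disc centred in a square raster.

    `raster` is a list of equal-length rows of floats in [0, 1].
    Mean aggregation is an assumption, not the paper's stated method.
    """
    n = len(raster)
    centre = (n - 1) / 2.0  # disc centre in pixel coordinates
    inside = [
        raster[i][j]
        for i in range(n)
        for j in range(n)
        if math.hypot(i - centre, j - centre) <= radius_px
    ]
    return sum(inside) / len(inside)


def weak_loss(raster, target, radius_px):
    """Absolute error between the aggregated prediction and the plot label (hypothetical loss)."""
    return abs(plot_occupancy(raster, radius_px) - target)
```

Only the aggregated value enters the loss, so pixels outside the disc (for example the corners of the raster) receive no supervision in this sketch.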

Vegetation Stratum Occupancy Prediction from Airborne LiDAR 3D Point Clouds

Dec 27, 2021

Abstract: We propose a new deep learning-based method for estimating the occupancy of vegetation strata from 3D point clouds captured from an aerial platform. Our model predicts rasterized occupancy maps for three vegetation strata: lower, medium, and higher. Our training scheme allows our network to be supervised only with values aggregated over cylindrical plots, which are easier to produce than pixel-wise or point-wise annotations. Our method outperforms handcrafted and deep learning baselines in terms of precision while simultaneously providing visual and interpretable predictions. We provide an open-source implementation of our method along with a dataset of 199 agricultural plots to train and evaluate occupancy regression algorithms.

Multi-Modal Temporal Attention Models for Crop Mapping from Satellite Time Series

Dec 14, 2021

Abstract: Optical and radar satellite time series are synergetic: optical images contain rich spectral information, while C-band radar captures useful geometrical information and is immune to cloud cover. Motivated by the recent success of temporal attention-based methods across multiple crop mapping tasks, we propose to investigate how these models can be adapted to operate on several modalities. We implement and evaluate multiple fusion schemes, including a novel approach and simple adjustments to the training procedure, significantly improving performance and efficiency with little added complexity. We show that most fusion schemes have advantages and drawbacks, making them relevant for specific settings. We then evaluate the benefit of multimodality across several tasks: parcel classification, pixel-based segmentation, and panoptic parcel segmentation. We show that by leveraging both optical and radar time series, multimodal temporal attention-based models can outmatch single-modality models in terms of performance and resilience to cloud cover. To conduct these experiments, we augment the PASTIS dataset with spatially aligned radar image time series. The resulting dataset, PASTIS-R, constitutes the first large-scale, multimodal, and open-access satellite time series dataset with semantic and instance annotations.

Satellite Image Time Series Classification with Pixel-Set Encoders and Temporal Self-Attention

Nov 18, 2019

Abstract: Satellite image time series, bolstered by their growing availability, are at the forefront of an extensive effort towards automated Earth monitoring by international institutions. In particular, large-scale control of agricultural parcels is an issue of major political and economic importance. In this regard, hybrid convolutional-recurrent neural architectures have shown promising results for the automated classification of satellite image time series. We propose an alternative approach in which the convolutional layers are advantageously replaced with encoders operating on unordered sets of pixels to exploit the typically coarse resolution of publicly available satellite images. We also propose to extract temporal features using a bespoke neural architecture based on self-attention instead of recurrent networks. We demonstrate experimentally that our method not only outperforms previous state-of-the-art approaches in terms of precision, but also significantly decreases processing time and memory requirements. Lastly, we release a large open-access annotated dataset as a benchmark for future work on satellite image time series.
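The idea of encoding a parcel as an unordered set of pixels can be sketched with a permutation-invariant pooling step: each pixel is a spectral vector, and the set is summarised by per-channel statistics that do not depend on pixel order. The function name `pool_pixel_set` and the choice of mean and standard deviation as pooling statistics are assumptions for illustration; the abstract does not state which pooling the authors use, and the learned layers of the encoder are omitted here.

```python
from statistics import fmean, pstdev


def pool_pixel_set(pixels):
    """Summarise an unordered set of pixels by per-channel mean and std.

    `pixels` is a list of equal-length spectral vectors (one per pixel).
    Returns [mean_0, ..., mean_C-1, std_0, ..., std_C-1].
    The pooling statistics are a hypothetical choice, not taken from the paper.
    """
    channels = list(zip(*pixels))  # transpose: one tuple of values per channel
    means = [fmean(c) for c in channels]
    stds = [pstdev(c) for c in channels]  # population standard deviation
    return means + stds
```

Because the summary depends only on the multiset of pixel values, shuffling the pixels leaves the output unchanged, which is what allows the encoder to ignore the spatial arrangement within a parcel.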

Time-Space tradeoff in deep learning models for crop classification on satellite multi-spectral image time series

Jan 29, 2019

Abstract: In this article, we investigate several structured deep learning models for crop type classification on multi-spectral time series. In particular, our aim is to assess the respective importance of spatial and temporal structures in such data. With this objective, we consider several designs of convolutional, recurrent, and hybrid neural networks, and assess their performance on a large dataset of freely available Sentinel-2 imagery. We find that the best-performing approaches are hybrid configurations for which most of the parameters (up to 90%) are allocated to modeling the temporal structure of the data. Our results thus constitute a set of guidelines for the design of bespoke deep learning models for crop type classification.
