Neelay Joglekar

Autonomous Image-to-Grasp Robotic Suturing Using Reliability-Driven Suture Thread Reconstruction

Aug 29, 2024

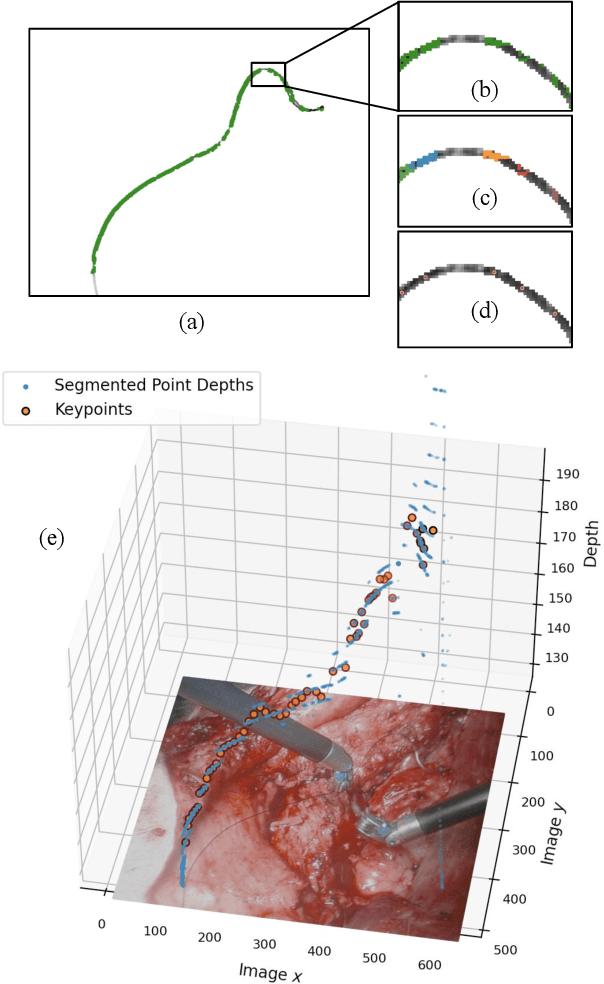

Abstract: Automating suturing during robotically assisted surgery reduces the burden on the operating surgeon, enabling them to focus on making higher-level decisions rather than fatiguing themselves in the numerous intricacies of a surgical procedure. Accurate suture thread reconstruction and grasping are vital prerequisites for suturing, particularly for avoiding entanglement with surgical tools and performing complex thread manipulation. However, such methods must be robust to the severe perceptual degradation caused by heavy noise and sparse thread features in endoscopic images. We develop a reconstruction algorithm that uses quadratic programming optimization to fit smooth splines to thread observations while satisfying reliability bounds estimated from measured observation noise. Additionally, we craft a grasping policy that generates gripper trajectories that maximize the probability of a successful grasp. Our full image-to-grasp pipeline is rigorously evaluated with over 400 grasping trials, exhibiting state-of-the-art accuracy. We show that this strategy can be applied to various techniques in autonomous suture needle manipulation to achieve autonomous surgery in a generalizable way.

Suture Thread Spline Reconstruction from Endoscopic Images for Robotic Surgery with Reliability-driven Keypoint Detection

Sep 27, 2022

Abstract: Automating the manipulation and delivery of sutures during robotic surgery is a prominent problem at the frontier of surgical robotics, as it can significantly reduce surgeons' fatigue during tele-operated surgery and allow them to spend more time on higher-level clinical decision making. Accomplishing autonomous suturing and suture manipulation in the real world requires accurate suture thread localization and reconstruction, the process of creating a 3D shape representation of suture thread from 2D stereo camera surgical image pairs. This is a very challenging problem because little pixel information is available for the threads and because they are sensitive to lighting and specular reflection. We present a suture thread reconstruction method that uses reliable keypoints and Minimum Variation Spline (MVS) smoothing optimization to construct a 3D centerline from a segmented surgical image pair. This method is comparable to previous suture thread reconstruction works, with the possible benefit of increased accuracy of grasping point estimation. Our code and datasets will be available at: https://github.com/ucsdarclab/thread-reconstruction.
