Nathanaël Jarrassé

Compensation Effect Amplification Control (CEAC): A movement-based approach for coordinated position and velocity control of the elbow of upper-limb prostheses

Jan 08, 2026

Abstract: Despite advances in upper-limb (UL) prosthetic design, achieving intuitive control of intermediate joints - such as the wrist and elbow - remains challenging, particularly for continuous and velocity-modulated movements. We introduce a novel movement-based control paradigm entitled Compensation Effect Amplification Control (CEAC) that leverages users' trunk flexion and extension as input for controlling prosthetic elbow velocity. Considering that the trunk can be both a functional and compensatory joint when performing upper-limb actions, CEAC amplifies the natural coupling between trunk and prosthesis while introducing a controlled delay that allows users to modulate both the position and velocity of the prosthetic joint. We evaluated CEAC in a generic drawing task performed by twelve able-bodied participants using a supernumerary prosthesis with an active elbow. Additionally, a multiple-target-reaching task was performed by a subset of ten participants. Results demonstrate task performance comparable to that obtained with natural arm movements, even when gesture velocity or drawing size were varied, while maintaining ergonomic trunk postures. Analysis revealed that CEAC effectively restores coordinated joint action and distributes movement effort between trunk and elbow, enabling intuitive trajectory control without requiring extreme compensatory movements. Overall, CEAC offers a promising control strategy for intermediate joints of UL prostheses, particularly in tasks requiring continuous and precise coordination.

JcvPCA and JsvCRP : a set of metrics to evaluate changes in joint coordination strategies

May 13, 2025

Abstract: Characterizing changes in inter-joint coordination presents significant challenges, as it necessitates the examination of relationships between multiple degrees of freedom during movements and their temporal evolution. Existing metrics are inadequate in providing physiologically coherent results that document both the temporal and spatial aspects of inter-joint coordination. In this article, we introduce two novel metrics to enhance the analysis of inter-joint coordination. The first metric, Joint Contribution Variation based on Principal Component Analysis (JcvPCA), evaluates the variation in each joint's contribution during a series of movements. The second metric, Joint Synchronization Variation based on Continuous Relative Phase (JsvCRP), measures the variation in temporal synchronization among joints between two movement datasets. We begin by presenting each metric and explaining its derivation. We then demonstrate the application of these metrics using simulated and experimental datasets involving identical movement tasks performed with distinct coordination strategies. The results show that these metrics can successfully differentiate between unique coordination strategies, providing meaningful insights into joint collaboration during movement. These metrics hold significant potential for fields such as ergonomics and clinical rehabilitation, where a precise understanding of the evolution of inter-joint coordination strategies is crucial. Potential applications include evaluating the effects of upper limb exoskeletons in industrial settings or monitoring the progress of patients undergoing neurological rehabilitation.
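As an illustration of the quantity JsvCRP builds on, the following is a minimal sketch of a continuous relative phase (CRP) computation between two joint-angle signals. It is not the paper's JsvCRP metric: the normalization convention, the function names (`phase_angle`, `continuous_relative_phase`), and the synthetic "shoulder" and "elbow" signals are all assumptions chosen for the example.

```python
import numpy as np

def phase_angle(angle_series, dt):
    """Phase angle from normalized position and velocity.

    Assumption: position is rescaled to [-1, 1] and velocity is divided by
    its maximum absolute value, which is one common CRP convention.
    """
    x = np.asarray(angle_series, dtype=float)
    v = np.gradient(x, dt)  # numerical joint velocity
    x_norm = 2.0 * (x - x.min()) / (x.max() - x.min()) - 1.0
    v_norm = v / np.max(np.abs(v))
    return np.arctan2(v_norm, x_norm)

def continuous_relative_phase(joint_a, joint_b, dt):
    """Difference of the two phase angles, wrapped to (-pi, pi]."""
    diff = phase_angle(joint_a, dt) - phase_angle(joint_b, dt)
    return np.angle(np.exp(1j * diff))

# Synthetic example: two sinusoidal joint angles, the second one lagging.
t = np.linspace(0.0, 4.0 * np.pi, 2001)
dt = t[1] - t[0]
lag = np.pi / 4.0                # hypothetical lag between the joints
shoulder = np.sin(t)             # hypothetical joint-angle signals
elbow = np.sin(t - lag)

crp = continuous_relative_phase(shoulder, elbow, dt)
mean_crp = float(np.mean(crp))
print(f"mean CRP: {mean_crp:.3f} rad, imposed lag: {lag:.3f} rad")
```

For these idealized signals the CRP is nearly constant, with a magnitude close to the imposed lag. A metric of synchronization change would then compare such CRP curves between two movement datasets.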

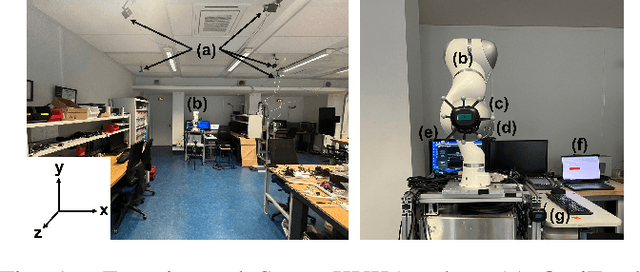

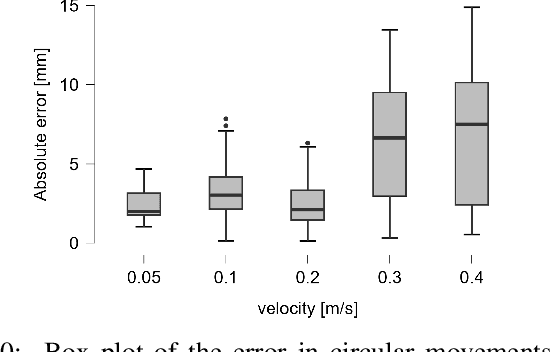

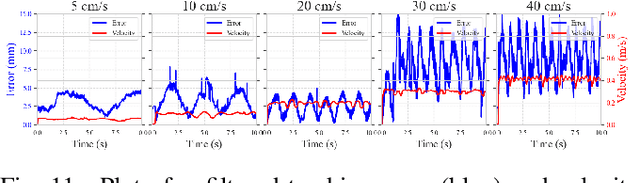

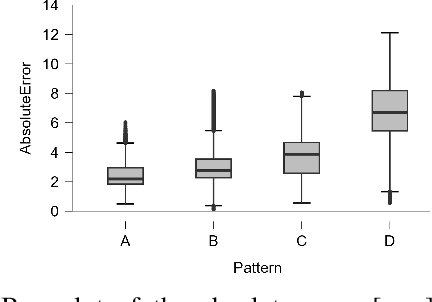

Evaluating the precision of the HTC VIVE Ultimate Tracker with robotic and human movements under varied environmental conditions

Sep 04, 2024

Abstract: The HTC VIVE Ultimate Tracker, utilizing inside-out tracking with internal stereo cameras providing 6 DoF tracking without external cameras, offers a cost-efficient and straightforward setup for motion tracking. Although the tracker was initially designed for the gaming and VR industry, we explored its application beyond VR, providing source code for data capturing in both C++ and Python without requiring a VR headset. This study is the first to evaluate the tracker's precision across various experimental scenarios. To assess the robustness of the tracking precision, we employed a robotic arm as a precise and repeatable source of motion. Using the OptiTrack system as a reference, we conducted tests under varying experimental conditions: lighting, movement velocity, environmental changes caused by displacing objects in the scene, and human movement in front of the trackers, as well as varying the displacement size relative to the calibration center. On average, the HTC VIVE Ultimate Tracker achieved a precision of 4.98 mm +/- 4 mm across various conditions. The most critical factors affecting accuracy were lighting conditions, movement velocity, and range of motion relative to the calibration center. For practical evaluation, we captured human movements with 5 trackers in realistic motion capture scenarios. Our findings indicate sufficient precision for capturing human movements, validated through two tasks: a low-dynamic pick-and-place task and high-dynamic fencing movements performed by an elite athlete. Even though its precision is lower than that of conventional fixed-camera-based motion capture systems and its performance is influenced by several factors, the HTC VIVE Ultimate Tracker demonstrates adequate accuracy for a variety of motion tracking applications. Its ability to capture human or object movements outside of VR or MOCAP environments makes it particularly versatile.

Teleoperation of a robotic manipulator in peri-personal space: a virtual wand approach

Jun 13, 2024

Abstract: The paper deals with the well-known problem of teleoperating a robotic arm along six degrees of freedom. The prevailing and most effective approach to this problem involves a direct position-to-position mapping, imposing robotic end-effector movements that mirror those of the user. In the particular case where the robot stands near the operator, there are alternatives to this approach. Drawing inspiration from head pointers utilized in the 1980s, originally designed to enable drawing with limited head motions for tetraplegic individuals, we propose a "virtual wand" mapping. It employs a virtual rigid linkage between the hand and the robot's end-effector. With this approach, rotations produce amplified translations through a lever arm, creating a "rotation-to-position" coupling. This approach expands the translation workspace at the expense of a reduced rotation space. We compare the virtual wand approach to the one-to-one position mapping through the realization of 6-DoF reaching tasks. Results indicate that the two different mappings perform comparably well, are equally well-received by users, and exhibit similar motor control behaviors. Nevertheless, the virtual wand mapping is anticipated to outperform direct mapping in tasks characterized by large translations and minimal effector rotations, whereas direct mapping is expected to demonstrate advantages in large rotations with minimal translations. These results pave the way for new interactions and interfaces, particularly in disability assistance utilizing head movements (instead of hands). Leveraging body parts with substantial rotations could enable the accomplishment of tasks previously deemed infeasible with standard direct coupling interfaces.
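The "rotation-to-position" coupling described in this abstract can be illustrated with a minimal geometric sketch. It assumes the virtual wand is a fixed offset vector expressed in the hand frame, so that the end-effector target is the hand position plus the rotated offset. The function names (`rotation_z`, `wand_tip`) and the 0.5 m wand length are hypothetical and are not taken from the paper.

```python
import numpy as np

def rotation_z(angle_rad):
    """Rotation matrix for a rotation of angle_rad about the z-axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, -s, 0.0],
                     [s,  c, 0.0],
                     [0.0, 0.0, 1.0]])

def wand_tip(hand_position, hand_rotation, wand_vector):
    """End-effector target: hand position plus the wand offset rotated with the hand."""
    hand_position = np.asarray(hand_position, dtype=float)
    wand_vector = np.asarray(wand_vector, dtype=float)
    return hand_position + np.asarray(hand_rotation) @ wand_vector

# Hypothetical setup: hand at the origin, 0.5 m wand along the hand's x-axis.
hand = np.zeros(3)
wand = np.array([0.5, 0.0, 0.0])

tip_before = wand_tip(hand, rotation_z(0.0), wand)
tip_after = wand_tip(hand, rotation_z(np.deg2rad(30.0)), wand)

# A pure 30 degree hand rotation, with no hand translation, moves the tip.
displacement = float(np.linalg.norm(tip_after - tip_before))
print(f"tip displacement: {displacement:.3f} m")
```

In this sketch the tip displacement for a pure rotation equals the chord length 2 L sin(theta / 2), so a longer wand yields a larger translation for the same hand rotation, which is the lever-arm amplification the abstract refers to.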

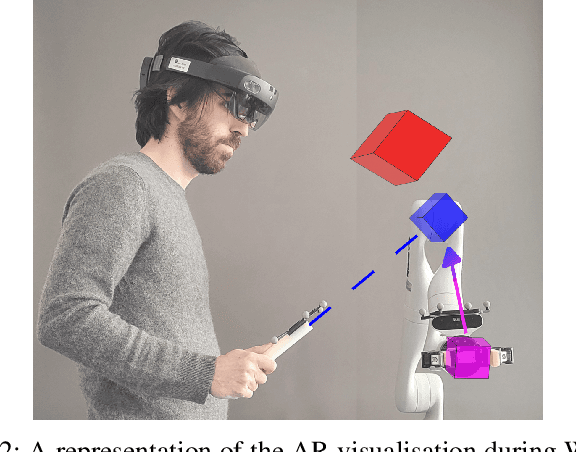

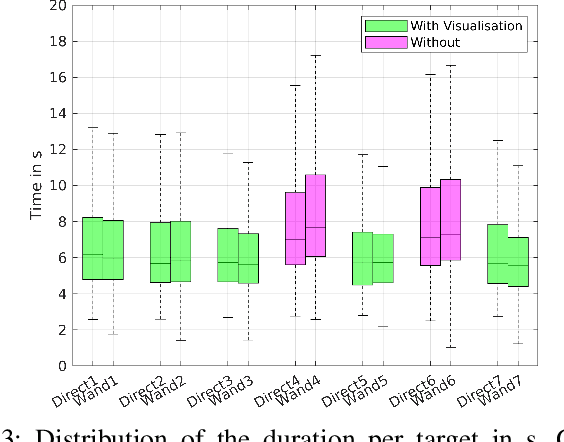

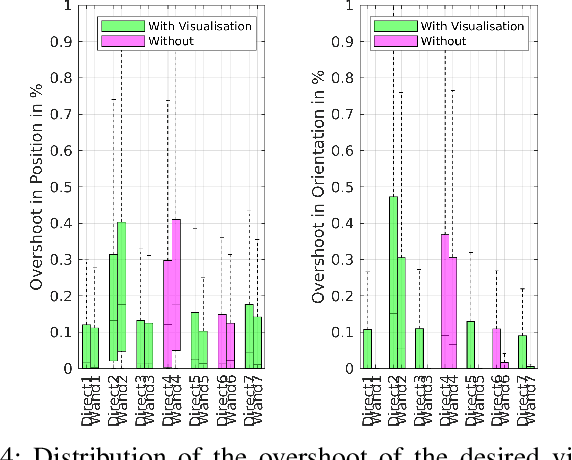

Hands-free teleoperation of a nearby manipulator through a virtual body-to-robot link

Jun 13, 2024

Abstract: This paper introduces an innovative control approach for teleoperating a robot in close proximity to a human operator, which could be useful to control robots embedded on wheelchairs. The method entails establishing a virtual connection between a specific body part and the robot's end-effector, visually displayed through an Augmented Reality (AR) headset. This linkage enables the transformation of body rotations into amplified effector translations, extending the robot's workspace beyond the capabilities of direct one-to-one mapping. Moreover, the linkage can be reconfigured using a joystick, resulting in a hybrid position/velocity control mode using the body and joystick motions, respectively. After providing a comprehensive overview of the control methodology, we present the results of an experimental campaign designed to elucidate the advantages and drawbacks of our approach compared to the conventional joystick-based teleoperation method. The body-link control demonstrates slightly faster task completion and is naturally preferred over joystick velocity control, albeit more physically demanding for tasks with a large range. The hybrid mode, where participants could simultaneously utilize both modes, emerges as a compromise, combining the intuitiveness of the body mode with the extensive task range of the velocity mode. Finally, we provide preliminary observations on potential assistive applications using head motions, especially for operators with limited range of motion in their bodies.
