Nat Wannawas

Physics-informed reinforcement learning via probabilistic co-adjustment functions

Sep 11, 2023

Abstract:Reinforcement learning of real-world tasks is very data-inefficient, and extensive simulation-based modelling has become the dominant approach for training systems. However, in human-robot interaction and many other real-world settings, there is no appropriate one-model-for-all due to differences in individual instances of the system (e.g. different people) or necessary oversimplifications in the simulation models. This leaves two options: (1) learning the individual system's dynamics approximately from data, which requires data-intensive training, or (2) using a complete digital twin of each instance, which may not be realisable in many cases. We introduce two methods, co-kriging adjustment (CKA) and ridge regression adjustment (RRA), as novel ways to combine the advantages of both options. Our adjustment methods are based on an auto-regressive AR1 co-kriging model that we integrate with GP priors. This yields a data- and simulation-efficient way of using simplistic simulation models (e.g., a simple two-link model) and rapidly adapting them to individual instances (e.g., the biomechanics of individual people). Using CKA and RRA, we obtain more accurate uncertainty quantification of the entire system's dynamics than pure GP-based and AR1 methods. We demonstrate the efficiency of co-kriging adjustment with an interpretable reinforcement learning control example, learning to control a biomechanical human arm using only a two-link arm simulation model (offline part) and CKA derived from a small amount of interaction data (on-the-fly online). Our method unlocks an efficient and uncertainty-aware way to implement reinforcement learning methods in real-world complex systems for which only imperfect simulation models exist.

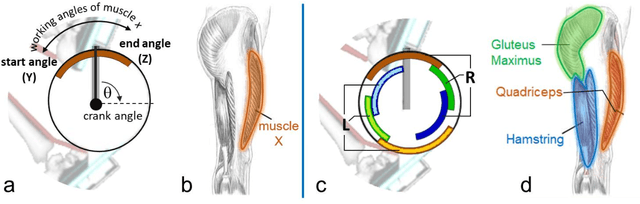

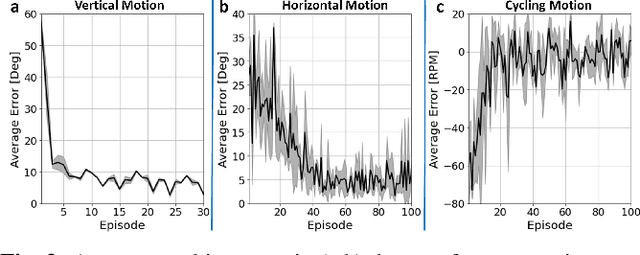

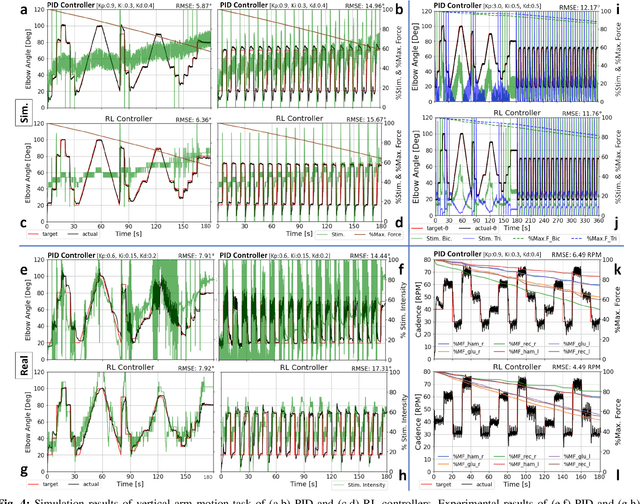

Towards AI-controlled FES-restoration of movements: Learning cycling stimulation pattern with reinforcement learning

Mar 22, 2023

Abstract:Functional electrical stimulation (FES) has been increasingly integrated with other rehabilitation devices, including robots. FES cycling is one of the common FES applications in rehabilitation, which is performed by stimulating leg muscles in a certain pattern. The appropriate pattern varies across individuals and requires manual tuning, which can be time-consuming and challenging for the individual user. Here, we present an AI-based method for finding the patterns, which requires no extra hardware or sensors. Our method has two phases, starting with finding model-based patterns using reinforcement learning and detailed musculoskeletal models. The models, built using open-source software, can be customised through our automated script and can therefore be used by non-technical individuals without extra cost. Next, our method fine-tunes the pattern using real cycling data. We test our method both in simulation and experimentally on a stationary tricycle. In the simulation test, our method can robustly deliver model-based patterns for different cycling configurations. The experimental evaluation shows that our method can find a model-based pattern that induces higher cycling speed than an EMG-based pattern. By using just 100 seconds of cycling data, our method can deliver a fine-tuned pattern that gives better cycling performance. Beyond FES cycling, this work is a showcase, displaying the feasibility and potential of human-in-the-loop AI in real-world rehabilitation.

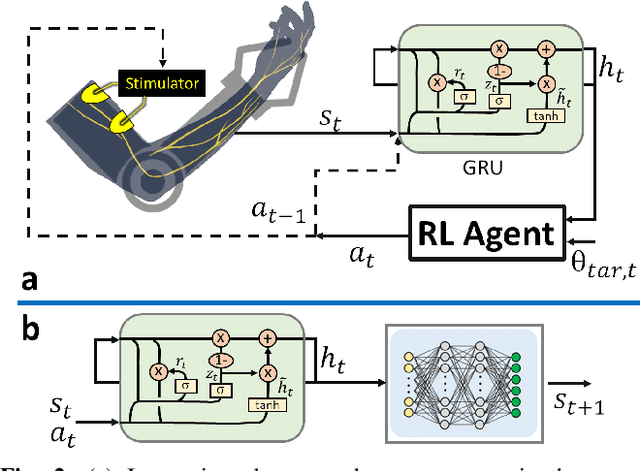

Towards AI-controlled FES-restoration of arm movements: Controlling for progressive muscular fatigue with Gaussian state-space models

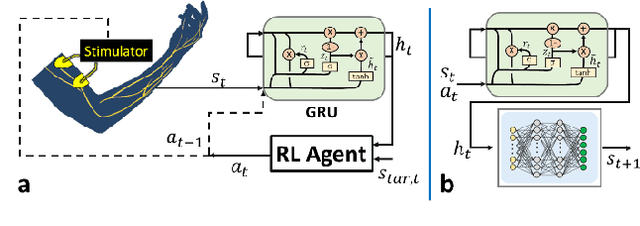

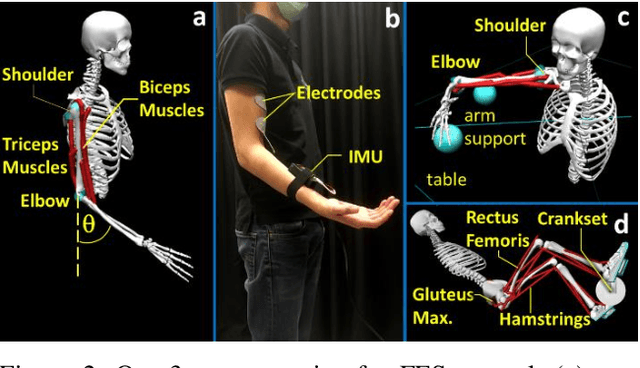

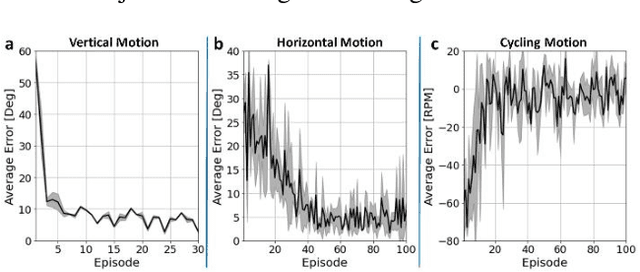

Jan 10, 2023

Abstract:Reaching disability limits an individual's ability to perform daily tasks. Surface Functional Electrical Stimulation (FES) offers a non-invasive solution to restore lost ability. However, inducing desired movements using FES is still an open engineering problem. This problem is accentuated by the complexities of human arms' neuromechanics and the variations across individuals. Reinforcement Learning (RL) emerges as a promising approach to govern customised control rules for different settings. Yet, one remaining challenge for RL in controlling FES systems is unobservable muscle fatigue that progressively changes as an unknown function of the stimulation, thereby breaking the Markovian assumption of RL. In this work, we present a method to address the unobservable muscle fatigue issue, allowing our RL controller to achieve higher control performance. Our method is based on a Gaussian State-Space Model (GSSM) that utilizes recurrent neural networks to learn Markovian state-spaces from partial observations. The GSSM is used as a filter that converts the observations into the state-space representation for RL to preserve the Markovian assumption. Here, we start by presenting a modification of the original GSSM to address an overconfidence issue. We then present the interaction between RL and the modified GSSM, followed by the setup for FES control learning. We test our RL-GSSM system on a planar reaching setting in simulation using a detailed neuromechanical model. The results show that the GSSM can help improve the RL's control performance to a level comparable to the ideal case in which the fatigue is observable.

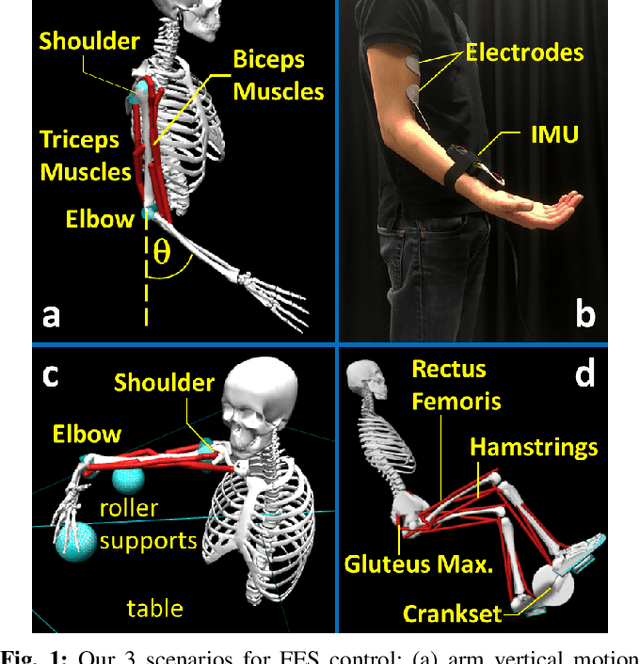

Towards AI-controlled FES-restoration of arm movements: neuromechanics-based reinforcement learning for 3-D reaching

Jan 10, 2023

Abstract:Reaching disabilities affect the quality of life. Functional Electrical Stimulation (FES) can restore lost motor functions. Yet, there remain challenges in controlling FES to induce desired movements. Neuromechanical models are valuable tools for developing FES control methods. However, focusing on the upper extremity areas, several existing models are either overly simplified or too computationally demanding for control purposes. Besides the model-related issues, finding a general method for governing the control rules for different tasks and subjects remains an engineering challenge. Here, we present our approach toward FES-based restoration of arm movements to address those fundamental issues in controlling FES. Firstly, we present our surface-FES-oriented neuromechanical models of human arms built using well-accepted, open-source software. The models are designed to capture significant dynamics in FES controls with minimal computational cost. Our models are customisable and can be used for testing different control methods. Secondly, we present the application of reinforcement learning (RL) as a general method for governing the control rules. In combination, our customisable models and RL-based control method open the possibility of delivering customised FES controls for different subjects and settings with minimal engineering intervention. We demonstrate our approach in planar and 3D settings.

Neuromuscular Reinforcement Learning to Actuate Human Limbs through FES

Sep 16, 2022

Abstract:Functional Electrical Stimulation (FES) is a technique to evoke muscle contraction through low-energy electrical signals. FES can animate paralysed limbs. Yet, an open challenge remains on how to apply FES to achieve desired movements. This challenge is accentuated by the complexities of human bodies and the non-stationarities of the muscles' responses. The former causes difficulties in performing inverse dynamics, and the latter causes control performance to degrade over extended periods of use. Here, we engage the challenge via a data-driven approach. Specifically, we learn to control FES through Reinforcement Learning (RL) which can automatically customise the stimulation for the patients. However, RL typically has Markovian assumptions while FES control systems are non-Markovian because of the non-stationarities. To deal with this problem, we use a recurrent neural network to create Markovian state representations. We cast FES controls into RL problems and train RL agents to control FES in different settings in both simulations and the real world. The results show that our RL controllers can maintain control performances over long periods and have better stimulation characteristics than PID controllers.

Neuromechanics-based Deep Reinforcement Learning of Neurostimulation Control in FES cycling

Apr 02, 2021

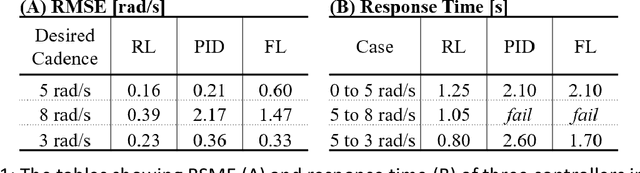

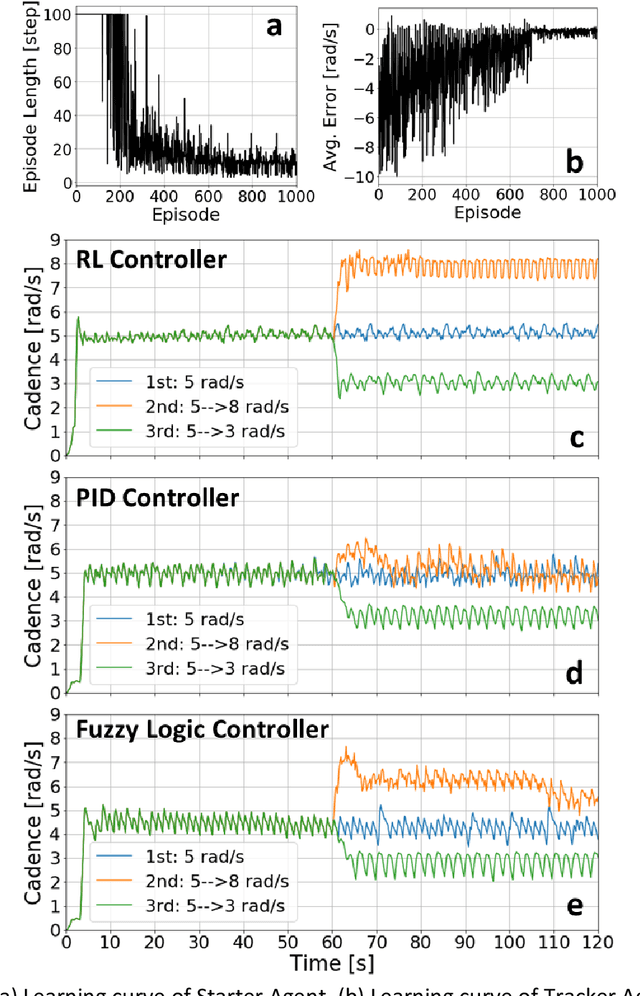

Abstract:Functional Electrical Stimulation (FES) can restore motion to a paralysed person's muscles. Yet, controlling the stimulation of many muscles to restore the practical function of entire limbs is an unsolved problem. Current neurostimulation engineering still relies on 20th Century control approaches and correspondingly shows only modest results that require daily tinkering to operate at all. Here, we present our state-of-the-art Deep Reinforcement Learning (RL) approach developed for real-time adaptive neurostimulation of paralysed legs for FES cycling. Core to our approach is the integration of a personalised neuromechanical component into our reinforcement learning framework, which allows us to train the model efficiently, without demanding extended training sessions with the patient, and to have it work out of the box. Our neuromechanical component merges musculoskeletal models of muscle and tendon function with a multistate model of muscle fatigue, to render the neurostimulation responsive to a paraplegic cyclist's instantaneous muscle capacity. Our RL approach outperforms PID and Fuzzy Logic controllers in accuracy and performance. Crucially, our system learned to stimulate a cyclist's legs from ramping up speed at the start to maintaining a high cadence in steady-state racing as the muscles fatigue. A part of our RL neurostimulation system has been successfully deployed at the Cybathlon 2020 bionic Olympics in the FES discipline with our paraplegic cyclist winning the Silver medal among 9 competing teams.

I am Robot: Neuromuscular Reinforcement Learning to Actuate Human Limbs through Functional Electrical Stimulation

Mar 09, 2021

Abstract:Human movement disorders or paralysis lead to the loss of control of muscle activation and thus motor control. Functional Electrical Stimulation (FES) is an established and safe technique for contracting muscles by stimulating the skin above a muscle to induce its contraction. However, an open challenge remains on how to restore motor abilities to human limbs through FES, as the problem of controlling the stimulation is unclear. We are taking a robotics perspective on this problem, by developing robot learning algorithms that control the ultimate humanoid robot, the human body, through electrical muscle stimulation. Human muscles are not trivial to control as actuators because their force production is non-stationary as a result of fatigue and other internal state changes, in contrast to robot actuators, which are well-understood and stationary over broad operation ranges. We present our Deep Reinforcement Learning approach to the control of human muscles with FES, using a recurrent neural network for dynamic state representation, to overcome the unobserved elements of the behaviour of human muscles under external stimulation. We demonstrate our technique both in neuromuscular simulations and experimentally on a human. Our results show that our controller can learn to manipulate human muscles, applying appropriate levels of stimulation to achieve the given tasks while compensating for advancing muscle fatigue which arises throughout the tasks. Additionally, our technique can learn quickly enough to be implemented in real-world human-in-the-loop settings.
