Mahendran Subramanian

Applications of Machine Learning in Chemical and Biological Oceanography

Sep 23, 2022

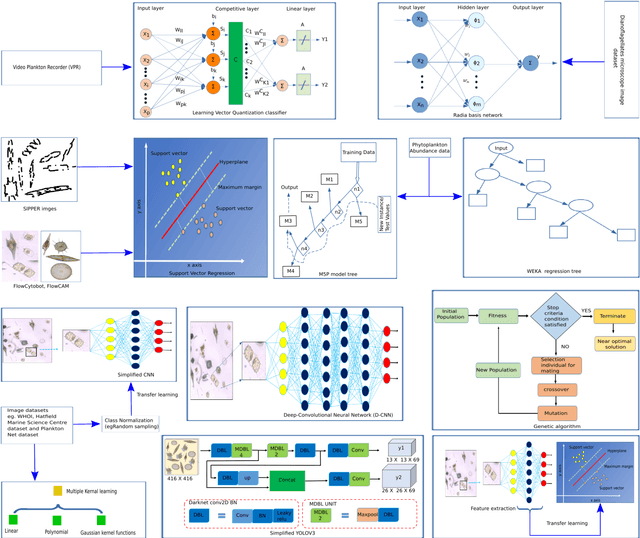

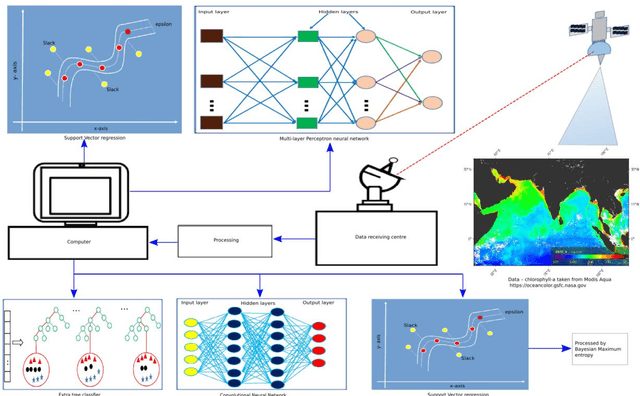

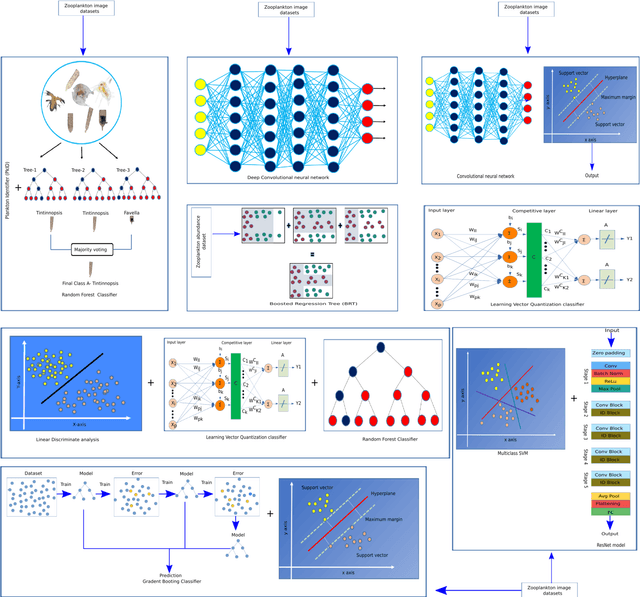

Abstract: Machine learning (ML) refers to computer algorithms that predict a meaningful output or categorise complex systems based on large amounts of data. ML is applied in a variety of areas, including natural science, engineering, space exploration, and even game development. This article focuses on the use of machine learning in chemical and biological oceanography. ML is a promising tool for predicting global fixed nitrogen levels, the partial pressure of carbon dioxide, and other chemical properties. In biological oceanography, machine learning is also used to detect planktonic forms from various images (i.e., microscopy, FlowCAM and video recorder), spectrometers, and other signal processing techniques. Moreover, ML has successfully classified mammals by their acoustics and detected endangered mammalian and fish species in specific environments. Most importantly, using environmental data, ML has proved to be an effective method for predicting hypoxic conditions and harmful algal bloom events, both important measurements for environmental monitoring. Furthermore, machine learning has been used to construct a number of databases for various species that will be useful to other researchers, and the creation of new algorithms will help the marine research community better comprehend the chemistry and biology of the ocean.

Neuromechanics-based Deep Reinforcement Learning of Neurostimulation Control in FES cycling

Apr 02, 2021

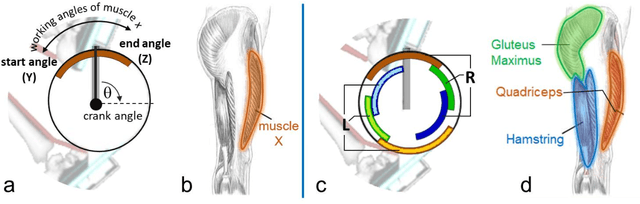

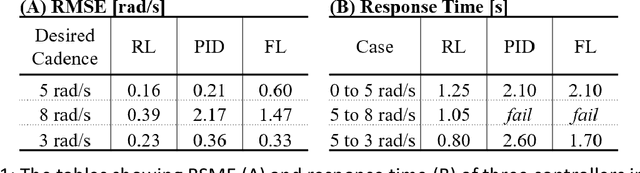

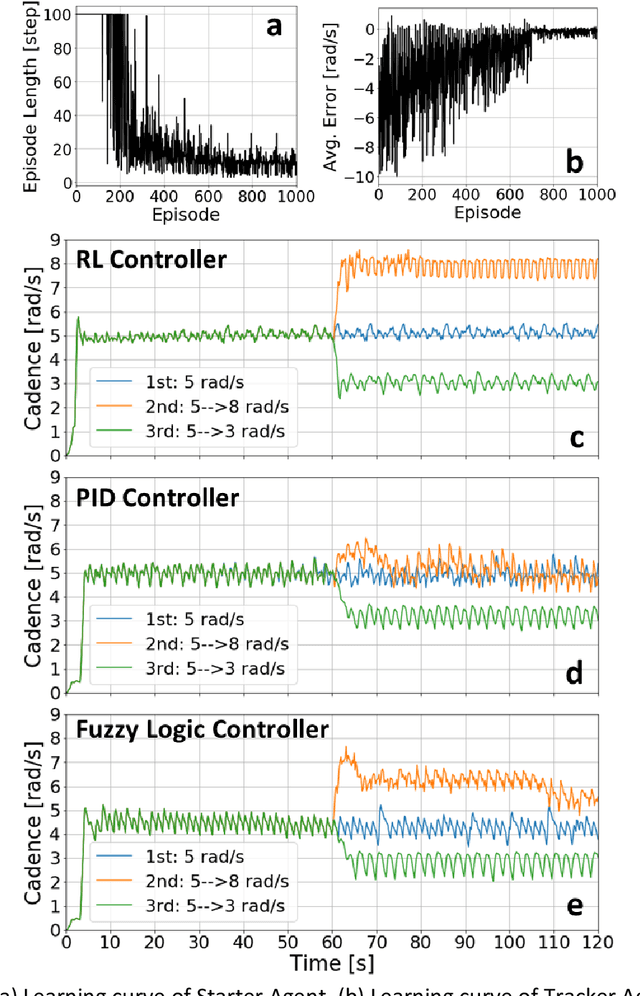

Abstract: Functional Electrical Stimulation (FES) can restore motion to a paralysed person's muscles. Yet controlling the stimulation of many muscles to restore the practical function of entire limbs is an unsolved problem. Current neurostimulation engineering still relies on 20th-century control approaches and correspondingly shows only modest results that require daily tinkering to operate at all. Here, we present our state-of-the-art Deep Reinforcement Learning (RL) approach, developed for real-time adaptive neurostimulation of paralysed legs for FES cycling. Core to our approach is the integration of a personalised neuromechanical component into our reinforcement learning framework, which allows us to train the model efficiently, without demanding extended training sessions with the patient, so that it works out of the box. Our neuromechanical component merges musculoskeletal models of muscle and/or tendon function with a multistate model of muscle fatigue, to render the neurostimulation responsive to a paraplegic cyclist's instantaneous muscle capacity. Our RL approach outperforms PID and Fuzzy Logic controllers in accuracy and performance. Crucially, our system learned to stimulate a cyclist's legs from ramping up speed at the start to maintaining a high cadence in steady-state racing as the muscles fatigue. A part of our RL neurostimulation system was successfully deployed at the Cybathlon 2020 bionic Olympics in the FES discipline, with our paraplegic cyclist winning the Silver medal among 9 competing teams.

Gaze-contingent decoding of human navigation intention on an autonomous wheelchair platform

Mar 04, 2021

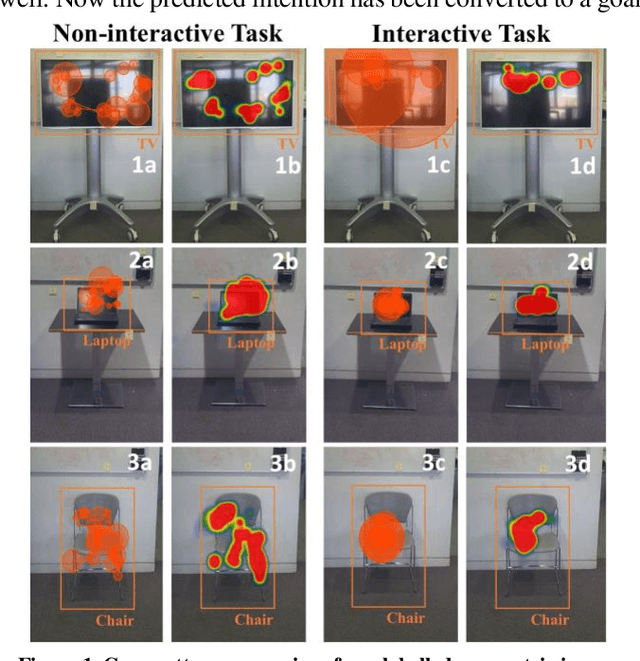

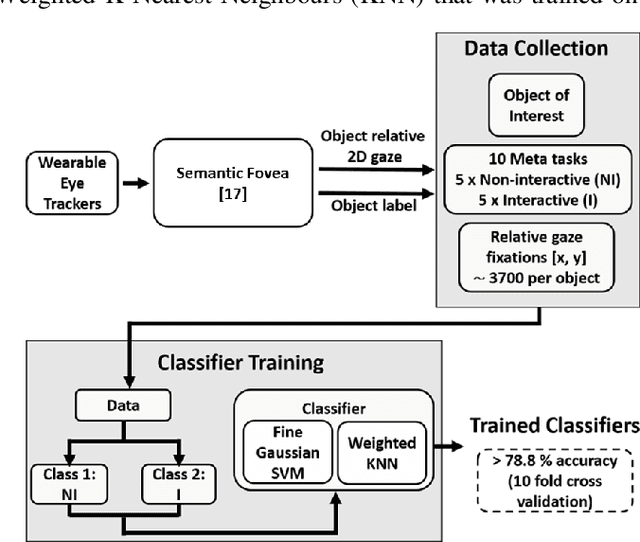

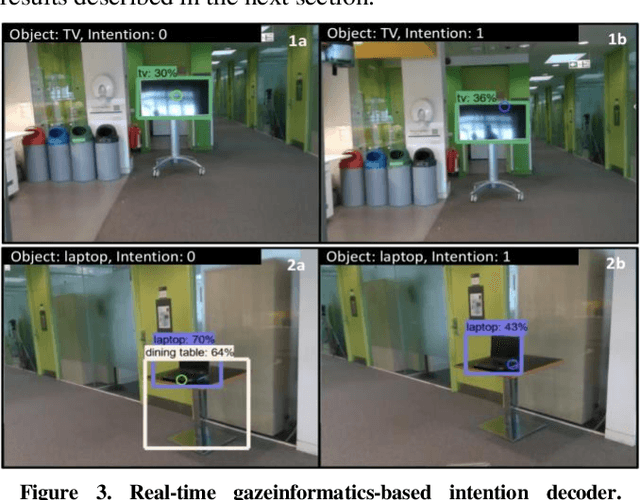

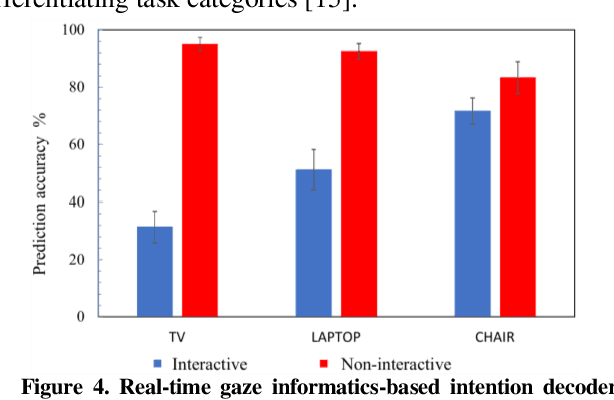

Abstract: We have pioneered the Where-You-Look-Is Where-You-Go approach to controlling mobility platforms by decoding how the user looks at the environment to understand where they want to navigate their mobility device. However, only some natural eye-movements are relevant for decoding action intention, and many are not, which poses a challenge for decoding known as the Midas Touch Problem. Here, we present a new solution that combines (1) deep computer vision to understand what object a user is looking at in their field of view, (2) an analysis of where on the object's bounding box the user is looking, and (3) a simple machine learning classifier to determine whether the overt visual attention on the object is predictive of a navigation intention to that object. Our decoding system ultimately determines whether the user wants to drive to, e.g., a door or is just looking at it. Crucially, we find that when users look at an object and imagine they are moving towards it, the resulting eye-movements from this motor imagery (akin to neural interfaces) remain decodable. Once a driving intention, and thus also the location, is detected, our system instructs our autonomous wheelchair platform, the A.Eye-Drive, to navigate to the desired object while avoiding static and moving obstacles. Thus, for navigation purposes, we have realised a cognitive-level human interface, as it requires the user only to cognitively interact with the desired goal, not to continuously steer their wheelchair to the target (low-level human interfacing).
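The bounding-box analysis described in this abstract can be illustrated with a minimal sketch. The abstract does not say which features or which classifier were used, so everything here is an assumption made for illustration: the `Box` type, the function names, and the choice of features (normalised gaze position inside the box and the fraction of gaze samples that land on the object). Features like these could then be passed to a simple classifier.

```python
from typing import Dict, NamedTuple, Optional, Sequence, Tuple


class Box(NamedTuple):
    """Hypothetical axis-aligned bounding box in image pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def normalise_gaze(gaze: Tuple[float, float], box: Box) -> Tuple[float, float]:
    """Map a gaze point to box-relative coordinates; (0, 0) is the top-left corner."""
    width = box.x_max - box.x_min
    height = box.y_max - box.y_min
    if width <= 0 or height <= 0:
        raise ValueError("bounding box must have positive width and height")
    return (gaze[0] - box.x_min) / width, (gaze[1] - box.y_min) / height


def gaze_features(
    samples: Sequence[Tuple[float, float]], box: Box
) -> Dict[str, Optional[float]]:
    """Summarise gaze samples relative to one object (illustrative features only)."""
    normalised = [normalise_gaze(g, box) for g in samples]
    # Keep only samples that fall on the object.
    inside = [(u, v) for u, v in normalised if 0.0 <= u <= 1.0 and 0.0 <= v <= 1.0]
    dwell = len(inside) / len(samples) if samples else 0.0
    if not inside:
        return {"dwell_fraction": dwell, "mean_u": None, "mean_v": None}
    mean_u = sum(u for u, _ in inside) / len(inside)
    mean_v = sum(v for _, v in inside) / len(inside)
    return {"dwell_fraction": dwell, "mean_u": mean_u, "mean_v": mean_v}


if __name__ == "__main__":
    door = Box(100, 50, 300, 250)
    gaze = [(200, 150), (150, 100), (400, 400), (250, 200)]
    print(gaze_features(gaze, door))
```

Normalising by the box size makes the features independent of how large the object appears in the camera image, which is one plausible reason to work in box-relative coordinates.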

Mechanomyography based closed-loop Functional Electrical Stimulation cycling system

Jul 30, 2018

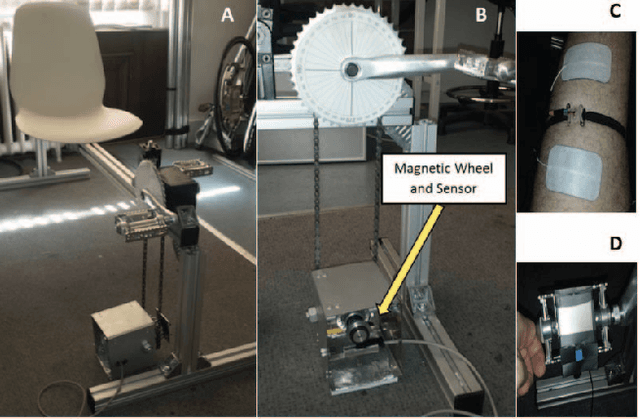

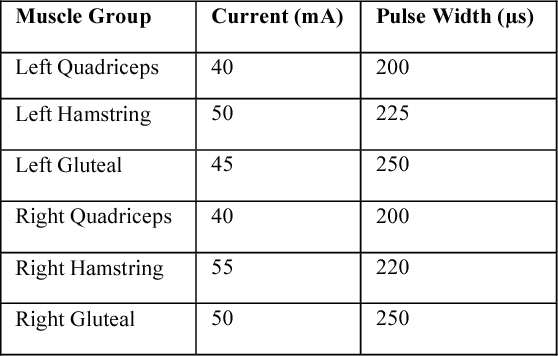

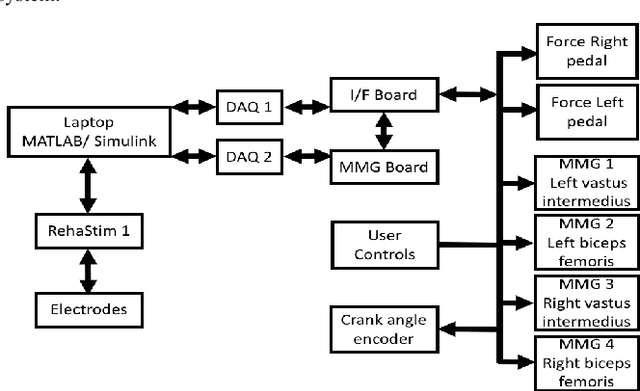

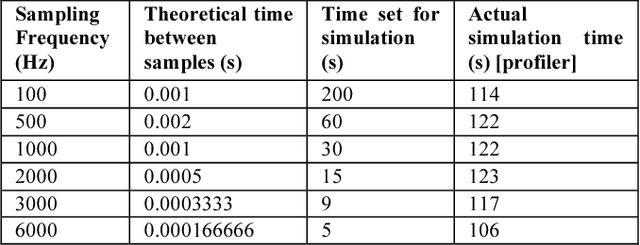

Abstract: Functional Electrical Stimulation (FES) systems are successful in restoring motor function and supporting paralyzed users. Commercially available FES products are open loop, meaning that the system is unable to adapt to changing conditions of the user and their muscles, which results in muscle fatigue and poor stimulation protocols. This is because it is difficult to close the loop between stimulation and monitoring of muscle contraction using adaptive stimulation. FES causes electrical artefacts, which make it challenging to monitor muscle contractions with traditional methods such as electromyography (EMG). We look to overcome this limitation by combining FES with novel mechanomyographic (MMG) sensors so that muscle activity can be monitored during stimulation in real time. To provide a meaningful task, we built an FES cycling rig with a software interface that enabled us to perform adaptive recording and stimulation. We then combined this with sensors to record the forces applied to the pedals using force sensitive resistors (FSRs), the crank angle position using a magnetic incremental encoder, and inputs from the user using switches and a potentiometer. We illustrated this with a closed-loop stimulation algorithm that used the inputs from the sensors to control the output of a programmable RehaStim 1 FES stimulator (Hasomed) in real time. This recumbent bicycle rig was used as a testing platform for FES cycling. The algorithm was designed to respond to a change in the speed (RPM) requested by the user and to change the stimulation power (as a percentage of the maximum current in mA) until this speed was achieved, and then maintain it.
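The behaviour described at the end of this abstract (raise or lower the stimulation power until the requested cadence is reached, then hold it) can be sketched as a simple incremental control loop. This is not the authors' algorithm, whose details the abstract does not give. The function name, the step size, the deadband, and the linear toy relationship between power and cadence are all assumptions made for illustration, and no real stimulator API is used.

```python
def update_stimulation(
    power_pct: float,
    target_rpm: float,
    measured_rpm: float,
    step_pct: float = 1.0,      # assumed adjustment per control tick
    deadband_rpm: float = 1.0,  # assumed tolerance around the target cadence
) -> float:
    """Return the next stimulation power as a percentage of maximum current."""
    error = target_rpm - measured_rpm
    if error > deadband_rpm:
        power_pct += step_pct   # pedalling too slowly: stimulate harder
    elif error < -deadband_rpm:
        power_pct -= step_pct   # pedalling too fast: back off
    # Inside the deadband the power is held, which maintains the cadence.
    return min(100.0, max(0.0, power_pct))


def toy_cadence(power_pct: float) -> float:
    """Hypothetical stand-in for the rider and rig: cadence grows with power."""
    return 0.6 * power_pct


if __name__ == "__main__":
    power = 0.0
    for _ in range(200):
        power = update_stimulation(power, target_rpm=30.0,
                                   measured_rpm=toy_cadence(power))
    print(f"power={power:.1f}%  cadence={toy_cadence(power):.1f} RPM")
```

Clamping the output to the 0 to 100 % range keeps the command within the stimulator's limits even if the requested cadence cannot be reached. A real system would also need to account for muscle fatigue, which this toy model ignores.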
