Naofumi Hama

Notes on Applicability of Explainable AI Methods to Machine Learning Models Using Features Extracted by Persistent Homology

Oct 15, 2023

Abstract: Data analysis that uses the output of topological data analysis (TDA) as input for machine learning algorithms has been the subject of extensive research. This approach offers a means of capturing the global structure of data. Persistent homology (PH), a common methodology within the field of TDA, has found wide-ranging applications in machine learning. One of the key reasons for the success of the PH-ML pipeline lies in the deterministic nature of feature extraction conducted through PH. The ability to achieve satisfactory levels of accuracy with relatively simple downstream machine learning models, when processing these extracted features, underlines the pipeline's superior interpretability. However, it must be noted that this interpretation has encountered issues. Specifically, it fails to accurately reflect the feasible parameter region in the data generation process, and the physical or chemical constraints that restrict this process. Against this backdrop, we explore the potential application of explainable AI methodologies to this PH-ML pipeline. We apply this approach to the specific problem of predicting gas adsorption in metal-organic frameworks and demonstrate that it can yield suggestive results. The code to reproduce our results is available at https://github.com/naofumihama/xai_ph_ml

Model free Shapley values for high dimensional data

Nov 15, 2022

Abstract: A model-agnostic variable importance method can be used with arbitrary prediction functions. Here we present some model-free methods that do not require access to the prediction function. This is useful when that function is proprietary and not available, or just extremely expensive. It is also useful when studying residuals from a model. The cohort Shapley (CS) method is model-free but has exponential cost in the dimension of the input space. A supervised on-manifold Shapley method from Frye et al. (2020) is also model-free but requires as input a second black box model that has to be trained for the Shapley value problem. We introduce an integrated gradient version of cohort Shapley, called IGCS, with cost $\mathcal{O}(nd)$. We show that over the vast majority of the relevant unit cube the IGCS value function is close to a multilinear function for which IGCS matches CS. We use some area under the curve (AUC) measures to quantify the performance of IGCS. On a problem from high energy physics we verify that IGCS has nearly the same AUCs as CS. We also use it on a problem from computational chemistry in 1024 variables. We see there that IGCS attains much higher AUCs than we get from Monte Carlo sampling. The code is publicly available at https://github.com/cohortshapley/cohortintgrad.
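
To make the exponential cost of exact cohort Shapley concrete, here is a minimal sketch. It assumes that two subjects count as "similar" on a feature when their values are exactly equal, which is a simplification chosen here for illustration. The names `cohort_value` and `cohort_shapley` and the toy data are hypothetical and are not taken from the linked repository. Only the data `(X, y)` is used, with no prediction function.

```python
from itertools import combinations
from math import factorial

import numpy as np


def cohort_value(X, y, t, S):
    """Mean response over subjects that match target t on every feature in S."""
    mask = np.ones(len(y), dtype=bool)
    for j in S:
        mask &= X[:, j] == X[t, j]  # assumption: similarity = exact equality
    return y[mask].mean()  # the cohort always contains t, so it is never empty


def cohort_shapley(X, y, t):
    """Exact Shapley values of the cohort-mean game (cost exponential in d)."""
    d = X.shape[1]
    phi = np.zeros(d)
    for j in range(d):
        others = [k for k in range(d) if k != j]
        for r in range(d):
            w = factorial(r) * factorial(d - r - 1) / factorial(d)
            for S in combinations(others, r):
                gain = cohort_value(X, y, t, S + (j,)) - cohort_value(X, y, t, S)
                phi[j] += w * gain
    return phi


# Toy data (hypothetical): five subjects, two binary features.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1], [1, 1]])
y = np.array([0.0, 1.0, 2.0, 4.0, 6.0])
phi = cohort_shapley(X, y, t=3)
```

In this sketch the values sum to the gap between the mean response of the fully refined cohort and the overall mean. The inner loop over all subsets is what grows exponentially with the number of features.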

Deletion and Insertion Tests in Regression Models

May 25, 2022

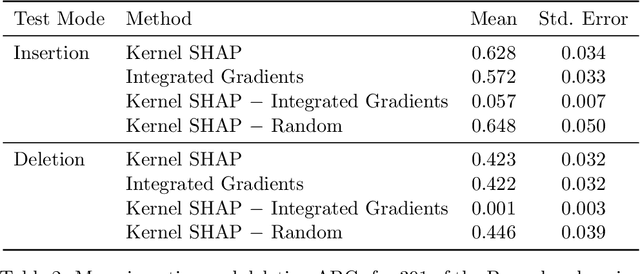

Abstract: A basic task in explainable AI (XAI) is to identify the most important features behind a prediction made by a black box function $f$. The insertion and deletion tests of Petsiuk et al. (2018) are used to judge the quality of algorithms that rank pixels from most to least important for a classification. Motivated by regression problems, we establish a formula for their area under the curve (AUC) criteria in terms of certain main effects and interactions in an anchored decomposition of $f$. We find an expression for the expected value of the AUC under a random ordering of inputs to $f$ and propose an alternative area above a straight line for the regression setting. We use this criterion to compare feature importances computed by integrated gradients (IG) to those computed by Kernel SHAP (KS). Exact computation of KS grows exponentially with dimension, while that of IG grows linearly with dimension. In two data sets including binary variables we find that KS is superior to IG in insertion and deletion tests, but only by a very small amount. Our comparison problems include some binary inputs that pose a challenge to IG because it must use values between the possible variable levels. We show that IG will match KS when $f$ is an additive function plus a multilinear function of the variables. This includes a multilinear interpolation over the binary variables that would cause IG to have exponential cost in a naive implementation.
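
The claim that IG matches KS for an additive plus multilinear $f$ can be checked numerically on a toy case. The sketch below is illustrative only: the function `f`, the target point, and the zero baseline are hypothetical choices, and exact baseline Shapley values (all coalitions enumerated against a single baseline) are used here as a stand-in for an exact Kernel SHAP computation, which is an assumption of this example.

```python
from itertools import combinations
from math import factorial

import numpy as np


def f(x):
    # Hypothetical toy function: additive part plus one multilinear term.
    return np.sin(x[0]) + x[1] ** 2 + 3.0 * x[0] * x[1] * x[2]


def grad_f(x):
    # Analytic gradient of the toy function above.
    return np.array([
        np.cos(x[0]) + 3.0 * x[1] * x[2],
        2.0 * x[1] + 3.0 * x[0] * x[2],
        3.0 * x[0] * x[1],
    ])


def integrated_gradients(x, b, m=2000):
    """Midpoint-rule approximation of IG along the straight path from b to x."""
    ts = (np.arange(m) + 0.5) / m
    grads = np.array([grad_f(b + t * (x - b)) for t in ts])
    return (x - b) * grads.mean(axis=0)


def baseline_shapley(x, b):
    """Exact Shapley values of v(S) = f(x on S, b elsewhere)."""
    d = len(x)

    def v(S):
        z = b.copy()
        for j in S:
            z[j] = x[j]
        return f(z)

    phi = np.zeros(d)
    for j in range(d):
        others = [k for k in range(d) if k != j]
        for r in range(d):
            w = factorial(r) * factorial(d - r - 1) / factorial(d)
            for S in combinations(others, r):
                phi[j] += w * (v(S + (j,)) - v(S))
    return phi


x = np.array([1.0, 2.0, 0.5])
b = np.zeros(3)
ig = integrated_gradients(x, b)
sh = baseline_shapley(x, b)
```

Under these assumptions the two attribution vectors agree up to quadrature error, while the Shapley routine visits every subset of features and the IG routine only needs gradient evaluations along one path.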
