Deletion and Insertion Tests in Regression Models


May 25, 2022

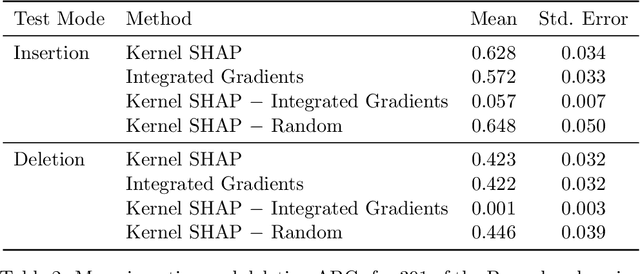

A basic task in explainable AI (XAI) is to identify the most important features behind a prediction made by a black-box function $f$. The insertion and deletion tests of \cite{petsiuk2018rise} are used to judge the quality of algorithms that rank pixels from most to least important for a classification. Motivated by regression problems, we establish a formula for their area under the curve (AUC) criteria in terms of certain main effects and interactions in an anchored decomposition of $f$. We find an expression for the expected value of the AUC under a random ordering of inputs to $f$, and we propose an alternative criterion for the regression setting: the area above a straight line. We use this criterion to compare feature importances computed by integrated gradients (IG) to those computed by Kernel SHAP (KS). The cost of exact computation of KS grows exponentially with dimension, while that of IG grows linearly. In two data sets that include binary variables, we find that KS is superior to IG in insertion and deletion tests, but only by a very small amount. Our comparison problems include some binary inputs that pose a challenge to IG, because it must evaluate $f$ at values between the possible variable levels. We show that IG will match KS when $f$ is an additive function plus a multilinear function of the variables. This includes a multilinear interpolation over the binary variables that would cause IG to have exponential cost in a naive implementation.
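To make the insertion and deletion tests concrete, the following is a minimal Python sketch for a scalar-valued $f$. It is an illustration under stated assumptions, not the paper's implementation: the helper names (`insertion_curve`, `deletion_curve`, `auc`, `area_above_line`), the trapezoid rule with unit spacing, the reading of "area above a straight line" as the area between the curve and the line joining its two endpoints, and the toy function `f` are all hypothetical choices made here.

```python
import numpy as np

def insertion_curve(f, x, baseline, order):
    """f evaluated as features of x are copied into the baseline, one at a time."""
    z = np.array(baseline, dtype=float)
    values = [f(z)]
    for j in order:              # most important feature first
        z[j] = x[j]
        values.append(f(z))
    return np.array(values)

def deletion_curve(f, x, baseline, order):
    """f evaluated as features of x are replaced by baseline values, one at a time."""
    z = np.array(x, dtype=float)
    values = [f(z)]
    for j in order:
        z[j] = baseline[j]
        values.append(f(z))
    return np.array(values)

def auc(values):
    """Trapezoid-rule area under the curve with unit spacing (an assumed convention)."""
    v = np.asarray(values, dtype=float)
    return float(np.sum((v[:-1] + v[1:]) / 2.0))

def area_above_line(values):
    """Area between the curve and the straight line joining its endpoints
    (assumed reading of the 'area above a straight line' criterion)."""
    v = np.asarray(values, dtype=float)
    line = np.linspace(v[0], v[-1], len(v))
    return auc(v - line)

# Hypothetical toy regression function: additive part plus one multilinear interaction.
def f(z):
    return 3.0 * z[0] + 1.0 * z[1] + 2.0 * z[0] * z[2]

x = np.array([1.0, 1.0, 1.0])         # point being explained
baseline = np.array([0.0, 0.0, 0.0])  # reference (anchor) point
order = [0, 2, 1]                     # a candidate importance ranking

ins = insertion_curve(f, x, baseline, order)
print(ins, auc(ins), area_above_line(ins))
```

For an insertion test, a good ranking should raise $f$ quickly, giving a large area; for a deletion test it should lower $f$ quickly, giving a small one. Subtracting the straight line removes the part of the area that depends only on $f(x)$ and the value of $f$ at the baseline, neither of which depends on the ranking being judged.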
