Nanguang Chen

Q-space Guided Collaborative Attention Translation Network for Flexible Diffusion-Weighted Images Synthesis

May 14, 2025

Abstract: In this study, we propose a novel Q-space Guided Collaborative Attention Translation Network (Q-CATN) for multi-shell, high-angular resolution DWI (MS-HARDI) synthesis from flexible q-space sampling, leveraging commonly acquired structural MRI data. Q-CATN employs a collaborative attention mechanism to effectively extract complementary information from multiple modalities and dynamically adjust its internal representations based on flexible q-space information, eliminating the need for fixed sampling schemes. Additionally, we introduce a range of task-specific constraints to preserve anatomical fidelity in DWI, enabling Q-CATN to accurately learn the intrinsic relationships between directional DWI signal distributions and q-space. Extensive experiments on the Human Connectome Project (HCP) dataset demonstrate that Q-CATN outperforms existing methods, including 1D-qDL, 2D-qDL, MESC-SD, and QGAN, in estimating parameter maps and fiber tracts both quantitatively and qualitatively, while preserving fine-grained details. Notably, its ability to accommodate flexible q-space sampling highlights its potential as a promising toolkit for clinical and research applications. Our code is available at https://github.com/Idea89560041/Q-CATN.

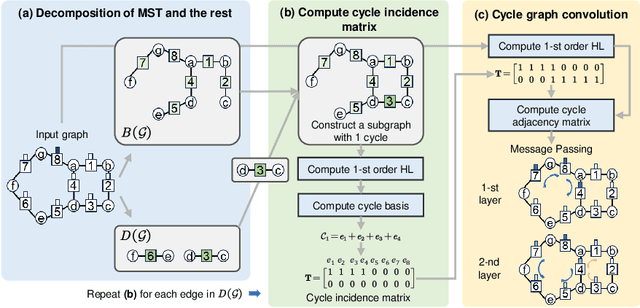

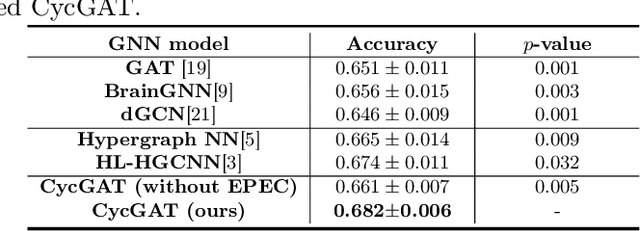

Topological Cycle Graph Attention Network for Brain Functional Connectivity

Mar 28, 2024

Abstract: In this study, we introduce a novel Topological Cycle Graph Attention Network (CycGAT), designed to delineate a functional backbone within a brain functional graph (key pathways essential for signal transmission) from non-essential, redundant connections that form cycles around this core structure. We first introduce a cycle incidence matrix that establishes an independent cycle basis within a graph, mapping its relationship with edges. We propose a cycle graph convolution that leverages a cycle adjacency matrix, derived from the cycle incidence matrix, to specifically filter edge signals in a domain of cycles. Additionally, we strengthen the representation power of the cycle graph convolution by adding an attention mechanism, which is further augmented by the introduction of edge positional encodings in cycles, to enhance the topological awareness of CycGAT. We demonstrate CycGAT's localization through simulation and its efficacy on fMRI data from the ABCD study (n=8765), comparing it with baseline models. CycGAT outperforms these models, identifying a functional backbone with significantly fewer cycles, crucial for understanding neural circuits related to general intelligence. Our code will be released once accepted.
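
To make the cycle incidence matrix concrete, here is a minimal sketch rather than the authors' implementation. It assumes a simple undirected graph, builds an independent cycle basis from a BFS spanning forest (one fundamental cycle per non-tree edge), and records which edges lie on which basis cycle. The function names `cycle_incidence` and `cycle_adjacency` are hypothetical, and the edge-by-edge "shared cycles" matrix is only one plausible way to derive a cycle adjacency matrix from the incidence matrix; the abstract does not give the exact construction.

```python
from collections import deque


def cycle_incidence(num_nodes, edges):
    """Return (C, cycles) for a simple undirected graph.

    C[e][c] == 1 when edge e lies on basis cycle c. The basis is the
    fundamental cycle basis of a BFS spanning forest (an illustrative
    choice, not necessarily the basis used in the paper).
    """
    adj = [[] for _ in range(num_nodes)]
    for idx, (u, v) in enumerate(edges):
        adj[u].append((v, idx))
        adj[v].append((u, idx))

    parent = [None] * num_nodes       # parent node in the BFS forest
    parent_edge = [None] * num_nodes  # index of the edge to the parent
    depth = [0] * num_nodes
    seen = [False] * num_nodes
    tree_edges = set()
    for root in range(num_nodes):
        if seen[root]:
            continue
        seen[root] = True
        queue = deque([root])
        while queue:
            u = queue.popleft()
            for v, idx in adj[u]:
                if not seen[v]:
                    seen[v] = True
                    parent[v], parent_edge[v] = u, idx
                    depth[v] = depth[u] + 1
                    tree_edges.add(idx)
                    queue.append(v)

    cycles = []
    for idx, (u, v) in enumerate(edges):
        if idx in tree_edges:
            continue
        # A non-tree edge closes exactly one cycle with the tree path u..v.
        cycle = [idx]
        while u != v:
            if depth[u] < depth[v]:
                u, v = v, u
            cycle.append(parent_edge[u])
            u = parent[u]
        cycles.append(sorted(cycle))

    C = [[0] * len(cycles) for _ in edges]
    for c, cycle in enumerate(cycles):
        for e in cycle:
            C[e][c] = 1
    return C, cycles


def cycle_adjacency(C):
    """Assumed construction: count basis cycles shared by two distinct edges."""
    n = len(C)
    return [[0 if i == j else sum(a * b for a, b in zip(C[i], C[j]))
             for j in range(n)] for i in range(n)]


# Toy graph: two triangles sharing edge 2, i.e. the edge (0, 2).
edges = [(0, 1), (1, 2), (0, 2), (2, 3), (0, 3)]
C, cycles = cycle_incidence(4, edges)
A = cycle_adjacency(C)
print(cycles)  # [[0, 1, 2], [2, 3, 4]]
```

For a connected graph the number of basis cycles equals the number of edges minus the number of nodes plus one, which is 2 for the toy graph. Edge 2 lies on both cycles, so its row in `C` is `[1, 1]`.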

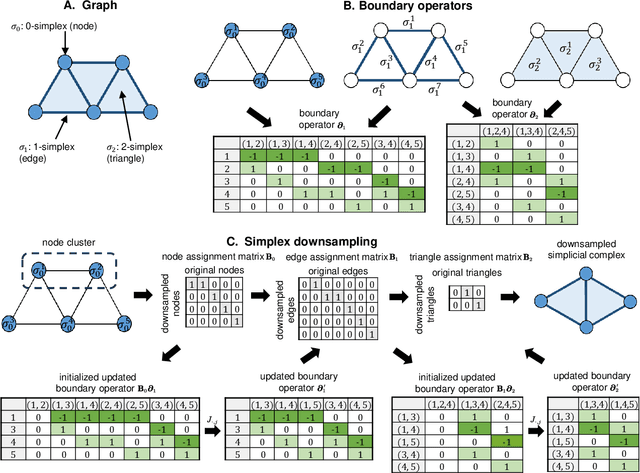

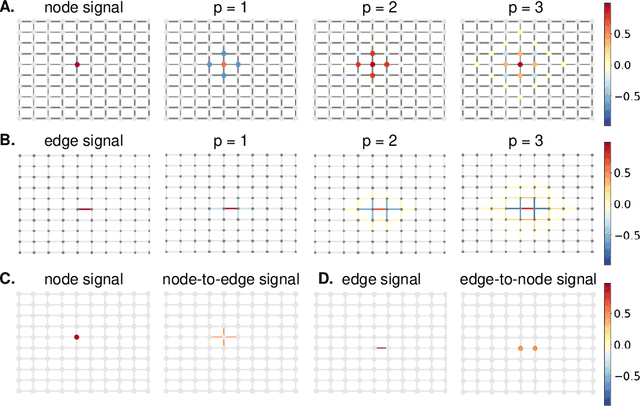

Advancing Graph Neural Networks with HL-HGAT: A Hodge-Laplacian and Attention Mechanism Approach for Heterogeneous Graph-Structured Data

Mar 11, 2024

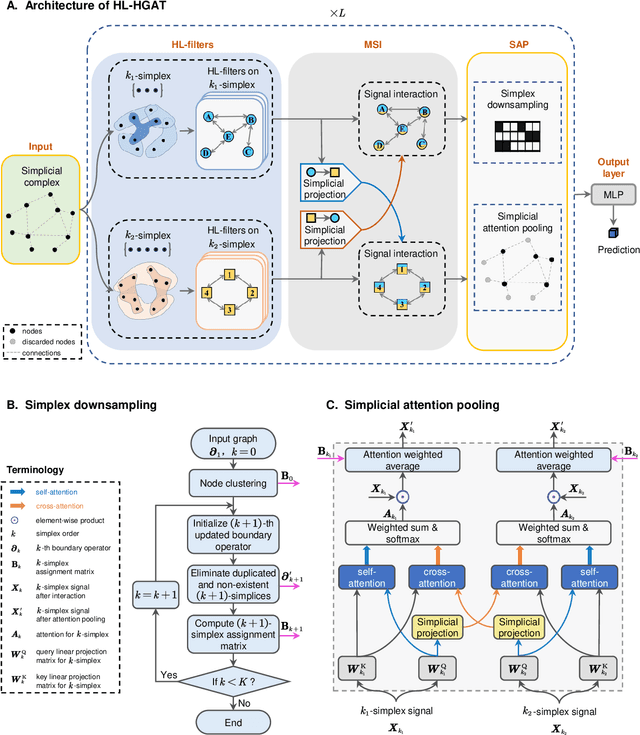

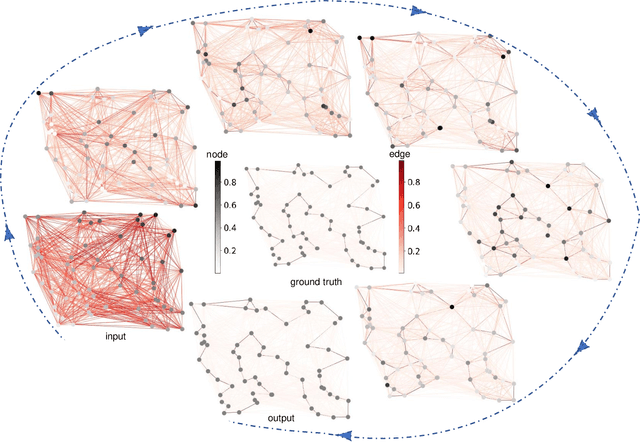

Abstract: Graph neural networks (GNNs) have proven effective in capturing relationships among nodes in a graph. This study introduces a novel perspective by considering a graph as a simplicial complex, encompassing nodes, edges, triangles, and $k$-simplices, enabling the definition of graph-structured data on any $k$-simplices. Our contribution is the Hodge-Laplacian heterogeneous graph attention network (HL-HGAT), designed to learn heterogeneous signal representations across $k$-simplices. The HL-HGAT incorporates three key components: HL convolutional filters (HL-filters), simplicial projection (SP), and simplicial attention pooling (SAP) operators, applied to $k$-simplices. HL-filters leverage the unique topology of $k$-simplices encoded by the Hodge-Laplacian (HL) operator, operating within the spectral domain of the $k$-th HL operator. To address computation challenges, we introduce a polynomial approximation for HL-filters, exhibiting spatial localization properties. Additionally, we propose a pooling operator to coarsen $k$-simplices, combining features through simplicial attention mechanisms of self-attention and cross-attention via transformers and SP operators, capturing topological interconnections across multiple dimensions of simplices. The HL-HGAT is comprehensively evaluated across diverse graph applications, including NP-hard problems, graph multi-label and classification challenges, and graph regression tasks in logistics, computer vision, biology, chemistry, and neuroscience. The results demonstrate the model's efficacy and versatility in handling a wide range of graph-based scenarios.
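
As a rough illustration of the Hodge-Laplacian operator and a polynomial filter on edge signals, the sketch below uses the standard definition $L_1 = B_1^\top B_1 + B_2 B_2^\top$, where $B_1$ and $B_2$ are the signed node-to-edge and edge-to-triangle boundary matrices. It is not the HL-HGAT code. The helper names are hypothetical, and a plain monomial polynomial $\sum_p \theta_p L_1^p x$ is assumed in place of whatever polynomial family the paper uses.

```python
def boundary_matrix(faces, simplices):
    """Signed boundary matrix: rows are (k-1)-simplices, columns k-simplices.

    Simplices are sorted vertex tuples; dropping the i-th vertex of a
    simplex gives a face with sign (-1)**i.
    """
    row = {f: i for i, f in enumerate(faces)}
    B = [[0] * len(simplices) for _ in faces]
    for j, s in enumerate(simplices):
        for drop in range(len(s)):
            face = s[:drop] + s[drop + 1:]
            B[row[face]][j] = (-1) ** drop
    return B


def hodge_laplacian_1(B1, B2):
    """Edge Laplacian L1 = B1^T B1 + B2 B2^T (B2 has one row per edge)."""
    n_edges = len(B2)
    L = [[0] * n_edges for _ in range(n_edges)]
    for i in range(n_edges):
        for j in range(n_edges):
            down = sum(r[i] * r[j] for r in B1)            # coupling via shared nodes
            up = sum(a * b for a, b in zip(B2[i], B2[j]))  # coupling via shared triangles
            L[i][j] = down + up
    return L


def polynomial_filter(L, x, theta):
    """Apply sum_p theta[p] * L**p to signal x (monomial basis, an assumption)."""
    term = list(x)
    out = [theta[0] * v for v in term]
    for coeff in theta[1:]:
        term = [sum(a * b for a, b in zip(row, term)) for row in L]
        out = [o + coeff * t for o, t in zip(out, term)]
    return out


# Toy complex: filled triangle (0, 1, 2) with a dangling edge (2, 3).
nodes = [(0,), (1,), (2,), (3,)]
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
triangles = [(0, 1, 2)]

B1 = boundary_matrix(nodes, edges)
B2 = boundary_matrix(edges, triangles)
L1 = hodge_laplacian_1(B1, B2)
for r in L1:
    print(r)
```

A degree-$P$ polynomial in $L_1$ only mixes an edge signal with edges reachable within $P$ steps through shared nodes or triangles, which is one way to read the spatial localization property mentioned in the abstract.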
