Nan Cai

Active Event Alignment for Monocular Distance Estimation

Oct 29, 2024

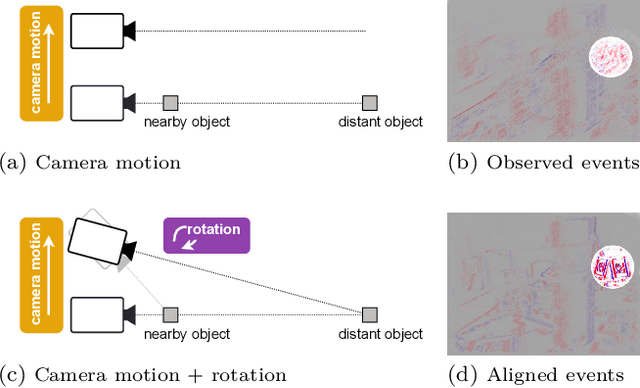

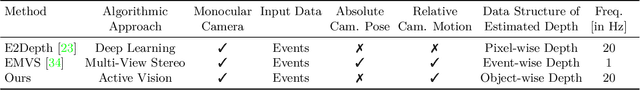

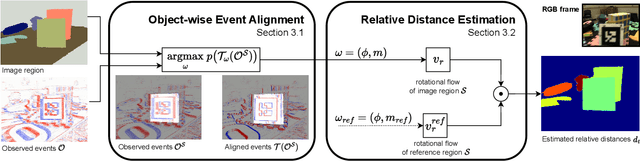

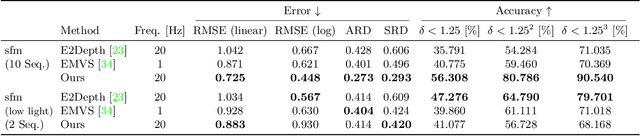

Abstract: Event cameras provide a natural and data-efficient representation of visual information, motivating novel computational strategies for extracting it. Inspired by the biological vision system, we propose a behavior-driven approach for object-wise distance estimation from event camera data. The method mimics how biological systems, like the human eye, stabilize their view based on object distance: distant objects require minimal compensatory rotation to stay in focus, while nearby objects demand greater adjustments to maintain alignment. This adaptive strategy leverages natural stabilization behaviors to estimate relative distances. Unlike traditional vision algorithms that estimate depth across the entire image, our approach targets local depth estimation within a specific region of interest. By aligning events within a small region, we estimate the angular velocity required to stabilize the image motion. We demonstrate that, under certain assumptions, the compensatory rotational flow is inversely proportional to the object's distance. The proposed approach achieves new state-of-the-art accuracy in distance estimation, a performance gain of 16% on EVIMO2. EVIMO2 event sequences comprise complex camera motion and substantial depth variance in static real-world scenes.
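The inverse relation between compensatory rotation and distance can be illustrated with a small sketch. It is not the paper's method: it assumes a simplified geometry the abstract does not spell out (a target on the optical axis, camera translation perpendicular to the line of sight, small angles), and the function names and numeric values are hypothetical.

```python
def compensatory_angular_velocity(lateral_speed, distance):
    """Angular velocity (rad/s) that keeps a target centered.

    Assumed geometry: the camera translates sideways at `lateral_speed`
    (m/s), perpendicular to the line of sight, and the target sits on the
    optical axis at `distance` (m). Under these assumptions omega = v / d.
    """
    return lateral_speed / distance


def relative_distance(omega_ref, omega_obj):
    """Distance of an object relative to a reference object.

    Because omega is proportional to 1 / d for a shared camera motion,
    d_obj / d_ref = omega_ref / omega_obj.
    """
    return omega_ref / omega_obj


speed = 0.5  # hypothetical lateral camera speed in m/s
omega_near = compensatory_angular_velocity(speed, 1.0)  # target 1 m away
omega_far = compensatory_angular_velocity(speed, 4.0)   # target 4 m away

# The far target needs a quarter of the rotation, so it is 4x as distant.
ratio = relative_distance(omega_near, omega_far)
print(omega_near, omega_far, ratio)
```

Only the ratio of angular velocities is needed here, which is why a scheme of this kind yields relative rather than absolute distance unless the camera speed is known.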

Revolutionizing Battery Disassembly: The Design and Implementation of a Battery Disassembly Autonomous Mobile Manipulator Robot (BEAM-1)

Jul 09, 2024

Abstract: The efficient disassembly of end-of-life electric vehicle batteries (EOL-EVBs) is crucial for green manufacturing and sustainable development. Current pre-programmed disassembly by an Autonomous Mobile Manipulator Robot (AMMR) struggles to meet disassembly requirements in dynamic environments, complex scenarios, and unstructured processes. In this paper, we propose a Battery Disassembly AMMR (BEAM-1) system based on NeuroSymbolic AI. It detects the environmental state by combining multiple sensors with neural predicates and then translates this information into a quasi-symbolic space. In real time, it identifies the optimal sequence of action primitives through LLM-heuristic tree search, ensuring high-precision execution of these primitives. Additionally, it employs positional speculative sampling using intuitive networks and disassembles various bolt types with a meticulously designed end-effector. Importantly, BEAM-1 is a continuously learning embodied intelligence system capable of human-like subjective reasoning and intuition. Extensive real-scene experiments show that it can autonomously perceive, decide, and execute to complete the continuous disassembly of multiple bolts of multiple categories in complex situations, with a success rate of 98.78%. This research attempts to use NeuroSymbolic AI to give robots real autonomous reasoning, planning, and learning capabilities. BEAM-1 realizes the revolution of battery disassembly. Its framework can be easily ported to any robotic system to realize different application scenarios, which provides a ground-breaking idea for the design and implementation of future embodied intelligent robotic systems.
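To make the idea of heuristic-guided search over action primitives concrete, here is a minimal sketch. It is a generic greedy best-first search, not BEAM-1's planner: the state encoding, the `align`/`unscrew` primitives, and the hand-written `heuristic` (standing in for an LLM's scoring) are all hypothetical assumptions introduced for illustration.

```python
import heapq
from itertools import count


def best_first_plan(start, is_goal, successors, heuristic, max_expansions=1000):
    """Greedy best-first search; returns a list of actions or None."""
    tie = count()  # tie-breaker so the heap never compares states
    frontier = [(heuristic(start), next(tie), start, [])]
    seen = {start}
    expansions = 0
    while frontier and expansions < max_expansions:
        _, _, state, plan = heapq.heappop(frontier)
        if is_goal(state):
            return plan
        expansions += 1
        for action, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(
                    frontier, (heuristic(nxt), next(tie), nxt, plan + [action])
                )
    return None


# Hypothetical toy domain: state = (bolts still fastened, bolt the tool is on).
def successors(state):
    remaining, aligned = state
    for bolt in sorted(remaining):
        if bolt != aligned:
            yield f"align({bolt})", (remaining, bolt)
    if aligned is not None:
        yield f"unscrew({aligned})", (remaining - {aligned}, None)


def is_goal(state):
    return not state[0]  # no bolts left


def heuristic(state):
    # Stand-in for an LLM score: fewer bolts and an aligned tool look better.
    remaining, aligned = state
    return 2 * len(remaining) - (1 if aligned is not None else 0)


start = (frozenset({"b1", "b2"}), None)
plan = best_first_plan(start, is_goal, successors, heuristic)
print(plan)
```

In a system of the kind the abstract describes, the heuristic would presumably come from a language model and the states from the quasi-symbolic scene description; both are replaced by fixed toy definitions here.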
