Naman Jain

On the Robustness of Human Pose Estimation

Aug 18, 2019

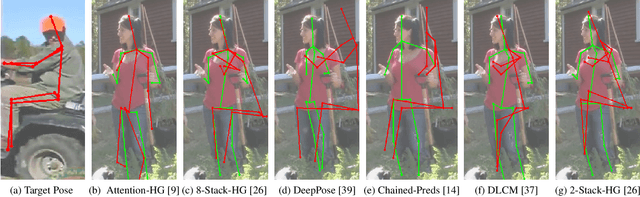

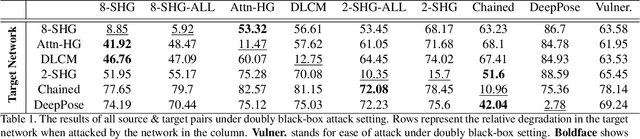

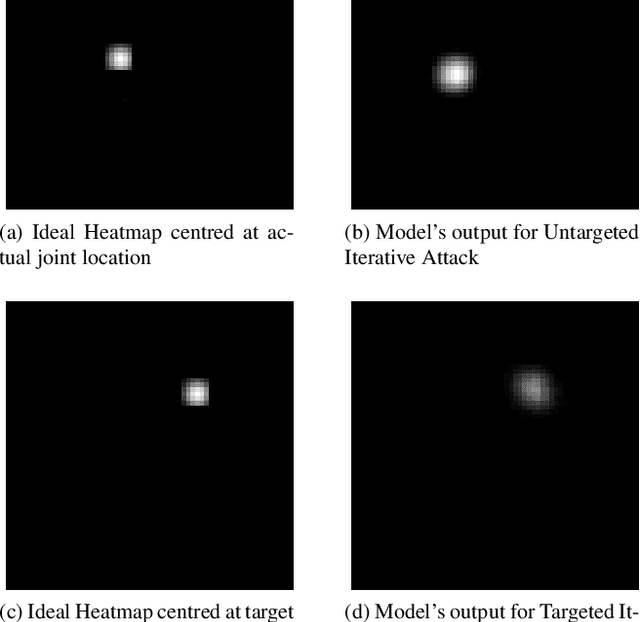

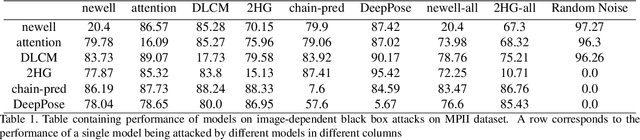

Abstract: This paper provides, to the best of our knowledge, the first comprehensive and exhaustive study of adversarial attacks on human pose estimation. Besides highlighting the important differences between well-studied classification and human pose-estimation systems w.r.t. adversarial attacks, we also provide deep insights into the design choices of pose-estimation systems to shape future work. We compare the robustness of several pose-estimation architectures trained on the standard datasets, MPII and COCO. In doing so, we also explore the problem of attacking non-classification networks, including regression-based networks, which has been virtually unexplored in the past. We find that, compared to classification and semantic segmentation, human pose-estimation architectures are relatively robust to adversarial attacks, with single-step attacks being surprisingly ineffective. Our study shows that heatmap-based pose-estimation models fare better than their direct regression-based counterparts and that systems which explicitly model the anthropomorphic semantics of the human body are significantly more robust. We find that targeted attacks are more difficult to obtain than untargeted ones and that some body joints are easier to fool than others. We present visualizations of universal perturbations to facilitate unprecedented insights into their workings on pose estimation. Additionally, we show that they generalize well across different networks on both datasets.
