Nada AlSallami

Deep Learning for Size and Microscope Feature Extraction and Classification in Oral Cancer: Enhanced Convolution Neural Network

Aug 06, 2022

Abstract: Background and Aim: Overfitting has been the reason deep learning has not been successfully applied to oral cancer image classification. This research aims to reduce overfitting so that a Convolutional Neural Network can accurately produce the required dimension-reduced feature map. Methodology: The proposed system consists of an Enhanced Convolutional Neural Network that uses an autoencoder technique to compress information and increase the efficiency of the feature extraction process. In this technique, unpooling and deconvolution are used to regenerate the input data, and the network is trained to minimize the difference between input and output data. It extracts characteristic features from the input data set and learns to regenerate the input from those features, which reduces overfitting. Results: Accuracy and processing time vary across the different sample groups of Confocal Laser Endomicroscopy (CLE) images. The results show that the proposed solution outperforms the current system: it improves classification accuracy by 5 to 5.5% on average and reduces average processing time by 20 to 30 milliseconds. Conclusion: The proposed system focuses on the accurate classification of oral cancer cells from different anatomical locations in CLE images. By using the autoencoder method to address overfitting, this study improves both accuracy and processing time.

* 21 pages
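
The reconstruction objective described in the abstract above (encode the input, decode it again, and minimize the difference between input and output) can be illustrated with a deliberately tiny example. This is only a sketch under stated assumptions: it uses a linear, fully connected autoencoder in NumPy in place of the paper's convolutional encoder with unpooling and deconvolution, and the function name `train_autoencoder`, the layer sizes, and the synthetic data are all hypothetical.

```python
import numpy as np

def train_autoencoder(x, hidden=2, lr=0.05, epochs=2000, seed=0):
    """Train a linear autoencoder by gradient descent on reconstruction MSE.

    Assumption: a dense linear encoder/decoder stands in for the paper's
    convolutional network; only the reconstruction objective is the same idea.
    """
    rng = np.random.default_rng(seed)
    n_features = x.shape[1]
    w_enc = rng.normal(scale=0.1, size=(n_features, hidden))  # encoder weights
    w_dec = rng.normal(scale=0.1, size=(hidden, n_features))  # decoder weights
    losses = []
    for _ in range(epochs):
        code = x @ w_enc          # compressed feature representation
        recon = code @ w_dec      # regenerated input
        err = recon - x           # difference between output and input
        losses.append(float(np.mean(err ** 2)))
        grad_recon = 2.0 * err / err.size          # d(MSE)/d(recon)
        grad_dec = code.T @ grad_recon
        grad_enc = x.T @ (grad_recon @ w_dec.T)
        w_enc -= lr * grad_enc
        w_dec -= lr * grad_dec
    return w_enc, w_dec, losses

# Synthetic stand-in data: 6 features that really depend on 2 latent factors.
rng = np.random.default_rng(1)
data = rng.normal(size=(40, 2)) @ rng.normal(size=(2, 6))
data = data / data.std()
w_enc, w_dec, losses = train_autoencoder(data)
print("first loss:", losses[0], "last loss:", losses[-1])
```

The two-dimensional `code` plays the role of the compressed feature map: because the decoder must rebuild the input from it, the encoder is pushed to keep only the characteristic structure of the data.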

A Novel Solution of Using Mixed Reality in Bowel and Oral and Maxillofacial Surgical Telepresence: 3D Mean Value Cloning algorithm

Mar 17, 2021

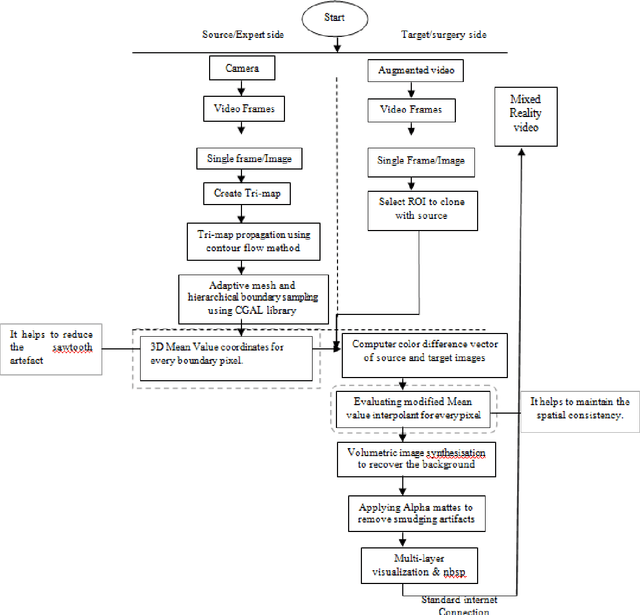

Abstract: Background and aim: Most Mixed Reality models used in surgical telepresence suffer from discrepancies in the boundary area and spatial-temporal inconsistency caused by illumination variation across video frames. This work proposes a new solution that produces the composite video by merging the augmented video of the surgery site with the virtual hand of the remote expert surgeon. The solution aims to decrease the processing time and enhance the accuracy of the merged video by decreasing the overlay and visualization error and removing occlusion and artefacts. Methodology: The proposed system enhances the mean value cloning algorithm to maintain the spatial-temporal consistency of the final composite video. The enhanced algorithm includes 3D mean value coordinates and an improvised mean value interpolant in the image cloning process, which reduces sawtooth, smudging and discolouration artefacts around the blending region. Results: Compared with the state-of-the-art solution, the accuracy in terms of overlay error improves from 1.01 mm to 0.80 mm, and the accuracy in terms of visualization error improves from 98.8% to 99.4%. The processing time is reduced from 0.211 seconds to 0.173 seconds. Conclusion: Our solution makes the object of interest consistent with the light intensity of the target image by adding the space distance, which helps maintain spatial consistency in the final merged video.

* 27 pages
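
The abstract above does not spell out the enhanced 3D formulation, so the following is only a sketch of the classic 2D building block that mean value cloning rests on: mean value coordinates of a point inside a polygon, used to spread the boundary difference between target and source smoothly into the interior. The function names `mean_value_coordinates` and `clone_pixel` and the sample values are hypothetical, and the point is assumed to lie strictly inside the polygon.

```python
import numpy as np

def mean_value_coordinates(point, polygon):
    """Classic 2D mean value coordinates of `point` w.r.t. polygon vertices.

    Assumes `point` is strictly inside the polygon and vertices are listed in
    order around the boundary. This is the standard 2D form, not the paper's
    3D variant.
    """
    d = polygon - point                      # vectors from the point to each vertex
    r = np.linalg.norm(d, axis=1)            # distances to each vertex
    d_next = np.roll(d, -1, axis=0)          # vector to vertex i + 1
    cross = d[:, 0] * d_next[:, 1] - d[:, 1] * d_next[:, 0]
    dot = np.sum(d * d_next, axis=1)
    alpha = np.arctan2(cross, dot)           # signed angle between vertex i and i + 1
    t = np.tan(alpha / 2.0)
    w = (np.roll(t, 1) + t) / r              # (tan(a[i-1]/2) + tan(a[i]/2)) / r[i]
    return w / w.sum()                       # normalize so the weights sum to 1

def clone_pixel(point, polygon, source_boundary, target_boundary, source_value):
    """Blend one source pixel into the target using the interpolated boundary difference."""
    lam = mean_value_coordinates(point, polygon)
    membrane = lam @ (target_boundary - source_boundary)
    return source_value + membrane

# Hypothetical example: a square patch whose boundary is 10 units brighter in the target.
square = np.array([[0.0, 0.0], [4.0, 0.0], [4.0, 4.0], [0.0, 4.0]])
src_edge = np.array([100.0, 110.0, 120.0, 130.0])
tgt_edge = src_edge + 10.0
blended = clone_pixel(np.array([1.0, 2.0]), square, src_edge, tgt_edge, 115.0)
print(blended)
```

Because the weights sum to one, a uniform brightness offset on the boundary shifts every interior pixel by the same amount, which is what lets the pasted region follow the target's illumination without a visible seam.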
