Ahmad Alrubaie

A novel solution of deep learning for enhanced support vector machine for predicting the onset of type 2 diabetes

Aug 05, 2022

Abstract: Type 2 Diabetes is one of the most serious and fatal diseases known to humans, with thousands of people developing it every year. Diagnosis and prevention of Type 2 Diabetes are relatively costly today; hence, machine learning and deep learning techniques are gaining momentum for predicting its onset. This research aims to increase the accuracy and Area Under the Curve (AUC) metric while improving the processing time for predicting the onset of Type 2 Diabetes. The proposed system is a deep learning technique that combines the Support Vector Machine (SVM) algorithm with a Radial Basis Function (RBF) kernel and a Long Short-Term Memory (LSTM) layer to predict the onset of Type 2 Diabetes. The proposed solution achieves an average accuracy of 86.31% and an average AUC of 0.8270 (82.70%), with a 3.8 millisecond improvement in processing time. The RBF kernel and the LSTM layer raise the prediction accuracy and AUC above the current industry standard, making the approach more feasible for practical use without compromising processing time.

* 18 pages
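The abstract names an SVM with a Radial Basis Function kernel but does not give the kernel parameters or describe how the LSTM layer is combined with the SVM. As a minimal sketch of the standard RBF kernel only, the code below computes K(x, z) = exp(-gamma * ||x - z||^2) and a kernel-SVM decision value. The names `rbf_kernel` and `svm_decision`, the default `gamma`, and any support vectors or coefficients are illustrative assumptions, not values from the paper.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float = 0.5) -> float:
    """Gaussian RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    if len(x) != len(z):
        raise ValueError("x and z must have the same length")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def svm_decision(
    x: Sequence[float],
    support_vectors: Sequence[Sequence[float]],
    dual_coefs: Sequence[float],
    intercept: float,
    gamma: float = 0.5,
) -> float:
    """Kernel-SVM decision value f(x) = sum_i (alpha_i * y_i) * K(x_i, x) + b.

    Hypothetical helper: dual_coefs[i] holds alpha_i * y_i for support vector i.
    The sign of the result gives the predicted class.
    """
    return sum(
        c * rbf_kernel(sv, x, gamma) for sv, c in zip(support_vectors, dual_coefs)
    ) + intercept
```

Libraries such as scikit-learn expose the same kernel through `SVC(kernel="rbf", gamma=...)`; the hand-written version only makes the formula explicit.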

A Novel Solution of Using Mixed Reality in Bowel and Oral and Maxillofacial Surgical Telepresence: 3D Mean Value Cloning algorithm

Mar 17, 2021

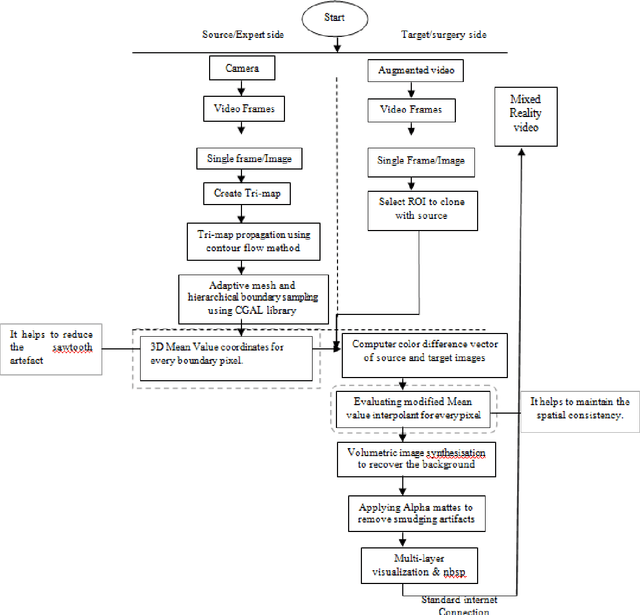

Abstract: Background and aim: Most Mixed Reality models used in surgical telepresence suffer from discrepancies in the boundary area and spatial-temporal inconsistency caused by illumination variation across video frames. This work proposes a new solution that produces a composite video by merging the augmented video of the surgery site with the virtual hand of the remote expert surgeon. The proposed solution aims to decrease processing time and enhance the accuracy of the merged video by reducing overlay and visualization error and removing occlusion and artefacts. Methodology: The proposed system enhances the mean value cloning algorithm to maintain the spatial-temporal consistency of the final composite video. The enhanced algorithm incorporates 3D mean value coordinates and an improved mean value interpolant into the image cloning process, which helps reduce sawtooth, smudging, and discolouration artefacts around the blending region. Results: Compared to the state-of-the-art solution, the proposed solution reduces overlay error from 1.01 mm to 0.80 mm, and its accuracy in terms of visualization error improves from 98.8% to 99.4%. Processing time is reduced from 0.211 seconds to 0.173 seconds. Conclusion: Our solution makes the object of interest consistent with the light intensity of the target image by adding the space distance, which helps maintain spatial consistency in the final merged video.

* 27 pages
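The abstract does not spell out its 3D mean value coordinates or its improved interpolant. For orientation only, here is a minimal 2D sketch of standard (Floater) mean value coordinates as used in mean-value-coordinate image cloning, where the colour difference along the boundary is interpolated smoothly into the interior. The 3D extension and the paper's own interpolant are not reproduced, the function names are hypothetical, and the query point is assumed to lie strictly inside the polygon.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def mean_value_coordinates(v: Point, polygon: Sequence[Point]) -> List[float]:
    """2D mean value coordinates of point v w.r.t. a closed polygon.

    Assumes v lies strictly inside the polygon (not on an edge).
    """
    n = len(polygon)
    vx, vy = v
    # Offset vectors from v to each vertex and their lengths.
    d = [(px - vx, py - vy) for px, py in polygon]
    r = [math.hypot(dx, dy) for dx, dy in d]
    for i, ri in enumerate(r):
        if ri == 0.0:
            # v coincides with vertex i: weight 1 there, 0 elsewhere.
            return [1.0 if j == i else 0.0 for j in range(n)]
    # tan(alpha_i / 2), where alpha_i is the signed angle at v between p_i and p_{i+1}.
    tan_half = []
    for i in range(n):
        ax, ay = d[i]
        bx, by = d[(i + 1) % n]
        angle = math.atan2(ax * by - ay * bx, ax * bx + ay * by)
        tan_half.append(math.tan(angle / 2.0))
    # w_i = (tan(alpha_{i-1}/2) + tan(alpha_i/2)) / |p_i - v|; index -1 wraps to n-1.
    w = [(tan_half[i - 1] + tan_half[i]) / r[i] for i in range(n)]
    total = sum(w)
    return [wi / total for wi in w]


def mvc_membrane(v: Point, polygon: Sequence[Point], boundary_diff: Sequence[float]) -> float:
    """Interpolated correction at v: sum_i lambda_i(v) * (target(p_i) - source(p_i)).

    In mean-value cloning, the blended value is source(v) + this correction.
    """
    lam = mean_value_coordinates(v, polygon)
    return sum(l * diff for l, diff in zip(lam, boundary_diff))
```

Because the coordinates sum to one and reproduce linear functions, a constant boundary difference is carried unchanged into the interior, which is what keeps the pasted region's illumination consistent with its surroundings.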
