Muru Selvakumar

Multi-Label Text Classification using Attention-based Graph Neural Network

Mar 22, 2020

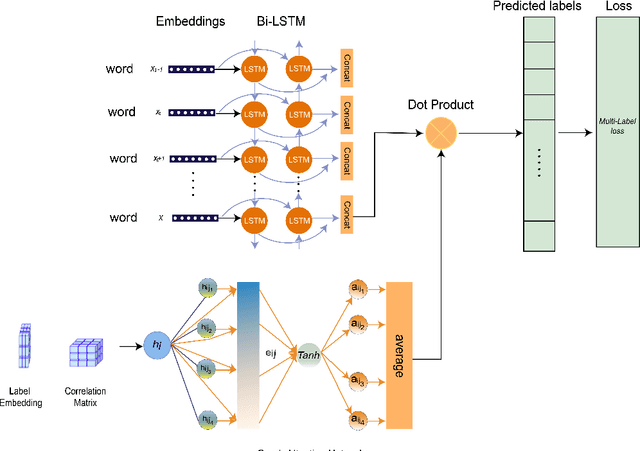

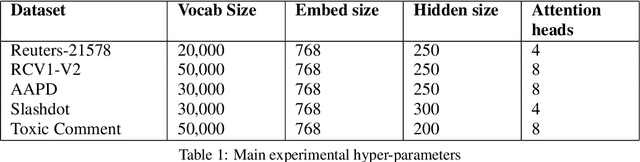

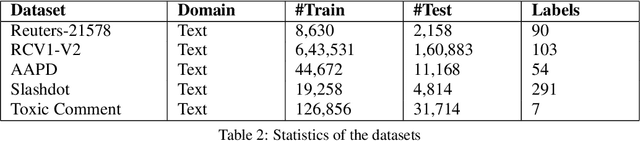

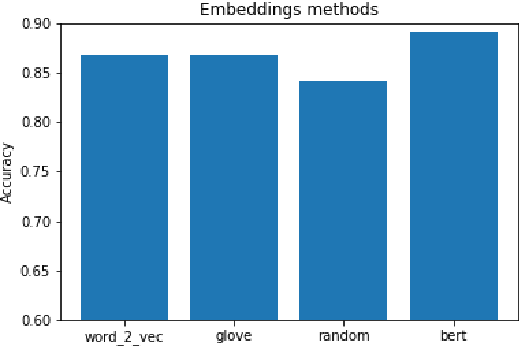

Abstract: In Multi-Label Text Classification (MLTC), one sample can belong to more than one class. It is observed that in most MLTC tasks, there are dependencies or correlations among labels. Existing methods tend to ignore the relationship among labels. In this paper, a graph attention network-based model is proposed to capture the attentive dependency structure among the labels. The graph attention network uses a feature matrix and a correlation matrix to capture and explore the crucial dependencies between the labels and generate classifiers for the task. The generated classifiers are applied to sentence feature vectors obtained from the text feature extraction network (BiLSTM) to enable end-to-end training. Attention allows the system to assign different weights to neighbor nodes per label, thus allowing it to learn the dependencies among labels implicitly. The results of the proposed model are validated on five real-world MLTC datasets. The proposed model achieves similar or better performance compared to the previous state-of-the-art models.
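
The pipeline described in the abstract (label features plus a correlation matrix go through graph attention, and the resulting per-label classifiers are applied to a sentence vector) can be illustrated with a minimal NumPy sketch. This is not the paper's implementation: the single attention head, the `tanh` activation, the LeakyReLU slope, and all function and parameter names are assumptions made for illustration, and the sentence vector stands in for the output of a BiLSTM encoder.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def graph_attention_layer(H, A, W, a):
    """Single-head graph attention over label nodes (illustrative).

    H: (L, d_in) label feature matrix
    A: (L, L) label correlation matrix; entries > 0 mark neighbors
       (assumed to include self-loops so every row has a neighbor)
    W: (d_in, d_out) projection weights
    a: (2 * d_out,) attention vector
    """
    Z = H @ W                                  # project label features
    d_out = Z.shape[1]
    src = Z @ a[:d_out]                        # contribution of node i
    dst = Z @ a[d_out:]                        # contribution of neighbor j
    scores = leaky_relu(src[:, None] + dst[None, :])
    scores = np.where(A > 0, scores, -1e9)     # mask non-neighbors
    alpha = softmax(scores, axis=1)            # per-label neighbor weights
    return np.tanh(alpha @ Z), alpha           # (L, d_out) label classifiers

def predict_label_probs(sentence_vec, H, A, W, a):
    # Apply the generated classifiers to a sentence feature vector;
    # an independent sigmoid per label gives multi-label probabilities.
    classifiers, _ = graph_attention_layer(H, A, W, a)
    logits = classifiers @ sentence_vec
    return 1.0 / (1.0 + np.exp(-logits))
```

Because each row of `alpha` is a distribution over that label's neighbors, inspecting it shows how strongly each label draws on the others, which is the sense in which the dependencies are learned implicitly.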

Compositional Attention Networks for Interpretability in Natural Language Question Answering

Oct 30, 2018

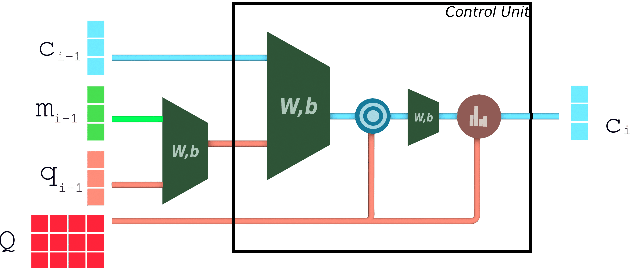

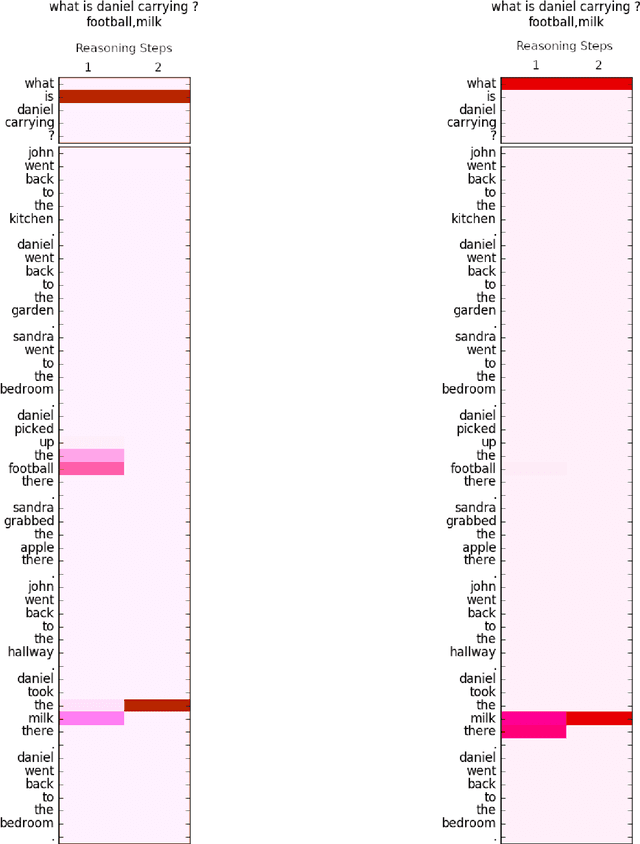

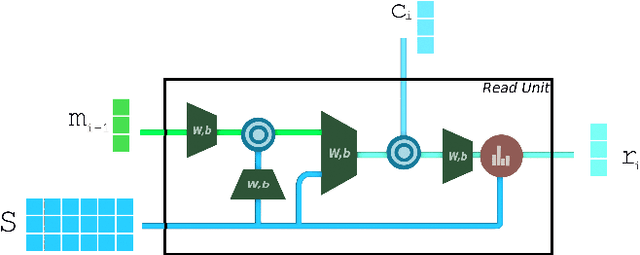

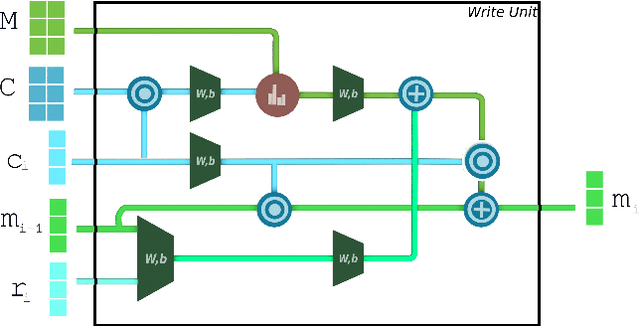

Abstract: MAC Net is a compositional attention network designed for Visual Question Answering. We propose a modified MAC net architecture for Natural Language Question Answering. Question Answering typically requires Language Understanding and multi-step Reasoning. MAC net's unique architecture, the separation between memory and control, facilitates data-driven iterative reasoning. This makes it an ideal candidate for solving tasks that involve logical reasoning. Our experiments with 20 bAbI tasks demonstrate the value of MAC net as a data-efficient and interpretable architecture for Natural Language Question Answering. The transparent nature of MAC net provides a highly granular view of the reasoning steps taken by the network in answering a query.
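
The idea of keeping control and memory separate while reasoning over several steps can be sketched in a heavily simplified form. The code below is not the modified MAC net from the paper: it has no learned parameters, the memory update rule is an arbitrary averaging choice, and every name in it is hypothetical. It only illustrates how a control state (what to look for) and a memory state (what has been found) can be updated in alternation, and how the per-step attention weights give a trace of the reasoning.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def reasoning_step(control, memory, question_words, facts):
    # Control: attend over question words, guided by the previous control.
    word_weights = softmax(question_words @ control)
    new_control = word_weights @ question_words
    # Read: attend over story facts, guided by the new control and memory.
    fact_weights = softmax(facts @ (new_control + memory))
    retrieved = fact_weights @ facts
    # Write: blend retrieved information into memory (assumed rule).
    new_memory = 0.5 * memory + 0.5 * retrieved
    return new_control, new_memory, word_weights, fact_weights

def run_reasoning(question_words, facts, steps=3):
    """question_words: (n_words, d); facts: (n_facts, d)."""
    d = question_words.shape[1]
    control = question_words.mean(axis=0)   # initial control state
    memory = np.zeros(d)                    # initial memory state
    trace = []                              # attention maps per step
    for _ in range(steps):
        control, memory, ww, fw = reasoning_step(
            control, memory, question_words, facts)
        trace.append((ww, fw))
    return memory, trace
```

The returned `trace` holds, for each step, one distribution over question words and one over facts; plotting these is one way to view which parts of the input each reasoning step attended to.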
