Suriyadeepan Ramamoorthy

Automatic Contact Tracing using Bluetooth Low Energy Signals and IMU Sensor Readings

Jun 13, 2022

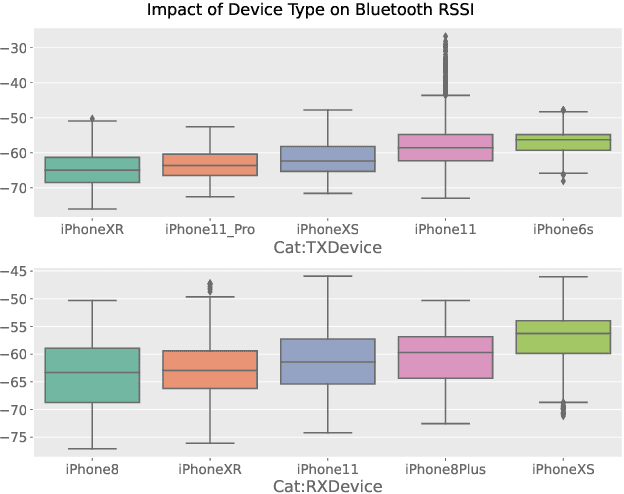

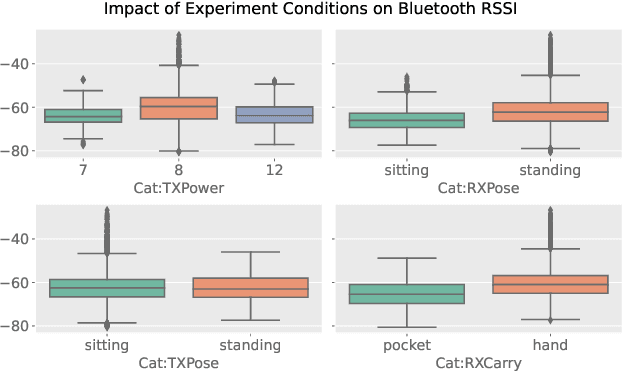

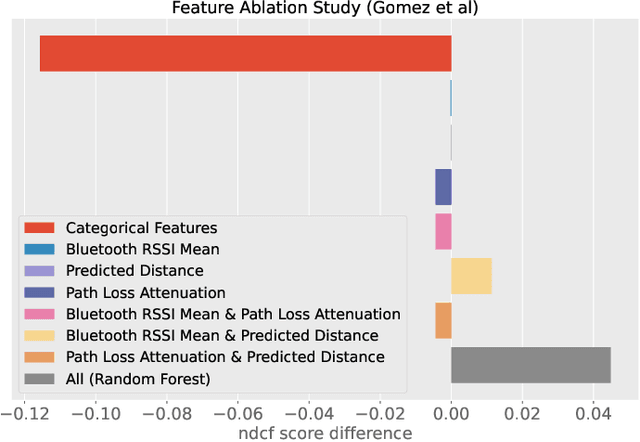

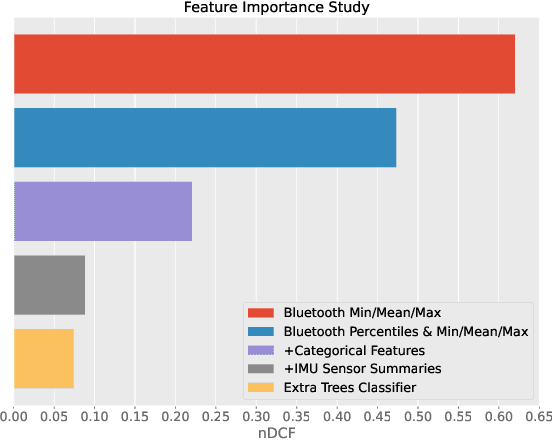

Abstract: In this report, we present our solution to the challenge provided by the SFI Centre for Machine Learning (ML-Labs), in which the distance between two phones must be estimated. It is a modified version of the NIST Too Close For Too Long (TC4TL) Challenge in which the time aspect is excluded. We propose a feature-based approach using Bluetooth RSSI and IMU sensor data that outperforms the previous state of the art by a significant margin, reducing the error to 0.071. We also perform an ablation study of our model that reveals interesting insights into the relationship between distance and Bluetooth RSSI readings.
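
The abstract does not list the features used. As a rough illustration only, a feature-based pipeline of this kind might reduce each window of raw readings to a fixed-length vector of summary statistics, which a regressor then maps to a distance estimate. The function names `rssi_features` and `imu_magnitude_features`, and the particular statistics chosen, are hypothetical assumptions rather than the authors' feature set; the regressor itself is left out.

```python
import math
import statistics
from typing import Dict, Sequence, Tuple


def rssi_features(rssi_dbm: Sequence[float]) -> Dict[str, float]:
    """Hypothetical: summarize a window of Bluetooth RSSI readings (dBm) into fixed-size features."""
    if not rssi_dbm:
        raise ValueError("need at least one RSSI reading")
    return {
        "mean": statistics.fmean(rssi_dbm),
        "median": statistics.median(rssi_dbm),
        "std": statistics.pstdev(rssi_dbm),  # population standard deviation
        "min": min(rssi_dbm),
        "max": max(rssi_dbm),
        "count": float(len(rssi_dbm)),
    }


def imu_magnitude_features(accel_xyz: Sequence[Tuple[float, float, float]]) -> Dict[str, float]:
    """Hypothetical: summarize accelerometer samples by the mean and spread of their magnitude."""
    if not accel_xyz:
        raise ValueError("need at least one accelerometer sample")
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in accel_xyz]
    return {
        "accel_mag_mean": statistics.fmean(magnitudes),
        "accel_mag_std": statistics.pstdev(magnitudes),
    }


# Usage: build one feature dictionary per time window, then feed it to any regressor.
window = {**rssi_features([-60.0, -62.0, -58.0, -61.0]),
          **imu_magnitude_features([(3.0, 4.0, 0.0), (0.0, 0.0, 5.0)])}
print(window)
```

Summary statistics are used here because raw RSSI is noisy from sample to sample; which statistics actually matter is exactly the kind of question an ablation study would answer.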

Compositional Attention Networks for Interpretability in Natural Language Question Answering

Oct 30, 2018

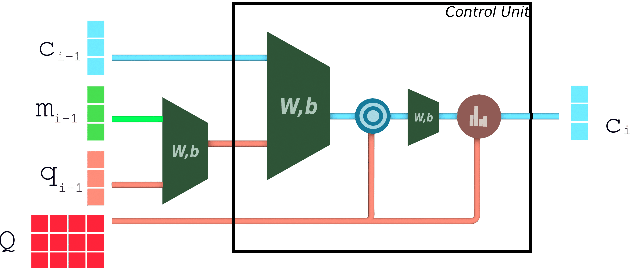

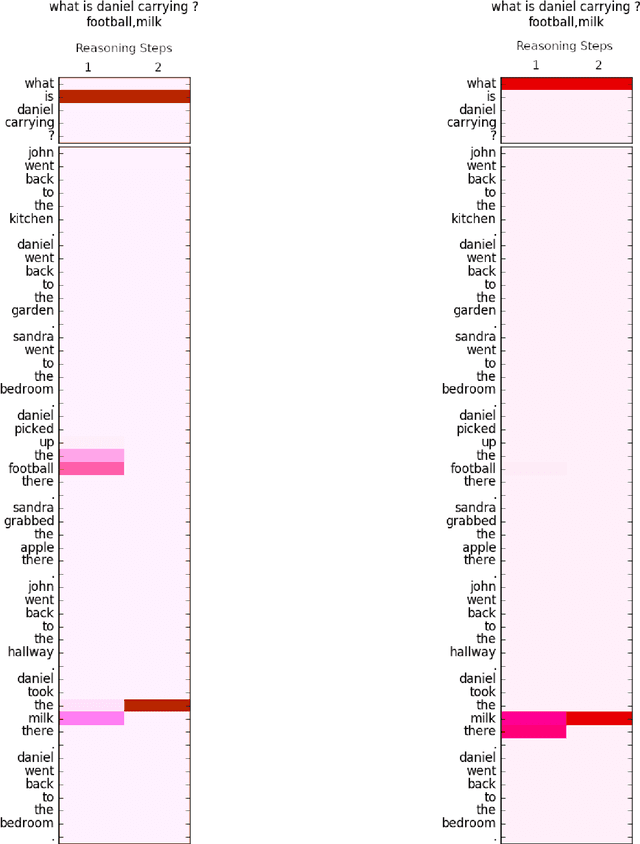

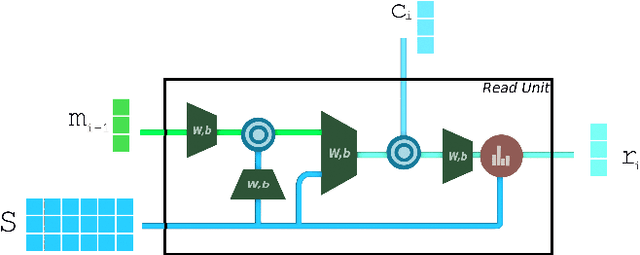

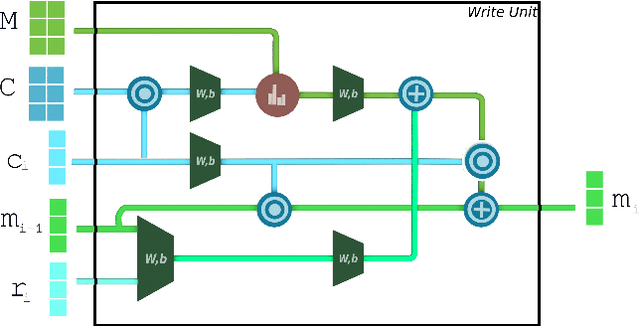

Abstract: MAC net is a compositional attention network designed for Visual Question Answering. We propose a modified MAC net architecture for Natural Language Question Answering. Question Answering typically requires language understanding and multi-step reasoning. MAC net's unique architecture, which separates memory from control, facilitates data-driven iterative reasoning. This makes it an ideal candidate for tasks that involve logical reasoning. Our experiments on the 20 bAbI tasks demonstrate the value of MAC net as a data-efficient and interpretable architecture for Natural Language Question Answering. The transparent nature of MAC net provides a highly granular view of the reasoning steps the network takes in answering a query.
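
As a heavily simplified sketch of the memory/control separation described above (not the authors' modified architecture), the loop below runs a few reasoning steps with weight-free dot-product attention: a control state attends over question-word vectors, a read step attends over knowledge vectors (for bAbI, these could be encoded story sentences) using the control modulated by the memory, and a write step updates the memory. Learned projections are omitted; the averaging write rule, the initialization, and all function names are assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(query: Vector, items: List[Vector]) -> Tuple[Vector, Vector]:
    """Dot-product attention: softmax weights over items and their weighted sum."""
    scores = [dot(query, item) for item in items]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    summary = [sum(w * item[i] for w, item in zip(weights, items)) for i in range(len(items[0]))]
    return weights, summary


def mac_step(control: Vector, memory: Vector, question_words: List[Vector], knowledge: List[Vector]):
    """One simplified control -> read -> write step."""
    # Control unit: attend over question words, guided by the previous control state.
    _, new_control = attend(control, question_words)
    # Read unit: score each knowledge item against the control, modulated by memory.
    read_query = [c * m for c, m in zip(new_control, memory)]
    read_weights, retrieved = attend(read_query, knowledge)
    # Write unit: blend old memory with retrieved information (a simple average here).
    new_memory = [(m + r) / 2 for m, r in zip(memory, retrieved)]
    return new_control, new_memory, read_weights


def run_mac(question_words: List[Vector], knowledge: List[Vector], steps: int = 3):
    """Run several reasoning steps and record which knowledge items each step read."""
    dim = len(knowledge[0])
    # Assumed initialization: control = mean question word, memory = all ones.
    control = [sum(w[i] for w in question_words) / len(question_words) for i in range(dim)]
    memory = [1.0] * dim
    trace = []
    for _ in range(steps):
        control, memory, read_weights = mac_step(control, memory, question_words, knowledge)
        trace.append(read_weights)
    return memory, trace
```

Recording `read_weights` at every step is what yields a step-by-step view of the reasoning: each entry of `trace` shows how strongly that step attended to each knowledge item.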

An Attentive Sequence Model for Adverse Drug Event Extraction from Biomedical Text

Jan 02, 2018

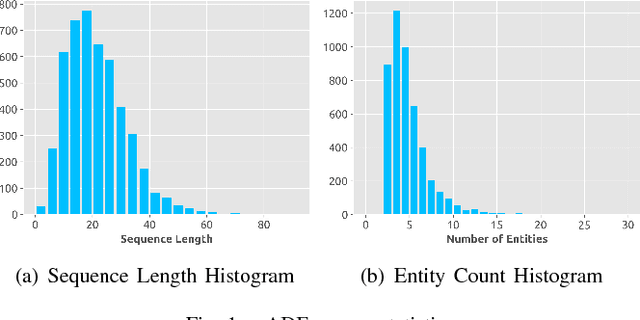

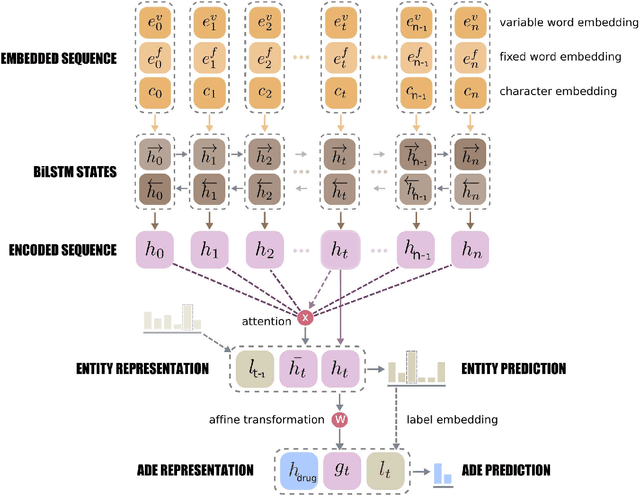

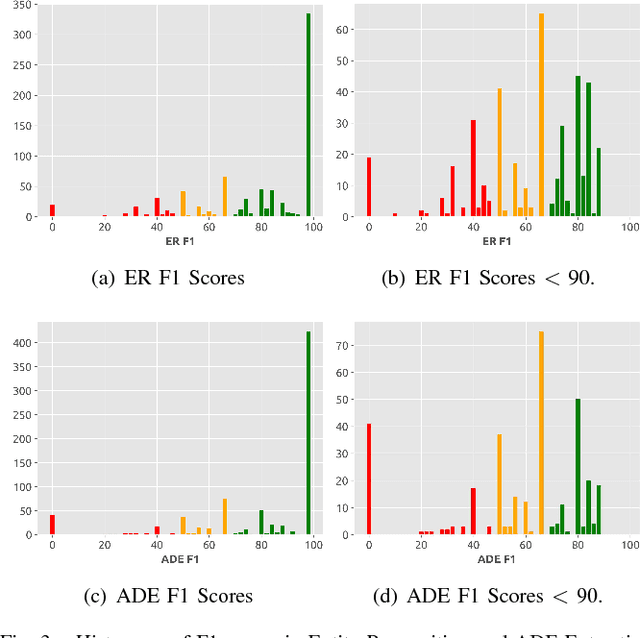

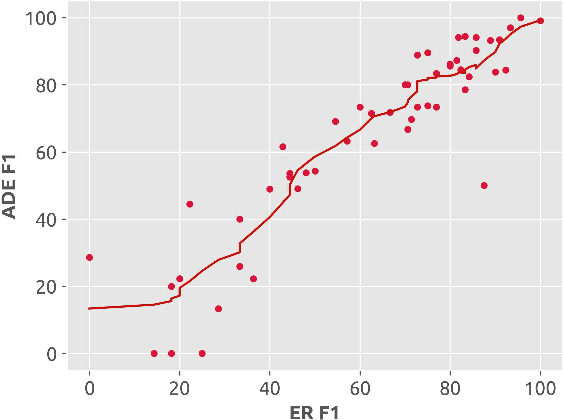

Abstract: Adverse reactions caused by drugs are a potentially dangerous problem that may lead to mortality and morbidity in patients. Adverse Drug Event (ADE) extraction is a significant problem in biomedical research. We model ADE extraction as a Question-Answering problem and take inspiration from the Machine Reading Comprehension (MRC) literature to design our model. Our objective in designing such a model is to exploit the local linguistic context in clinical text and enable intra-sequence interaction, in order to jointly learn to classify drug and disease entities and to extract adverse reactions caused by a given drug. Our model uses a self-attention mechanism to facilitate intra-sequence interaction in a text sequence. This enables us to visualize and understand how the network makes use of the local and wider context for classification.
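
To show what "intra-sequence interaction" and attention visualization mean concretely, here is a minimal sketch of generic scaled dot-product self-attention over token vectors. It is not the authors' model: learned query/key/value projections and the entity-classification layer are omitted, and the identity projections (Q = K = V = the token vectors) and the function name are assumptions made to keep the example self-contained.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Tuple[Matrix, Matrix]:
    """Scaled dot-product self-attention with identity projections (Q = K = V = tokens).

    Returns the contextualized token vectors and the attention matrix, whose row i
    shows how much token i attends to every token in the sequence.
    """
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    attention = [
        softmax([scale * sum(q * k for q, k in zip(q_vec, k_vec)) for k_vec in tokens])
        for q_vec in tokens
    ]
    # Each output vector is an attention-weighted sum of all token vectors.
    outputs = [[sum(w * v[c] for w, v in zip(row, tokens)) for c in range(d)] for row in attention]
    return outputs, attention
```

Each row of `attention` is what one would plot as a heatmap to see which surrounding tokens a given token drew on, which is the kind of visualization the abstract refers to.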
