Murray Ireland

Creating a Safety Assurance Case for an ML Satellite-Based Wildfire Detection and Alert System

Nov 08, 2022

Abstract: Wildfires are a common problem in many areas of the world with often catastrophic consequences. A number of systems have been created to provide early warnings of wildfires, including those that use satellite data to detect fires. The increased availability of small satellites, such as CubeSats, allows the wildfire detection response time to be reduced by deploying constellations of multiple satellites over regions of interest. By using machine learned components on-board the satellites, constraints which limit the amount of data that can be processed and sent back to ground stations can be overcome. There are hazards associated with wildfire alert systems, such as failing to detect the presence of a wildfire, or detecting a wildfire in the incorrect location. It is therefore necessary to be able to create a safety assurance case for the wildfire alert ML component that demonstrates it is sufficiently safe for use. This paper describes in detail how a safety assurance case for an ML wildfire alert system is created. This represents the first fully developed safety case for an ML component containing explicit argument and evidence as to the safety of the machine learning.

Autonomous Agent Behaviour Modelled in PRISM -- A Case Study

Feb 22, 2016

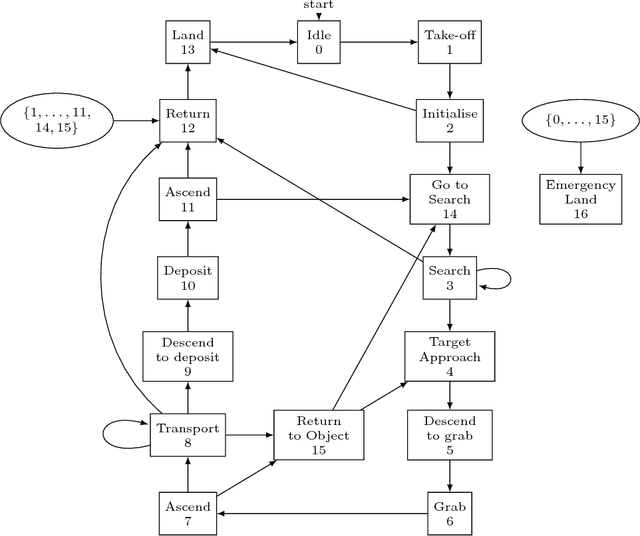

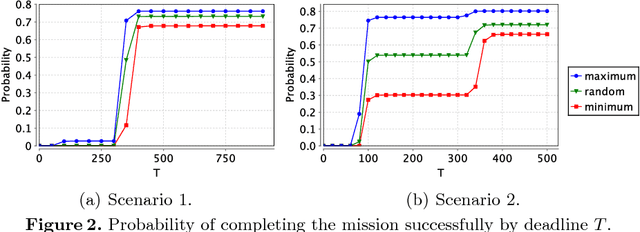

Abstract: Formal verification of agents representing robot behaviour is a growing area due to the demand that autonomous systems have to be proven safe. In this paper we present an abstract definition of autonomy which can be used to model autonomous scenarios and propose the use of small-scale simulation models representing abstract actions to infer quantitative data. To demonstrate the applicability of the approach we build and verify a model of an unmanned aerial vehicle (UAV) in an exemplary autonomous scenario, utilising this approach.
