Murchana Baruah

Truveta Mapper: A Zero-shot Ontology Alignment Framework

Jan 24, 2023

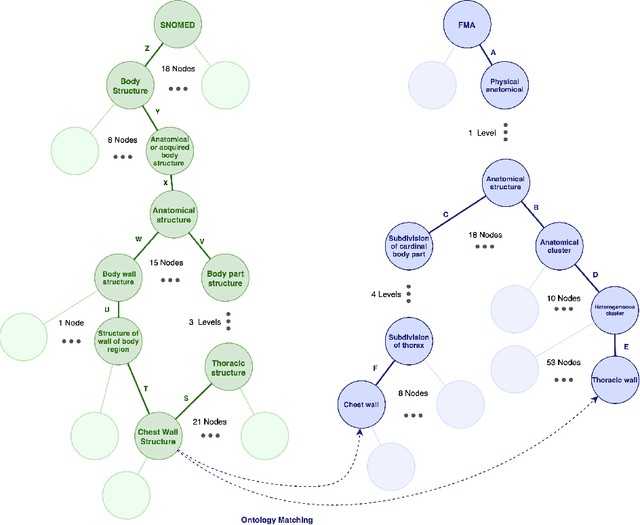

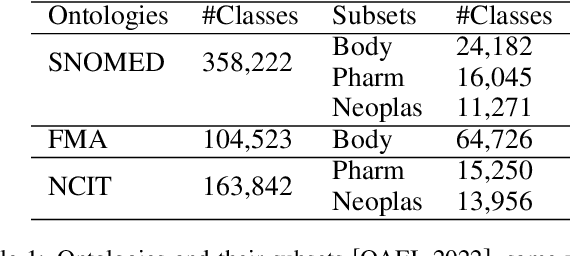

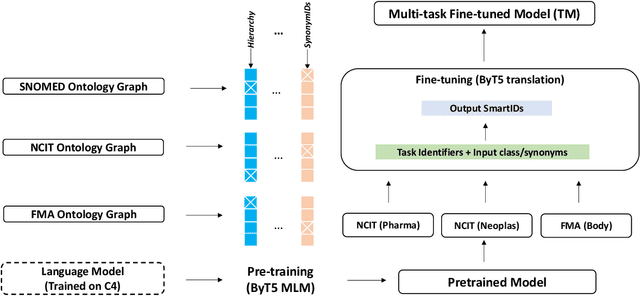

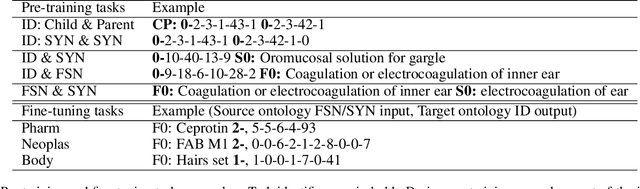

Abstract: This paper proposes a new perspective on unsupervised Ontology Matching (OM), also called Ontology Alignment (OA), by treating it as a translation task. Ontologies are represented as graphs, and the translation is performed from a node in the source ontology graph to a path in the target ontology graph. The proposed framework, Truveta Mapper (TM), leverages a multi-task sequence-to-sequence transformer model to perform alignment across multiple ontologies in a zero-shot, unified, and end-to-end manner. Multi-tasking enables the model to implicitly learn the relationships between different ontologies via transfer learning, without requiring any explicit, manually labeled cross-ontology data. This also enables the framework to outperform existing solutions in both runtime latency and alignment quality. The model is pre-trained and fine-tuned only on a publicly available text corpus and inner-ontology data. The proposed solution outperforms state-of-the-art approaches (Edit-Similarity, LogMap, AML, BERTMap, and the OM frameworks recently presented in the Ontology Alignment Evaluation Initiative (OAEI22)), offers log-linear complexity in contrast to the quadratic complexity of existing end-to-end methods, and overall makes the OM task more efficient and straightforward, without much post-processing involving mapping extension or mapping repair.
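To make the "node to path" framing concrete, here is a minimal sketch of how a target-side path could be derived from an ontology graph and paired with a source label. It is an illustration only, not the Truveta Mapper implementation: the toy ontology, the `PARENT` mapping, the `path_to_node` and `make_pair` helpers, and the `" > "` separator are all hypothetical, and the sketch assumes each node has a single parent, whereas real ontologies may allow several.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy target ontology stored as child -> parent links.
# None marks the root. Assumes a tree (one parent per node).
PARENT: Dict[str, Optional[str]] = {
    "Disease": None,
    "Cardiovascular disease": "Disease",
    "Heart disease": "Cardiovascular disease",
    "Myocardial infarction": "Heart disease",
}


def path_to_node(node: str, parent: Dict[str, Optional[str]]) -> List[str]:
    """Return the root-to-node path by following parent links upward."""
    path: List[str] = []
    seen = set()
    current: Optional[str] = node
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current!r}")
        seen.add(current)
        path.append(current)
        current = parent[current]
    path.reverse()  # collected leaf-to-root, so flip to root-to-leaf
    return path


def make_pair(
    source_label: str, target_node: str, parent: Dict[str, Optional[str]]
) -> Tuple[str, str]:
    """Build an illustrative (source text, target path text) pair."""
    return source_label, " > ".join(path_to_node(target_node, parent))


if __name__ == "__main__":
    print(make_pair("Heart attack", "Myocardial infarction", PARENT))
```

In this framing, a sequence-to-sequence model would read the source side of such a pair and generate the target path as text; how the actual framework encodes nodes and paths is not specified in the abstract.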

Synthesizing Skeletal Motion and Physiological Signals as a Function of a Virtual Human's Actions and Emotions

Feb 15, 2021

Abstract: Many healthcare applications require round-the-clock monitoring of human behavior and emotions, which is very expensive but can be automated using machine learning (ML) and sensor technologies. Unfortunately, the lack of infrastructure for collecting and sharing such data is a bottleneck for ML research applied to healthcare. Our goal is to circumvent this bottleneck by simulating a human body in a virtual environment. This will allow generation of potentially infinite amounts of shareable data from an individual as a function of their actions, interactions, and emotions in a care facility or at home, with no risk of confidentiality breach or privacy invasion. In this paper, we develop for the first time a system consisting of computational models for synchronously synthesizing skeletal motion, electrocardiogram, blood pressure, respiration, and skin conductance signals as a function of an open-ended set of actions and emotions. Our experimental evaluations, involving user studies, benchmark datasets, and comparison to findings in the literature, show that our models can generate skeletal motion and physiological signals with high fidelity. The proposed framework is modular and allows the flexibility to experiment with different models. In addition to facilitating ML research for round-the-clock monitoring at a reduced cost, the proposed framework will allow reuse of code and data, and may be used as a training tool for ML practitioners and healthcare professionals.
